- WebRTC audio and video capture

var constraints={

video: true,

audio: true,

}

navigator.mediaDevices.getUserMedia(constraints)

.then(gotMediaStream)

.then(gotDevices)

.catch(handleError)

function gotMediaStream(stream){

videoplay.srcObject=stream;

}

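Audio and video capture relies on the getUserMedia method to obtain media data. The constraints object configures which tracks are captured: setting video or audio to true captures that track, and false skips it. The resulting stream is then attached to the video element in gotMediaStream.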
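
The chain above ends in handleError, which only logs the error. As a rough sketch, a rejection from getUserMedia could be turned into a more readable message by looking at the error name. The helper name describeMediaError and the message texts are my own assumptions; only the error names come from the getUserMedia API.

```javascript
// Hypothetical helper (not part of the original page): maps the name of a
// getUserMedia rejection to a human-readable message.
function describeMediaError(err) {
  switch (err && err.name) {
    case 'NotAllowedError':
      return 'Permission to use the camera or microphone was denied.';
    case 'NotFoundError':
      return 'No camera or microphone matching the constraints was found.';
    case 'NotReadableError':
      return 'The device is already in use or could not be started.';
    case 'OverconstrainedError':
      return 'No device satisfies the requested constraints.';
    default:
      // Anything else: fall back to the raw message.
      return 'getUserMedia failed: ' + (err && err.message ? err.message : String(err));
  }
}

// Possible use in the browser, in place of the plain log:
// navigator.mediaDevices.getUserMedia(constraints)
//   .catch(function (err) { console.error(describeMediaError(err)); });
```

This keeps the promise chain unchanged and only replaces what the catch handler prints.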

- WebRTC API adaptation

<script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>

Including the official adapter-latest.js script is all that is needed.

- Getting access to audio and video devices

function gotMediaStream(stream){

videoplay.srcObject=stream;

return navigator.mediaDevices.enumerateDevices();

}

function gotDevices(deviceInfos){

deviceInfos.forEach(function(deviceinfo){

var option= document.createElement('option');

option.text=deviceinfo.label;

option.value=deviceinfo.deviceId;

if(deviceinfo.kind==='audioinput'){

audioSource.appendChild(option);

}else if(deviceinfo.kind==='audiooutput'){

audioOutput.appendChild(option);

}else if(deviceinfo.kind==='videoinput'){

videoSource.appendChild(option);

}

})

}

Because gotMediaStream returns navigator.mediaDevices.enumerateDevices(), the next handler in the chain, gotDevices, receives the list of audio and video devices.
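
Chaining enumerateDevices after getUserMedia matters because device labels are empty strings until the user has granted permission. The sketch below separates the grouping logic from the DOM work so it can be checked on its own. The helper name groupDevicesByKind and the fallback label format are assumptions for illustration.

```javascript
// Hypothetical helper: groups MediaDeviceInfo-like objects by kind and
// supplies a placeholder label when the real one is not available yet.
function groupDevicesByKind(deviceInfos) {
  var groups = { audioinput: [], audiooutput: [], videoinput: [] };
  deviceInfos.forEach(function (info, index) {
    if (!Object.prototype.hasOwnProperty.call(groups, info.kind)) {
      return; // ignore kinds this page does not list
    }
    groups[info.kind].push({
      deviceId: info.deviceId,
      // label is '' until permission has been granted
      label: info.label || info.kind + ' ' + (index + 1)
    });
  });
  return groups;
}
```

Each group could then be turned into option elements for the matching select, as gotDevices does above.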

- Video constraints

var constraints={

video: {

width:640,

height:480,

frameRate:30,

// environment: rear camera, user: front camera

facingMode:"user",

deviceId: {exact:deviceId ? deviceId:undefined}

},

}

All video constraints are set in the constraints object; see the official API documentation for more details.
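
The snippet above always sends a deviceId entry, even when no camera has been selected yet. One alternative, shown here only as a sketch, is to add the exact deviceId when a camera is chosen and fall back to facingMode otherwise. The helper name buildVideoConstraints is an assumption, and this is a design variation rather than what the original page does.

```javascript
// Hypothetical helper: builds the video part of the constraints object.
function buildVideoConstraints(deviceId) {
  var video = { width: 640, height: 480, frameRate: 30 };
  if (deviceId) {
    // A specific camera was chosen, so require exactly that device.
    video.deviceId = { exact: deviceId };
  } else {
    // Nothing selected yet: prefer the front camera.
    video.facingMode = 'user';
  }
  return video;
}

// Possible use in the browser:
// var constraints = { video: buildVideoConstraints(videoSource.value), audio: true };
```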

- Audio constraints

var constraints={

video: {

width:640,

height:480,

frameRate:30,

// environment: rear camera, user: front camera

facingMode:"user",

deviceId: {exact:deviceId ? deviceId:undefined}

},

audio: {

// Noise suppression

noiseSuppression:true,

// Echo cancellation

echoCancellation:true

},

}

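Audio constraints are configured in the constraints object in the same way as video constraints; see the official API documentation for more details.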
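
Not every browser supports every constraint. As a small sketch, the requested audio constraints could be filtered against what the browser reports through navigator.mediaDevices.getSupportedConstraints(). The helper name keepSupported is an assumption.

```javascript
// Hypothetical helper: keeps only the constraints the browser reports as supported.
function keepSupported(requested, supported) {
  var result = {};
  Object.keys(requested).forEach(function (name) {
    if (supported[name] === true) {
      result[name] = requested[name];
    }
  });
  return result;
}

// Possible use in the browser:
// var audio = keepSupported(
//   { noiseSuppression: true, echoCancellation: true },
//   navigator.mediaDevices.getSupportedConstraints()
// );
```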

- Video effects

<div>

<label>Filter:</label>

<select id="filter">

<option value="none">None</option>

<option value="blur">blur</option>

<option value="grayscale">Grayscale</option>

<option value="invert">Invert</option>

<option value="sepia">sepia</option>

</select>

</div>

// Filter effect

filtersSelect.onchange = function(){

videoplay.className=filtersSelect.value;

}

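To apply an effect, set the className of the video element to the selected filter name; the CSS class with that name applies the filter.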

- Capturing an image from the video

<!-- Capture an image from the video -->

<div>

<button id="snapshot">Take snapshot</button>

</div>

<div>

<canvas id="picture"></canvas>

</div>

// Capture an image from the video

var snapshot =document.querySelector("button#snapshot");

var picture =document.querySelector("canvas#picture");

// Match the 640x480 capture size so the snapshot is not stretched

picture.width=640;

picture.height=480;

// Capture an image from the video

snapshot.onclick=function(){

picture.className=filtersSelect.value

picture.getContext('2d').drawImage(videoplay,

0,0,

picture.width,

picture.height);

}

Capturing an image from the video is done mainly by drawing the current frame onto a canvas.
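
drawImage stretches the frame to whatever width and height it is given, so a canvas whose proportions differ from the video produces a distorted snapshot. A minimal sketch of one way around this is to compute a draw size that keeps the aspect ratio. The helper name fitInside is an assumption.

```javascript
// Hypothetical helper: largest size that fits inside maxWidth x maxHeight
// while keeping the source aspect ratio.
function fitInside(srcWidth, srcHeight, maxWidth, maxHeight) {
  var scale = Math.min(maxWidth / srcWidth, maxHeight / srcHeight);
  return {
    width: Math.round(srcWidth * scale),
    height: Math.round(srcHeight * scale)
  };
}

// Possible use in the browser:
// var size = fitInside(videoplay.videoWidth, videoplay.videoHeight,
//                      picture.width, picture.height);
// picture.getContext('2d').drawImage(videoplay, 0, 0, size.width, size.height);
```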

- MediaStream API and reading the video constraints

// Display the video constraints

var divConstraints = document.querySelector('div#constraints')

function gotMediaStream(stream){

var videoTrack = stream.getVideoTracks()[0];

var videoConstraints = videoTrack.getSettings();

divConstraints.textContent= JSON.stringify(videoConstraints,null,2);

videoplay.srcObject=stream;

return navigator.mediaDevices.enumerateDevices();

}

Result

{ "aspectRatio": 1.3333333333333333, "deviceId": "97953df027728ab0acac98c670d59f654a1e7f36f9faf70f2e0fd7a479394fe3",

"frameRate": 29.969999313354492, "groupId": "1b83734781c08e3c51519598002aa1d5acb1bcd73772f5d2db4b976586af3666",

"height": 480, "width": 640, "videoKind": "color" }

To read the video constraints, get the video track in gotMediaStream; all of this information comes from the track.
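
The object returned by getSettings() is fairly verbose when dumped as JSON. As a small sketch, the most useful fields could be condensed into one line. The helper name summarizeSettings and the output format are assumptions.

```javascript
// Hypothetical helper: one-line summary of a video track's settings object.
function summarizeSettings(settings) {
  var size = settings.width + 'x' + settings.height;
  var fps = typeof settings.frameRate === 'number'
    ? settings.frameRate.toFixed(2) + ' fps'
    : 'unknown fps';
  return size + ' @ ' + fps;
}

// Possible use in the browser:
// divConstraints.textContent = summarizeSettings(videoTrack.getSettings());
```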

- Recording audio and video

// Video recording

btnRecord.onclick=()=>{

if(btnRecord.textContent==='Start Record'){

startRecord();

btnRecord.textContent='Stop Record'

btnPlay.disabled=true;

btnDownload.disabled=true;

}else{

stopRecord();

btnRecord.textContent='Start Record'

btnPlay.disabled=false;

btnDownload.disabled=false;

}

}

function gotMediaStream(stream){

...

window.stream=stream;

...

return navigator.mediaDevices.enumerateDevices();

}

// Start recording

function startRecord(){

buffer=[];

var options={

mimeType: 'video/webm;codecs=vp8'

}

if(!window.MediaRecorder.isTypeSupported(options.mimeType)){

console.error(`${options.mimeType} is not supported`);

return;

}

try {

mediaRecorder= new window.MediaRecorder(window.stream,options);

} catch (e) {

console.error('failed to create MediaRecorder:',e);

return;

}

mediaRecorder.ondataavailable= handleDataAvailable;

mediaRecorder.start(10);

}

// Stop recording

function stopRecord(){

mediaRecorder.stop();

}
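
startRecord gives up when the single hard-coded mimeType is not supported. A rough alternative is to try a list of candidates and use the first one the browser accepts. The helper name pickMimeType and the candidate list are assumptions; the check itself would still be MediaRecorder.isTypeSupported.

```javascript
// Hypothetical helper: returns the first candidate accepted by the given
// support check, or null when none is accepted.
function pickMimeType(candidates, isTypeSupported) {
  for (var i = 0; i < candidates.length; i++) {
    if (isTypeSupported(candidates[i])) {
      return candidates[i];
    }
  }
  return null;
}

// Possible use in the browser:
// var mimeType = pickMimeType(
//   ['video/webm;codecs=vp9', 'video/webm;codecs=vp8', 'video/webm'],
//   function (type) { return MediaRecorder.isTypeSupported(type); }
// );
```

Passing the support check in as a function keeps the helper independent of the browser, which also makes it easy to try out in isolation.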

- Playing back the recording

btnPlay.onclick=()=>{

var blob =new Blob(buffer,{type: 'video/webm'});

recvideo.src=window.URL.createObjectURL(blob);

recvideo.srcObject=null;

recvideo.controls=true;

recvideo.play();

}

- Downloading the recording

btnDownload.onclick=()=>{

var blob =new Blob(buffer,{type:'video/webm'});

var url = window.URL.createObjectURL(blob);

var a=document.createElement('a');

a.href=url;

a.style.display='none';

a.download='aaa.webm';

a.click();

}
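
The download above always uses the fixed name aaa.webm, so repeated downloads get the same file name. As a small sketch, the name could be derived from the recording time instead. The helper name recordingFileName and the naming pattern are assumptions.

```javascript
// Hypothetical helper: builds a file name such as
// recording-2024-01-02T03-04-05.webm from a Date (UTC).
function recordingFileName(date, extension) {
  var stamp = date.toISOString().slice(0, 19).replace(/:/g, '-');
  return 'recording-' + stamp + '.' + (extension || 'webm');
}

// Possible use in the browser:
// a.download = recordingFileName(new Date());
```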

- Capturing screen data

// getDisplayMedia captures the desktop, getUserMedia captures camera data

function start(){

// Capture the desktop

if (!navigator.mediaDevices||

!navigator.mediaDevices.getDisplayMedia) {

console.log("getDisplayMedia is not supported!")

return;

} else {

// Capture the desktop

var constraints1={

video: true,

audio: true,

}

// getDisplayMedia captures the desktop, getUserMedia captures camera data

navigator.mediaDevices.getDisplayMedia(constraints1)

.then(gotMediaStream)

.then(gotDevices)

.catch(handleError)

}

}

Capturing the screen is essentially the same as capturing audio and video; just replace getUserMedia with getDisplayMedia.
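
Since the two calls differ only in the method name, the choice can be made at run time instead of by commenting code in and out. This is only a sketch: the helper name pickCaptureMethod and the 'screen' / 'camera' source values are assumptions.

```javascript
// Hypothetical helper: returns the name of the capture method to call for
// the requested source, or null when the browser does not provide it.
function pickCaptureMethod(mediaDevices, source) {
  if (!mediaDevices) {
    return null;
  }
  var name = source === 'screen' ? 'getDisplayMedia' : 'getUserMedia';
  return typeof mediaDevices[name] === 'function' ? name : null;
}

// Possible use in the browser:
// var method = pickCaptureMethod(navigator.mediaDevices, 'screen');
// if (method) {
//   navigator.mediaDevices[method]({ video: true, audio: true })
//     .then(gotMediaStream).then(gotDevices).catch(handleError);
// }
```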

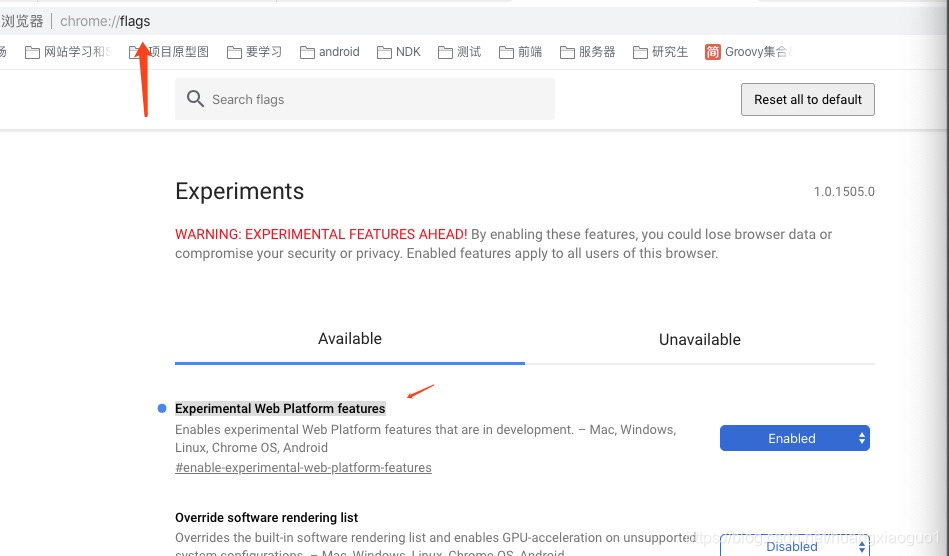

Note: use Google Chrome with Experimental Web Platform features enabled.

Full code

- html

<html>

<head>

<title>

WebRtc capture video and audio

</title>

<style>

.none{

-webkit-filter:none;

}

.blur{

-webkit-filter:blur(3px);

}

.grayscale{

-webkit-filter:grayscale(1);

}

.invert{

-webkit-filter:invert(1);

}

.sepia{

-webkit-filter:sepia(1);

}

</style>

</head>

<body>

<div>

<label>audio input device:</label>

<select id="audioSource"></select>

</div>

<div>

<label>audio output device:</label>

<select id="audioOutput"></select>

</div>

<div>

<label>video input device:</label>

<select id="videoSource"></select>

</div>

<div>

<label>Filter:</label>

<select id="filter">

<option value="none">None</option>

<option value="blur">blur</option>

<option value="grayscale">Grayscale</option>

<option value="invert">Invert</option>

<option value="sepia">sepia</option>

</select>

</div>

<div>

<table>

<tr>

<td>

<video autoplay playsinline id="player"></video>

</td>

<td>

<video playsinline id="recplayer"></video>

</td>

<td>

<div id="constraints" class="output"></div>

</td>

</tr>

<tr>

<td>

<button id="record">Start Record</button>

</td>

<td>

<button id="recplay" disabled>Play</button>

</td>

<td>

<button id="download" disabled>Download</button>

</td>

</tr>

</table>

</div>

<!-- Capture an image from the video -->

<div>

<button id="snapshot">Take snapshot</button>

</div>

<div>

<canvas id="picture"></canvas>

</div>

<script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>

<script src="./js/client.js"></script>

</body>

</html>

- js

'use strict'

var videoplay=document.querySelector('video#player')

var audioSource =document.querySelector("select#audioSource");

var audioOutput =document.querySelector("select#audioOutput");

var videoSource =document.querySelector("select#videoSource");

// Filter effect

var filtersSelect =document.querySelector("select#filter");

// Capture an image from the video

var snapshot =document.querySelector("button#snapshot");

var picture =document.querySelector("canvas#picture");

// Match the 640x480 capture size so the snapshot is not stretched

picture.width=640;

picture.height=480;

// Display the video constraints

var divConstraints = document.querySelector('div#constraints')

// Record audio and video

var recvideo = document.querySelector('video#recplayer')

var btnRecord = document.querySelector('button#record')

var btnPlay = document.querySelector('button#recplay')

var btnDownload = document.querySelector('button#download')

var buffer;

var mediaRecorder;

function gotMediaStream(stream){

var videoTrack = stream.getVideoTracks()[0];

var videoConstraints = videoTrack.getSettings();

divConstraints.textContent= JSON.stringify(videoConstraints,null,2);

window.stream=stream;

videoplay.srcObject=stream;

return navigator.mediaDevices.enumerateDevices();

}

function handleError(err){

console.log("getUserMedia error:",err);

}

function gotDevices(deviceInfos){

deviceInfos.forEach(function(deviceinfo){

var option= document.createElement('option');

option.text=deviceinfo.label;

option.value=deviceinfo.deviceId;

if(deviceinfo.kind==='audioinput'){

audioSource.appendChild(option);

}else if(deviceinfo.kind==='audiooutput'){

audioOutput.appendChild(option);

}else if(deviceinfo.kind==='videoinput'){

videoSource.appendChild(option);

}

})

}

// getDisplayMedia captures the desktop, getUserMedia captures camera data

function start(){

// Capture camera data

if (!navigator.mediaDevices||

!navigator.mediaDevices.getUserMedia) {

// Capture the desktop

// if (!navigator.mediaDevices||

// !navigator.mediaDevices.getDisplayMedia) {

console.log("getUserMedia is not supported!")

return;

} else {

var deviceId=videoSource.value;

// Capture camera data

var constraints={

video: {

width:640,

height:480,

frameRate:30,

// environment: rear camera, user: front camera

facingMode:"user",

deviceId: {exact:deviceId ? deviceId:undefined}

},

audio: {

// Noise suppression

noiseSuppression:true,

// Echo cancellation

echoCancellation:true

},

}

// Capture the desktop

var constraints1={

video: true,

audio: true,

}

// getDisplayMedia captures the desktop, getUserMedia captures camera data

navigator.mediaDevices.getUserMedia(constraints)

// navigator.mediaDevices.getDisplayMedia(constraints1)

.then(gotMediaStream)

.then(gotDevices)

.catch(handleError)

}

}

start();

videoSource.onchange=start;

// Filter effect

filtersSelect.onchange = function(){

videoplay.className=filtersSelect.value;

}

// Capture an image from the video

snapshot.onclick=function(){

picture.className=filtersSelect.value

picture.getContext('2d').drawImage(videoplay,

0,0,

picture.width,

picture.height);

}

// Video recording

btnRecord.onclick=()=>{

if(btnRecord.textContent==='Start Record'){

startRecord();

btnRecord.textContent='Stop Record'

btnPlay.disabled=true;

btnDownload.disabled=true;

}else{

stopRecord();

btnRecord.textContent='Start Record'

btnPlay.disabled=false;

btnDownload.disabled=false;

}

}

function handleDataAvailable(e){

if(e&&e.data&&e.data.size>0){

buffer.push(e.data)

}

}

function startRecord(){

buffer=[];

var options={

mimeType: 'video/webm;codecs=vp8'

}

if(!window.MediaRecorder.isTypeSupported(options.mimeType)){

console.error(`${options.mimeType} is not supported`);

return;

}

try {

mediaRecorder= new window.MediaRecorder(window.stream,options);

} catch (e) {

console.error('failed to create MediaRecorder:',e);

return;

}

mediaRecorder.ondataavailable= handleDataAvailable;

mediaRecorder.start(10);

}

function stopRecord(){

mediaRecorder.stop();

}

btnPlay.onclick=()=>{

var blob =new Blob(buffer,{type: 'video/webm'});

recvideo.src=window.URL.createObjectURL(blob);

recvideo.srcObject=null;

recvideo.controls=true;

recvideo.play();

}

btnDownload.onclick=()=>{

var blob =new Blob(buffer,{type:'video/webm'});

var url = window.URL.createObjectURL(blob);

var a=document.createElement('a');

a.href=url;

a.style.display='none';

a.download='aaa.webm';

a.click();

}

Here is my own test page: https://www.huangxiaoguo.club/mediastream/index.html