1, Different load scenarios

We know that the job of the load balancing layer is to take the pressure coming from outside and distribute it, by some rule or mechanism, across the internal processing nodes. Different business scenarios call for different load balancing approaches, and the architect also has to weigh the cost of the architecture, its scalability, and how easy it is to operate and maintain. Below we introduce several typical business scenarios. As you read, think about how you would build the load balancing layer for each of them.

Note that throughout this series of articles we will use these typical business scenarios, each building on the previous one, to explain how the system architecture is designed. In the next several articles on the load layer, we will explain the architecture options that support each of these scenarios.

1.1, Load scenario one

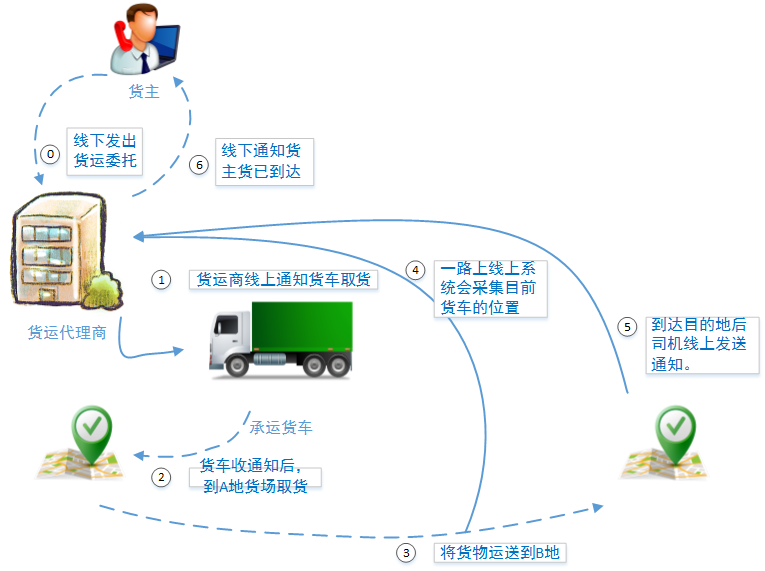

This is a freight order and logistics management system for a national-level logistics park. Freight forwarders in the park, partner drivers (freight vehicles), park administrators, and customer service staff all use the system. Daily RUV is about 10,000 and daily PV is about 100,000. The client's general manager commissioned the system in the spirit of "let's try it and see whether a mobile Internet product can improve logistics efficiency." As you can see, the system faces virtually no access pressure, and the client has only one requirement for your design: the system's functionality and performance must be extensible in the future.

1.2, Load scenario two

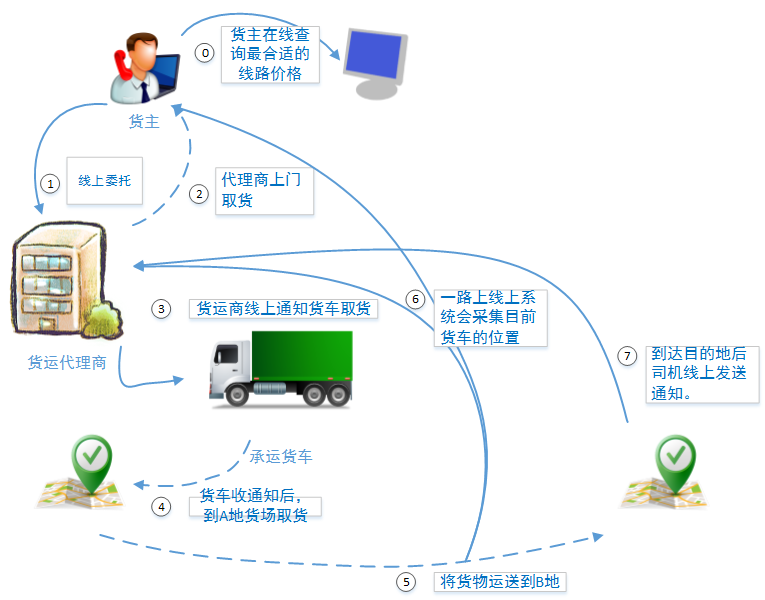

Good results! In the six months after the first version of the system went live, cases of cargo lost in the yard dropped sharply, and because trucks were monitored in transit, the on-time arrival rate also improved significantly. Truck drivers also reported that, because truck information is shared across the whole yard, the time they spend waiting for cargo became noticeably shorter. During this period a growing number of freight forwarders and truck drivers in the park began using the system, and access volume grew linearly.

The general manager of the logistics park was satisfied with what the system had achieved and decided to expand its use and add new features. After discussion, the client decided to open the system to cargo owners, who can view freight forwarders' quotes online, notify forwarders online to pick up goods, monitor the current transport status of their goods, and check whether the third party has signed for delivery. Preliminary estimates put the system's daily RUV at 100,000 and daily PV above 500,000.

1.3, Load scenario three

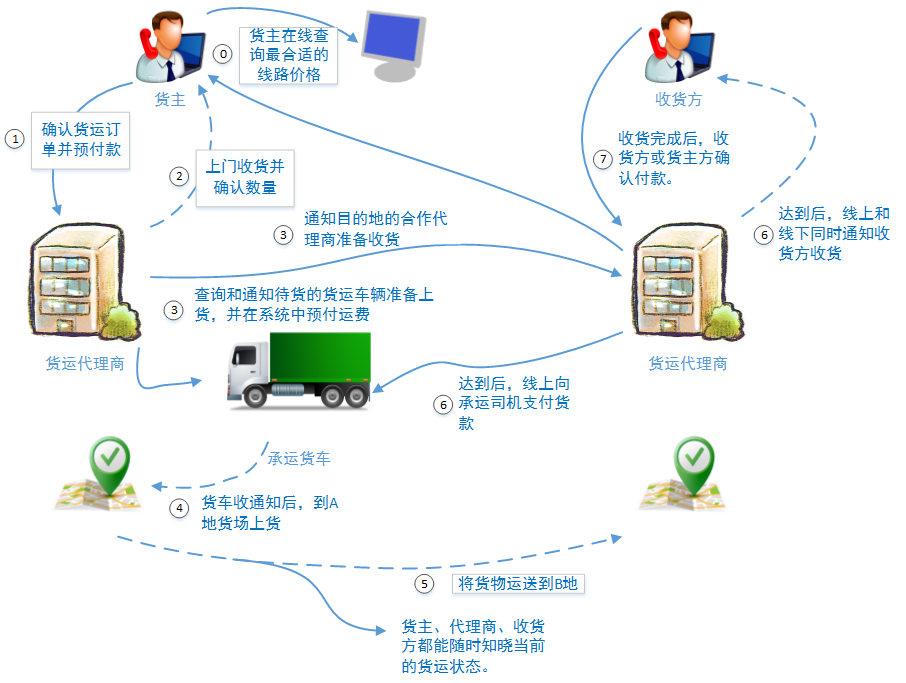

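A year later, word of the much-praised bulk freight service finally reached government leaders. The provincial official in charge of transport personally led a delegation to visit the logistics park, and in the end it was decided that the provincial government would take the lead, with local governments participating, in rolling this management approach out across the whole province. The province's 10 large logistics parks and 50 second-tier logistics parks, with their more than ten thousand freight forwarders, the independent forwarders scattered around the province, 100,000 individual and corporate cargo owners, and 400,000 well-qualified vehicles will all connect to the system.

On the feature side, fee settlement and freight guarantee functions were added, so that fees are managed through the whole process, from the cargo owner's prepayment to the third party's confirmation of receipt. To keep the online receiving step running smoothly, the new version also adds cooperative receiving between forwarders. The new system's daily RUV will exceed 500,000 and daily PV will pass 2.5 million.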

1.4, Load scenario four

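The service, economic, and word-of-mouth effects kept building. After a little more than two years of development, the system is now a well-known logistics and distribution platform in the province, dedicated to bulk freight. The time has finally come to work with the government to take the service nationwide. It is expected that more than 1,000 logistics parks, about 500,000 freight forwarders, 5 million freight vehicles, and countless individual and corporate cargo owners across the country will use the system. What RUV and PV should we expect? They cannot be estimated reliably. A simple multiplication across the country's 32 provinces does give a rough value (500,000 × 32 = 15 million+; 5 million × 32 = 150 million+, which already exceeds JD.com's off-peak traffic), but the logistics industry differs in scale from province to province, as does the number of practitioners, so such an estimate is not rigorous. Moreover, at this scale we should pay more attention to headroom for peak load.

On the business side: to make sure registered trucks are valid, your company has been granted access to the government's vehicle information database, and vehicle information is verified during vehicle registration. (This is a call to a third-party system interface, and we do not know whether that system can handle a high level of concurrency, so this problem is left to our architects. We will describe it in detail when we cover the business layer.)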
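The naive multiplication in this scenario can be written out as a small sketch. The per-province figures and the 32-province multiplier come from the text; `PEAK_FACTOR` is a purely hypothetical value added to show how a peak-headroom assumption changes the number the load layer must be sized for.

```python
# Figures from the text; PEAK_FACTOR is a hypothetical assumption.
PROVINCES = 32
PROVINCIAL_DAILY_RUV = 500_000
PROVINCIAL_DAILY_PV = 5_000_000   # per-province value used in the text's multiplication
PEAK_FACTOR = 3                   # hypothetical ratio of peak rate to average rate
SECONDS_PER_DAY = 86_400


def naive_national_estimate(per_province: int, provinces: int = PROVINCES) -> int:
    """Scale a provincial figure by the number of provinces (ignores regional differences)."""
    return per_province * provinces


def average_per_second(daily_total: int) -> float:
    """Average request rate if traffic were spread evenly over the day."""
    return daily_total / SECONDS_PER_DAY


national_ruv = naive_national_estimate(PROVINCIAL_DAILY_RUV)
national_pv = naive_national_estimate(PROVINCIAL_DAILY_PV)
avg_pv_rate = average_per_second(national_pv)
peak_pv_rate = avg_pv_rate * PEAK_FACTOR

print(f"daily RUV ~ {national_ruv:,}, daily PV ~ {national_pv:,}")
print(f"average {avg_pv_rate:.0f} PV/s, assumed peak {peak_pv_rate:.0f} PV/s")
```

Because real traffic is not spread evenly over the day, the peak figure rather than the daily total is what matters for capacity planning, and one PV usually fans out into several HTTP requests.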

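1.5, Pause for thought

At this point we have described several progressively larger business scenarios in detail (the scenarios will not change much even when we later discuss the business layer, the business communication layer, and the data storage layer). Readers can take a short break here and think about how you would build the load layer, or even the top-level architecture of the whole system.

Besides hardware, the performance of the whole system can also be affected by how the business layer is split, by code quality, by how caching is used, and by how well the database is tuned. Therefore:

In the articles that discuss the load layer, we assume that the design of every other layer in the architecture introduces no performance bottleneck.

If you have finished thinking, read on.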

2, Load solution proposals

2.1, Solution one: standalone Nginx/Haproxy

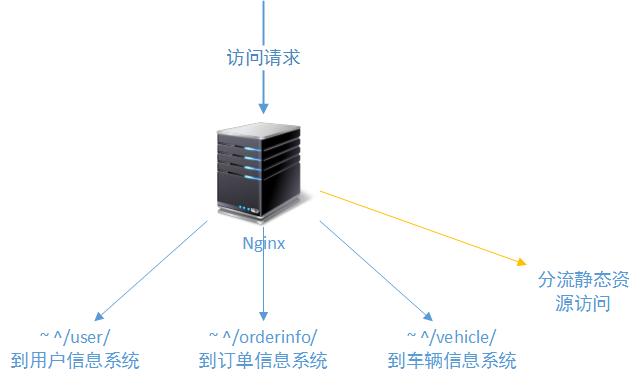

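Clearly, in the first scenario the system is under little pressure. It is a simple business system, and its daily traffic is nowhere near what could be called access pressure. But one customer requirement deserves our attention: the system's functionality and performance must be extensible later. To ensure this, we need a sound plan for splitting the business from the very beginning. For example, we would first separate the user information and permission subsystem from the order system, and a standalone vehicle information and positioning system may also need to be split out.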

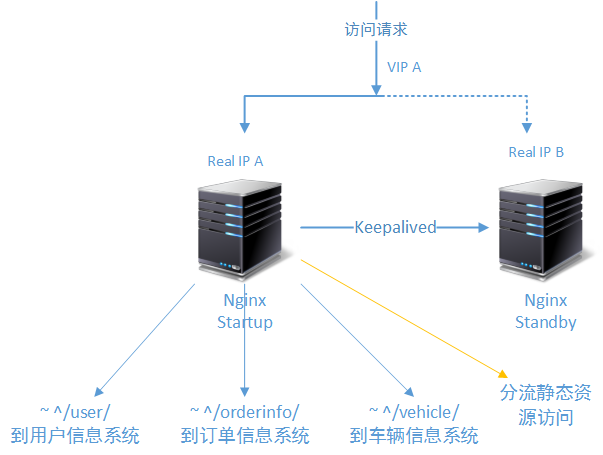

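This is an important reason to introduce a load balancing layer when the system is first built, and it is also one of the layer's key functions, as the figure shows.

As you can see, the load balancing layer has only one job at this stage: following the configured access rules, it forwards requests for different systems to the corresponding system, and it forwards invalid requests to an error page.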
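The rule-based forwarding described here can be sketched in a few lines. This is only an illustration of the idea, not Nginx or Haproxy configuration; the path prefixes, backend addresses, and error page path are all hypothetical.

```python
# Hypothetical mapping of URL path prefixes to subsystem backends.
ROUTES = {
    "/user/": "http://10.0.0.11:8080",
    "/order/": "http://10.0.0.12:8080",
    "/vehicle/": "http://10.0.0.13:8080",
}
ERROR_PAGE = "/error.html"  # hypothetical error page for unmatched requests


def route(path: str) -> str:
    """Return the target for a request path, or the error page if no rule matches."""
    for prefix, upstream in ROUTES.items():
        if path.startswith(prefix):
            return upstream + path
    return ERROR_PAGE


print(route("/order/list"))   # forwarded to the order subsystem
print(route("/unknown"))      # falls through to the error page
```

Keeping the rules in one place is what makes later splitting cheap: moving a subsystem to new machines only changes an entry in the table, not the clients.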

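2.2, Solution two: Nginx/Haproxy + Keepalived

After that, access pressure on the system increases further, and we care more and more about the system's stability. So while a single node can still meet business requirements, we introduce a hot standby scheme for the load layer (and for the other layers), so that if one node crashes, another node automatically takes over its work and buys engineers time to fix the problem, as shown in the figure.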
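The takeover behaviour of a hot standby pair can be modelled roughly as follows. This is a simplified sketch of the outcome only, assuming each node has a priority and a liveness flag; it does not reproduce how Keepalived actually exchanges VRRP advertisements, and the node names and priorities are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    priority: int       # higher priority wins when several nodes are alive
    alive: bool = True


def elect_active(nodes: List[Node]) -> Optional[Node]:
    """Pick the live node with the highest priority; None if every node is down."""
    candidates = [n for n in nodes if n.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.priority)


master = Node("nginx-master", priority=100)
backup = Node("nginx-backup", priority=90)
pair = [master, backup]

print(elect_active(pair).name)   # the master serves traffic while it is healthy
master.alive = False             # simulate a crash of the master
print(elect_active(pair).name)   # the backup takes over
```

Only one node handles traffic at a time, so this scheme improves availability but adds no throughput.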

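2.3, Solution three: LVS (DR) + Keepalived + Nginx

In the third version of the architecture, we add LVS so that the load layer can handle greater throughput and cope with possible traffic spikes while staying stable. LVS handles the first level of load distribution and then forwards requests to several Nginx servers behind it. In LVS's DR working mode, LVS only forwards the request to the backend. Each backend Nginx server must have a public IP address, and after receiving and processing the request, Nginx sends the result directly to the requester rather than back through LVS (how LVS works will be covered in detail in a later article).

Note the following: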

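- With LVS in front, Nginx no longer needs Keepalived as a hot standby. First, Nginx is no longer a single node handling the load but a cluster of several Nginx nodes. Second, LVS comes with port-based health checks for the backend servers.

- LVS itself runs on a single node. LVS is very stable, but to make it even more reliable we still use Keepalived to give LVS a hot standby node, just in case.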
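The idea of a port-based health check in front of a pool of Nginx nodes can be sketched as below. This is an illustration of the concept using plain TCP connects, not the actual LVS or Keepalived implementation; the function names and the backend addresses in the usage are hypothetical.

```python
import itertools
import socket
from typing import Callable, Iterator, List, Tuple

Backend = Tuple[str, int]  # (host, port)


def port_is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Port-based check: the backend is healthy if a TCP connection succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_backends(backends: List[Backend],
                     check: Callable[[str, int], bool] = port_is_open) -> List[Backend]:
    """Keep only the backends that pass the health check."""
    return [b for b in backends if check(*b)]


def round_robin(backends: List[Backend]) -> Iterator[Backend]:
    """Hand out backends in turn; the list must not be empty."""
    return itertools.cycle(backends)


# Usage with a stubbed check so the example runs without real servers.
pool = [("10.0.0.21", 80), ("10.0.0.22", 80), ("10.0.0.23", 80)]
alive = healthy_backends(pool, check=lambda host, port: host != "10.0.0.22")
picker = round_robin(alive)
print([next(picker) for _ in range(4)])
```

A failed node simply drops out of the pool on the next check, which is why the Nginx tier does not need its own hot standby in this solution.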

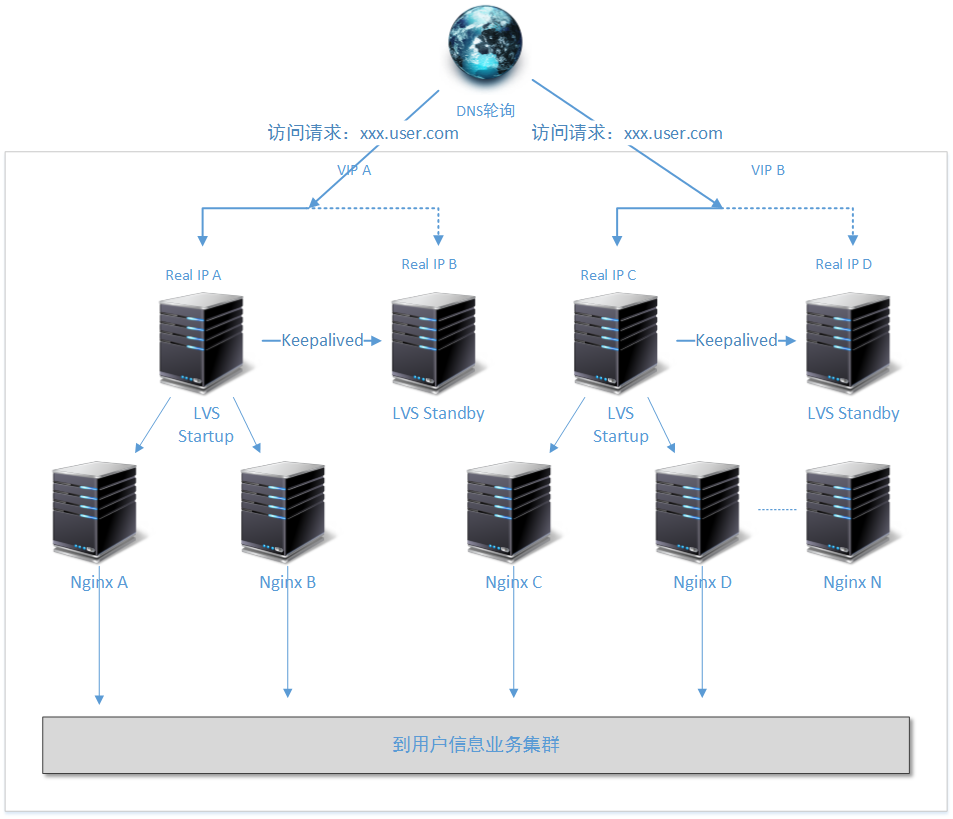

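2.4, Solution four: DNS round robin + LVS (DR) + Keepalived + Nginx

In scenario four, to handle an average daily PV of more than one hundred million, and with the business already exposed to the public network, we put DNS round robin at the very front, facing the Internet. Access pressure (for the user information system) is first spread across two symmetric LVS groups, and each group then splits the pressure further downstream.

Note how the load layer in the figure for this solution differs from the earlier ones: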
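DNS round robin can be pictured as a name server that rotates the order of the addresses it returns for a name, so that successive clients start with different LVS groups. The sketch below models only that rotation; the domain name and the two addresses (taken from a documentation-only address range) are hypothetical.

```python
from collections import deque
from typing import Dict, List


class RoundRobinDNS:
    """Toy resolver that rotates the address list after every answer."""

    def __init__(self, records: Dict[str, List[str]]) -> None:
        self._records = {name: deque(addrs) for name, addrs in records.items()}

    def resolve(self, name: str) -> List[str]:
        addrs = self._records[name]
        answer = list(addrs)   # current order is what this client sees
        addrs.rotate(-1)       # the next client gets a different first address
        return answer


dns = RoundRobinDNS({"user.example.com": ["203.0.113.10", "203.0.113.20"]})
for _ in range(3):
    print(dns.resolve("user.example.com")[0])
```

Real DNS answers are cached by resolvers and clients, so the split between the two LVS groups is only approximately even.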

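- First, we no longer separate business systems by directory name as in the earlier solutions. Instead, we split access to the business systems across different second-level domain names. This change lets each business system have its own independent load balancing layer.

- Note the detail in the figure: this load balancing layer serves only the "user information subsystem". The "order subsystem" and "vehicle information subsystem" that may also exist would each have their own independent load balancing layer.

- The Nginx services below LVS can be scaled out without limit. As in the solution for scenario three, Nginx itself no longer needs Keepalived for hot standby, and health checking is left entirely to the LVS layer above. Even if one or two Nginx servers fail, the impact on the load cluster as a whole is small.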
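The switch from directory names to second-level domain names changes where the subsystem is identified in a request. A minimal sketch of the two schemes, assuming hypothetical URLs under `example.com`:

```python
from typing import Optional
from urllib.parse import urlsplit


def subsystem_by_directory(url: str) -> Optional[str]:
    """Earlier solutions: the first path segment names the subsystem."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else None


def subsystem_by_subdomain(url: str) -> Optional[str]:
    """Solution four: the leftmost host label names the subsystem."""
    host = urlsplit(url).hostname
    return host.split(".")[0] if host else None


print(subsystem_by_directory("http://www.example.com/order/list"))
print(subsystem_by_subdomain("http://order.example.com/list"))
```

With the subdomain scheme the decision is made by DNS before any request reaches a load balancer, which is what allows each subsystem to sit behind its own load balancing layer.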

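Once the solution has grown to this point, there is no need to add more nodes to the LVS layer. Why? With an LVS working mode chosen to suit your business, two LVS nodes have enough performance to support all of the core web sites on Earth. If you have doubts about LVS performance, please search Google or Baidu yourself. Here is one reference: "LVS performance: the theoretical limit of forwarding data" http://www.zhihu.com/question/21237968

3, Why there is no standalone LVS solution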

We will come back to this question in the next article, "Architecture: load balancing layer scheme (2) - LVS, Keepalived, Nginx installation and core principles". In fact, some readers may already have worked out the answer from the architecture evolution described in this article. To give the reason in one sentence: LVS sacrifices configurability to guarantee performance, so when used alone it often cannot meet the business layer's need for flexible distribution of requests by the load layer.

4, Notes

4.1, Term descriptions

- TPS (transactions per second): an important indicator of business layer processing performance. When a business service handles one complete business process and returns the result to the layer above, that is one request/transaction. The number of such processes the whole business system can complete in one second is its TPS. TPS depends heavily on the system architecture (especially the architecture of the business layer and the business communication layer), and it is also closely tied to the physical environment and code quality.

- PV (page views): the most commonly used metric for measuring a site's traffic. A page generally means an ordinary HTML page, including HTML content generated dynamically by PHP, JSP, and the like. Note that one complete page display counts as one PV, but a single PV usually requires multiple HTTP requests to fetch static resources. Keep this in mind.

- UV (Unique Visitor): a distinct IP address that makes a PV request to the system within a unit of time (for example one day or one hour) counts as one UV. Repeated PVs are not counted again.

- RUV (Repeat User Visitor): a distinct user who makes a PV request to the system within a unit of time (for example one day or one hour), with repeated visits counted.
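To make the definitions concrete, the sketch below computes PV, UV, and TPS from a tiny, made-up set of records for one unit of time. The record layout and sample values are assumptions for illustration. RUV is left out because it needs a rule for what counts as a repeated visit, which the definition above leaves open.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PageView:
    ip: str     # client IP address
    page: str   # page that was fully displayed


def pv(views: List[PageView]) -> int:
    """PV: every complete page display counts once."""
    return len(views)


def uv(views: List[PageView]) -> int:
    """UV: distinct IPs in the period; repeated page views are not counted again."""
    return len({v.ip for v in views})


def tps(completed_transactions: int, seconds: float) -> float:
    """TPS: complete business transactions finished per second."""
    return completed_transactions / seconds


# Hypothetical sample: three page views from two IP addresses.
sample = [
    PageView("198.51.100.1", "/order/list"),
    PageView("198.51.100.1", "/order/42"),
    PageView("198.51.100.2", "/order/list"),
]
print(pv(sample), uv(sample), tps(1200, 60))
```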

Article from: http://blog.csdn.net/yinwenjie/article/details/46605451

Thanks to the original author for generously sharing this article.