While your application is still small, you probably won't care about Webpack's compilation speed: whether you use 3.x or 4.x, it is fast enough, or at least not slow enough to make you impatient. But as the business grows, a project quickly ends up with hundreds of components. At that point, when you build the production bundle for the front-end module on its own, or when the CI tool builds the whole project, an unoptimized Webpack configuration makes compilation painfully slow. A build that takes a little over ten seconds and one that takes a minute or two are very different experiences. Two concerns, developer morale and not letting front-end compilation drag down the whole CI pipeline, force us to speed up compilation. This article explores where compilation speed can be optimized. It does not explain the APIs involved in much detail; readers who need an introduction can go straight to the documentation. The author uses Webpack 4.29.6, and everything below is based on that version.

First, the existing compilation speed optimizations

My Webpack scaffold was ejected from CRA and is based on Webpack 4.x. Its choice and design of Loaders and Plugins already follow best practice, so there is basically nothing that needs changing. Its original configuration includes three compilation-speed optimizations:

1. Parallel processing and caching of earlier results through the parallel and cache options of terser-webpack-plugin. terser-webpack-plugin replaces the earlier UglifyJS plugin, which is no longer maintained. Starting with Webpack 4.x, terser-webpack-plugin is the recommended tool for code compression, obfuscation, and dead code elimination (which is how Tree Shaking is achieved). As its name suggests, parallel runs the work in parallel, while cache stores the plugin's results so that files whose content has not changed can reuse the previous output on the next build.

2. Caching Babel's compilation results through babel-loader's cache option (cacheDirectory).

3. Using IgnorePlugin with a regular expression that matches moment's entire locale folder, so that none of the locale files are bundled. If you do need a particular language, manually import only that language pack.
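The three settings above can be sketched roughly as follows. This is a minimal sketch, not CRA's actual configuration: the option names (parallel, cache, cacheDirectory) are the ones documented for terser-webpack-plugin 1.x and babel-loader, the variable names are invented for illustration, and the values are kept as plain data so the snippet runs without Webpack installed.

```javascript
// Options that would be passed to `new TerserPlugin(...)` in optimization.minimizer.
const terserPluginOptions = {
  parallel: true, // minify files in several worker processes
  cache: true,    // reuse minified output for unchanged files
};

// Options that would be passed to babel-loader in module.rules.
const babelLoaderOptions = {
  cacheDirectory: true, // cache transpiled output on disk
};

// Arguments that would be passed to `new webpack.IgnorePlugin(...)`:
// ignore every `./locale` request made from inside the moment package.
const ignoreMomentLocales = [/^\.\/locale$/, /moment$/];

// A language that is really needed is then imported by hand,
// e.g. import 'moment/locale/zh-cn';
```

Keeping the options in named objects like this is only a presentation choice for the sketch; in a real configuration they usually sit inline next to the plugin or loader they belong to.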

As the project grew, the production build time rose from a dozen or so seconds to over a minute. That was hard to bear, and it forced the author to add further optimizations to save build time. The following sections explain the additional optimizations I made.

Second, multi-threading (multi-process) support

The parallel option of terser-webpack-plugin from the previous section suggests an idea: use multiple processes to simulate multi-threading and compile resources in parallel. So I introduced HappyPack. My older scaffold used it as well, but I never wrote about that scaffold, so I cover it here. HappyPack is no stranger to anyone who has worked with Webpack, and there are well-written articles online that explain how it works. Roughly, HappyPack adds a layer between Webpack and the Loaders. Webpack no longer hands work directly to a Loader. Instead, when Webpack detects a module of a resource type that needs compiling, it passes the task to HappyPack. HappyPack schedules its internal threads, assigns one of them to call the Loader for that resource type, and collects the result when the thread finishes. HappyPack then returns the result to Webpack, which writes the output to the destination path. Work that used to run in a single thread is thus spread across several threads and processed in parallel.

Usage is as follows:

First, import HappyPack and create a thread pool:

const HappyPack = require('happypack');

const happyThreadPool = HappyPack.ThreadPool({size: require('os').cpus().length - 1});

Replace the original Loader with the HappyPack loader:

    {
      test: /\.(js|mjs|jsx|ts|tsx)$/,
      include: paths.appSrc,
      use: ['happypack/loader?id=babel-application-js'],
    },

Move the original Loader configuration into the corresponding plugin:

    new HappyPack({
      id: 'babel-application-js',
      threadPool: happyThreadPool,
      verbose: true,
      loaders: [
        {
          loader: require.resolve('babel-loader'),
          options: { /* ...omitted */ },
        },
      ],
    }),

That is roughly how it is used. Many articles explain HappyPack configuration; readers who have not used it can search for them, so I only mention it in passing here.

HappyPack has not been maintained for a long time, and it has a problem with url-loader that can leave images processed by url-loader broken. I filed an Issue about this, and other developers have run into it as well. In short, test carefully when you use it.

To illustrate the advantage of multi-threading, here is an example:

Suppose we have four tasks, named A, B, C, and D.

Task A: takes 5 seconds

Task B: takes 7 seconds

Task C: takes 4 seconds

Task D: takes 6 seconds

Processed serially in a single thread, the total time is about 22 seconds.

With multi-threaded parallel processing, the total time is about 7 seconds, which is the duration of the slowest task, B. Simply by configuring multi-threading we get a significantly faster build.

Having read this far, you may wonder whether compilation speed optimization can stop here. Of course not. The example above is not representative of real projects. The author's actual project looks like this:

We have four tasks, named A, B, C, and D.

Task A: takes 5 seconds

Task B: takes 60 seconds

Task C: takes 4 seconds

Task D: takes 6 seconds

Processed serially in a single thread, the total time is about 75 seconds.

With multi-threaded parallel processing, the total time is about 60 seconds. Going from 75 seconds to 60 is an improvement, but because task B takes so long, the compilation speed of the project as a whole does not change fundamentally. In fact, the author's earlier Webpack 3.x scaffold compiled slowly for exactly this reason, so relying on multi-threading alone to fix slow builds in large projects is unrealistic.
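The arithmetic of both examples can be checked with a small simulation. This is a simplified sketch with a hypothetical helper, totalBuildTime; it assumes tasks are independent and ignores the cost of starting workers and passing data between them, which a real HappyPack setup does pay.

```javascript
// Simulate running tasks (durations in seconds) on a pool of `workers` workers.
// Each task goes to the worker that becomes free first; the result is the
// moment the last worker finishes.
function totalBuildTime(durations, workers) {
  const busyUntil = new Array(workers).fill(0);
  for (const duration of durations) {
    const next = busyUntil.indexOf(Math.min(...busyUntil));
    busyUntil[next] += duration;
  }
  return Math.max(...busyUntil);
}

const evenTasks = [5, 7, 4, 6];    // tasks A-D from the first example
const skewedTasks = [5, 60, 4, 6]; // tasks A-D from the author's project

console.log(totalBuildTime(evenTasks, 1), totalBuildTime(evenTasks, 4));     // 22 7
console.log(totalBuildTime(skewedTasks, 1), totalBuildTime(skewedTasks, 4)); // 75 60
```

However many workers are added, the result never drops below the longest single task, which is why the 60-second task dominates the second case.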

So what else can we do? We can take inspiration from TerserPlugin and rely on caching: reusing the previous result on the next build, without compiling again, is always the fastest option.

There are at least three ways to skip compiling some files during a build, and they speed up the overall front-end build at its root:

1. Caching, as terser-webpack-plugin does. By default the plugin writes its cache under node_modules/.cache/terser-webpack-plugin. It encodes earlier processing results with SHA or base64 and stores a mapping to the files, so that the next time it processes a file it can easily check whether the same file (same content) is already cached. Other tools in the Webpack ecosystem have similar features, though not necessarily the same caching scheme.

2. Using the externals configuration to exclude externally provided libraries from compilation. They are not compiled at build time; at run time, the production page downloads them directly from a CDN through <script> tags.

3. Compiling certain libraries once and saving the result, so that every later build skips them while the final compiled code can still reference them. This is what Webpack's DllPlugin does; the name DLL borrows the dynamic-link library concept from Windows.
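The first approach, reusing a result when the content is unchanged, can be sketched as below. This is an illustration of content-hash caching in general, not terser-webpack-plugin's actual implementation: contentKey and createCachedTransform are hypothetical names, the choice of SHA-1 is an assumption, and the cache is an in-memory Map rather than files on disk.

```javascript
const crypto = require('crypto');

// Build a cache key from the file content, so identical content maps to the
// same key regardless of file name or modification time.
function contentKey(source) {
  return crypto.createHash('sha1').update(source).digest('hex');
}

// Wrap an expensive transform so it runs only for content not seen before.
// `store` is an in-memory Map here; real tools persist entries on disk.
function createCachedTransform(transform, store = new Map()) {
  return function cachedTransform(source) {
    const key = contentKey(source);
    if (!store.has(key)) {
      store.set(key, transform(source));
    }
    return store.get(key);
  };
}

// Usage: a stand-in "minifier" that counts how often it really runs.
let runs = 0;
const minify = createCachedTransform((source) => {
  runs += 1;
  return source.replace(/\s+/g, ' ').trim();
});

minify('const  a = 1;');
minify('const  a = 1;'); // same content: served from the cache
minify('const  b = 2;'); // new content: compiled
console.log(runs); // 2
```

Keying on content rather than on timestamps means a fresh checkout or an untouched file still hits the cache, as long as the cache itself survives between builds.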

The following sections explain each of these approaches.

Third, Loader caching

Besides terser-webpack-plugin and babel-loader, which the first section described as having built-in cache support, there is also a Loader that can be placed in front of other Loaders to cache their results: cache-loader. Typically we use cache-loader to cache the compiled output of time-consuming Loaders. For example, when building for production, the compilation of Less files can be cached like this:

    {
      test: /\.less$/,
      use: [
        {
          loader: MiniCssExtractPlugin.loader,
          options: { /* ...omitted */ },
        },
        {
          loader: 'cache-loader',
          options: {
            cacheDirectory: paths.appPackCacheCSS,
          },
        },
        {
          loader: require.resolve('css-loader'),
          options: { /* ...omitted */ },
        },
        {
          loader: require.resolve('postcss-loader'),
          options: { /* ...omitted */ },
        },
      ],
    }

Loaders execute from bottom to top, so with the configuration above cache-loader caches the compiled results of postcss-loader and css-loader.
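The bottom-to-top order can be made visible with a toy model of a loader chain. This is a simplified sketch: runLoaders and tag are hypothetical helpers, the loaders are stand-in functions, and the model covers only the normal execution phase, not the separate pitching phase that real Webpack loaders (including cache-loader) also take part in.

```javascript
// Apply a `use` array the way Webpack applies a normal loader chain:
// the last entry runs first and each result feeds the loader before it.
function runLoaders(use, source) {
  return use.reduceRight((result, loader) => loader(result), source);
}

// Stand-in loaders that only tag their input so the order becomes visible.
const tag = (name) => (input) => `${name}(${input})`;
const use = [tag('extract'), tag('cache'), tag('css'), tag('postcss')];

console.log(runLoaders(use, 'styles')); // extract(cache(css(postcss(styles))))
```

In the output, everything nested inside cache(...) is what a caching loader in that position gets to store, and whatever wraps it still runs on every build.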

However, we cannot use cache-loader to cache the results of mini-css-extract-plugin. Its job is to take the JS output of the preceding Loaders, which contains the styles as strings, and extract it into separate CSS files. Caching those standalone CSS files is not something cache-loader does.

If we are caching Less compilation results in the development environment instead, cache-loader can cache the results of style-loader. style-loader does not extract style code from the JS file. It only adds some extra code to the compiled output so that, at run time, the styles are placed in a <style> tag created inside <head>. That performs less well, so this approach is basically used only in development.

When configuring cache-loader for style files, keep these two points in mind; otherwise styles may fail to compile correctly.

Besides style files, the compiled results of other file types can also be cached, except for results that are emitted as separate files. Take url-loader: an image whose size stays under limit is inlined as base64, while a file larger than limit is emitted as a separate image file, which cache-loader cannot cache. When that happens, requests for the resource return 404. This needs attention when using cache-loader.
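Why an emitted file escapes the cache can be shown with a simplified model. This is only a sketch of the idea, not url-loader's source: urlLoaderLike is a hypothetical function, the PNG MIME type and the /static/media/ prefix are assumptions, and the exact comparison against limit may differ from the real loader.

```javascript
// Simplified model of url-loader: small files are inlined into the module
// source, large files are written out as a side effect via `emitFile`.
function urlLoaderLike(content, name, limit, emitFile) {
  if (content.length <= limit) {
    const dataUrl = `data:image/png;base64,${content.toString('base64')}`;
    return `module.exports = ${JSON.stringify(dataUrl)};`;
  }
  emitFile(name, content); // side effect: not part of the returned source
  return `module.exports = ${JSON.stringify('/static/media/' + name)};`;
}

const emitted = [];
const emitFile = (name) => emitted.push(name);

const small = urlLoaderLike(Buffer.alloc(10), 'dot.png', 100, emitFile);
const large = urlLoaderLike(Buffer.alloc(500), 'photo.png', 100, emitFile);

console.log(small.startsWith('module.exports = "data:image/png;base64,')); // true
console.log(large);   // module.exports = "/static/media/photo.png";
console.log(emitted); // [ 'photo.png' ]
```

A cache that stores only the returned module source can replay the string in large on the next build, but nothing calls emitFile again, so the URL points at a file that was never written.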

Caching noticeably improves compilation speed only for Loaders that are actually time-consuming. If a file type is quick to compile, or there are too few such files, there is no need to cache it, because the speed-up would not be noticeable.

Finally, the author moved the cache directories of all Loaders and Plugins from the default node_modules/.cache/ to build_pack_cache/ (production) and dev_pack_cache/ (development) in the project root, selected automatically by NODE_ENV. I did this because our CI deletes the node_modules folder on every run and unpacks a fresh one from node_modules.tar.gz, so any cache under node_modules/.cache/ would be lost, and I did not want to touch the CI code. The change also makes the cache files easier to manage and keeps node_modules from growing. To clear the cache, simply delete the corresponding directory. Neither directory should be tracked by Git, so add both to .gitignore. If the cache directory does not exist in the CI environment, the relevant Loaders create it automatically. Development and production builds also compile resources differently (development builds usually skip compression and obfuscation, for example), so the cache directories must be kept separate for the cached results to be valid in each environment.
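Choosing the cache directory from NODE_ENV might look roughly like this. It is a sketch under assumptions: the two directory names come from the text above, while resolveCacheRoot, cachePaths, and the per-tool subdirectory names are hypothetical and not taken from the author's paths.js.

```javascript
const path = require('path');

// Pick the cache root from NODE_ENV: one directory for production builds,
// another for development, both directly under the project root.
function resolveCacheRoot(projectRoot, nodeEnv) {
  const dirName = nodeEnv === 'production' ? 'build_pack_cache' : 'dev_pack_cache';
  return path.resolve(projectRoot, dirName);
}

// Hypothetical per-tool subdirectories below the cache root.
function cachePaths(projectRoot, nodeEnv) {
  const root = resolveCacheRoot(projectRoot, nodeEnv);
  return {
    babel: path.join(root, 'babel-loader'),
    terser: path.join(root, 'terser-webpack-plugin'),
    css: path.join(root, 'cache-loader-css'),
  };
}

console.log(cachePaths('/project', 'production').css);
```

The resulting paths would then be handed to the options that accept a directory, such as babel-loader's and cache-loader's cacheDirectory, or terser-webpack-plugin's cache option, which also accepts a path string.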

Fourth, externals

According to the official Webpack documentation: suppose our project uses a library that we do not want Webpack to compile (it has most likely already been compiled and optimized into a production package that can be used directly), and we reach it either globally through window or through one of the module formats. We can then list it in the externals configuration.

For example, to use jQuery, I can configure:

externals: { jquery: 'jQuery' }

I can then use it just as if the package had been imported from node_modules:

import $ from 'jquery';

$('.div').hide();

The prerequisite is that, before the code above runs, the HTML file has already downloaded the library we depend on from a CDN through a <script> tag. Note that the externals configuration does not add this tag by itself; it has to be present in the HTML file that hosts the application:

<script src="https://code.jquery.com/jquery-1.1.14.js"></script>

externals also supports other, more flexible syntaxes. For example, if I only want to access certain methods of a library, I can even attach those methods to the window object:

externals : { subtract : { root: ["math", "subtract"] } }

I can then access the subtract method through window.math.subtract.
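How such a root path is followed at run time can be pictured with a small stand-in. This is a sketch of the lookup only, not the code Webpack generates: resolveExternal and fakeWindow are hypothetical, and the object shape simply mirrors the math and subtract names from the example.

```javascript
// Resolve an externals `root` entry against a global object:
// ['math', 'subtract'] is read as globalObject.math.subtract.
function resolveExternal(globalObject, root) {
  const keys = Array.isArray(root) ? root : [root];
  return keys.reduce((current, key) => current[key], globalObject);
}

// Stand-in for `window` after the library's <script> tag has run.
const fakeWindow = { math: { subtract: (a, b) => a - b } };

const subtract = resolveExternal(fakeWindow, ['math', 'subtract']);
console.log(subtract(5, 3)); // 2

// If the <script> never loaded, the lookup fails and the app cannot start.
try {
  resolveExternal({}, ['math', 'subtract']);
} catch (error) {
  console.log(error instanceof TypeError); // true
}
```

The failing case is the important one for deployment: the bundle contains no copy of the library, so everything depends on the global having been defined before the application code runs.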

If you are interested, see the documentation for the other configuration options.

However, the author's project does not do this. After final delivery to the customer it is expected to run in an intranet environment (or one heavily restricted by a firewall) and will most likely be unable to reach any Internet resources. Requesting CDN resources through <script> tags would therefore fail, and when the prerequisite dependencies cannot be downloaded the whole application crashes.

Fifth, DllPlugin

At the end of the previous section I described the network restrictions the project faces after delivery, so I had to find another way to get capabilities similar to what externals provides: Webpack's DllPlugin and its partner DllReferencePlugin. I use DllPlugin only when building the production package.

To use DllPlugin, we need a separate Webpack configuration file, here named webpack.dll.config.js to distinguish it from the main Webpack configuration file, webpack.config.js. It reads as follows:

    'use strict';

    process.env.NODE_ENV = 'production';

    const webpack = require('webpack');
    const path = require('path');
    const {dll} = require('./dll');
    const DllPlugin = require('webpack/lib/DllPlugin');
    const TerserPlugin = require('terser-webpack-plugin');
    const getClientEnvironment = require('./env');
    const paths = require('./paths');

    const shouldUseSourceMap = process.env.GENERATE_SOURCEMAP !== 'false';

    module.exports = function (webpackEnv = 'production') {
      const isEnvDevelopment = webpackEnv === 'development';
      const isEnvProduction = webpackEnv === 'production';
      const publicPath = isEnvProduction ? paths.servedPath : isEnvDevelopment && '/';
      const publicUrl = isEnvProduction ? publicPath.slice(0, -1) : isEnvDevelopment && '';
      const env = getClientEnvironment(publicUrl);

      return {
        mode: isEnvProduction ? 'production' : isEnvDevelopment && 'development',
        devtool: isEnvProduction ? 'source-map' : isEnvDevelopment && 'cheap-module-source-map',
        entry: dll,
        output: {
          path: isEnvProduction ? paths.appBuildDll : undefined,
          filename: '[name].dll.js',
          library: '[name]_dll_[hash]'
        },
        optimization: {
          minimize: isEnvProduction,
          minimizer: [ /* ...omitted */ ]
        },
        plugins: [
          new webpack.DefinePlugin(env.stringified),
          new DllPlugin({
            context: path.resolve(__dirname),
            path: path.resolve(paths.appBuildDll, '[name].manifest.json'),
            name: '[name]_dll_[hash]',
          }),
        ],
      };
    };

To make the DLLs easier to manage, we also keep a separate file, dll.js, which holds the entry of webpack.dll.config.js. Every library that DllPlugin should process is recorded in this file:

    const dll = {
      core: [
        'react',
        '@hot-loader/react-dom',
        'react-router-dom',
        'prop-types',
        'antd/lib/badge',
        'antd/lib/button',
        'antd/lib/checkbox',
        'antd/lib/col',
        // ...omitted
      ],
      tool: [
        'js-cookie',
        'crypto-js/md5',
        'ramda/src/curry',
        'ramda/src/equals',
      ],
      shim: [
        'whatwg-fetch',
        'ric-shim'
      ],
      widget: [
        'cecharts',
      ],
    };

    module.exports = {
      dll,
      dllNames: Object.keys(dll),
    };

Which libraries go into the DLLs depends on your own project. For particularly large libraries that cannot be split into modules and do not support Tree Shaking, such as Echarts, it is recommended to build a custom bundle on the official website that contains only the features the project needs, rather than using the whole library. Otherwise a lot of download time is wasted, JS parsing time increases, and first-screen performance drops.

Next, we add DllReferencePlugin to the plugins configuration in webpack.config.js to map the libraries that DllPlugin processed, so that the compiled code can find the libraries it depends on from the window object:

    {
      // ...omitted
      plugins: [
        // ...omitted
        // The spread (...) expands the array here because we have several DLLs,
        // and the output of each DLL needs its own DllReferencePlugin to read the
        // manifest.json mapping file that DllPlugin produced.
        // The dev environment does not use the DllPlugin optimization for now.
        ...(isEnvProduction
          ? dllNames.map(dllName => new DllReferencePlugin({
              context: path.resolve(__dirname),
              manifest: path.resolve(__dirname, '..', `build/static/dll/${dllName}.manifest.json`)
            }))
          : []
        ),
        // ...omitted
      ]
      // ...
    }

We also need to add <script> tags to index.html, the template that hosts our application, so that it references these DLL libraries from the output folder configured in webpack.dll.config.js. DllPlugin and its partner do not do this for us, and neither does html-webpack-plugin, because it cannot inject such specific content into the index.html template. Its enhanced sibling script-ext-html-webpack-plugin can, and I have used that plugin before to inline JS into index.html. But I did not want to add yet another dependency to node_modules, so I came up with a different approach:

The CRA scaffold already uses DefinePlugin to create global variables at compile time. The most common use is the process.env variables, which let our code tell whether it is running in development or production. Given that design, why not reuse it? We expose the names of the JS files compiled by DllPlugin as a global variable and loop over that array in the index.html template to create the <script> tags. The array is dllNames, exported from the dll.js file mentioned above.
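The article does not show how the array reaches DefinePlugin, so the following is only one possible sketch. The withDllNames helper and the inline dllNames list are assumptions for illustration; the { 'process.env': { ... } } shape with JSON-encoded values mirrors what CRA's env.stringified looks like.

```javascript
// Names of the DLL bundles; in the article these come from dll.js.
const dllNames = ['core', 'tool', 'shim', 'widget'];

// DefinePlugin replaces each key with the given code string, so values must
// be JSON-encoded. The shape below mirrors CRA's `env.stringified`.
function withDllNames(stringified, names) {
  return {
    'process.env': {
      ...stringified['process.env'],
      DLL_NAMES: JSON.stringify(names),
    },
  };
}

const definitions = withDllNames(
  { 'process.env': { NODE_ENV: JSON.stringify('production') } },
  dllNames
);

// These definitions would be passed to `new webpack.DefinePlugin(definitions)`.
// Looping over the array in the template then yields one URL per DLL name:
const scriptUrls = JSON.parse(definitions['process.env'].DLL_NAMES)
  .map((name) => `/static/dll/${name}.dll.js`);
console.log(scriptUrls);
```

Encoding the array with JSON.stringify matters because DefinePlugin substitutes source text: the template ends up containing an array literal it can call forEach on.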

Then we modify index.html:

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <meta charset="UTF-8">
      <title></title>
      <% if (process.env.NODE_ENV === "production") { %>
        <% process.env.DLL_NAMES.forEach(function (dllName){ %>
          <script src="/static/dll/<%= dllName %>.dll.js"></script>
        <% }) %>
      <% } %>
    </head>
    <body>
      <noscript>Please allow your browser to run JavaScript scripts.</noscript>
      <div id="root"></div>
    </body>
    </html>

Finally, we modify the build.js script to add the DLL build step:

    function buildDll (previousFileSizes){
      let allDllExisted = dllNames.every(dllName =>
        fs.existsSync(path.resolve(paths.appBuildDll, `${dllName}.dll.js`)) &&
        fs.existsSync(path.resolve(paths.appBuildDll, `${dllName}.manifest.json`))
      );
      if (allDllExisted){
        console.log(chalk.cyan('Dll is already existed, will run production build directly...\n'));
        return Promise.resolve();
      } else {
        console.log(chalk.cyan('Dll missing or incomplete, first starts compiling dll...\n'));
        const dllCompiler = webpack(dllConfig);
        return new Promise((resolve, reject) => {
          dllCompiler.run((err, stats) => {
            // ...omitted
          })
        });
      }
    }

    checkBrowsers(paths.appPath, isInteractive)
      .then(() => {
        // Start dll webpack build.
        return buildDll();
      })
      .then(() => {
        // First, read the current file sizes in build directory.
        // This lets us display how much they changed later.
        return measureFileSizesBeforeBuild(paths.appBuild);
      })
      .then(previousFileSizes => {
        // Remove folders contains hash files, but leave static/dll folder.
        fs.emptyDirSync(paths.appBuildCSS);
        fs.emptyDirSync(paths.appBuildJS);
        fs.emptyDirSync(paths.appBuildMedia);
        // Merge with the public folder
        copyPublicFolder();
        // Start the primary webpack build
        return build(previousFileSizes);
      })
      .then(({stats, previousFileSizes, warnings}) => {
        // ...omitted
      })
      // ...omitted

The logic is roughly this: if both xxx.dll.js and the corresponding xxx.manifest.json exist, the DLLs are not recompiled; if either is missing, they are. Once the DLLs are done, the main package is compiled. Because the files compiled by DllPlugin also live in the build folder, we can no longer wipe the entire build folder before each main build. Instead we empty only the other directories and leave the one that holds the dll.js and manifest.json files.

If the underlying dependencies really do change and the DLLs need updating, then given the detection logic above we only need to delete one of the dll.js files, or simply delete the entire build folder.

That completes all of the DLL-related configuration.

At the start of this section I mentioned that DllPlugin is used only for production builds. That is because packaging in the development environment is special and complex:

In development, the whole application is packaged and served by webpack-dev-server, which is an Express server. Inside it, webpack-dev-middleware is mounted as middleware. webpack-dev-middleware serves everything the Webpack compiler produces from an in-memory file system and, together with the dev server, watches the source files for changes and provides HMR. webpack-dev-server is instantiated with a Webpack compiler and an HTTP-related configuration object. The compiler runs inside webpack-dev-middleware in watch mode, and the middleware replaces the compiler's outputFileSystem with an in-memory file system. Nothing the compiler emits is actually written to disk; it is stored in that in-memory file system, which is itself a wrapper modeled on the Node.js fs module. Keeping the compiled results in memory is the cleverest part of webpack-dev-middleware.

When the front-end page requests a static resource in development, the request reaches the dev server. After routing, static-resource requests are directed to the in-memory file system. The resource is held in memory in binary form and returned as a stream in the response, with the matching MIME type set in the Content-Type header, so the browser can parse the data according to that header. Even when a resource lives on disk, the file must first be read from disk into memory and then streamed to the client, so serving from disk adds a read step and cannot be as fast as serving straight from memory. The in-memory file system is reclaimed when the process started by npm start is killed, and a new one is created the next time the process starts.
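The request path described above can be pictured with a toy model. This is not webpack-dev-middleware's code: memoryFiles, mimeTypes, and respondFromMemory are hypothetical, a Map stands in for the in-memory file system, and the response is returned as a plain object instead of being streamed.

```javascript
const path = require('path');

// A tiny in-memory "file system": URL path -> file content held as a Buffer.
const memoryFiles = new Map([
  ['/static/js/main.js', Buffer.from('console.log("hi");')],
  ['/static/css/main.css', Buffer.from('body { margin: 0; }')],
]);

// Just enough MIME types for the example.
const mimeTypes = {
  '.js': 'application/javascript',
  '.css': 'text/css',
};

// Look the request up in memory and describe the HTTP response.
function respondFromMemory(files, urlPath) {
  const body = files.get(urlPath);
  if (!body) {
    return { status: 404, headers: {}, body: Buffer.alloc(0) };
  }
  const type = mimeTypes[path.extname(urlPath)] || 'application/octet-stream';
  return { status: 200, headers: { 'Content-Type': type }, body };
}

const hit = respondFromMemory(memoryFiles, '/static/js/main.js');
console.log(hit.status, hit.headers['Content-Type']); // 200 application/javascript
console.log(respondFromMemory(memoryFiles, '/missing.png').status); // 404
```

Because the Map lives inside the process, it disappears when the process exits, which matches the behavior described for the dev server's in-memory file system.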

Because of these peculiarities of development packaging, how to use DllPlugin in development still needs study. For now, in development I use only multi-threading and the various caches. The compiler does less work in development than in production, and all reads and writes go through memory, so it is fast: each recompile after a source change finishes quickly. Compilation speed in development is acceptable as it stands and needs no further optimization for now.

Incidentally, while reading documents and articles about DllPlugin, I noticed that many people call DllPlugin obsolete and say externals is easier to use and simpler to configure. Even the best-practice scaffolds CRA and vue-cli no longer use DllPlugin, on the grounds that Webpack 4.x compiles fast enough. My experience is different: on a large, long-lived project based on Webpack 4.x, I have not found 4.x to be all that fast. And since the project will most likely have no Internet access after delivery, externals is of no use to it; DllPlugin is the only way to speed up the production build. I think this is why Webpack 4.x still has not removed DllPlugin: not every front-end project is an Internet project. Once more: practice is the real test, so do not simply repeat what others say.

Sixth, how much faster is compilation?

The development machine has 4 cores and 8 threads, with a base single-core frequency of 2.2 GHz. All tests use the customized Echarts build.

1. Build speed of the author's project with the original CRA scaffold.

First build, without any of the caches from CRA's original configuration. This is exactly what used to happen in CI, where node_modules was deleted and recreated on every run, so nothing could be cached:

A little over 1 minute 10 seconds.

With caches in place:

If Echarts is not customized and the entire library is imported directly, the uncached build takes roughly 5 seconds longer.

2. After introducing the DLLs.

First build, without DLL files or any of the caches from CRA's original configuration:

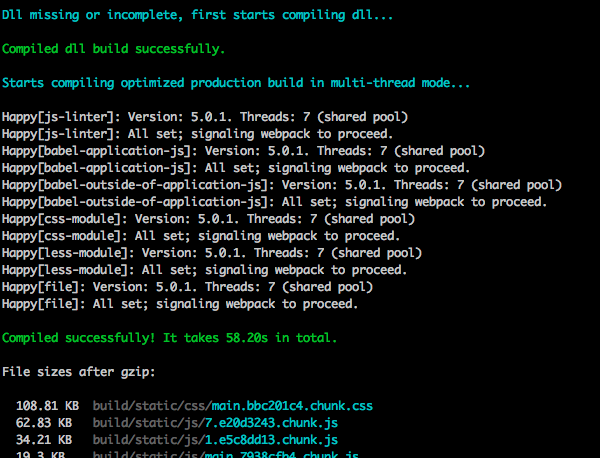

The DLLs are compiled first and then the main package; the whole process takes 57 seconds.

With DLL files and caches in place:

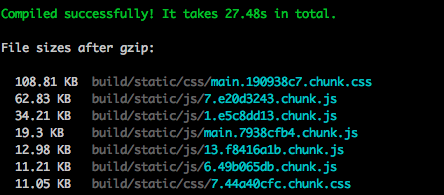

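Down to a little over 27 seconds.

3. Finally, we add multi-threading and cache-loader:

Without any DLL files, caches from CRA's original configuration, or cache-loader caches:

Close to 60 seconds.

With DLL files and all caches in place:

The build time has finally dropped to about 17 seconds. On the CI server it runs a little faster still.

Compared with the original build time of over 1 minute 10 seconds, this is a fundamental improvement in packaging speed. This is what we wanted.

Seventh, summary

At this point all of the underlying dependencies have been moved into DLLs, almost everything that can be cached is cached, and multi-threaded compilation is supported. Once the project is stable, small changes will keep compiling at about this speed however large the project becomes, and the build time will not vary much.

Webpack 5.x is said to bring a whole new level of compilation performance, and I look forward to using it. That said, it leaves me a little wistful: front-end technologies, tools, frameworks, and development ideas change so quickly. Take Webpack itself: once Webpack 5.x becomes widespread, these Webpack 4.x optimizations may well be outdated, and we will have to learn all over again.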