@ [TOC]

Stage 1 Split

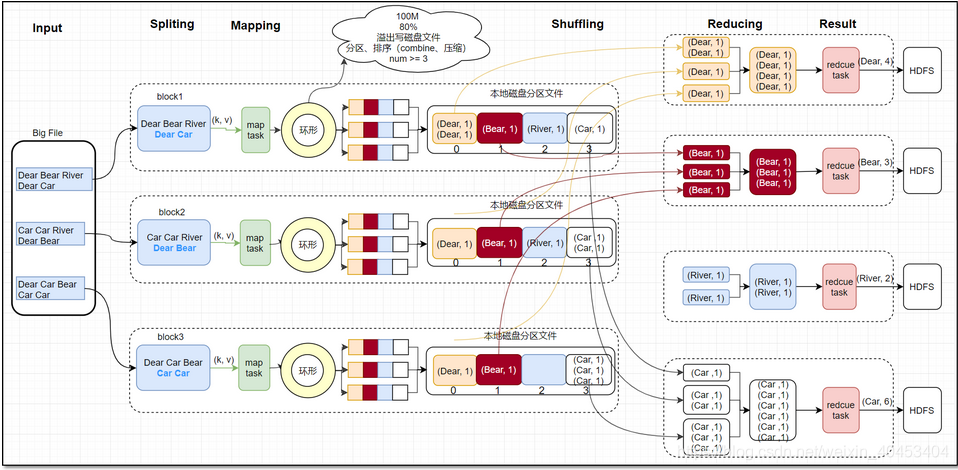

First, the received file input hdf, in mapreduce map task before the beginning of the file is cut into a plurality of portions of the specified size, each part is called a split, and the default block size is equal to the size of the split, are 128MB . the size of the split minSize, maxSize, blocksize decisions to wordcount code as an example, the following is the main () method

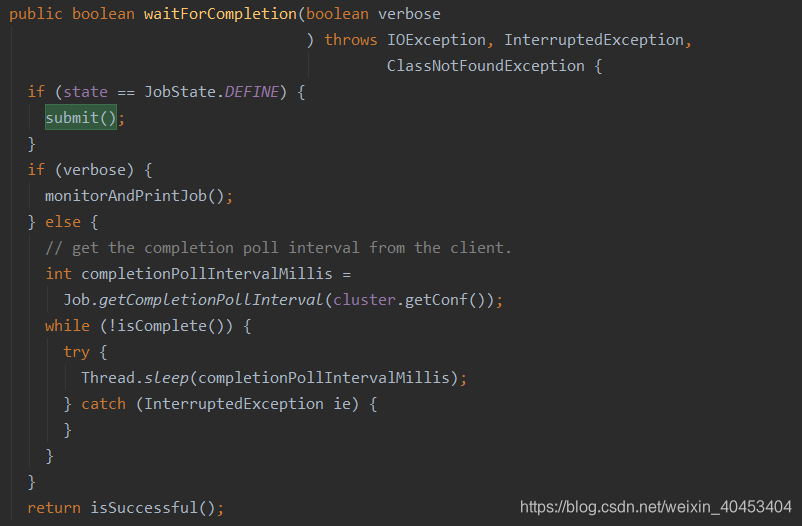

into the waitForCompletion(true)method proceeds submit()methods

find return submitter .submitJobInternal(Job.this, cluster);

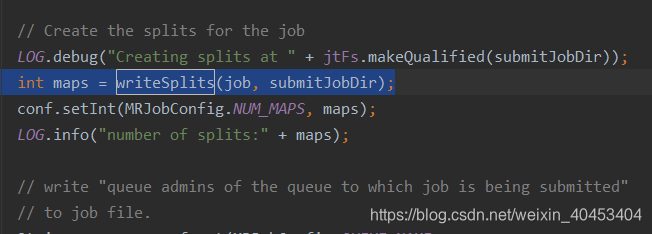

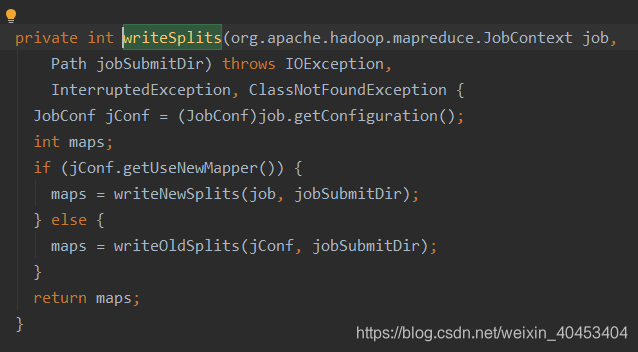

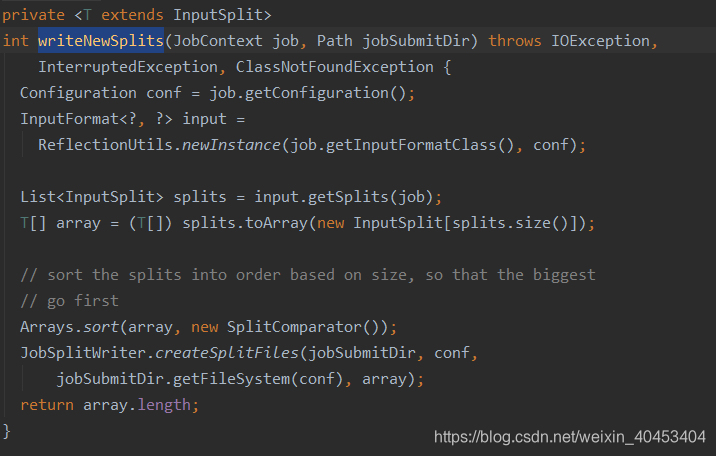

entering, find int maps = writeSplits(job, submitJobDir);

into the writeNewSplits()method

enters writeNewSplits () method, it can be seen that the method first obtains information array splits , sorting, priority will be dealing with large files. The final returns the number of mapper. Which it is divided into two parts: determining the number of slices and slice written information. Determine the number of tasks referred FileInputFormat slice of getSplits (job) is completed, the task to write slice information referred JobSplitWriter.createSplitFiles (jobSubmitDir, conf, jobSubmitDir.getFileSystem ( conf), array) method, which will slice information and SplitMetaInfo They are written to HDFS, the return array.length;return of the number of tasks that map, the map is the default number:default_num = total_size / block_size;

The actual number of mapper is to enter the number of slices, while the number of slices in turn is determined by the input format, the default is TextInputFormat, the class is a subclass of FileInputFormat. Determine the number of tasks referred FileInputFormat slice of getSplits (job) is completed. Inherit from abstract class InputFormat FileInputFormat, MapReduce class defines the input job specification, wherein the abstract method List <InputSplit> getSplits (JobContext context) defines how the divided input InputSplit, different inputs have different logical partition, and the partition each different InputSplit referred obtained mapper process, so the return value of the method determines the number of mapper.

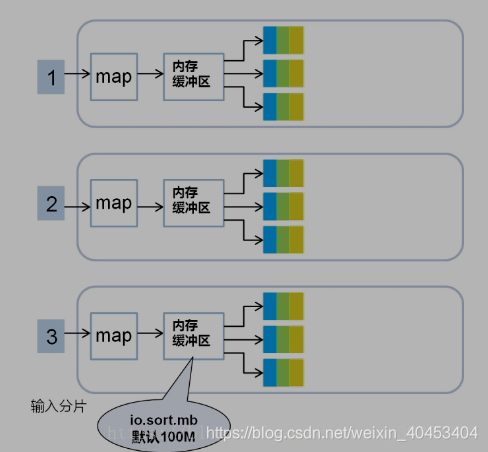

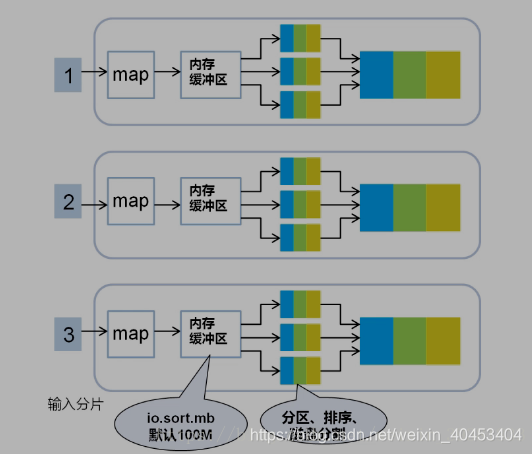

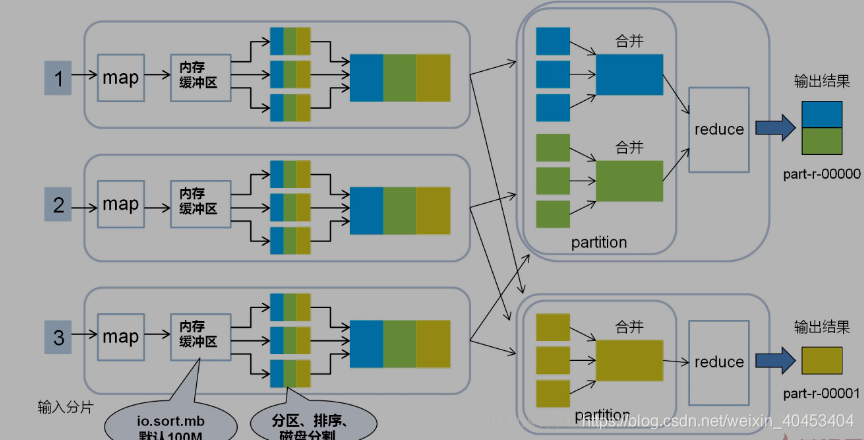

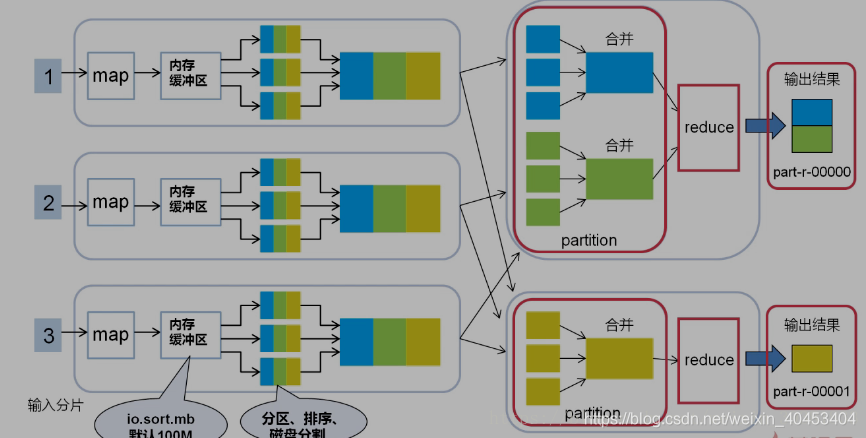

Stage 2 Map

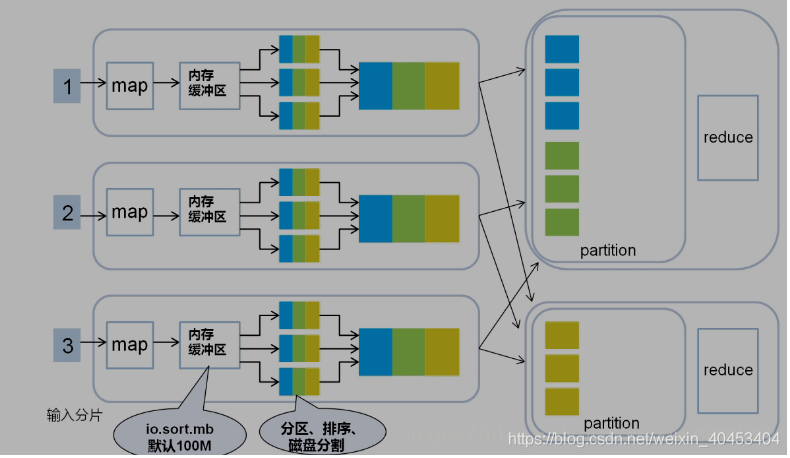

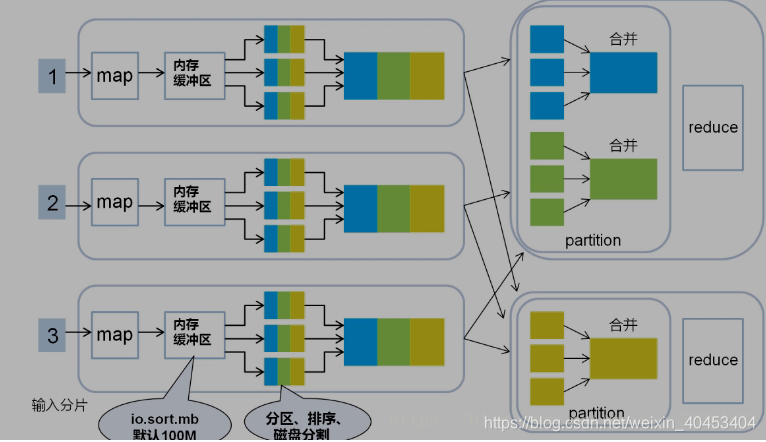

每个map task都有一个内存缓冲区, map的输出结果先写到内存中的环形缓冲区,缓冲区为100M,不断的向缓冲区力写数据,当达到80M时,需要将缓冲区中的数据以一个临时文件的方式存到磁盘,当整个map task结束后再对磁盘中这个map task所产生的所有临时文件做合并,生成最终的输出文件。最后,等待reduce task来拉取数据。当然,如果map task的结果不大,能够完全存储到内存缓冲区,且未达到内存缓冲区的阀值,那么就不会有写临时文件到磁盘的操作,也不会有后面的合并。在写入的过程中会进行分区、排序、combine操作。

环形缓冲区:是使用指针机制把内存中的地址首尾相接形成一个存储中间数据的缓存区域,默认100MB;80M阈值,20M缓冲区,是为了解决写入环形缓冲区数据的速度大于写出到spill文件的速度是数据的不丢失;Spill文件:spill文件是环形缓冲区到达阈值后写入到磁盘的单个文件.这些文件在map阶段计算结束时,会合成分好区的一个merge文件供给给reduce任务抓取;spill文件过小的时候,就不会浪费io资源合并merge;默认情况下3个以下spill文件不合并;对于在环形缓冲区中的数据,最终达不到80m但是数据已经计算完毕的情况,map任务将会调用flush将缓冲区中的数据强行写出spill文件。

经过map类处理后,输出到内存缓冲区(默认大小100M),超过一定大小后,文件溢写到磁盘上,按照key分类

按照key合并成大文件,减少网络开销

2.1分区

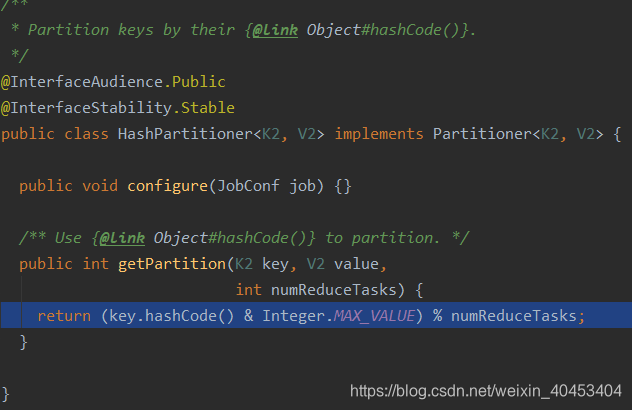

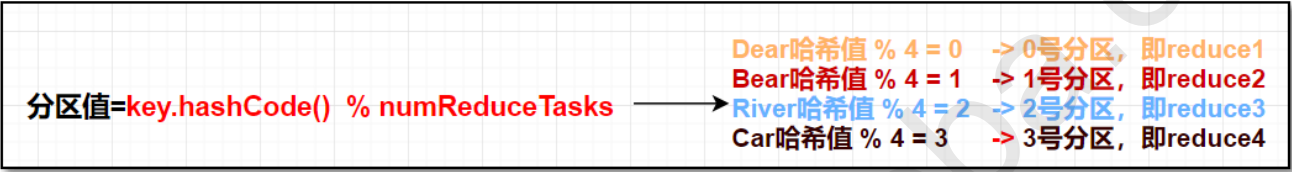

看一下MapReduce自带的分区器HashPartitioner

假设有听个reduce任务,则分区的计算如下:

2.2排序

在对map结果进行分区之后,对于落在相同的分区中的键值对,要进行排序。

3 Shuffle阶段

Shuffle过程是MapReduce的核心,描述着数据从map task输出到reduce task输入的这段过程。reducetask根据自己的分区号,去各个maptask分区机器上取相应的结果分区数据,reducetask会将这些文件再进行合并(归并排序)。

所有相同key的数据汇集到一个partition

将相同的key value汇聚到一起, 但不计算

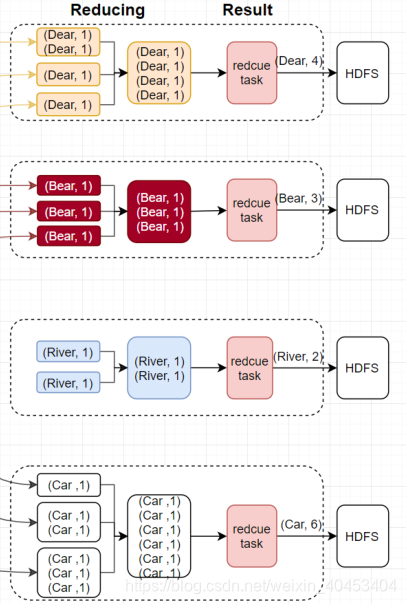

4 Reduce阶段

reduce阶段分三个步骤:

抓取,合并,排序

1 reduce 任务会创建并行的抓取线程(fetcher)负责从完成的map任务中获取结果文件,是否完成是通过rpc心跳监听,通过http协议抓取;默认是5个抓取线程,可调,为了是整体并行,在map任务量大,分区多的时候,抓取线程调大;

2 抓取过来的数据会先保存在内存中,如果内存过大也溢出,不可见,不可调,但是单位是每个merge文件,不会切分数据;每个merge文件都会被封装成一个segment的对象,这个对象控制着这个merge文件的读取记录操作,有两种情况出现:在内存中有merge数据 •在溢写之后存到磁盘上的数据 •通过构造函数的区分,来分别创建对应的segment对象

3 这种segment对象会放到一个内存队列中MergerQueue,对内存和磁盘上的数据分别进行合并,内存中的merge对应的segment直接合并,磁盘中的合并与一个叫做合并因子的factor有关(默认是10)

4 排序问题,MergerQueue继承轮换排序的接口,每一个segment 是排好序的,而且按照key的值大小逻辑(和真的大小没关系);每一个segment的第一个key都是逻辑最小,而所有的segment的排序是按照第一个key大小排序的,最小的在前面,这种逻辑总能保证第一个segment的第一个key值是所有key的逻辑最小文件合并之后,最终交给reduce函数计算的,是MergeQueue队列,每次计算的提取数据逻辑都是提取第一个segment的第一个key和value数据,一旦segment被调用了提取key的方法,MergeQueue队列将会整体重新按照最小key对segment排序,最终形成整体有序的计算结果;

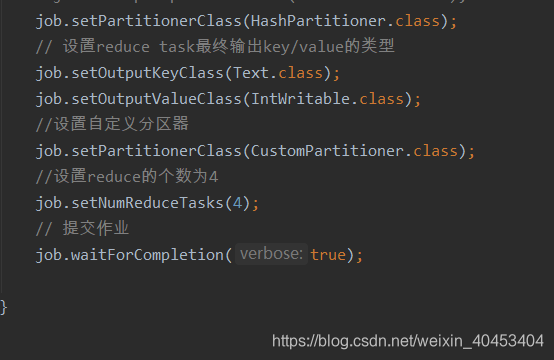

Partition, Reduce number, equal to the output file

number Reduce task

in the case of a large amount of data, if only one set Reduce tasks, the other nodes will be idle, so that the efficiency under Reduce arranged a large value (max: 72) . Reduce the number of tasks method of adjusting the number of nodes Reduce tasks of a number of tasks that are not as Map by multiple factors

通过参数调节mapred.reduce.tasks(在配置文件中)

在代码中调用job.setNumReduceTasks(int n)方法(在code中)