From the "Pure Smile" public account, article [12]: Follow me in hand-drawing a microservices architecture!

What are microservices?

Microservices architecture is an architectural pattern, or architectural style, that advocates splitting a single application into a set of small services. Each service runs in its own separate process, and the services coordinate and cooperate with each other to deliver the final value to users.

The services communicate with each other through lightweight mechanisms (usually an HTTP-based RESTful API). Each service is built around a specific business capability and can be deployed independently to production, production-like environments, and so on.

In addition, a unified, centralized service management mechanism should be avoided as far as possible. Each service should be built with the language and tools that suit its business context, and at most a very lightweight centralized management layer should coordinate the services. Services can be written in different languages and can use different data stores.

According to Martin Fowler's description, I summarized the following points:

① Small services

There is no specific standard or norm for how small a service should be, but on the whole it must be small.

② Independent processes

Each service runs independently: I might run one service in a Tomcat container and another in Jetty. Because each service is its own process, we can keep scaling the whole system out process by process.

③ Communication

Past protocols were heavyweight, such as ESB and SOAP. Lightweight communication means that services call each other over mechanisms that are lighter than in the past, with the intelligence living in the services themselves. This is the so-called "smart endpoints and dumb pipes".

These endpoints are decoupled, and a complete business transaction is carried out by stringing microservices together, much as a Linux pipeline strings a series of commands together.

In the past, we usually had to consider all kinds of dependencies and the coupling they bring to the system. Microservices allow developers to focus more on developing business logic.
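As a rough illustration of a "dumb pipe", the sketch below exposes one small service over plain HTTP with JSON and calls it from what would be a second service. The service name, the `/price/<item>` path, and the data are all invented for this example, and the service runs in a thread here only to keep the sketch self-contained; in a real deployment it would be its own process.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """Hypothetical 'price' service: answers GET /price/<item> with JSON."""

    PRICES = {"book": 12.5, "pen": 1.2}  # illustrative in-memory data

    def do_GET(self):
        item = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/price/") and item in self.PRICES:
            status = 200
            body = json.dumps({"item": item, "price": self.PRICES[item]})
        else:
            status = 404
            body = json.dumps({"error": "not found"})
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # keep the example quiet


def start_price_service():
    """Start the service on a free local port and return the server object."""
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_price(host, port, item):
    """What a second service (say, 'order') would do: a plain HTTP GET."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/price/{item}")
        resp = conn.getresponse()
        data = json.loads(resp.read())
        if resp.status != 200:
            raise LookupError(data.get("error", "request failed"))
        return data["price"]
    finally:
        conn.close()
```

The pipe itself (HTTP) knows nothing about prices or orders; all the logic sits in the two endpoints.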

④ Deployment

Not only must the business logic be independent; deployment must be independent too. This also means that the traditional development process changes to some degree: developers must take on some responsibility for operations and maintenance.

⑤ Management

Traditional enterprise SOA services tend to be large, hard to manage, and highly coupled, and the cost of team development is relatively high.

Microservices allow each team to govern itself and make its own technology choices: different services may choose different technology stacks, according to their needs, to implement their business logic.

Advantages and disadvantages of microservices

Why microservices? For fun? No. Here are the advantages, taken from the more complete descriptions I found online:

- Each service is cohesive and small enough that its code is easy to understand, because it focuses on one specific business function or requirement.
- Development is simple and efficient: a service may single-mindedly do only one thing.
- A microservice can be developed independently by a small team of 2 to 5 developers.
- Microservices are loosely coupled. Each is a functionally meaningful service that is independent in both the development phase and the deployment phase.
- Microservices can be developed in different languages.
- They are easy to integrate with third parties, and allow automated deployment to be integrated easily and flexibly through continuous integration tools such as Jenkins, Hudson, and Bamboo.
- A microservice is easy for a single developer to understand, modify, and maintain, so a small team can focus on the results of its own work and deliver value without depending on cooperation with others. Microservices also let you take advantage of the latest technologies.
- A microservice contains only business logic code, not mixed with HTML, CSS, or other interface components.
- Each microservice has its own storage capability: it can have its own database, or it can share a unified database.

In general, the advantage of microservices is that, when facing large systems, they effectively reduce complexity and make the service architecture logically clearer.

But they also bring many problems, such as data consistency in a distributed environment, the complexity of testing, and the complexity of operations and maintenance.

Microservices come with all sorts of advantages and drawbacks, so what kind of organization are they suited for?

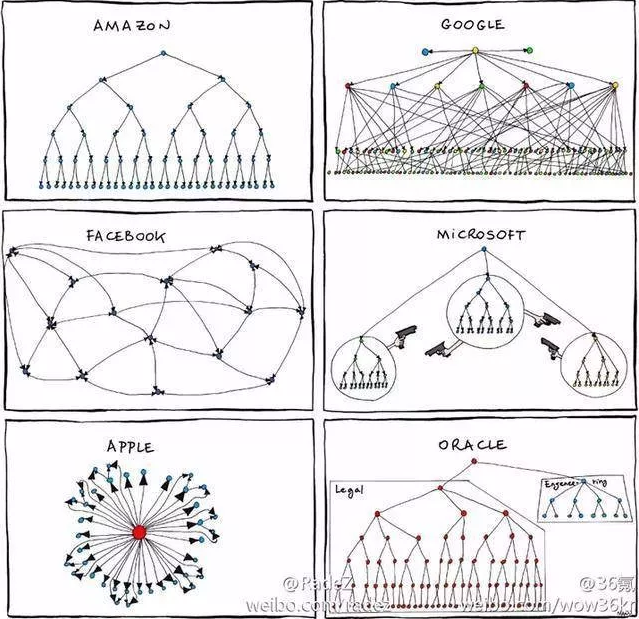

① Murphy's Law (system design) and Conway's Law (system partitioning)

Conway's Law was put forward more than fifty years ago, and it is a concept that bears directly on microservices. The most famous sentence in Conway's article is:

Organizations which design systems are constrained to produce designs which are copies of the communication structures of these organizations.

Roughly, this means: the systems an organization designs mirror the communication structure within and between the organization's units.

Look at the picture below, then think about the product designs of Apple and Microsoft, and this sentence becomes vivid.

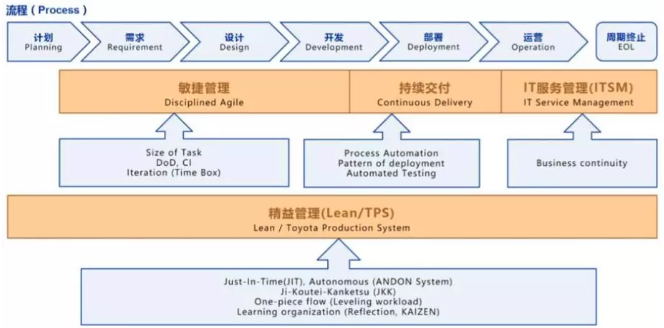

② Architecture evolution

Architectures evolve, and microservices are no exception: when a major technology company grows to a certain scale, its fully evolved technical architecture needs further management.

Traditional teams are process-oriented: when product has finished thinking it goes to planning, when planning is finished it goes to development, and so on, one step after another.

Our technology exists to serve the product; once something goes wrong in the process, tracing back to find the problem can be very time-consuming.

With a microservices architecture, the team organization needs to change into cross-functional teams: each team has product experts, planning experts, development specialists, and operations experts. Teams publish their functionality through APIs, and the platform uses that functionality to release products.

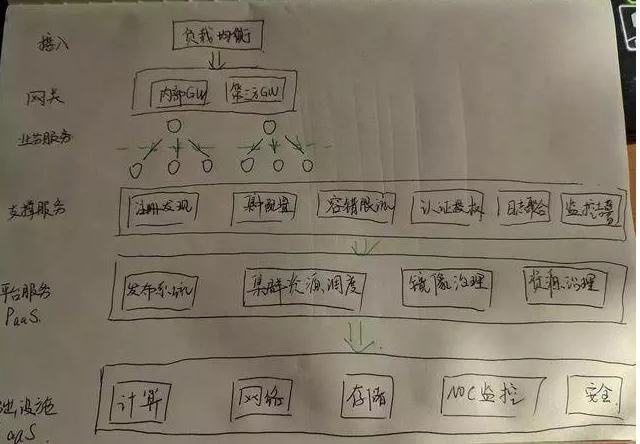

Microservices technology architecture system

Let me share with you the microservices technology architecture used by most companies:

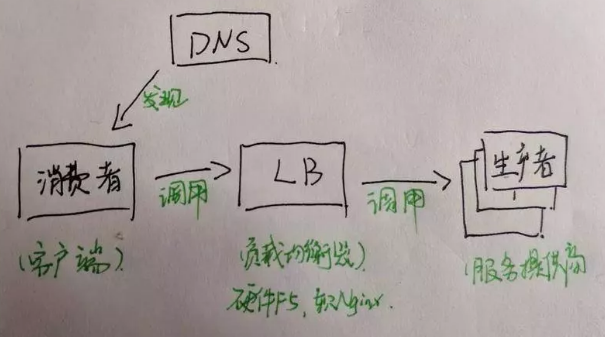

Service Discovery

Mainstream service discovery falls into three types:

First, after developers finish a program, they ask operations for a domain name, and the corresponding service can then be found through DNS.

The disadvantage is that, because the services themselves do no load balancing, everything depends on the load-balancing service, which may face significant performance problems.

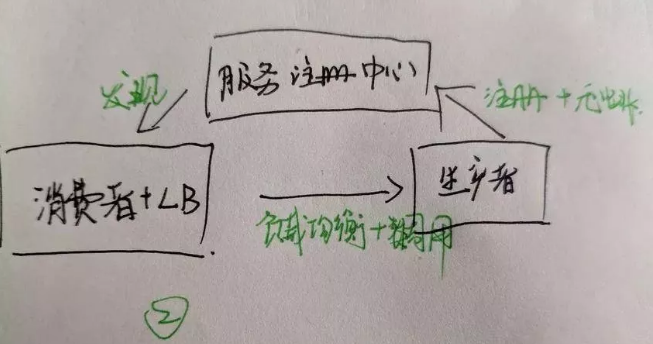

The second is the common practice today (the Zuul gateway is a reference). Each service registers itself with the registry through a built-in server-side function; service consumers keep polling the registry to discover the corresponding service and call it using built-in load balancing.

The disadvantage is poor support for multi-language environments: service discovery and load balancing have to be developed separately for each consumer client language. This approach is, of course, the one usually used in Spring Cloud.
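To make the second approach concrete, here is a minimal in-memory sketch of a registry plus a consumer-side client that refreshes its view of the registry and picks instances round-robin. The class names `ServiceRegistry` and `DiscoveryClient` are invented for this example and do not correspond to the API of any real registry or of Spring Cloud.

```python
import itertools


class ServiceRegistry:
    """In-memory stand-in for a registry (hypothetical, not a real product's API)."""

    def __init__(self):
        self._instances = {}

    def register(self, service, address):
        # Each service instance registers its own address on startup.
        self._instances.setdefault(service, []).append(address)

    def deregister(self, service, address):
        self._instances.get(service, []).remove(address)

    def lookup(self, service):
        return list(self._instances.get(service, []))


class DiscoveryClient:
    """Consumer-side client: polls the registry and load-balances round-robin."""

    def __init__(self, registry):
        self._registry = registry
        self._cache = {}
        self._counter = itertools.count()

    def refresh(self, service):
        # A real client would call this periodically (polling the registry).
        self._cache[service] = self._registry.lookup(service)

    def choose(self, service):
        instances = self._cache.get(service) or []
        if not instances:
            raise LookupError(f"no instances known for {service!r}")
        # Built-in load balancing: rotate through the known instances.
        return instances[next(self._counter) % len(instances)]
```

Because both discovery and load balancing live inside the consumer, every client language needs its own copy of this logic, which is the multi-language drawback described above.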

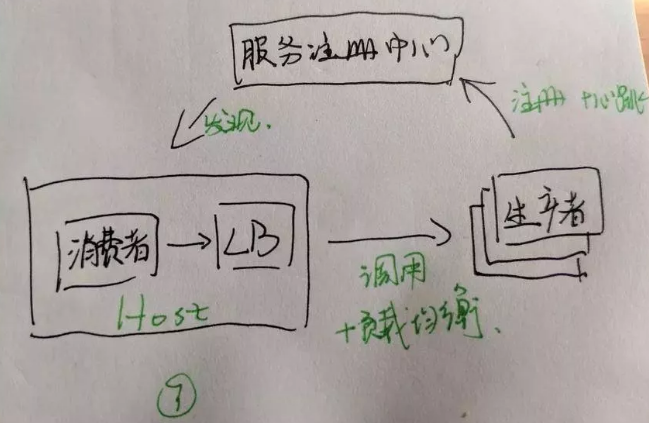

The third puts the client and the load balancer on the same host, rather than in the same process.

This approach improves on the shortcomings of the first and second approaches, but greatly increases the cost of operations and maintenance.

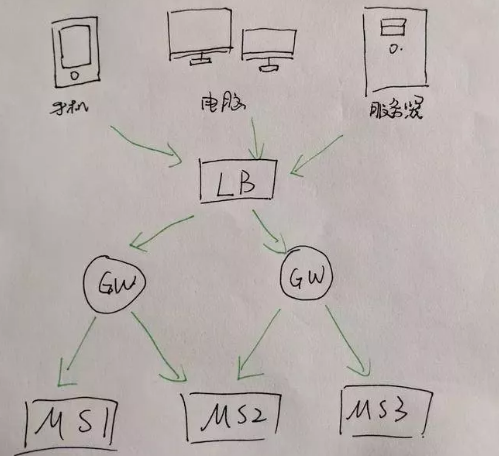

Gateway

What is a microservice gateway? We can relate it to real life. Every large company has its own campus, and that campus has many security guards. If an outsider wants to enter the company, they must first check in with a guard before getting in.

Relating real life to microservices, the meaning of the gateway is not hard to understand. The gateway does the following:

- Reverse routing: in many cases, companies do not want outsiders to see their internal structure, so the gateway performs reverse routing, that is, it translates external requests into calls to specific internal services.
- Security authentication: there is a lot of malicious access on the network, such as crawlers and hacker attacks, so the gateway maintains security functions.
- Rate limiting and circuit breaking: when a flood of requests overwhelms a service, the service shuts down and becomes unavailable. Rate limiting and circuit breaking effectively avoid such problems.
- Log monitoring: all outside requests pass through the gateway, so we can use the gateway to record log information.
- Gray release and blue-green deployment: ways of releasing with a smooth transition. A/B testing can be done on top of them.

That is, some users continue to use product feature A while other users start using product feature B. If users have no objections to B, the scope is gradually expanded until all users are migrated to B.
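The gradual migration from A to B can be sketched as a stable percentage split. The function names below are hypothetical, and hashing the user ID is just one possible way to keep each user on the same version between requests; it is an assumption of this sketch, not something the gateway products above prescribe.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_version(user_id: str, percent_on_b: int) -> str:
    """Send roughly `percent_on_b` percent of users to version B, the rest to A."""
    return "B" if bucket_for(user_id) < percent_on_b else "A"
```

Raising `percent_on_b` step by step widens the release: a user whose bucket is already below the threshold stays on B, so nobody is bounced back to A as the rollout grows.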

Architecture of the open source Zuul gateway:

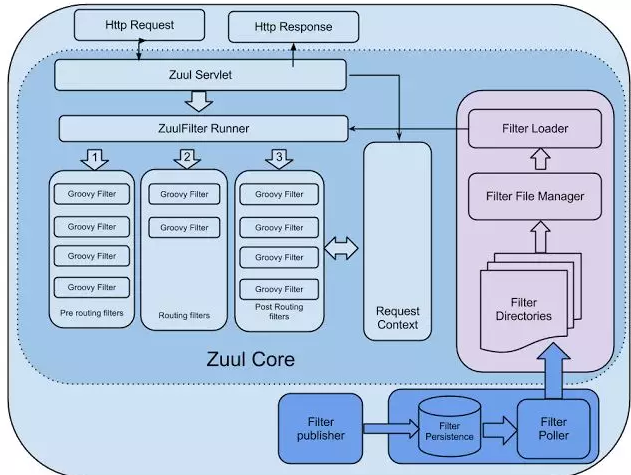

The core of the Zuul gateway is actually a Servlet: all requests pass through the Zuul Servlet to the ZuulFilter Runner, which then dispatches them to three kinds of filters.

First, the left part of the architecture diagram: pre-routing filters, routing filters, and post-routing filters, implemented in Groovy.

A request usually passes through the pre-routing filters first; custom Java wrapping logic is generally implemented here as well.

The routing filters find and call the corresponding microservice. When the call finishes, the response passes through the post-routing filters, where we can encapsulate logging and audit processing.

It can be said that the most important feature of the Zuul gateway is its three kinds of filters. The right part of the architecture diagram shows the custom filter loading mechanism designed for the Zuul gateway.

Inside the gateway, a producer-consumer model automatically reads filter scripts published to the Zuul gateway and loads them to run.
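The three-stage filter flow can be sketched in a few lines. This is not Zuul's actual API (Zuul filters are Java or Groovy classes); `RequestContext`, `FilterRunner`, the filter functions, and the route table are names made up for illustration, and error handling is left out.

```python
from dataclasses import dataclass, field


@dataclass
class RequestContext:
    path: str
    headers: dict = field(default_factory=dict)
    response: str = ""
    log: list = field(default_factory=list)


def auth_pre_filter(ctx):
    # "pre" stage: runs before routing, e.g. checks and request wrapping.
    if "Authorization" not in ctx.headers:
        raise PermissionError("missing Authorization header")


def routing_filter(ctx):
    # "route" stage: find the target service and call it (stubbed here).
    routes = {"/orders": "order-service", "/users": "user-service"}
    ctx.response = f"response from {routes[ctx.path]}"


def audit_post_filter(ctx):
    # "post" stage: runs after the call, e.g. logging and auditing.
    ctx.log.append(f"{ctx.path} -> {ctx.response}")


class FilterRunner:
    """Runs every registered filter, stage by stage, over one request."""

    STAGES = ("pre", "route", "post")

    def __init__(self):
        self._filters = {stage: [] for stage in self.STAGES}

    def add(self, stage, func):
        self._filters[stage].append(func)

    def handle(self, ctx):
        for stage in self.STAGES:
            for func in self._filters[stage]:
                func(ctx)
        return ctx
```

Filters are plain callables registered at runtime, which loosely mirrors the idea of loading filter scripts dynamically instead of compiling them into the gateway.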

Configuration Center

Previously, developers kept configuration files inside the development project, which carried many hidden dangers: for example, inconsistent configuration conventions and no way to trace who changed what.

Once large-scale configuration changes are needed, the changes take a very long time and cannot be traced to a person, which affects the entire product, with consequences we cannot afford.

That is why the configuration center came into being! Open source configuration centers today include Baidu's Disconf, Spring Cloud Config, and Apollo.

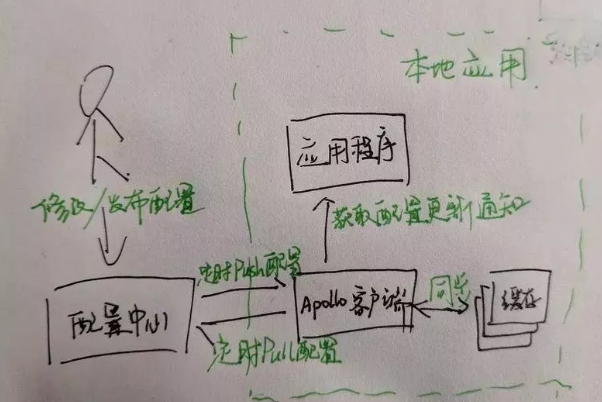

Today the focus is on a good-quality configuration center that is widely used: Ctrip's open source Apollo.

Apollo's configuration center is relatively large in scale. The local application has a corresponding configuration center client that can periodically synchronize configuration from the configuration center. If the configuration center goes down, the cached configuration is used.
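The sync-then-fall-back-to-cache behaviour can be sketched as follows. This is not Apollo's client API; `ConfigClient` and the `fetch` callable are assumptions made for illustration, and a real client would call `sync()` from a timer and would usually persist the cache to disk as well.

```python
class ConfigClient:
    """Hypothetical configuration client with a local cache."""

    def __init__(self, fetch):
        # `fetch` is any callable that returns the current config as a dict
        # and raises ConnectionError when the configuration center is down.
        self._fetch = fetch
        self._cache = {}

    def sync(self):
        """Try to pull the latest config; keep the cached copy on failure."""
        try:
            self._cache = dict(self._fetch())
            return True
        except ConnectionError:
            return False

    def get(self, key, default=None):
        # Reads are always served from the local cache.
        return self._cache.get(key, default)
```

Because reads never touch the network, an outage of the configuration center only means the application keeps running on the last configuration it managed to synchronize.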

Communication methods

As for communication, there are generally two kinds of remote invocation on the market. I compiled a table:

Monitoring and early warning

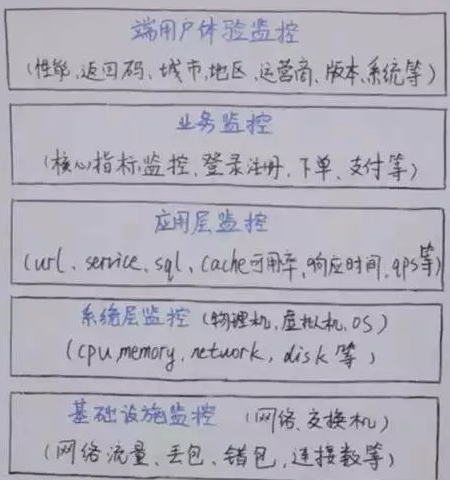

Monitoring and early warning are important for microservices; a reliable monitoring and early warning system is essential to operating them.

Monitoring is generally divided into the following levels:

From the infrastructure to the end user, there is monitoring at every layer, all-round and from multiple angles, and every level is very important.

Overall, microservice monitoring can be divided into five areas:

- Log monitoring
- Metrics monitoring
- Health checks
- Call chain checks
- Warning system

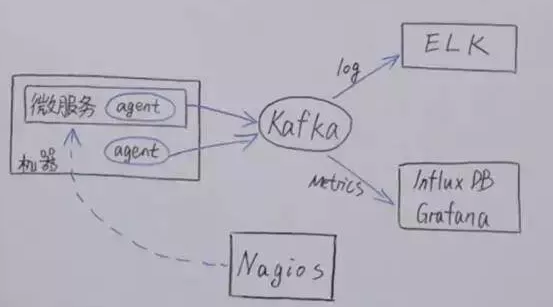

① Monitoring architecture

The chart below is the monitoring architecture used by most companies. Each service has an Agent that collects key information and sends it to a message queue (MQ) for decoupling.

Meanwhile, logs are sent to ELK and metrics are sent to the InfluxDB time series database. Tools like Nagios can periodically initiate health checks against the microservices through the Agent.

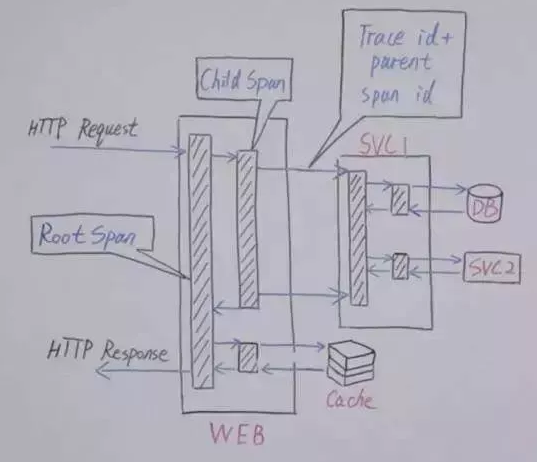

② Call chain monitoring (APM)

Many companies have call chain monitoring: Alibaba has EagleEye, Dianping has CAT. Most call chain monitoring (yes, I mean Zipkin) has an architecture like this:

When a request enters the web container, it passes through a Tracer, which creates and connects Spans (a Span models a potentially delayed unit of distributed work; the module also includes a toolkit for passing tracing context across the network, for example through HTTP headers).

Each Span has a context that contains trace identifiers, which place it at the correct position in the tree representing the distributed operation.

When we send all the Spans to the back end, the call chain of our services is generated dynamically.
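A toy version of this mechanism is sketched below. The `Tracer`, `Span`, and `build_tree` names are invented for the example and are not Zipkin's client API. The header names follow Zipkin's B3 propagation convention, but the sketch simplifies it: each service creates a child span whose parent is the span ID it received, and the "back end" is just a list.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str             # shared by every span of one request
    span_id: str              # unique to this unit of work
    parent_id: Optional[str]  # None for the root span
    name: str


class Tracer:
    def __init__(self):
        self.reported = []  # stands in for sending spans to a back end

    def start_span(self, name, headers=None):
        """Create a span, continuing the trace found in incoming headers."""
        headers = headers or {}
        trace_id = headers.get("X-B3-TraceId") or uuid.uuid4().hex
        parent_id = headers.get("X-B3-SpanId")
        span = Span(trace_id, uuid.uuid4().hex[:16], parent_id, name)
        self.reported.append(span)
        return span

    @staticmethod
    def inject(span):
        """Headers to attach to an outgoing HTTP call made within `span`."""
        return {"X-B3-TraceId": span.trace_id, "X-B3-SpanId": span.span_id}


def build_tree(spans):
    """Group span names by parent span ID to recover the call tree."""
    children = {}
    for span in spans:
        children.setdefault(span.parent_id, []).append(span.name)
    return children
```

For example, a gateway span followed by an order-service span that received the gateway's injected headers ends up as parent and child in `build_tree`, which is the call chain a tracing UI would draw.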

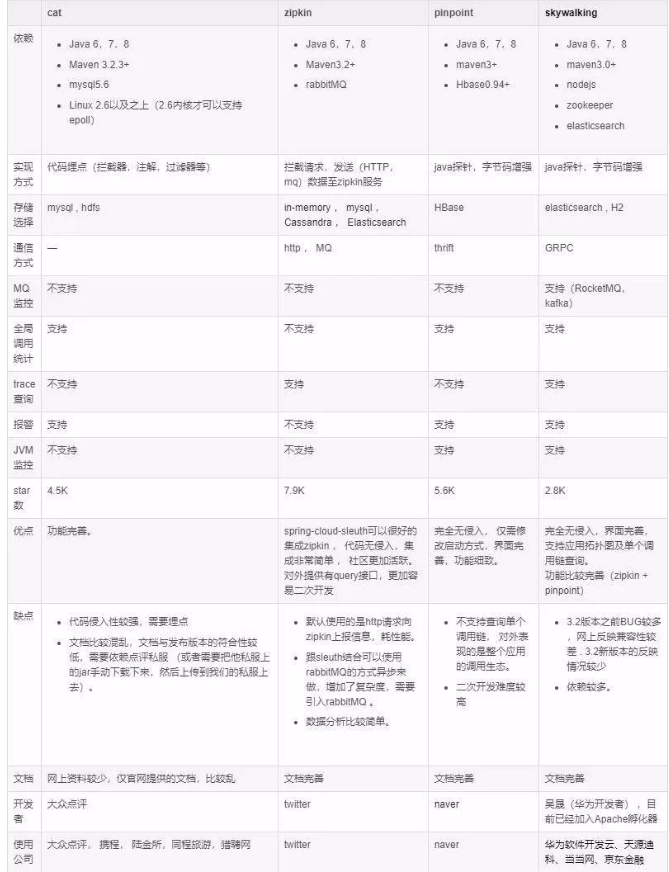

Here is a comparison of some of the call chain monitoring tools on the market:

Circuit breaking, isolation, rate limiting, and degradation

Facing huge bursts of traffic, large companies generally adopt a series of measures:

- Circuit breaking: the system automatically shuts off a failing service to keep the problem from escalating.
- Isolation: services are isolated from one another so that when one service goes down, the others remain accessible.
- Rate limiting: only a certain number of user requests is allowed per unit of time.
- Degradation: when the overall load of the microservices architecture exceeds a preset upper threshold, or upcoming traffic is expected to exceed it, unimportant or non-urgent services and tasks are delayed or suspended so that important or essential services keep working properly.
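Rate limiting in the sense used here (a fixed number of requests per unit of time) can be sketched with a fixed-window counter. This is only one of several common algorithms, chosen for brevity; the class name and the injectable `clock` parameter are assumptions of the sketch.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` requests in each window of `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self._clock = clock  # injectable so the limiter can be tested
        self._window_start = clock()
        self._count = 0

    def allow(self):
        now = self._clock()
        if now - self._window_start >= self.window:
            # A new window begins: reset the counter.
            self._window_start = now
            self._count = 0
        if self._count < self.limit:
            self._count += 1
            return True
        return False  # over the limit: reject or queue the request
```

A gateway would typically keep one limiter per client or per route and answer rejected requests with an error instead of forwarding them to the service.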

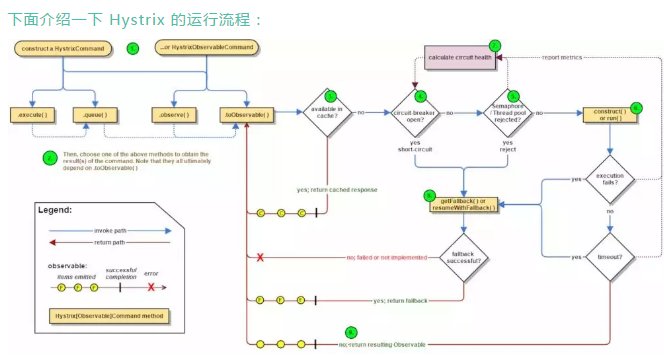

Here is how Hystrix runs:

Each microservice call is wrapped as a Hystrix Command (top left of the figure), which is executed synchronously, reactively, or asynchronously. Hystrix then checks whether the circuit breaker is open (reading the figure from left to right and top to bottom); if it is open, the call goes to the degraded Fallback.

If the circuit is closed but no thread resources are available or the queue is full, the limiting measure is taken (step 5 in the figure).

If those checks pass, the run() method is executed to obtain the Response; if an error occurs during this process, the call again goes to the degraded Fallback.

At the same time, the figure has a component at the top whose name ends in Health, which calculates whether the whole circuit is healthy; every step of the operation is recorded by it.
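The open/closed decision and the fallback path can be sketched as below. This is not Hystrix's API (Hystrix is a Java library built around command classes); the `CircuitBreaker` class, its thresholds, and the simple consecutive-failure count are assumptions made for illustration, whereas Hystrix itself works from rolling health statistics.

```python
import time


class CircuitBreaker:
    """Open the circuit after repeated failures and serve a fallback instead."""

    def __init__(self, failure_threshold=3, reset_timeout=5.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock  # injectable so the breaker can be tested
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def is_open(self):
        if self._opened_at is None:
            return False
        if self._clock() - self._opened_at >= self.reset_timeout:
            return False  # half-open: let one trial call through
        return True

    def call(self, run, fallback):
        if self.is_open():
            return fallback()  # short-circuit: do not touch the service
        try:
            result = run()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            return fallback()
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers get the fallback immediately and the failing service receives no traffic, which gives it time to recover.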

Containers and service orchestration engines

From physical machines to virtual machines, from virtual machines to containers; from physical clusters to OpenStack, from OpenStack to Kubernetes: technology is constantly changing, yet our understanding has not kept up.

Let us start with the container. First of all, it is a relatively independent runtime environment, which makes it somewhat similar to a virtual machine, though not as thorough as one.

A virtual machine packages virtual hardware, a kernel (that is, the operating system), and user space into a new virtual machine, and virtual machines run on physical devices by means of a "hypervisor".

Virtual machines depend on the hypervisor, which is typically installed on "bare metal" hardware; this is why the hypervisor is in some respects considered an operating system.

Once the hypervisor is installed, virtual machine instances can be allocated from the system's available computing resources, and each virtual machine gets its own operating system and workload (applications).

In short, a virtual machine first virtualizes a physical environment, then builds a complete operating system on it, then a runtime layer, and only then runs the application.

In a container environment, no guest operating system needs to be installed: the container layer (such as LXC or libcontainer) is installed directly on top of the host operating system (usually a Linux variant).

After the container layer is installed, container instances can be allocated from the system's available computing resources, and enterprise applications can be deployed in the containers.

However, every containerized application shares the same operating system (the single host OS). A container can be viewed as a virtual machine with a specific set of applications installed that uses the host kernel directly; with fewer abstraction layers than a virtual machine, it is more lightweight and starts faster.

Compared with virtual machines, containers use resources more efficiently because they do not need a separate operating system for each application: instances are smaller and faster to create and migrate. This means a single operating system can host more containers than virtual machines.

Cloud providers are very keen on container technology, because more container instances can be deployed on the same hardware.

In addition, containers are easy to migrate, but only to servers with a compatible operating system kernel, which limits the migration options.

Because containers do not package a kernel or virtual hardware the way virtual machines do, each container has its own isolated user space, so that multiple containers can run on the same host system.

We can see that the entire operating-system-level architecture is shared across containers; the only things that need to be built separately are the binaries and libraries.

Because of this, containers are extremely lightweight. The most popular container today is Docker.

① Container orchestration

In the past, virtual machines could be managed through the OpenStack cloud platform. How do we manage containers in the container era? For that we need to look at container orchestration engines.

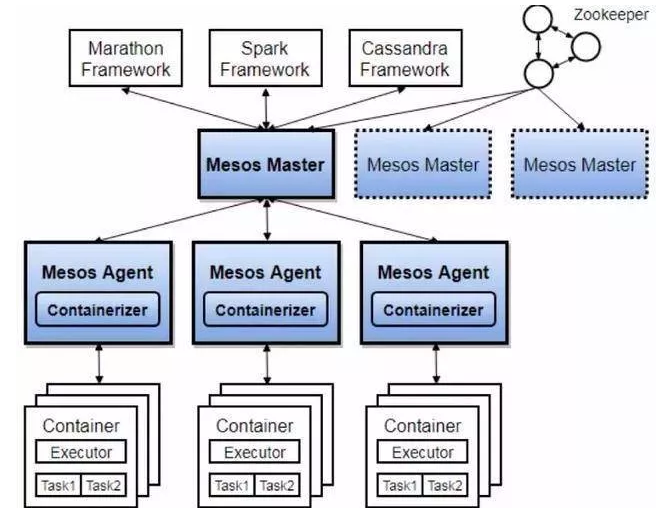

Apache Mesos: Mesos is based on a Master/Slave architecture in which frameworks decide how to use resources. The Master is responsible for managing the machines; Slaves periodically report machine status to the Master, and the Master passes that information on to the frameworks. The Master is highly available thanks to ZooKeeper (ZK), and a Leader exists among the Masters.

Here is the architecture diagram:

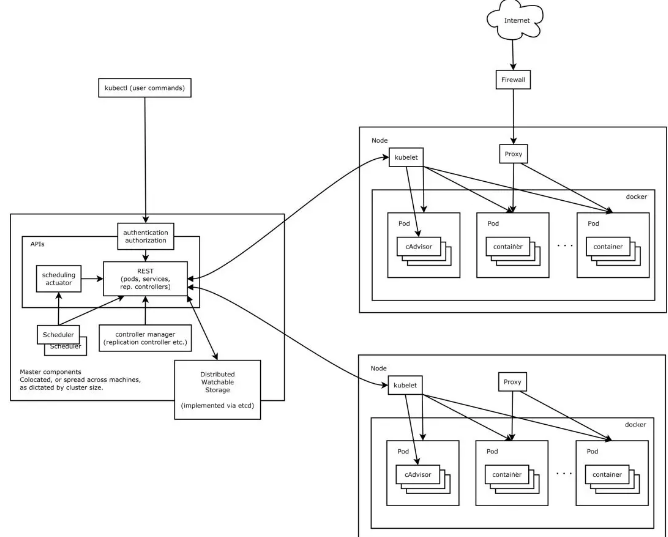

Kubernetes: Kubernetes is an open source container orchestration engine that has become very popular recently.

Kubernetes' design and function resemble a Linux-like layered architecture. Let us first look at the inside of each Kubernetes node: the kubelet manages the node's pods, each pod hosts one or more containers, and kube-proxy is responsible for network proxying and load balancing.

Outside the Kubernetes nodes is the corresponding control and management server, which is responsible for the unified management, scheduling, and assignment of what runs on each node.

② Service mesh