The previous two posts introduced Spark's yarn client and yarn cluster modes. This post continues with Spark's Standalone mode and Local mode.

The walkthrough below uses the Pi calculation example. The example comes in three versions, written in Scala, Python, and Java; this post uses the Java program, JavaSparkPi.

```java
package org.apache.spark.examples;

import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.sql.SparkSession;

import java.util.ArrayList;
import java.util.List;

/**
 * Computes an approximation to pi
 * Usage: JavaSparkPi [partitions]
 */
public final class JavaSparkPi {

  public static void main(String[] args) throws Exception {
    SparkSession spark = SparkSession
      .builder()
      .appName("JavaSparkPi")
      .getOrCreate();

    JavaSparkContext jsc = new JavaSparkContext(spark.sparkContext());

    int slices = (args.length == 1) ? Integer.parseInt(args[0]) : 2;
    int n = 100000 * slices;
    List<Integer> l = new ArrayList<>(n);
    for (int i = 0; i < n; i++) {
      l.add(i);
    }

    JavaRDD<Integer> dataSet = jsc.parallelize(l, slices);

    int count = dataSet.map(integer -> {
      double x = Math.random() * 2 - 1;
      double y = Math.random() * 2 - 1;
      return (x * x + y * y <= 1) ? 1 : 0;
    }).reduce((integer, integer2) -> integer + integer2);

    System.out.println("Pi is roughly " + 4.0 * count / n);

    spark.stop();
  }
}
```

The program logic is the same as in the Scala and Python programs from the previous posts, so I will not explain it again. The Scala, Python, and Java programs implement the same Pi calculation in 26, 30, and 43 lines respectively. Spark programs written in Scala or Python are clearly more concise than the Java version, so Scala or Python is recommended for writing Spark programs.
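For readers who skipped the earlier posts, here is a minimal plain-Java sketch of the same Monte Carlo idea with Spark removed. Points are sampled uniformly in the square [-1, 1] x [-1, 1]; the fraction that lands inside the unit circle approaches π/4, so `4.0 * count / n` approximates π. The class name `LocalPiSketch`, the method `estimatePi`, and the fixed seed are assumptions made for illustration and are not part of the Spark examples.

```java
import java.util.Random;

/** Plain-Java sketch of the Monte Carlo estimate that JavaSparkPi distributes (hypothetical class). */
final class LocalPiSketch {

    /** Samples n points in [-1, 1] x [-1, 1] and returns 4 * (points inside the unit circle) / n. */
    static double estimatePi(int n, long seed) {
        // A seeded Random is an assumption for repeatability; JavaSparkPi uses Math.random().
        Random random = new Random(seed);
        int count = 0;
        for (int i = 0; i < n; i++) {
            double x = random.nextDouble() * 2 - 1;
            double y = random.nextDouble() * 2 - 1;
            if (x * x + y * y <= 1) {
                count++;
            }
        }
        return 4.0 * count / n;
    }

    public static void main(String[] args) {
        // 200000 samples matches JavaSparkPi's default of 100000 * 2 slices.
        System.out.println("Pi is roughly " + estimatePi(200_000, 42L));
    }
}
```

In JavaSparkPi the loop body becomes the `map` function and the counter becomes the `reduce`, which lets Spark spread the samples across the partitions.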

Let's run this program in Standalone mode. Before doing so, you need to start Spark's built-in cluster service (run $SPARK_HOME/sbin/start-all.sh on the master). It is also best to start the Spark history server, so that you can still inspect the run from the Web UI after the program has finished. Once the cluster service is started, the Master daemon appears on the master host and a Worker daemon appears on each slave host. In Yarn mode, by contrast, you do not need to start the Spark cluster service and Spark only needs to be deployed on the client, whereas Standalone mode requires Spark to be deployed on every machine in the cluster.

Enter the following command:

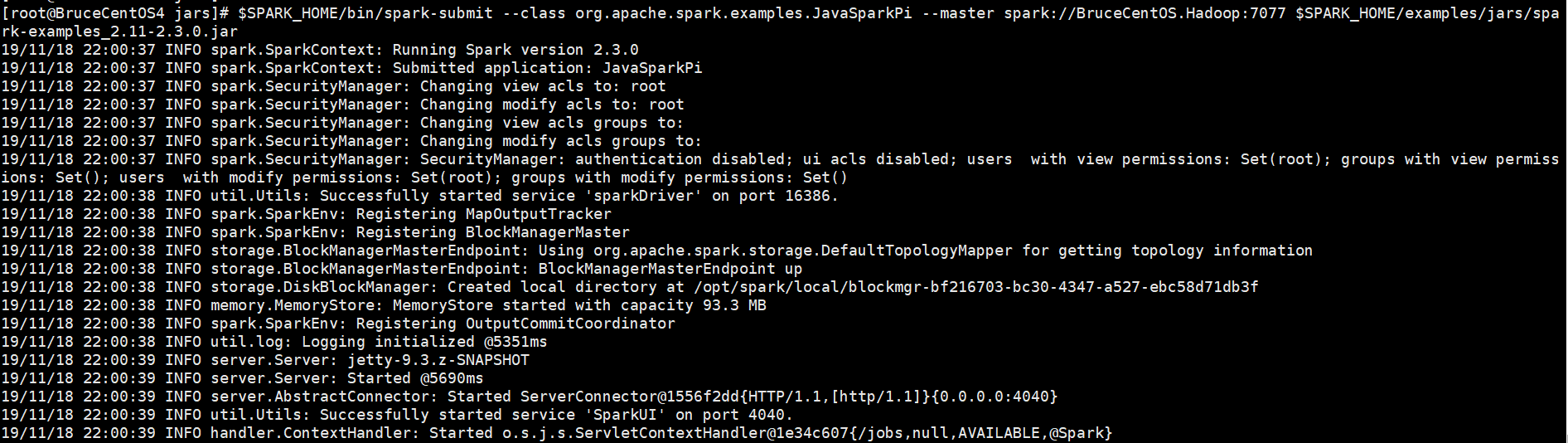

[root@BruceCentOS4 jars]# $SPARK_HOME/bin/spark-submit --class org.apache.spark.examples.JavaSparkPi --master spark://BruceCentOS.Hadoop:7077 $SPARK_HOME/examples/jars/spark-examples_2.11-2.3.0.jar

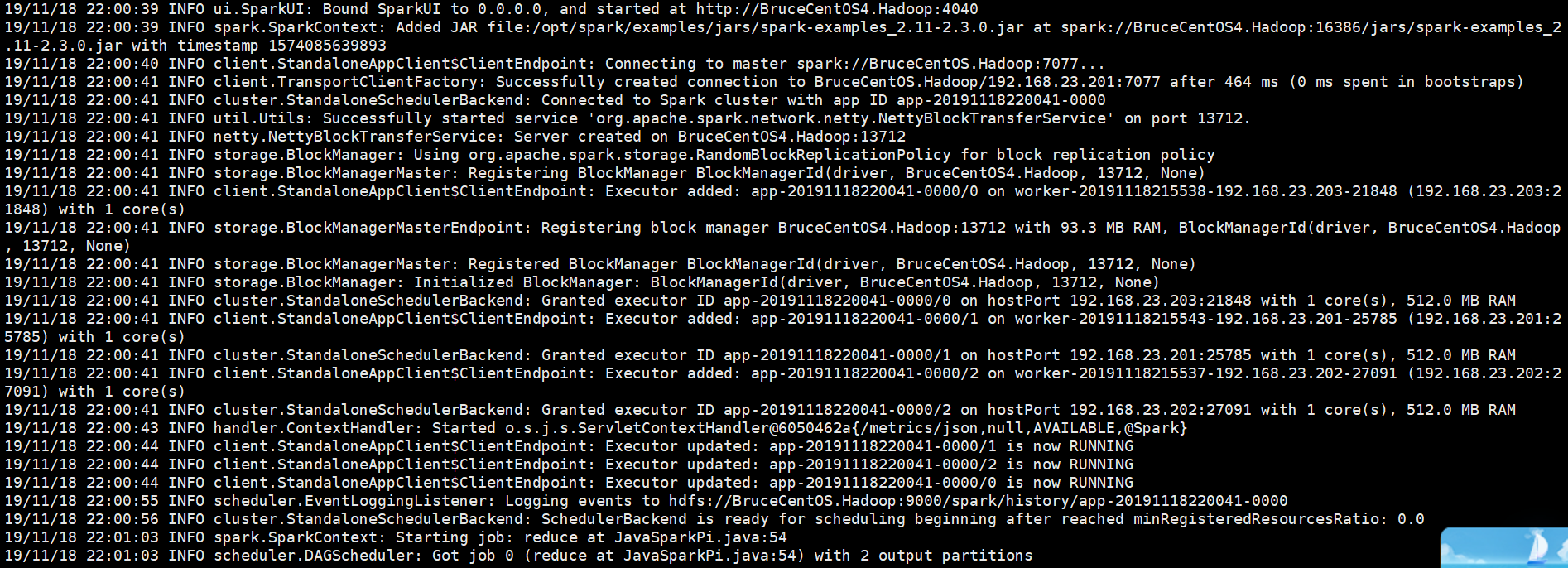

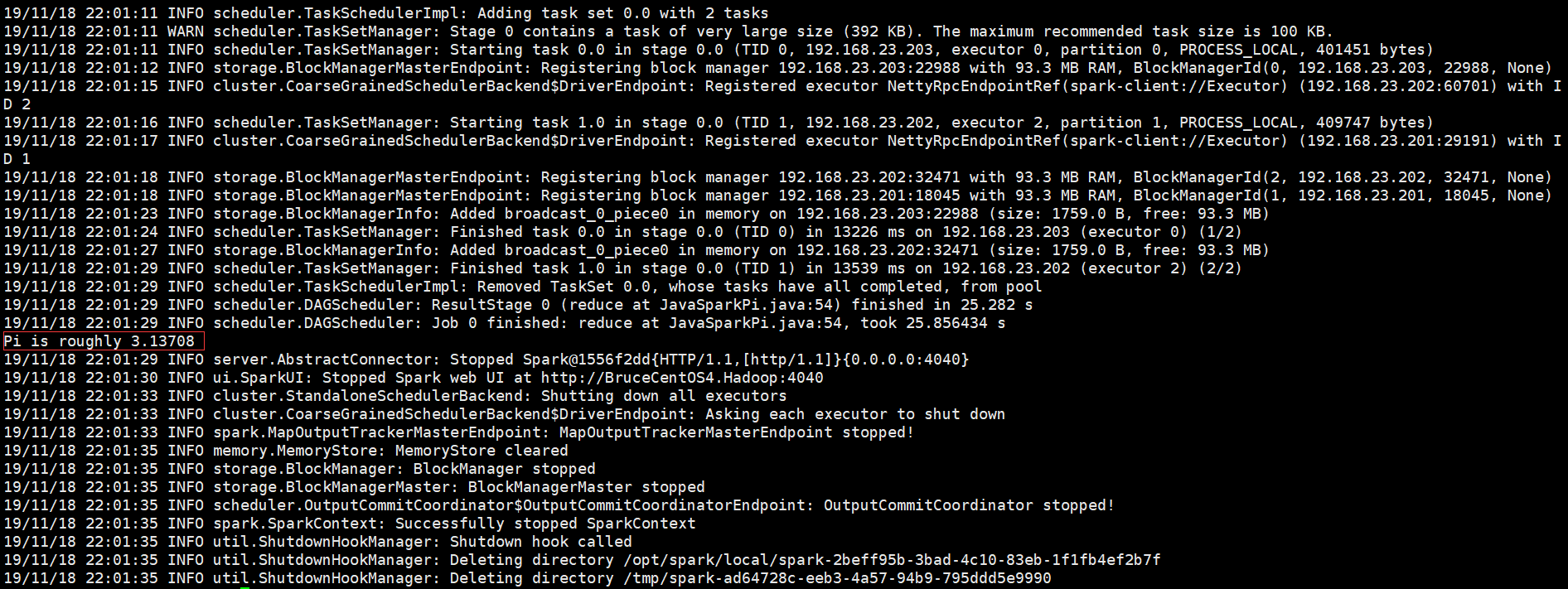

The following are partial screenshots of the program's output.

The beginning:

The middle:

The end:

As the output above shows, the Spark Driver runs inside the SparkSubmit process on the client BruceCentOS4, and the cluster is Spark's own built-in (Standalone) cluster.

Executor information on SparkUI:

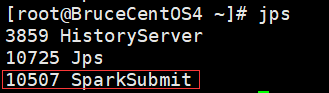

The client process on BruceCentOS4 (includes Spark Driver):

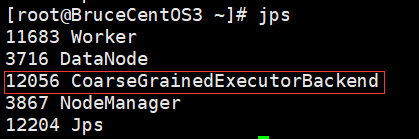

Executor process on BruceCentOS3:

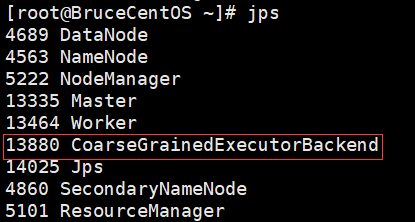

Executor process on BruceCentOS:

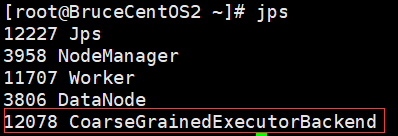

Executor process on BruceCentOS2:

The following describes in detail how Spark runs in Standalone mode.

Here is the flow chart:

- SparkContext connects to the Master, registers with it, and applies for resources (CPU cores and memory).

- The Master decides which Workers to allocate resources on, based on SparkContext's resource request and the information the Workers report in their periodic heartbeats. It acquires the resources on those Workers and then starts CoarseGrainedExecutorBackend.

- CoarseGrainedExecutorBackend registers with SparkContext.

- SparkContext sends the Application code to CoarseGrainedExecutorBackend. SparkContext also parses the Application code, builds the DAG, and submits it to the DAG Scheduler, which decomposes it into Stages (a Job is created whenever an Action operation is encountered; each Job contains one or more Stages, and Stage boundaries typically fall where external data is read and where a shuffle occurs). Each Stage (also called a TaskSet) is then submitted to the Task Scheduler, which assigns Tasks to the corresponding Workers, where they are finally handed to CoarseGrainedExecutorBackend for execution.

- CoarseGrainedExecutorBackend creates an Executor thread pool and starts running Tasks, reporting to SparkContext until the Tasks are completed.

- After all Tasks are completed, SparkContext unregisters from the Master and the resources are released.
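The Stage-splitting step in the flow above can be illustrated with a toy model. The sketch below is not Spark's DAG Scheduler; it assumes a purely linear lineage and simply starts a new Stage at every operation flagged as needing a shuffle. The names `StageSplitSketch`, `Op`, and `splitIntoStages` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of cutting a linear lineage into Stages at shuffle boundaries (not Spark's real scheduler). */
final class StageSplitSketch {

    /** One transformation in the lineage; shuffle == true marks a wide dependency. Hypothetical type. */
    static final class Op {
        final String name;
        final boolean shuffle;

        Op(String name, boolean shuffle) {
            this.name = name;
            this.shuffle = shuffle;
        }
    }

    /** Groups consecutive operations into Stages, opening a new Stage at each shuffle operation. */
    static List<List<String>> splitIntoStages(List<Op> lineage) {
        List<List<String>> stages = new ArrayList<>();
        List<String> current = new ArrayList<>();
        for (Op op : lineage) {
            if (op.shuffle && !current.isEmpty()) {
                stages.add(current);          // close the Stage that ends before the shuffle
                current = new ArrayList<>();
            }
            current.add(op.name);
        }
        if (!current.isEmpty()) {
            stages.add(current);
        }
        return stages;
    }

    public static void main(String[] args) {
        // A word-count-like lineage with one shuffle, chosen only for illustration.
        List<Op> lineage = Arrays.asList(
            new Op("parallelize", false),
            new Op("map", false),
            new Op("reduceByKey", true),
            new Op("mapValues", false));
        System.out.println(splitIntoStages(lineage));
    }
}
```

Under this model the JavaSparkPi lineage (`parallelize` followed by `map`, with `reduce` as the Action) contains no shuffle, so it maps to a single Stage.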

Finally, let's look at Local mode, which runs in a single-machine local environment and is used primarily for testing programs. All parts of the program, including the Client, Driver, and Executor, run inside the SparkSubmit process on the client. Local mode can be started in three ways.

# Run tasks in the Executor with a single thread

[root@BruceCentOS4 ~]# $SPARK_HOME/bin/spark-submit --class org.apache.spark.examples.JavaSparkPi --master local $SPARK_HOME/examples/jars/spark-examples_2.11-2.3.0.jar

# Run tasks in the Executor with N threads, here N = 2

[root@BruceCentOS4 ~]# $SPARK_HOME/bin/spark-submit --class org.apache.spark.examples.JavaSparkPi --master local[2] $SPARK_HOME/examples/jars/spark-examples_2.11-2.3.0.jar

# Run tasks in the Executor with * threads, where * stands for the number of CPU cores on the local machine

[root@BruceCentOS4 ~]# $SPARK_HOME/bin/spark-submit --class org.apache.spark.examples.JavaSparkPi --master local[*] $SPARK_HOME/examples/jars/spark-examples_2.11-2.3.0.jar
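To make the three `--master` values concrete, here is a small Java sketch that maps each form to the number of threads it implies. It is an illustration only, not Spark's own parsing code; the class `LocalMasterSketch` and method `threadCount` are hypothetical, and other master URL forms are deliberately rejected.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative mapping from a local master string to a thread count (not Spark's implementation). */
final class LocalMasterSketch {

    // Matches local[N] with a decimal N, or local[*].
    private static final Pattern LOCAL_N = Pattern.compile("local\\[(\\d+|\\*)\\]");

    /** Returns the number of task threads implied by "local", "local[N]", or "local[*]". */
    static int threadCount(String master) {
        if (master.equals("local")) {
            return 1;                                   // plain "local": one thread
        }
        Matcher matcher = LOCAL_N.matcher(master);
        if (!matcher.matches()) {
            throw new IllegalArgumentException("Not a supported local master: " + master);
        }
        String n = matcher.group(1);
        return n.equals("*")
            ? Runtime.getRuntime().availableProcessors() // "*": one thread per available core
            : Integer.parseInt(n);                       // "N": exactly N threads
    }

    public static void main(String[] args) {
        for (String master : new String[] {"local", "local[2]", "local[*]"}) {
            System.out.println(master + " -> " + threadCount(master) + " thread(s)");
        }
    }
}
```

The result for `local[*]` depends on the machine, which is why that form is convenient for quick tests on a developer workstation.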

That is my modest understanding of Spark's run modes (Standalone and Local). Parts of this post draw on the blog post "Spark (1): Basic Structure and Principles" by the blogger "thirsty for knowledge Stay Foolish" (with some details revised for Spark 2.3.0), for which I am grateful.