Deploying the ELK log analysis stack consumes a considerable amount of hardware. If you test the deployment in virtual machines, allocate generous resources to them; otherwise the elk container will not run properly. I assigned the docker host 5 GB of memory and four CPUs.

First, prepare the environment

I am using a single docker host (if you still need to install the docker service, refer to the blog post "Docker detailed installation configuration"). Its IP address is 192.168.20.6, and the elk container runs on it.
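The `docker run` command used later sets ES_HEAP_SIZE="3g" and LS_HEAP_SIZE="1g", and both heaps have to fit into the host's memory with room to spare. The sketch below checks this before going further. It assumes a Linux host that exposes /proc/meminfo. `to_mb` and `heaps_fit` are hypothetical helper names, and the 512 MiB reserve for the OS, Kibana and JVM overhead is an assumed figure, not a sebp/elk requirement.

```shell
#!/bin/sh
# Hypothetical helper: convert a heap size such as "3g" or "512m" to MiB.
to_mb() {
  case "$1" in
    *[gG]) echo $(( ${1%[gG]} * 1024 )) ;;
    *[mM]) echo $(( ${1%[mM]} )) ;;
    *) echo "unsupported size: $1" >&2; return 1 ;;
  esac
}

# Hypothetical helper: succeed if ES heap + Logstash heap + a reserve
# (assumed default 512 MiB) fits into the given total memory in MiB.
heaps_fit() {
  total_mb=$1
  es_mb=$(to_mb "$2") || return 1
  ls_mb=$(to_mb "$3") || return 1
  reserve_mb=${4:-512}
  [ $(( es_mb + ls_mb + reserve_mb )) -le "$total_mb" ]
}

# MemTotal in /proc/meminfo is reported in kB; convert it to MiB.
if [ -r /proc/meminfo ]; then
  total_mb=$(( $(awk '/^MemTotal:/ {print $2}' /proc/meminfo) / 1024 ))
  if heaps_fit "$total_mb" 3g 1g; then
    echo "OK: ${total_mb} MiB is enough for ES_HEAP_SIZE=3g and LS_HEAP_SIZE=1g"
  else
    echo "WARNING: ${total_mb} MiB may be too little for these heap sizes" >&2
  fi
fi
```

A VM given 5 GB typically reports a MemTotal slightly below 5120 MiB, which is why the reserve is kept modest here.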

Second, configure the docker host and run the elk container

[root@docker01 ~]# echo "vm.max_map_count = 655360" >> /etc/sysctl.conf

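# raise the kernel's virtual memory map limit (vm.max_map_count)

[root@docker01 ~]# sysctl -p # reload the kernel parameters

vm.max_map_count = 655360 # if the container does not run properly, raise this value as needed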
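This parameter matters because Elasticsearch's bootstrap checks (in the 7.x releases this image shipped at the time) reject a vm.max_map_count below 262144 when Elasticsearch listens on a non-loopback address. The sketch below reads the running value and compares it with that floor. `map_count_ok` is a hypothetical helper, and the script only prints a suggestion rather than changing anything.

```shell
#!/bin/sh
# Minimum vm.max_map_count required by Elasticsearch 7.x bootstrap checks.
ES_MIN_MAP_COUNT=262144

# Succeed if the given value (default: the running kernel's value) is high enough.
map_count_ok() {
  value=${1:-$(cat /proc/sys/vm/max_map_count 2>/dev/null)}
  [ -n "$value" ] && [ "$value" -ge "$ES_MIN_MAP_COUNT" ]
}

if [ -r /proc/sys/vm/max_map_count ]; then
  if map_count_ok; then
    echo "vm.max_map_count is high enough for Elasticsearch"
  else
    echo "vm.max_map_count is too low; as root run: sysctl -w vm.max_map_count=655360" >&2
  fi
fi
```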

[root@docker01 ~]# docker pull sebp/elk # the elk image is larger than 2 GB, so pull it locally before running the container

[root@docker01 ~]# docker run -itd -p 5601:5601 -p 9200:9200 -p 5044:5044 -e ES_HEAP_SIZE="3g" -e LS_HEAP_SIZE="1g" --name elk sebp/elk

# run the elk container from the sebp/elk image

# -e ES_HEAP_SIZE="3g": limits the memory (heap) used by Elasticsearch

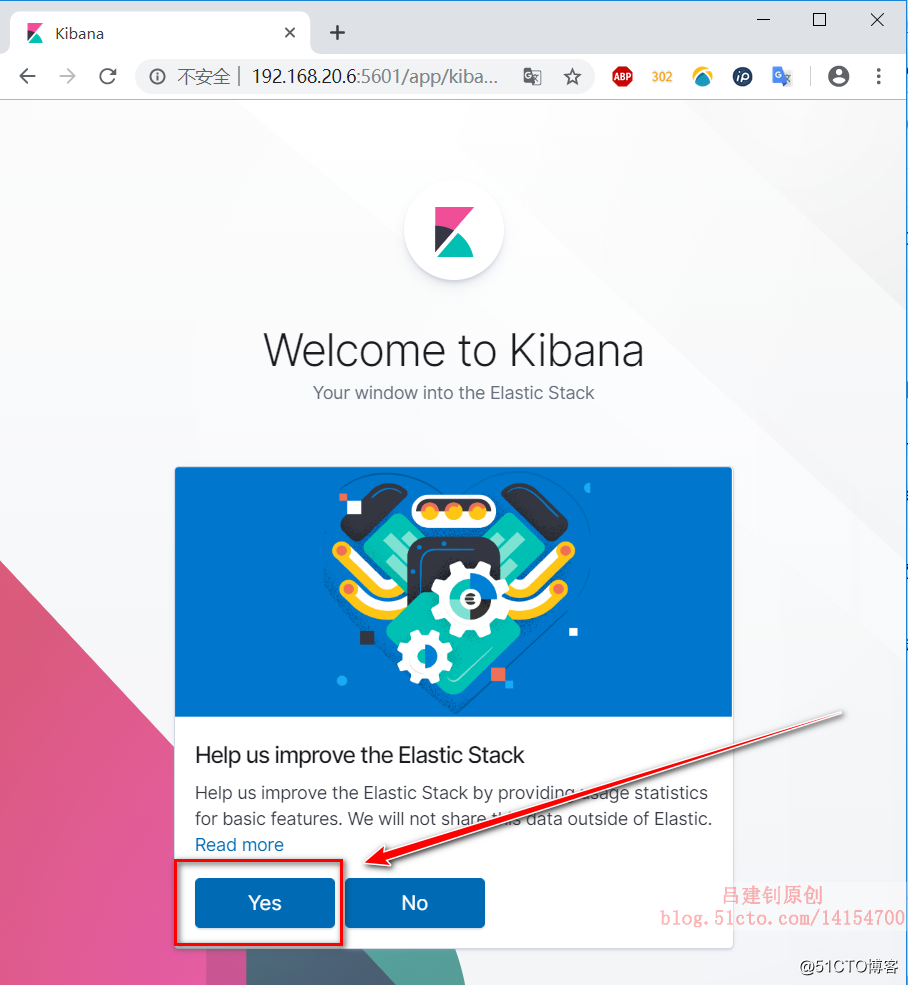

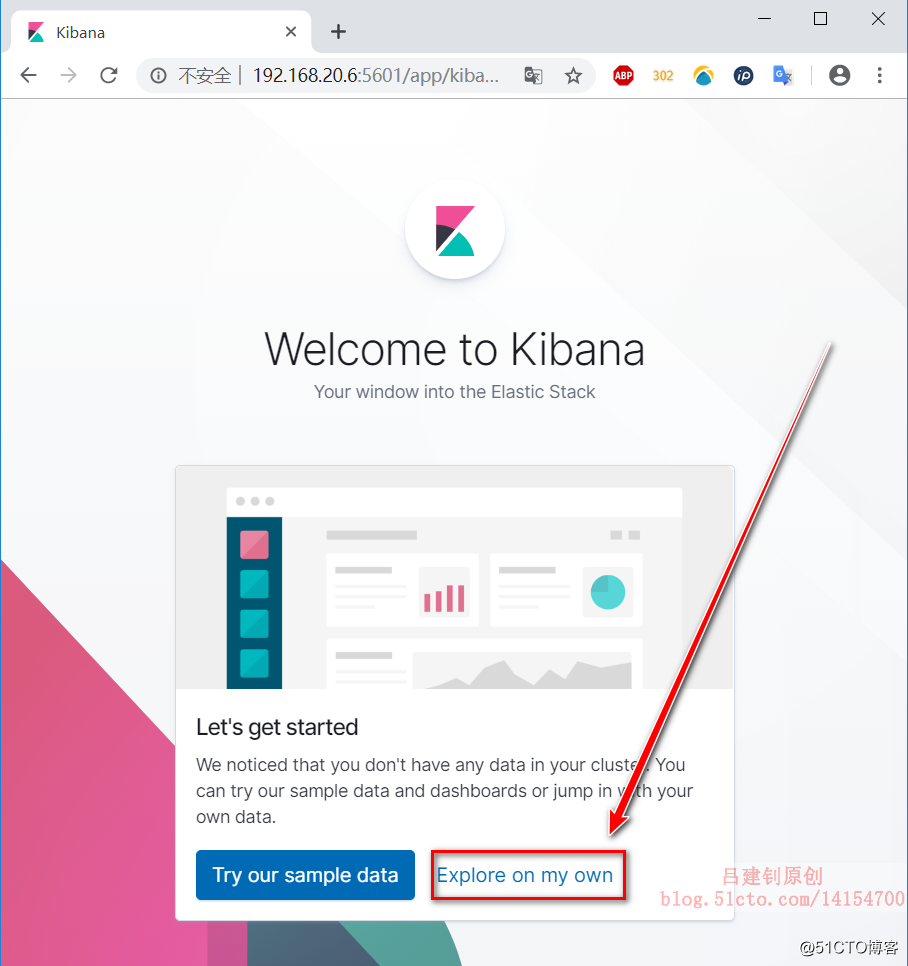

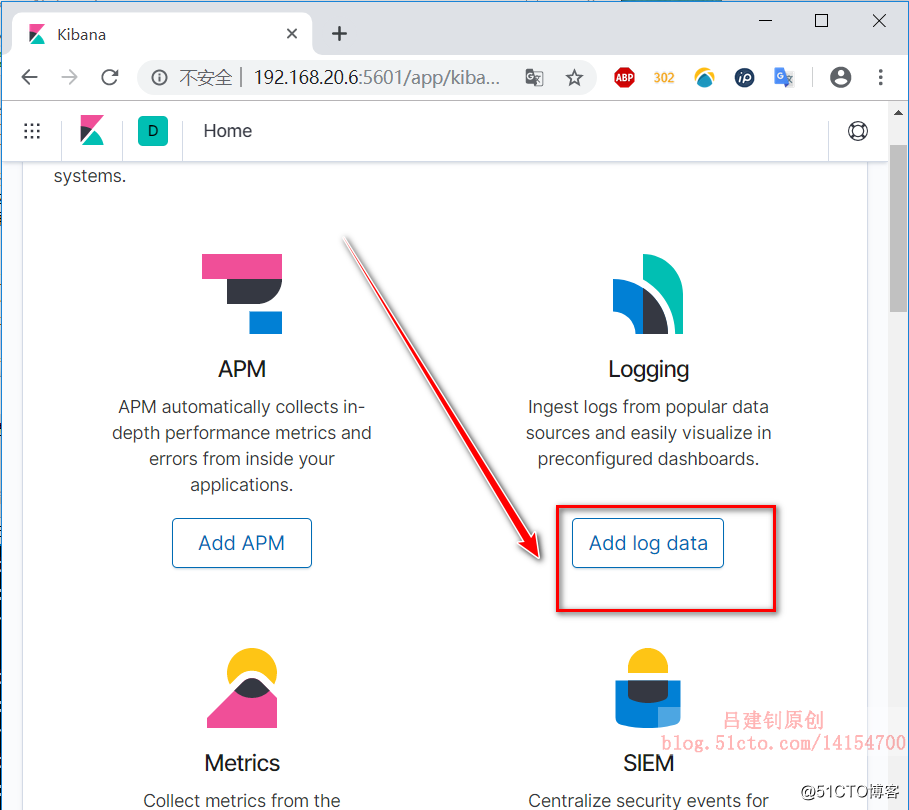

# -e LS_HEAP_SIZE="1g": limits the memory (heap) used by Logstash

At this point, you can open port 5601 on the docker host in a browser and you will see the pages below (just follow the steps marked in the screenshots):

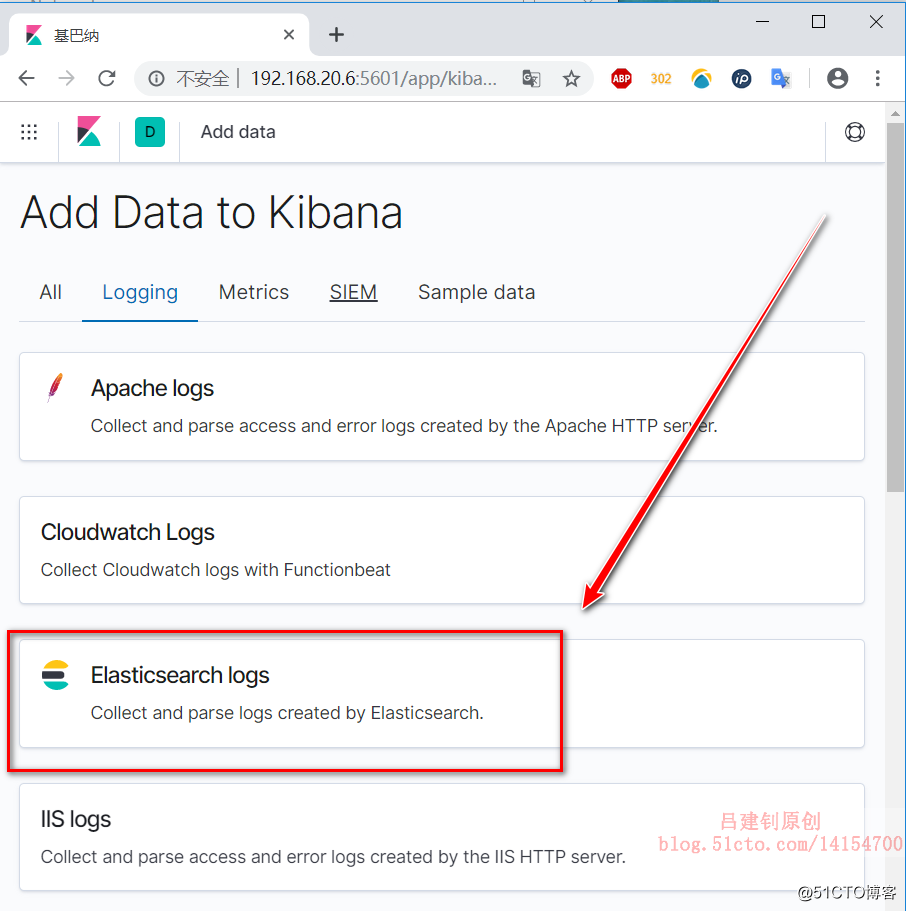

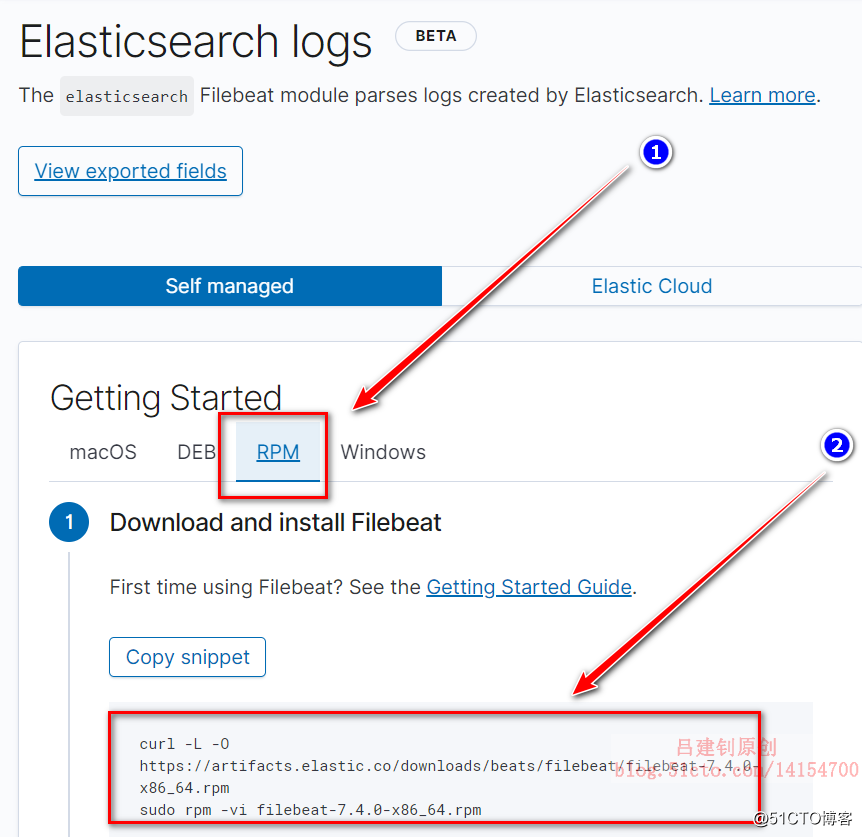

When you reach the page shown below (make sure to select the RPM tab), run the commands it lists on the docker host.

Run the commands from that page as follows:

[root@docker01 ~]# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.4.0-x86_64.rpm

# download the rpm package

[root@docker01 ~]# rpm -ivh filebeat-7.4.0-x86_64.rpm # install the downloaded rpm package
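Before installing, you can verify the download. This sketch assumes Elastic publishes a `.sha512` file at the rpm's URL plus `.sha512`, as it does for its other downloads (worth confirming for this exact file). `verify_sha512` is a hypothetical helper wrapping `sha512sum -c`.

```shell
#!/bin/sh
# Hypothetical helper: check a downloaded file against "<file>.sha512",
# which holds "<hash>  <filename>" in sha512sum format. sha512sum -c resolves
# the filename relative to the current directory, hence the cd.
verify_sha512() {
  file=$1
  (cd "$(dirname "$file")" && sha512sum -c "$(basename "$file").sha512")
}

# Usage on the docker host (the .sha512 URL is an assumption):
# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.4.0-x86_64.rpm.sha512
# verify_sha512 filebeat-7.4.0-x86_64.rpm && rpm -ivh filebeat-7.4.0-x86_64.rpm
# rpm -q filebeat   # confirms the package is installed
```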

[root@docker01 ~]# vim /etc/filebeat/filebeat.yml

======== Filebeat inputs ==========

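filebeat.inputs: # edit the content under filebeat.inputs

enabled: true # change from false to true to enable this log input

paths: # edit the paths section and add the log paths to collect

- /var/log/messages # the system log file

- /var/lib/docker/containers/*/*.log # the path where all containers store their logs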

========== Kibana =============

host: "192.168.20.6:5601" # uncomment this line (under setup.kibana) and fill in Kibana's listening address and port

------------ Elasticsearch output ------------

hosts: ["192.168.20.6:9200"] # set to Elasticsearch's listening address and port (under output.elasticsearch)

# after making the changes above, save and exit
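The lines above live in different sections of filebeat.yml, and YAML nesting matters: `enabled` and `paths` belong to the `- type: log` item under `filebeat.inputs`, `host` belongs to `setup.kibana`, and `hosts` belongs to `output.elasticsearch`. The sketch below writes just those sections, with that nesting, to a scratch file so you can compare them with your edited /etc/filebeat/filebeat.yml. It does not modify the real file, and the scratch path is arbitrary.

```shell
#!/bin/sh
# Write a reference copy of the edited sections to a scratch directory.
ref_dir=$(mktemp -d)
ref="$ref_dir/filebeat-ref.yml"

cat > "$ref" <<'EOF'
filebeat.inputs:
- type: log
  # the stock file ships with "enabled: false"
  enabled: true
  paths:
    - /var/log/messages
    - /var/lib/docker/containers/*/*.log

setup.kibana:
  host: "192.168.20.6:5601"

output.elasticsearch:
  hosts: ["192.168.20.6:9200"]
EOF

echo "Reference fragment written to $ref"

# If filebeat is installed, validate the fragment on its own.
if command -v filebeat >/dev/null 2>&1; then
  filebeat test config -c "$ref"
fi
```

On the docker host, `filebeat test config` (without `-c`) checks the real /etc/filebeat/filebeat.yml, and `filebeat test output` checks that Elasticsearch at 192.168.20.6:9200 is reachable.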

[root@docker01 ~]# filebeat modules enable elasticsearch # enable the elasticsearch module

[root@docker01 ~]# filebeat setup # initialize filebeat; this takes a while

Index setup finished.

Loading dashboards (Kibana must be running and reachable)

Loaded dashboards

Loaded machine learning job configurations

Loaded Ingest pipelines

# initialization succeeded only if all of the messages above appear
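The success condition can also be checked mechanically instead of by eye. Save the output of `filebeat setup` and look for each message. A sketch; `setup_succeeded` is a hypothetical helper and the log path is arbitrary.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if a saved "filebeat setup" log contains
# every success message shown above (fixed-string matches).
setup_succeeded() {
  log=$1
  for msg in "Index setup finished." "Loaded dashboards" \
             "Loaded machine learning job configurations" "Loaded Ingest pipelines"; do
    if ! grep -qF "$msg" "$log"; then
      echo "missing: $msg" >&2
      return 1
    fi
  done
}

# Usage on the docker host:
# filebeat setup 2>&1 | tee /tmp/filebeat-setup.log
# setup_succeeded /tmp/filebeat-setup.log && echo "filebeat setup OK"
```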

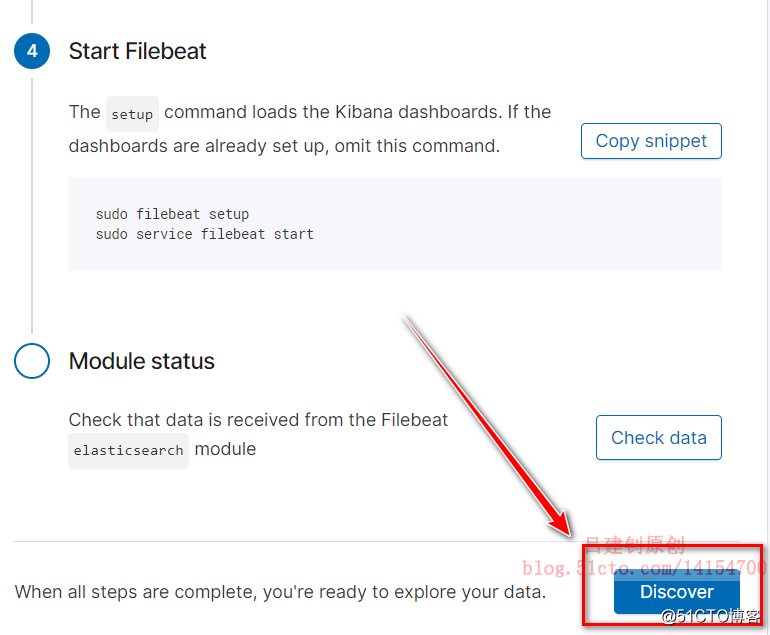

[root@docker01 ~]# service filebeat start # start filebeat

After the preceding steps, click "Discover" to view the collected logs, as follows:

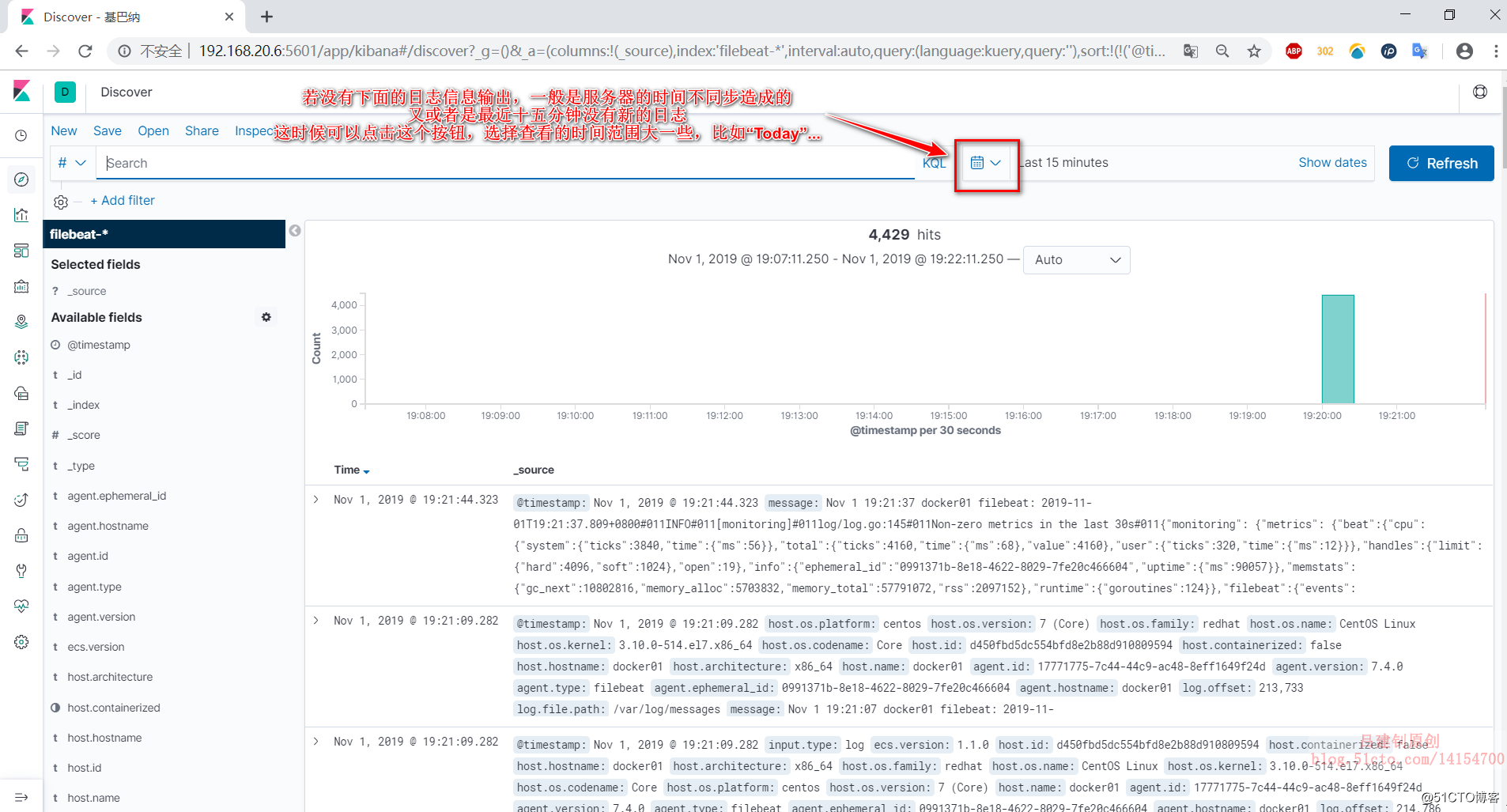

If Discover reports that there are no new logs in the last fifteen minutes, and the docker host's clock is synchronized, try reloading the systemd configuration and restarting the elk container, then check again.
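To confirm the clock condition before restarting, the sketch below asks `timedatectl` whether the host clock is synchronized. It assumes a systemd host. systemd 219 (CentOS 7) prints "NTP synchronized: yes", while newer releases print "System clock synchronized: yes", so the check matches the common suffix. `clock_synced` is a hypothetical helper.

```shell
#!/bin/sh
# Succeed if `timedatectl status` output on stdin reports a synchronized clock.
clock_synced() {
  grep -q 'synchronized: yes'
}

if command -v timedatectl >/dev/null 2>&1; then
  if timedatectl status 2>/dev/null | clock_synced; then
    echo "host clock is synchronized; restarting elk is a reasonable next step"
  else
    echo "host clock is NOT synchronized; fix time sync (e.g. chronyd or ntpd) first" >&2
  fi
fi
```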

[root@docker01 ~]# systemctl daemon-reload # reload the systemd configuration

[root@docker01 ~]# docker restart elk # restart the elk container

Once the page above loads normally, run a container that prints a line of text every ten seconds, then check whether Kibana collects that container's logs, as follows:

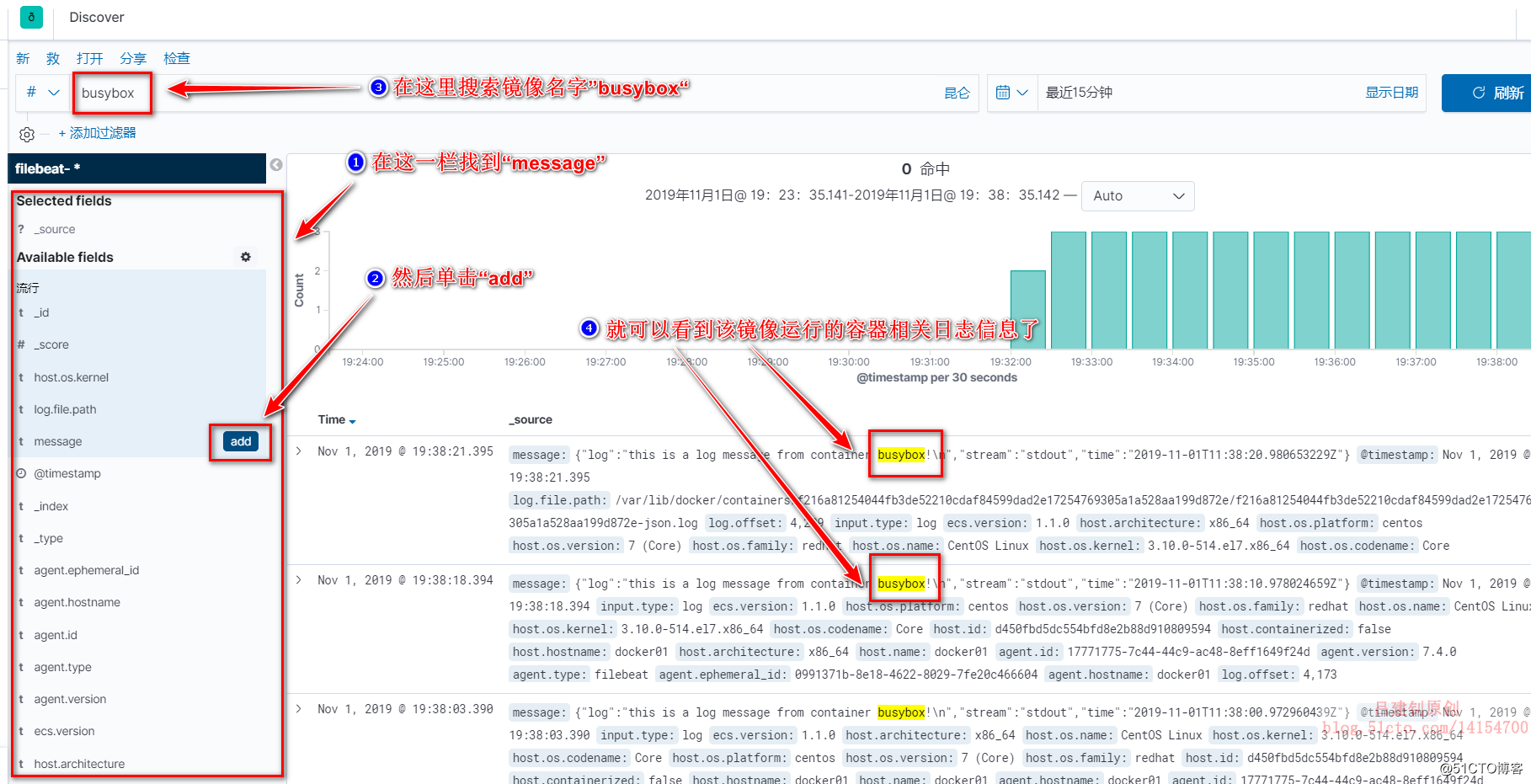

[root@docker01 ~]# docker run busybox sh -c 'while true;do echo "this is a log message from container busybox!";sleep 10;done'

# run this container; it prints a line of text every ten seconds

Then go back to Kibana's Discover page and look for these messages.

If you can see the corresponding log entries, the elk container is working properly.
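Besides Discover, you can ask Elasticsearch directly whether the busybox messages were indexed. This sketch assumes Filebeat's default `filebeat-*` index names and the Elasticsearch 7.x response format, where `hits.total` is an object. `hit_count` and `count_busybox_docs` are hypothetical helpers, and the sed-based extraction is a shortcut rather than real JSON parsing. Because the container logs are read as raw Docker JSON lines, the word "busybox" sits inside the `message` text. The plain `q=busybox` search should still match it.

```shell
#!/bin/sh
# Elasticsearch address from this article; override via the environment.
ES_URL=${ES_URL:-http://192.168.20.6:9200}

# Extract hits.total.value from a compact Elasticsearch 7.x _search response.
hit_count() {
  sed -n 's/.*"total":{"value":\([0-9]*\).*/\1/p'
}

# Count documents in filebeat-* indices that mention "busybox".
count_busybox_docs() {
  curl -s --max-time 10 "$ES_URL/filebeat-*/_search?q=busybox&size=0" | hit_count
}

# Usage on the docker host, run twice about a minute apart while the busybox
# container is running; the second number should be larger:
# count_busybox_docs
```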

-------- This is the end of the article, thanks for reading --------