Foreword

No online system can do without data. Some of it is required by the business system itself, such as accounts, passwords, and the content displayed on pages. Some is generated in real time by the business system or its users, such as business logs, records of users' browsing and access, purchase orders, payment information, and members' personal information. Most companies run many such online systems, internal and external, and this data is at the core of what drives business growth, decision-making, and innovation. What lets this data best support online systems is the database and the data analysis platform.

Databases are mainly responsible for writing real-time data and serving online queries against predefined requirements; strictly speaking, these are OLTP databases. The best known are Oracle and MySQL. Business systems' demands on databases can be diverse, and in recent years the field has expanded from traditional relational databases to NoSQL and NewSQL databases. Besides the business data that is stored and accumulated in databases, there is a vast amount of system monitoring data and system-generated business logs. To keep this data longer and to run real-time or offline analysis on it, in order to support business decisions and produce business and market reports, many companies build their own data analysis platforms. This is the origin of today's "big data" platform. Such a platform mainly addresses the following problems:

1. Rich data sources and late binding of data formats

Data sources are rich because a data analysis platform is where all types of business data are aggregated. Data may come from various databases such as MySQL and MongoDB, from log sources, and so on. The platform needs to make it convenient to ingest all of these sources. For example, a big data architecture usually includes Kafka: all kinds of data sources first flow into Kafka, which then pushes the data to the big data storage systems. Here Kafka acts as the upstream interface to big data storage and decouples the data sources from the platform. Late binding of data formats is an important concept. TP databases usually require a schema predefined from business needs, commonly called schema-on-write: data fields are strictly type-checked when they are written. An analytical system, however, does not want the schema to restrict what data can be stored, so it usually adopts schema-on-read, which is the late binding referred to here: data is interpreted according to the corresponding types only at analysis time.
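The contrast between schema-on-write and schema-on-read can be sketched in a few lines of Python. This is only an illustration under assumptions: the `ORDER_SCHEMA` fields, the function names, and the sample records are hypothetical and do not come from any particular database.

```python
import json

# Hypothetical schema for an "orders" table; field names are illustrative only.
ORDER_SCHEMA = {"order_id": int, "amount": float}


def write_with_schema(table, record):
    """Schema-on-write: reject a record whose fields or types do not match."""
    if set(record) != set(ORDER_SCHEMA):
        raise ValueError(f"unexpected fields: {sorted(record)}")
    for field, expected in ORDER_SCHEMA.items():
        if not isinstance(record[field], expected):
            raise TypeError(f"{field} must be {expected.__name__}")
    table.append(record)


def write_raw(log, line):
    """Schema-on-read: store the raw line untouched, with no checks."""
    log.append(line)


def read_amounts(log):
    """Bind types only at analysis time; skip lines that lack the field."""
    amounts = []
    for line in log:
        doc = json.loads(line)
        if "amount" in doc:
            amounts.append(float(doc["amount"]))
    return amounts


table, log = [], []
write_with_schema(table, {"order_id": 1, "amount": 9.5})
write_raw(log, '{"order_id": 1, "amount": "9.5"}')    # amount arrives as a string
write_raw(log, '{"event": "page_view", "url": "/"}')  # a different shape entirely
print(read_amounts(log))
```

The strict writer would refuse both raw lines, while the raw log accepts them and leaves the interpretation of `amount` to the reader.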

2. Elastic scaling of storage and compute

Elastic scaling of storage and compute means that the big data system must support massive amounts of data while maintaining high read and write throughput. A data analysis platform aggregates all kinds of data from all kinds of online systems, and the data accumulates over time. The platform must support massive storage, but the size is not predefined; it grows elastically as data accumulates, from the TB level to the PB level or even hundreds of PB. The compute capacity of the whole architecture must be just as elastic. As an intuitive example, a full pass over TB-level data might take 20 minutes. If compute does not scale when the data reaches hundreds of PB, processing time grows by several orders of magnitude, the daily analysis results cannot be produced in time, the value of the big data platform drops sharply, and business growth is held back.
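A back-of-the-envelope calculation makes the 20-minute example concrete. The numbers below are assumptions chosen for illustration: a 10-node cluster, a per-node scan rate that yields 20 minutes for 1 TB, and perfectly linear scaling, which real clusters only approximate.

```python
import math

# Assumption for illustration: a single node needs 200 minutes to scan 1 TB,
# and throughput scales linearly with the number of nodes.
MINUTES_PER_TB_PER_NODE = 200


def full_scan_minutes(data_tb, nodes):
    """Minutes for one full pass over data_tb terabytes on `nodes` nodes."""
    return data_tb * MINUTES_PER_TB_PER_NODE / nodes


def nodes_for_deadline(data_tb, deadline_minutes):
    """Smallest node count that finishes a full pass within the deadline."""
    return math.ceil(data_tb * MINUTES_PER_TB_PER_NODE / deadline_minutes)


HUNDRED_PB_IN_TB = 100_000  # 100 PB expressed in TB (decimal units)

baseline = full_scan_minutes(1, nodes=10)               # the TB-level job
fixed = full_scan_minutes(HUNDRED_PB_IN_TB, nodes=10)   # same cluster, 100 PB
needed = nodes_for_deadline(HUNDRED_PB_IN_TB, 20)       # nodes to stay at 20 minutes

print(baseline, fixed / 60 / 24, needed)
```

Under these assumptions the unchanged cluster would need 2,000,000 minutes, roughly 3.8 years, for one pass over 100 PB, which is why compute has to grow together with the data.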

3. Low cost at large scale

Many big data platforms are designed without much attention to cost at the beginning. Solutions are chosen mainly based on familiarity with open-source options, the scale of the business data, and the required timeliness of analysis. But once business volume really grows, the cost of the big data platform becomes a challenging problem, and it may even force a re-architecture of the platform or a data migration. So for an enterprise-grade big data platform, the cost of the whole architecture needs to be considered from the start. This comes down to choosing tiered storage and the compute engine that matches it. Today, big data platforms on the cloud tend to land data in a scalable, low-cost storage platform such as OSS on Alibaba Cloud or S3 on AWS; these storage platforms themselves also support further storage tiering. For compute on top of such storage, Elastic MapReduce can be chosen. Together this forms the currently popular "data lake" solution. Alternatively, users may build their own Hadoop cluster, use HDFS to store the aggregated data, and build their own big data warehouse on top.

4. Isolation of online business and analytical workloads

Isolation is needed because analytical workloads often scan large amounts of data. If such heavy scans hit the online database, they may affect the SLA of online services. In addition, the access pattern of analytical traffic is not necessarily the same as that of online traffic, and the storage layout and format of the online database may not suit the analytical system. A typical big data platform therefore keeps its own copy of the data, with distribution, format, and indexes optimized for analysis. For example, a typical TP engine stores data in a row format, and for analysis the data is converted to a columnar format.
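The row-to-column conversion mentioned above can be shown with plain Python structures. This is a minimal sketch, assuming every record has the same fields; the sample data and the `to_columns` helper are made up for illustration.

```python
# Row layout: each record is stored together, as a TP engine would keep it.
rows = [
    {"user": "a", "city": "Hangzhou", "amount": 10},
    {"user": "b", "city": "Beijing", "amount": 25},
    {"user": "c", "city": "Hangzhou", "amount": 40},
]


def to_columns(rows):
    """Pivot row-oriented records into one list per field (column layout)."""
    columns = {}
    for row in rows:
        for field, value in row.items():
            columns.setdefault(field, []).append(value)
    return columns


columns = to_columns(rows)

# Row layout: a point lookup returns the whole record in one access.
record = rows[1]

# Column layout: an aggregate reads only the single column it needs.
total = sum(columns["amount"])
print(record, total)
```

A scan that sums `amount` over the column layout never touches `user` or `city`, which is the main reason analytical engines prefer columnar formats.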

With this introduction, we hope to lead readers to think about one question. Whether with a traditional data warehouse or a data lake on the cloud, what we ultimately want is to solve the storage and analysis of business data effectively. So what exactly does the business need? In particular, when the data to be analyzed is structured or semi-structured, such as database records, logs, and monitoring data, what is required of a big data platform? Keep this question in mind as we look at some of today's mainstream open-source solutions and solutions built on the cloud. We will then come back and summarize the requirements for storing and analyzing structured big data.

Analysis of open-source big data storage platform architectures

Earlier we mentioned that online business cannot do without OLTP databases, which provide real-time reads and writes. In this chapter we look at some mainstream architectures, open source and on the cloud, that combine databases with big data analysis platforms.

Hadoop big data solution

Take Uber's big data architecture as an example. The figure shows data from various databases being pushed through Kafka into a Hadoop cluster for full batch computation. The result sets are then written to several kinds of storage engines to serve result queries.

In a traditional Hadoop architecture, structured data such as logs is collected into a Kafka pipeline, and Spark consumes the Kafka data in real time and writes it to HDFS in the cluster. Data in databases such as RDS is synchronized to HDFS by periodic full table scans with Spark, usually once a day and during off-peak business hours. HDFS thus stores an aggregate of the user's data, and for database data this is in effect a periodic snapshot. For example, early every morning the user behavior logs are analyzed jointly with the user information in the database to produce the day's reports, such as a summary of visits and a report on users' spending tendencies, for decision-makers. RDS data is stored in full in this architecture mainly because HDFS is only a distributed file store and is not friendly to record-level updates and deletes. To avoid the logic of merging database updates and deletes, a full scan is chosen when the data is small. When the database data is large, as at Uber, a storage engine supporting updates and deletes has been built on top of HDFS.

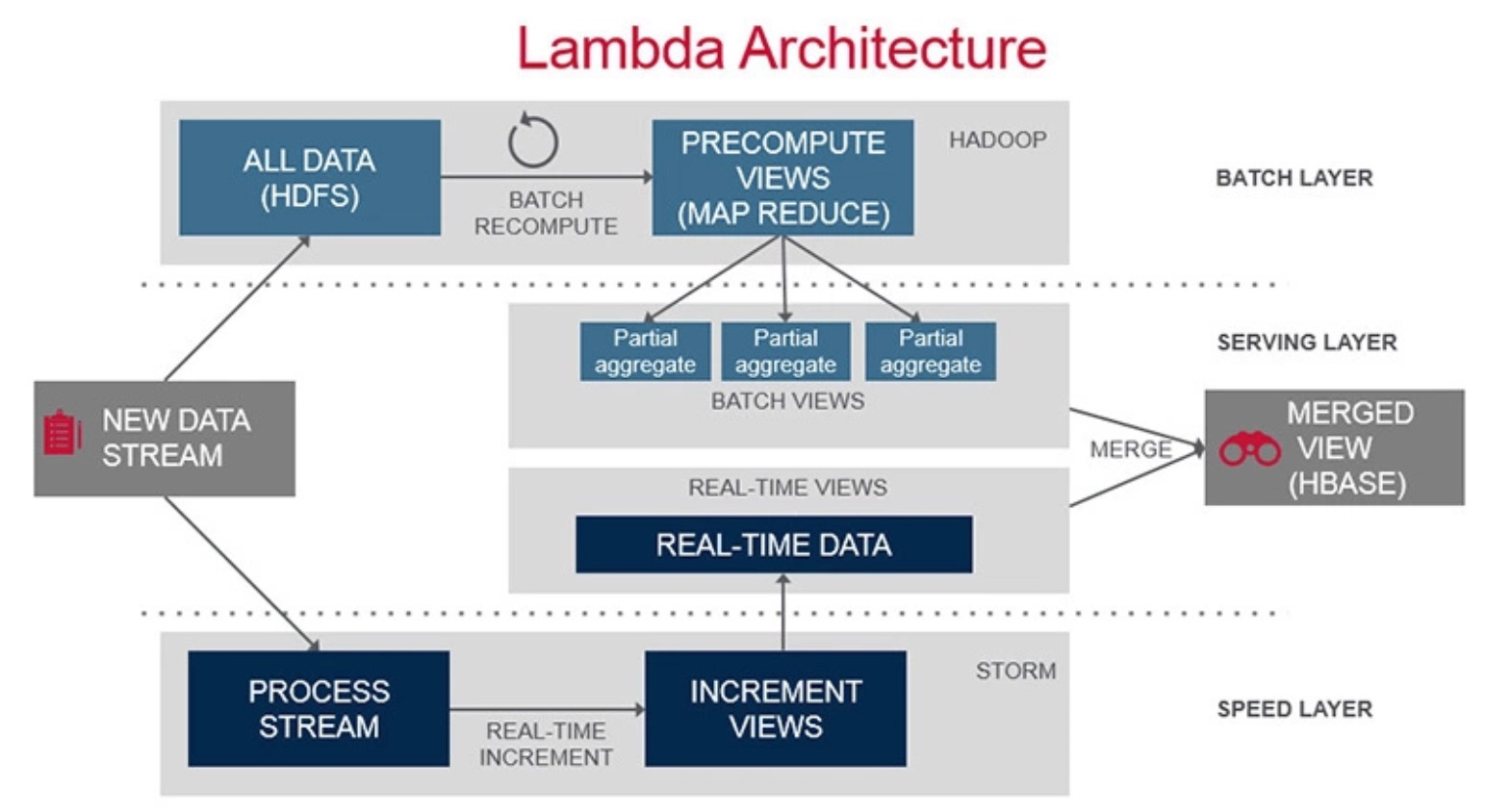

The characteristic of this solution is that the analyzed data is static. With the high concurrency of a Hadoop cluster, offline computation over data from hundreds of TB up to the PB level can be done with relative ease, and since the data is stored in large blocks on HDFS, the overall storage cost is relatively low. The main drawback is that data is stored periodically, so the timeliness of computation is typically T+1. If the business has near-real-time needs, such as recommendations, the architecture is upgraded from offline computation to the "Lambda architecture". The architecture is shown below:

Lambda architecture

For details, see the description of the Lambda architecture.

The Lambda architecture meets offline and real-time computing needs with two kinds of storage: HDFS for full data and Kafka for incremental data. In essence, the full data on HDFS is still T+1. Real-time needs are covered by connecting Kafka to stream computing. In other words, an extra layer of storage and computation logic is added to serve real-time business needs.
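The idea of serving a query from a T+1 batch view plus a real-time increment can be sketched as follows. This is a toy illustration, not a Spark or Kafka program: the in-memory lists stand in for the HDFS snapshot and the Kafka stream, and `count_views` and the event shape are assumptions.

```python
from collections import Counter


def count_views(events):
    """Count page views; in a real system this logic exists in both layers."""
    return Counter(event["page"] for event in events)


# Stand-in for the full T+1 snapshot stored on HDFS.
snapshot = [{"page": "/home"}, {"page": "/home"}, {"page": "/cart"}]
# Stand-in for events that arrived through Kafka after the snapshot was taken.
recent = [{"page": "/home"}, {"page": "/pay"}]

batch_view = count_views(snapshot)   # recomputed once per day
speed_view = count_views(recent)     # updated continuously

# Serving layer: a query merges the two views to get an up-to-date answer.
merged = batch_view + speed_view
print(merged)
```

The extra cost the text mentions shows up even in this sketch: two data paths and two views have to be kept consistent for one logical result.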

Whether in the traditional offline architecture or the Lambda architecture, the result set may still be fairly large and needs to be persisted in a structured storage system. This storage mainly serves queries on the result set, such as real-time dashboards, reports, and ad hoc queries by BI staff and business decision-makers. The mainstream approach is therefore to write results to RDS and then synchronize them to Elasticsearch, or to write directly to Elasticsearch, mainly to take advantage of ES's strong full-text search and multi-field combined queries.

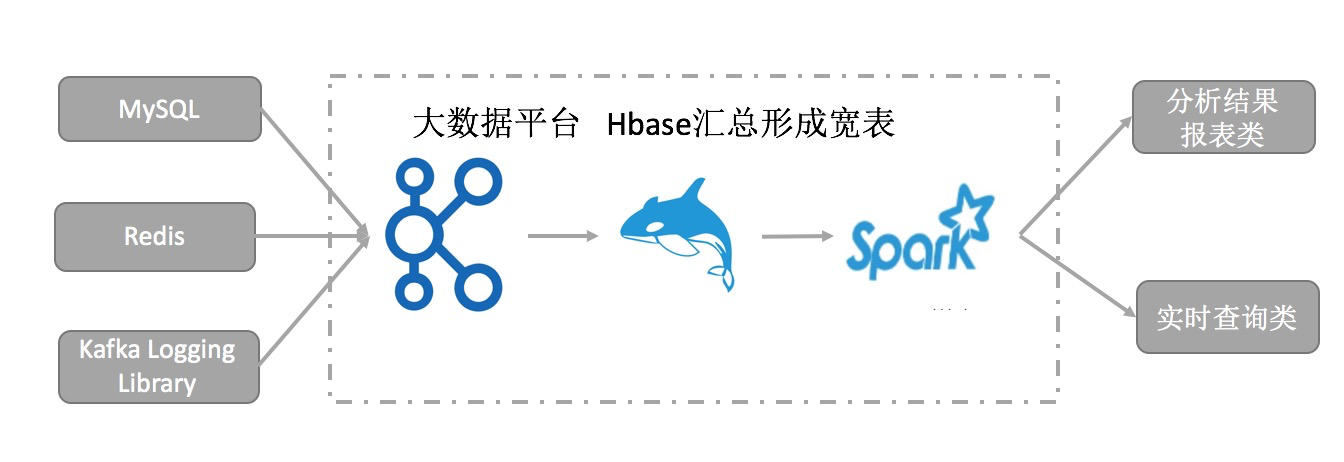

Distributed NoSQL database solution

In the previous architecture, it is easy to see that RDS data must be synchronized to HDFS as static data before batch computation. This architecture can run into a situation where the full data is very large, so synchronizing all of it every day has poor timeliness, or resources are insufficient and the synchronization cannot even finish. How can this be optimized? If the data warehouse is itself a database that directly supports CRUD operations, full synchronization is no longer needed. Some online data could even be written directly to this massive database. Many open-source solutions in the industry build this architecture on a distributed NoSQL database such as HBase; the figure shows a simple example. HBase is schema-free and supports real-time CRUD, which greatly simplifies real-time writes and synchronization of source data. Wide tables spanning multiple data sources can also be built, and building complete data through wide tables greatly reduces the complexity of joins at computation time. Combined with Kafka, HBase can also implement the Lambda architecture to support both batch and streaming needs. So is this architecture perfect? Can it completely replace solution 1?

The answer is certainly no. On the one hand, to support real-time writes well, HBase uses an LSM storage engine: new data is ingested by appending, while updates and merges rely on background merge optimization to reduce read amplification. Reading and writing data through such an engine costs more than reading and writing static files on HDFS directly. On the other hand, HBase persists data on disk organized by row, which is what we usually call row storage. Row storage is far weaker than column storage at compression and at batch scans, whereas solution 1 on HDFS tends to choose columnar formats such as Parquet or ORC. So when data grows to the PB level or even hundreds of PB, storing everything in HBase for batch analysis may hit bottlenecks in both performance and cost. The mainstream HBase solution is therefore also a combination: HBase for accelerated access together with HDFS to store the various kinds of structured data, which keeps the cost of the whole architecture under control and improves scalability. But this combination creates its own problems. More components mean harder operations and maintenance. Should data be divided between HBase and HDFS by hot and cold tiers, or by business need? If it is tiered, how does data flow conveniently from HBase into HDFS? These are very real challenges.
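The append-then-merge behavior of an LSM engine can be illustrated with a toy in-memory model. This is a sketch of the general technique only, not HBase's implementation: `TinyLSM`, its flush threshold, and the tombstone marker are all assumptions made for the example.

```python
TOMBSTONE = object()  # marker written in place of a deleted value


class TinyLSM:
    """Toy LSM store: writes append to a memtable, reads merge newest-first."""

    def __init__(self, memtable_limit=2):
        self.memtable = {}
        self.segments = []  # immutable flushed segments, oldest first
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        # Updates never modify old segments; they only add a newer version.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.segments.append(dict(self.memtable))
            self.memtable = {}

    def delete(self, key):
        self.put(key, TOMBSTONE)

    def get(self, key):
        # A read may have to check the memtable and every segment.
        for layer in [self.memtable] + self.segments[::-1]:
            if key in layer:
                value = layer[key]
                return None if value is TOMBSTONE else value
        return None

    def compact(self):
        # Background merge: fold all segments into one, newest version wins.
        merged = {}
        for segment in self.segments:
            merged.update(segment)
        self.segments = [{k: v for k, v in merged.items() if v is not TOMBSTONE}]


db = TinyLSM(memtable_limit=2)
db.put("u1", "v1")
db.put("u2", "v1")   # memtable is full and is flushed as segment 1
db.put("u1", "v2")
db.delete("u2")      # flushed as segment 2, which shadows segment 1
segments_before = len(db.segments)
db.compact()
print(segments_before, db.segments, db.get("u1"), db.get("u2"))
```

Before compaction a lookup may walk several segments, which is the read cost the paragraph refers to; compaction trades background work for cheaper reads.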

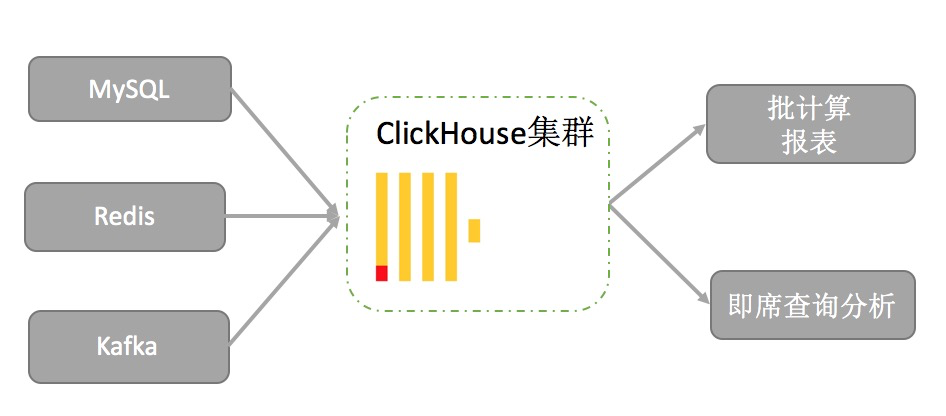

Solution combining a database with an AP analysis engine

The NoSQL solution described above does not solve ad hoc queries on result sets. HBase itself supports queries by rowkey, but it struggles with ad hoc queries over multiple fields. Some advanced users and vendors connect HBase to Solr or develop customized indexes of their own to speed up queries, and then connect Phoenix for distributed computation. This complex development and multi-component integration is essentially an attempt to give a TP database AP capabilities. It naturally leads to an architecture that introduces an AP engine alongside the TP engine to form a complete analysis architecture.

For example, as shown in the figure, a cluster based on the ClickHouse analysis engine is built and the various kinds of structured data are synchronized into it, where interactive analysis can then be done conveniently. Compared with the previous architecture, this one removes a number of steps, mainly because this type of engine provides database-like reads and writes and also comes with a complete analysis engine.

Mainstream distributed AP engines in the industry include Druid, ClickHouse, Pinot, Elasticsearch, and Kudu, a columnar counterpart of HBase. These systems have different emphases. Druid is good at append-only workloads with pre-aggregation followed by analysis. Elasticsearch implements many kinds of indexes and speeds up analysis through strong index-based filtering that reduces I/O. Kudu directly addresses HBase's weakness in batch scans while keeping single-row operations, by turning the persisted format into columns. What these systems share is that data is stored in columns, and some engines add inverted indexes or bitmap indexes to speed up queries further. The benefit of this architecture is that it sets aside the traditional offline big data architecture and relies on the storage engine's own storage format and computation pushdown to do batch computation in real time and show results in real time. At the GB to 100 TB level this is a big improvement over the previous architectures, and the boundary between real-time computing and offline batch computing even starts to blur. Aggregations over TB-level data can respond in seconds to minutes, and BI staff no longer have to wait for a T+1 synchronization delay followed by minutes or even hours of offline computation to get the final result, which greatly speeds up how quickly data delivers value to the business. So will this architecture be the terminator of structured big data processing? Not in the short term, I think. Although it scales well, it is still weaker than the Hadoop solution in scalability, complex computation scenarios, and storage cost when it comes to offline processing at the hundred-PB level. Take Elasticsearch's full indexing: the indexes typically inflate storage about threefold and usually require storage media such as SSDs. In addition, this type of architecture loads all the data needed for a computation into memory for real-time computation, so it struggles with joins between two large tables, and heavy computation logic may hurt the timeliness of results. ETL on TB-level data or above is also not what these engines are good at.
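To show why bitmap indexes make multi-field filtering cheap, here is a small sketch that uses Python integers as bitmaps. It is an illustration of the general technique, not the index format of any engine named above; the sample rows and helper names are assumptions.

```python
from collections import defaultdict

rows = [
    {"city": "Hangzhou", "level": "gold"},
    {"city": "Beijing", "level": "gold"},
    {"city": "Hangzhou", "level": "silver"},
    {"city": "Hangzhou", "level": "gold"},
]


def build_bitmap_index(rows, field):
    """Map each distinct value to an int whose bit i is set if row i matches."""
    index = defaultdict(int)
    for i, row in enumerate(rows):
        index[row[field]] |= 1 << i
    return index


def row_ids(bitmap):
    """Decode a bitmap back into the list of matching row numbers."""
    return [i for i in range(bitmap.bit_length()) if (bitmap >> i) & 1]


city = build_bitmap_index(rows, "city")
level = build_bitmap_index(rows, "level")

# city = 'Hangzhou' AND level = 'gold' becomes a single bitwise AND.
hits = row_ids(city["Hangzhou"] & level["gold"])
print(hits)
```

The filter is resolved entirely on the index, so only the matching rows need to be read from storage, which is the I/O reduction the paragraph describes.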

Data lake solution on the cloud

This AWS data lake solution can be understood as the traditional Hadoop solution moved to the cloud and upgraded. It uses S3, the cloud-native storage engine, which retains the distributed storage reliability and high throughput of a self-built HDFS cluster, and it uses strong data pipeline capabilities such as Kinesis Firehose and Glue to bring all kinds of data into the lake quickly and easily, further reducing the storage and operations costs of the traditional solution. This architecture example also distinguishes and defines the users of the big data platform: for different usage scenarios, the complexity and timeliness of data analysis differ, which is the same situation we mentioned earlier where solutions 1 and 2 coexist. Of course, the data lake solution itself does not solve all the pain points of the traditional solution, such as how to guarantee the quality of data in the lake and the atomicity of data ingestion, or how to support data updates and deletes efficiently.

Delta Lake

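On the cloud, the data lake concept is introduced to aggregate and analyze data. By taking advantage of distributed storage on the cloud, the cost of persisting the aggregated data drops sharply while reliability is preserved. Such centralized storage also lets the big data analysis framework evolve naturally into an architecture that separates storage from compute. This separation matters far more for analytics than for OLTP databases, which is easy to understand: data keeps accumulating over time, while compute changes elastically with business needs. GFS, one of Google's "troika", was designed to solve this problem as well. The data lake also meets the need for compute to access different data sources. But the sources in a data lake differ: log data, static unstructured data, historical archives of databases, real-time data from online databases, and so on. When the source is dynamic data such as a database, the data lake faces new challenges. How are updates merged with the original data? When a user's account is deleted and we want to purge all of that user's data from the lake, how is that handled? How is data consistency guaranteed during batch ingestion? Databricks, the company commercializing Spark, recently proposed a new solution on top of the data lake, Delta Lake. Delta Lake still uses a data lake such as self-built HDFS or S3 as its storage medium, but by defining a new format, storing base data in columns and newly added delta data in rows, it supports ACID and CRUD for data operations. It is also fully compatible with the Spark big data ecosystem. From this perspective, Databricks hopes that Delta Lake will move traditional Hadoop, which is good at analyzing static files, into analyzing data from dynamic database sources, so that the offline data lake gradually evolves into a real-time data lake. This is the same problem that solutions 2 and 3 try to solve.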
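The base-plus-delta idea described above can be sketched as a merge on read. This is a conceptual illustration only: it does not reproduce Delta Lake's on-disk format or API, and `merge_on_read`, the operation names, and the sample rows are assumptions.

```python
def merge_on_read(base, deltas):
    """Apply an ordered list of row-level changes on top of a base snapshot."""
    view = dict(base)  # key -> row; the base snapshot itself is not modified
    for op, key, row in deltas:
        if op == "upsert":
            view[key] = {**view.get(key, {}), **row}
        elif op == "delete":
            view.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op}")
    return view


# Base data: a static snapshot, as a batch import would produce it.
base = {
    "u1": {"name": "Ann", "city": "Hangzhou"},
    "u2": {"name": "Bob", "city": "Beijing"},
}
# Delta data: changes captured from the online database, in commit order.
deltas = [
    ("upsert", "u1", {"city": "Shanghai"}),  # update merged with the original row
    ("delete", "u2", None),                  # account deletion purges the user
    ("upsert", "u3", {"name": "Cid", "city": "Shenzhen"}),
]

view = merge_on_read(base, deltas)
print(view)
```

Readers always see the merged view, while the immutable base files can be rewritten later in the background.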

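Having introduced the architectures of these structured data platforms, let us summarize. Each architecture has its own strengths and suitable scenarios: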

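| Solution | Suitable scenarios | Data scale | Storage format | Ingestion mode | Cost | Compute model | O&M complexity | Mutability |
|---|---|---|---|---|---|---|---|---|
| Traditional Hadoop | Offline processing of massive data; append-dominated workloads | Large | Columnar | Offline batch | Low | MapReduce | Relatively high | Not updatable; static files |
| Distributed NoSQL database | Massive data with real-time CRUD; offline batch processing; can partly serve as the result store for solution 1 | Upper-medium | Row | Real-time online | Medium | MapReduce | Medium | Updatable |
| Distributed analytical database | Real-time or near-real-time ingestion; ad hoc query and analysis; often used as the result store for solution 1 | Medium | Hybrid row and column | Real-time / near-real-time | High | MPP | Medium | Updatable |
| Data lake / Delta Lake | Offline processing of massive data; real-time stream computing; ACID and CRUD capable | Large | Hybrid row and column | Offline batch / near-real-time | Low | MapReduce | Medium | Updatable |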

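From the comparison above it is easy to see that each solution has its strengths and weaknesses. The compute model or even the compute engine can be the same across solutions, for example Spark, yet their scenarios and efficiency differ greatly. Why? The difference lies in the storage engine. Setting aside the performance of the compute engine itself, what big data architectures fundamentally compete on is the storage engine. We can now summarize the requirements of a big data analysis platform: isolation between online and analytical stores, with the platform having its own storage engine that does not depend on the online database's data, so that online databases are not affected; flexible schema support, so that data can be widened and merged here; support for CRUD on data; efficient batch computation over full data; ad hoc queries on analysis result sets; and real-time writes that support real-time stream computing.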

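In summary, the differences between architectures come from the storage engine. Is there a solution that combines the strengths of the storage engines above into a more comprehensive one that can handle all kinds of business scenarios and still balance storage cost? Next, let us look at how Tablestore, a cloud-native structured big data storage engine built on Alibaba Cloud, addresses these scenarios and requirements.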

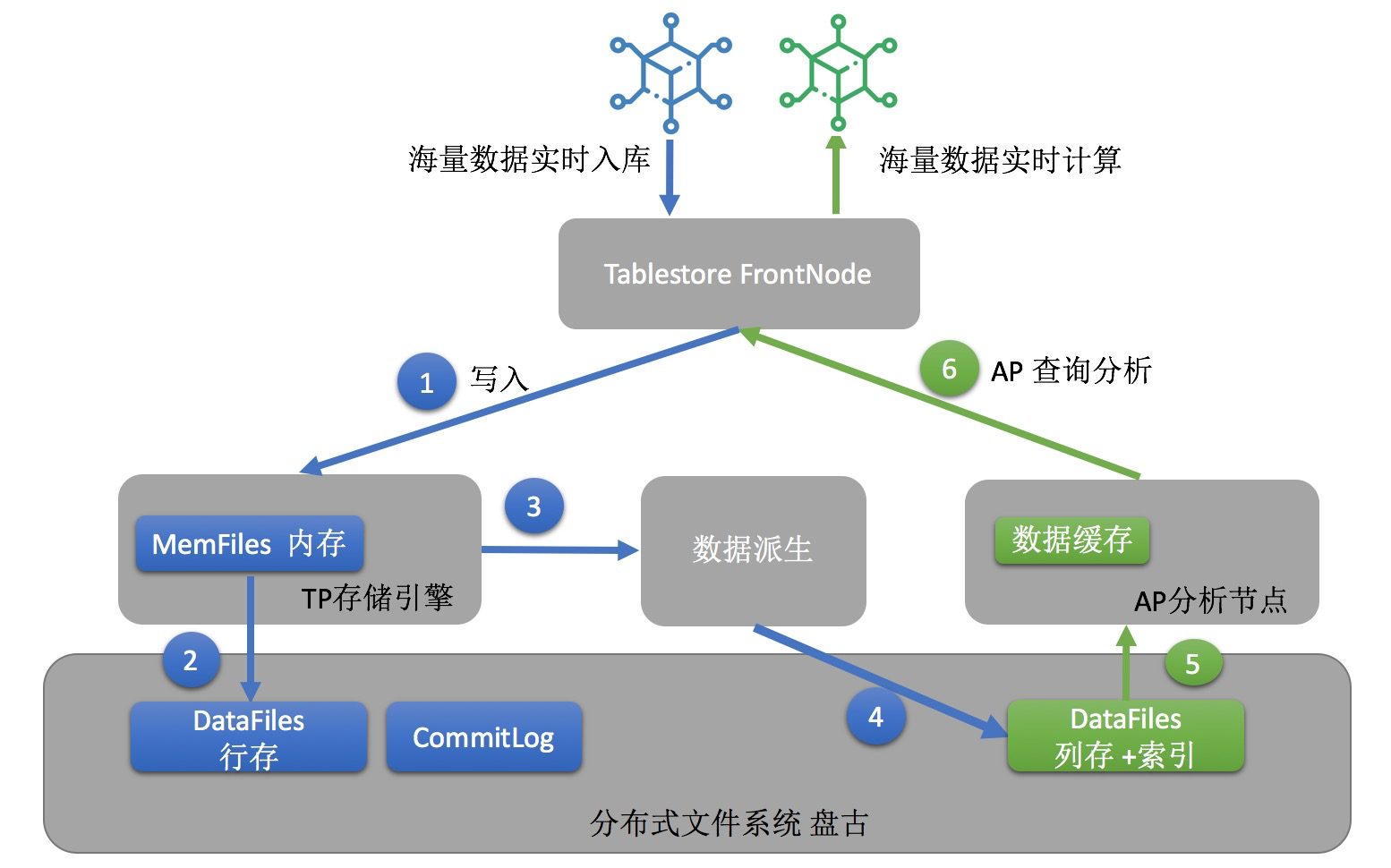

Tablestore's storage and analysis architecture

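Tablestore is a structured big data storage product developed in-house by Alibaba Cloud. For a product introduction, see the official website and the authoritative guide. Tablestore's design largely takes into account the requirements for structured big data storage within a data system. Based on the design concept of a derived data system, it designs and implements a number of distinctive features, and it uses derived data to connect and integrate various storage engines. Tablestore's basic design concepts are analyzed in a separate article.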

Big data design concepts

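- Separation of storage and compute: Tablestore adopts an architecture that separates storage from compute, built on the Apsara Pangu distributed file system. This is the basis for separating storage cost from compute cost.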

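- CDC technology: CDC stands for change data capture. Tablestore's CDC technology is called Tunnel Service. It supports real-time subscription to full and incremental data, and connects seamlessly to the Flink stream computing engine for real-time stream computing on table data. CDC makes it easy to connect the engines inside Tablestore with each other and with other storage engines on the cloud.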

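- Multiple storage engines: an ideal data platform would have database-style row storage, column storage, and inverted index engines, and would also support HDFS or Delta Lake under the data lake solution. Hot data uses the database storage engines, and cold full data uses the lower-cost data lake solution. The transition of data from hot to cold can be fully managed, with the lifecycle of data in each engine customized to the business scenario. Upstream, Tablestore is a free-schema row store; downstream, CDC derives column storage, inverted indexes, spatial indexes, secondary indexes, and Delta Lake and OSS on the cloud, providing the capabilities of all four open-source architectures above at once.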

- Data must finally land in the OSS data lake as archives: this is easy to understand. As hot data turns cold over time, it inevitably moves gradually into OSS, or even into OSS Archive storage. This gives PB-level data highly available storage at the lowest cost. For the occasional full analysis, the needed files can still be read efficiently at a relatively stable throughput. So for a big data platform on Tablestore, we ultimately recommend archiving data into OSS.
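How CDC lets a primary row store feed a derived engine can be sketched with a change log and a consumer that keeps a checkpoint. This is a conceptual model only and not the Tablestore Tunnel Service API: the `Table` and `CityIndex` classes, the log format, and the checkpoint handling are all assumptions for illustration.

```python
class Table:
    """A primary row store that records every change in an ordered log."""

    def __init__(self):
        self.rows = {}
        self.change_log = []  # entries: (sequence number, op, key, row)

    def put(self, key, row):
        self.rows[key] = row
        self.change_log.append((len(self.change_log), "put", key, row))

    def delete(self, key):
        self.rows.pop(key, None)
        self.change_log.append((len(self.change_log), "delete", key, None))


class CityIndex:
    """A derived secondary index (city -> keys) fed only by the change log."""

    def __init__(self):
        self.by_city = {}
        self.city_of = {}
        self.checkpoint = 0  # next sequence number to consume

    def catch_up(self, table):
        for seq, op, key, row in table.change_log[self.checkpoint:]:
            old = self.city_of.pop(key, None)
            if old is not None:
                self.by_city[old].discard(key)  # drop the stale index entry
            if op == "put":
                self.by_city.setdefault(row["city"], set()).add(key)
                self.city_of[key] = row["city"]
            self.checkpoint = seq + 1


table, index = Table(), CityIndex()
table.put("u1", {"city": "Hangzhou"})
table.put("u2", {"city": "Hangzhou"})
index.catch_up(table)                  # full data is consumed first
table.put("u1", {"city": "Beijing"})
table.delete("u2")
index.catch_up(table)                  # then only the increments
print(index.by_city, index.checkpoint)
```

Writers touch only the primary table; every derived engine, whether an index, a column store, or an archive, can follow the same log at its own pace.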

With these ideas, the following four architectures can be built more easily on Tablestore. The specific choice depends on the business scenario, and it is easy to switch between solutions dynamically:

- High-value data that needs high-concurrency point queries and ad hoc query and analysis (to be released in September):

This combines Tablestore wide tables with an analysis engine driven by indexes derived through Tablestore Tunnel CDC. The architecture is similar to solutions 2 and 3: it has the high throughput and low-cost storage of wide tables and also provides ad hoc query and analysis on TB-level data. Its biggest advantage is that efficient real-time analysis does not depend heavily on an extra compute engine.

- Massive data with infrequent updates, where an EMR cluster already exists on the cloud (coming soon, stay tuned):

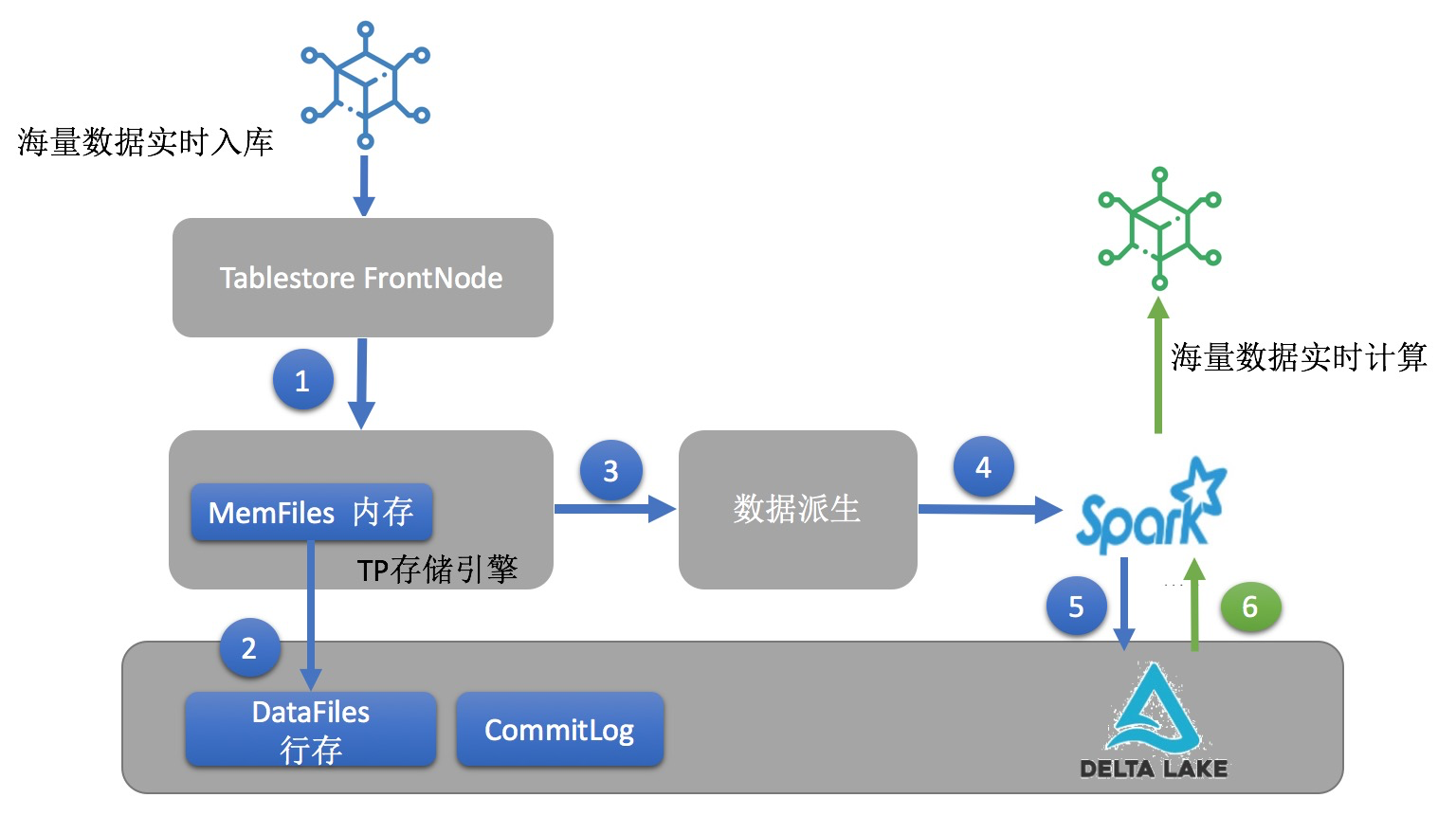

This combines Tablestore wide tables, data derivation through Tablestore Tunnel CDC, Spark Streaming, and Delta Lake to build an open architecture similar to solution 1 or 4. Through CDC, Spark Streaming in the EMR cluster subscribes in real time to incremental data from Tablestore Tunnel and writes it into Delta Lake in the EMR cluster. Delta Lake's ability to merge CRUD operations gives the data lake support for updates and deletes. High-throughput batch analysis is done with the Spark cluster.

- Massive data with few updates and an obvious partitioning dimension (for example, a timestamp attribute that can be used to tier the data):

This combines Tablestore wide tables, Tablestore Tunnel CDC, OSS, and DLA to build a low-cost, fully managed architecture. Data is written to Tablestore in real time. Through CDC, Tablestore pushes data to OSS periodically or synchronously, in a fully managed way. Data on OSS can then be processed in high-throughput batches with Spark. The biggest advantage of this solution is that storage and operations costs are both relatively low.

- Full-engine integrated solution:

This combines Tablestore wide tables, CDC, a multidimensional analysis engine, and automatic archiving of cold data to Delta Lake or OSS. In this architecture, hot data gets wide-table merging and second-level ad hoc query and analysis, while cold data gets offline high-throughput batch computation. It strikes a good balance between storage cost and computation latency across hot and cold data.

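To sum up, in a big data architecture based on Tablestore, all data is written to Tablestore's wide-table row storage engine. A unified write path simplifies the consistency and logic of the whole write pipeline and reduces write latency. The needs of big data analysis and query are diverse, and it is an unstoppable trend to connect different engines through data derivation so that the business can flexibly combine derived engines as needed. The architecture also emphasizes hot and cold tiering: hot data should have the richest possible query and analysis capabilities, while cold data should keep basic batch computation capabilities with storage and operations costs reduced as far as possible. The big data architecture discussed here mainly covers batch computation and interactive analysis. For real-time stream computing, see our Lambda Plus architecture on the cloud.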

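On the storage engine side, Tablestore uses a distributed NoSQL database, that is, row storage, as primary storage. Through CDC-based data derivation it integrates the column storage and inverted indexes of distributed analytical databases, and together with the Spark ecosystem it builds Delta Lake and an OSS-based data lake. On the compute and query side, Tablestore supports MPP through its own multidimensional analysis engine or DLA, and uses Spark for traditional MapReduce-style big data analysis. In the future we also plan to unify reads across compute engines on the query side, so that the best engine is chosen based on the query statement: a point query that hits the primary key index goes to row storage; batch loading and analysis of hot data goes to the database's column storage; complex multi-field combined queries and analysis go to the database's column storage and inverted indexes; and periodic large scans of historical data go to Delta Lake or OSS. We believe that a converged storage engine with unified reads and writes based on CDC will become the trend for big data solutions on the cloud.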
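The planned query-side routing can be pictured as a small decision function. This is a hypothetical sketch of the rules listed in the paragraph above, not an existing Tablestore feature or API: the `Query` fields, the rule order, and the engine labels are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Query:
    """Hypothetical description of a query; not a real Tablestore type."""
    primary_key: object = None    # set when the query is a point lookup
    filter_fields: tuple = ()     # non-key fields used in predicates
    scans_history: bool = False   # periodic large scan over cold data


def choose_engine(query):
    """Pick a storage engine following the routing rules in the text."""
    if query.primary_key is not None:
        return "row store"                      # point query on the primary key
    if query.scans_history:
        return "Delta Lake / OSS"               # large scans of historical data
    if len(query.filter_fields) > 1:
        return "column store + inverted index"  # complex multi-field filters
    return "column store"                       # batch analysis of hot data


print(choose_engine(Query(primary_key="u1")))
print(choose_engine(Query(filter_fields=("city", "level"))))
print(choose_engine(Query(scans_history=True)))
print(choose_engine(Query()))
```

A real router would also weigh data freshness and cost, but the sketch shows how one query interface could sit in front of several derived engines.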

Summary and outlook

In this article we discussed typical open-source architectures for structured big data and analyzed the characteristics of each. By summarizing and distilling the existing architectures, we introduced Tablestore, the structured storage platform on the cloud, and the capabilities it has and will soon have in support of big data analysis. We hope this CDC-driven big data platform can bring TP-type and AP-type data needs together in the best fully managed way, and that the serverless architecture lets compute and storage resources be fully utilized, so that data-driven business development can go further.

If you are interested in big data storage and analysis architectures based on Tablestore, you are welcome to join our technical discussion groups (DingTalk: 23307953 or 11789671) to talk with us.