Logback and log4j were written by the same author.

Spring Boot's default logging framework is Logback.

Logback consists of three modules:

logback-core: the foundation of the other two modules, which are built on top of it. It provides the key general-purpose mechanisms.

logback-classic: a lightweight implementation comparable to an improved log4j. It natively implements the SLF4J logging facade.

logback-access: integrates with servlet containers to provide HTTP access logging.

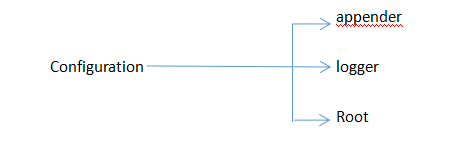

Structure of the logback.xml configuration file

The configuration file in detail:

The root node is <configuration>:

<configuration>
  <!-- other configuration omitted -->
</configuration>
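The root element also accepts a few attributes that the fragment above leaves out. The following is a minimal sketch, assuming you want Logback to re-read the file periodically: scan turns on change detection, scanPeriod sets the check interval, and debug controls whether Logback prints its internal status messages. The values are illustrative choices, not settings taken from the original configuration.

```xml
<!-- Illustrative values: re-check the file every 60 seconds, no internal status output -->
<configuration scan="true" scanPeriod="60 seconds" debug="false">
  <!-- other configuration omitted -->
</configuration>
```

With scan enabled, edits to logback.xml are picked up without restarting the application, which is the automatic reload behavior listed among Logback's advantages.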

Set the context name with <contextName>:

<configuration>
  <contextName>myAppName</contextName>
</configuration>

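Define a variable with <property> and reference it as ${APP_Name}:

<configuration>
  <property name="APP_Name" value="myAppName" />
  <contextName>${APP_Name}</contextName>
  <!-- other configuration omitted -->
</configuration>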
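Variables defined this way can be reused anywhere in the file, and a reference may carry a fallback with the ":-" operator. The sketch below assumes a hypothetical LOG_DIR variable (for example a system property) and a hypothetical file name myApp.log; neither comes from the original configuration.

```xml
<configuration>
  <!-- LOG_DIR is a hypothetical variable; "logs" is used when it is not defined -->
  <property name="LOG_FILE" value="${LOG_DIR:-logs}/myApp.log" />

  <appender name="FILE" class="ch.qos.logback.core.FileAppender">
    <!-- The variable is substituted when the configuration is parsed -->
    <file>${LOG_FILE}</file>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```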

Obtain a timestamp string with <timestamp>:

<configuration>
  <timestamp key="bySecond" datePattern="yyyyMMdd'T'HHmmss"/>
  <contextName>${bySecond}</contextName>
  <!-- other configuration omitted -->
</configuration>
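A common use of the timestamp key is to give each application start its own log file. This is a sketch under that assumption; the file name prefix "log-" is an arbitrary choice.

```xml
<configuration>
  <!-- bySecond holds the time at which the configuration was parsed -->
  <timestamp key="bySecond" datePattern="yyyyMMdd'T'HHmmss"/>

  <appender name="FILE" class="ch.qos.logback.core.FileAppender">
    <!-- A new, uniquely named file for every application start -->
    <file>log-${bySecond}.txt</file>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```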

Write logs to the console with ConsoleAppender:

<configuration>
  <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>
  <root level="DEBUG">
    <appender-ref ref="STDOUT" />
  </root>
</configuration>

Write logs to a file with FileAppender:

<configuration>
  <appender name="FILE" class="ch.qos.logback.core.FileAppender">
    <file>testFile.log</file>
    <append>true</append>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>
  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>

class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy": the most commonly used rolling policy. It defines rollover by time and is responsible both for performing the rollover and for triggering it. It has the following child nodes:

<fileNamePattern>: required. Contains the file name and a "%d" conversion specifier. "%d" may take a time format as understood by java.text.SimpleDateFormat, for example %d{yyyy-MM}. If %d is used on its own, the default format is yyyy-MM-dd. "/" or "\" in the pattern is treated as a directory separator.

The <file> child node of RollingFileAppender is optional. By setting <file> you can keep the active file and the archived files in different locations: the current log is always written to the file named by <file> (the active file), and that name never changes. If <file> is not set, the name of the active file is recomputed periodically from the value of fileNamePattern.

<maxHistory>: optional. Controls the maximum number of archive files to keep; older files beyond that number are deleted. For example, with monthly rollover and <maxHistory> set to 6, only the last 6 months of files are kept and anything older is deleted. Note that when old files are deleted, directories that were created for archiving are deleted as well.
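The monthly scenario described above can be sketched as follows. The paths logs/app.log and logs/archive/ are hypothetical and only serve to show the active file and the archives living in different locations.

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <!-- Active file: its name stays the same between rollovers -->
    <file>logs/app.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <!-- Monthly rollover; the "/" places archives in their own directory -->
      <fileNamePattern>logs/archive/app.%d{yyyy-MM}.log</fileNamePattern>
      <!-- Keep the last 6 months of archives -->
      <maxHistory>6</maxHistory>
    </rollingPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```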

class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy": monitors the size of the currently active file and, once it exceeds the specified size, tells RollingFileAppender to roll over the active file. It has only one child node:

<maxFileSize>: the size limit for the active file. The default is 10MB.

<prudent>: when true, FixedWindowRollingPolicy is not supported. TimeBasedRollingPolicy is supported, with two restrictions: (1) file compression is neither supported nor allowed; (2) the <file> property must not be set and has to be left blank.

<triggeringPolicy>: tells RollingFileAppender when to trigger a rollover.
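A configuration that respects both prudent-mode restrictions might look like the sketch below. The file name pattern is an illustrative choice; the points to notice are the missing <file> element and the absence of a .gz or .zip suffix.

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <!-- Prudent mode: safe when several JVMs write to the same log file -->
    <prudent>true</prudent>
    <!-- No <file> element and no compression suffix, as prudent mode requires -->
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <fileNamePattern>logFile.%d{yyyy-MM-dd}.log</fileNamePattern>
      <maxHistory>30</maxHistory>
    </rollingPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```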

class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy": a rolling policy that renames files according to a fixed-window algorithm. It has the following child nodes:

<minIndex>: the minimum window index.

<maxIndex>: the maximum window index. If the user specifies a window that is too large, it is automatically reduced to 12 (newer Logback releases document a cap of 20).

<fileNamePattern>: must contain "%i". For example, with a minimum of 1, a maximum of 2 and the pattern mylog%i.log, the archive files mylog1.log and mylog2.log are produced. A compression option can also be specified, e.g. mylog%i.log.gz or mylog%i.log.zip.
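FixedWindowRollingPolicy only decides how files are renamed, so it is normally paired with a triggering policy such as SizeBasedTriggeringPolicy. The following is a minimal sketch of that pairing; the file names, the window of 1 to 3 and the 5MB limit are illustrative assumptions.

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <!-- FixedWindowRollingPolicy requires the active file to be set -->
    <file>mylog.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">
      <!-- %i is replaced by the window index; .zip enables compression -->
      <fileNamePattern>mylog%i.log.zip</fileNamePattern>
      <minIndex>1</minIndex>
      <maxIndex>3</maxIndex>
    </rollingPolicy>
    <!-- Roll over once the active file grows past 5MB -->
    <triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">
      <maxFileSize>5MB</maxFileSize>
    </triggeringPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

With these settings at most three archives exist at any time (mylog1.log.zip to mylog3.log.zip); the oldest one is discarded on each rollover.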

Example using TimeBasedRollingPolicy:

<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <fileNamePattern>logFile.%d{yyyy-MM-dd}.log</fileNamePattern>
      <maxHistory>30</maxHistory>
    </rollingPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>
  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>

The configuration above generates one log file per day and keeps 30 days of log files.

<!-- show parameters for Hibernate SQL (Hibernate-specific settings) -->

<logger name="org.hibernate.type.descriptor.sql.BasicBinder" level="TRACE" />

<logger name="org.hibernate.type.descriptor.sql.BasicExtractor" level="DEBUG" />

<logger name="org.hibernate.SQL" level="DEBUG" />

<logger name="org.hibernate.engine.QueryParameters" level="DEBUG" />

<logger name="org.hibernate.engine.query.HQLQueryPlan" level="DEBUG" />

<!-- MyBatis logging configuration -->

<logger name="org.apache.ibatis" level="TRACE"/>

<logger name="java.sql.Connection" level="DEBUG"/>

<logger name="java.sql.Statement" level="DEBUG"/>

<logger name="java.sql.PreparedStatement" level="DEBUG"/>
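The logger elements above only set levels, so their output still goes to whatever appenders the root logger uses. As a sketch of one possible refinement, a logger can be given its own appender and have additivity switched off so that its events are not also passed up to the root. The appender name SQL_FILE and the file sql.log are hypothetical.

```xml
<configuration>
  <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <!-- Hypothetical appender that receives only SQL statements -->
  <appender name="SQL_FILE" class="ch.qos.logback.core.FileAppender">
    <file>sql.log</file>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <!-- additivity="false" keeps these events out of the root logger's appenders -->
  <logger name="org.hibernate.SQL" level="DEBUG" additivity="false">
    <appender-ref ref="SQL_FILE" />
  </logger>

  <root level="INFO">
    <appender-ref ref="STDOUT" />
  </root>
</configuration>
```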

Reasons to replace log4j with Logback:

1. Faster implementation: Logback's internals were rewritten, which improves performance and reduces the memory footprint at initialization.
2. Thoroughly tested: Logback has been tested extensively at many different levels.
3. logback-classic implements SLF4J natively.
4. Extensive documentation.
5. logback-classic can automatically reload its configuration file when the file changes.
6. Lilith, a log event viewer, works with Logback and can handle large volumes of log data.
7. Prudent mode and graceful recovery from I/O failures.
8. Conditional processing in configuration files, so that one file can serve different environments.
9. Filters: when diagnosing a problem, filters let you print only the log events you need.
10. SiftingAppender: a very versatile appender that can split log files according to any given runtime attribute.
11. Automatic compression of rolled-over log files.
12. Stack traces include packaging data (the jar file and version each class came from).
13. Automatic removal of old log files.
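Several of these points map directly onto configuration elements. The sketch below illustrates three of them under assumed names and values: a ThresholdFilter on the console (point 9), a .gz suffix for automatic compression (point 11) and maxHistory for removing old files (point 13). The file name app.log and the WARN threshold are illustrative choices.

```xml
<configuration>
  <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <!-- Point 9: drop events below WARN for this appender only -->
    <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
      <level>WARN</level>
    </filter>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>app.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <!-- Point 11: the .gz suffix makes Logback compress each archive -->
      <fileNamePattern>app.%d{yyyy-MM-dd}.log.gz</fileNamePattern>
      <!-- Point 13: archives older than 30 days are removed -->
      <maxHistory>30</maxHistory>
    </rollingPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} - %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="STDOUT" />
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

Here the file receives everything from DEBUG upward, while the console shows only WARN and ERROR, without changing any logger levels.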

For more details, see: https://blog.csdn.net/zbajie001/article/details/79596109