In the United States there is a rather unusual supermarket story: a store put beer and diapers, two seemingly unrelated products, side by side, and sales of both went up. The supermarket in question is Wal-Mart.

Doesn't that seem a little odd? It turns out there is an explanation: American wives often asked their husbands to pick up diapers for the baby on the way home from work, and the husbands, having bought the diapers, would grab their favorite beer as well. But that is after-the-fact analysis. What we should really care about is how to discover association rules between items in a scenario like this. So let's look at how to use the Apriori algorithm to find association rules between items.

I. Overview of association analysis

Association analysis looks for potential relationships between items. Taking a supermarket as the example, finding such relationships takes two steps:

- Identify the sets of items that frequently occur together, which we call frequent itemsets. For example, a supermarket's frequent itemsets might be {{beer, diapers}, {eggs, milk}, {bananas, apples}}.

- Starting from the frequent itemsets, use an association rule algorithm to work out which items are associated with each other.

Put simply: first find the frequent itemsets, then derive the related items from them using association rules.

Why find the frequent itemsets first? Stay with the supermarket: the whole point of finding association rules is to increase sales. If few people buy an item in the first place, no amount of promotion will push its sales very high. So from the standpoint of both efficiency and value, it makes sense to look first for associations among the items people buy frequently.

Since finding association rules takes two steps, we'll take them one at a time. This article covers how Apriori finds frequent itemsets; the next one will build on those frequent itemsets to derive the association rules between items.

II. Basic concepts behind the Apriori algorithm

Before introducing the Apriori algorithm, we need to understand a few concepts. Don't worry, we'll explain them with the example below.

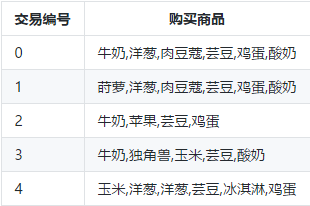

These are part of a purchase of goods inside the supermarket record:

2.1 Concepts used in association analysis

Support: support can be understood as how popular an item currently is. It is calculated as:

Support(A) = (number of records containing item A) / (total number of records)

In the supermarket records above there are five transactions in total. Milk appears in three of them, so the support of {milk} is 3/5. The support of {eggs} is 4/5. Milk and eggs appear together in two transactions, so the support of {milk, eggs} is 2/5.

Confidence: confidence tells us how likely someone who buys item A is to also buy item B. It is calculated as:

Confidence(A -> B) = (number of records containing both A and B) / (number of records containing A)

For example, we already know that milk and eggs were bought together 2 times, and that milk was bought 3 times. So Confidence(milk -> eggs) = 2/3.

Lift: lift measures how much selling one item raises the rate at which another item is sold. It is calculated as:

Lift(A -> B) = Confidence(A -> B) / Support(B)

For example, we just calculated Confidence(milk -> eggs) = 2/3, and the support of eggs is Support(eggs) = 4/5, so Lift(milk -> eggs) = (2/3) / (4/5) ≈ 0.83.

When Lift(A -> B) is greater than 1, the more A sells, the more B sells. A lift equal to 1 means there is no association between A and B. A lift less than 1 means that buying A reduces the sales of B.

Of these three, support is what Apriori itself relies on, while confidence and lift come into play later, when deriving association rules between items.
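The three measures can be checked with a few lines of Python. This is only a minimal sketch: the five transactions below are hypothetical stand-ins chosen to match the counts quoted above (milk in 3 records, eggs in 4, both together in 2), not the actual rows from the figure, and the function names are made up for illustration.

```python
from fractions import Fraction

# Hypothetical transactions, chosen only so that the counts match the text:
# milk appears in 3 of 5 records, eggs in 4, milk and eggs together in 2.
transactions = [
    {"milk", "eggs", "bread"},
    {"milk", "eggs"},
    {"milk", "bread"},
    {"eggs", "bread"},
    {"eggs"},
]

def support(itemset, records):
    """Fraction of records that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for record in records if itemset <= record)
    return Fraction(hits, len(records))

def confidence(a, b, records):
    """Of the records containing `a`, the fraction that also contain `b`."""
    return support(set(a) | set(b), records) / support(a, records)

def lift(a, b, records):
    """Confidence of a -> b relative to how common `b` is overall."""
    return confidence(a, b, records) / support(b, records)

print(support({"milk"}, transactions))               # 3/5
print(support({"milk", "eggs"}, transactions))       # 2/5
# Two of the three milk records also contain eggs.
print(confidence({"milk"}, {"eggs"}, transactions))  # 2/3
print(float(lift({"milk"}, {"eggs"}, transactions)))
```

Exact fractions are used here so the printed values line up with the hand calculation; ordinary floats would work just as well.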

2.2 Introducing the Apriori algorithm

Apriori's job is to find frequent itemsets based on the support of item combinations. From the above we know that the higher the support, the more popular the items. So how high does the support need to be? That is a subjective decision: we give Apriori a minimum support parameter, and it returns the frequent itemsets whose support is at least that minimum.

At this point you might think: since we know the formula for support, couldn't we just go through every combination and calculate its support directly?

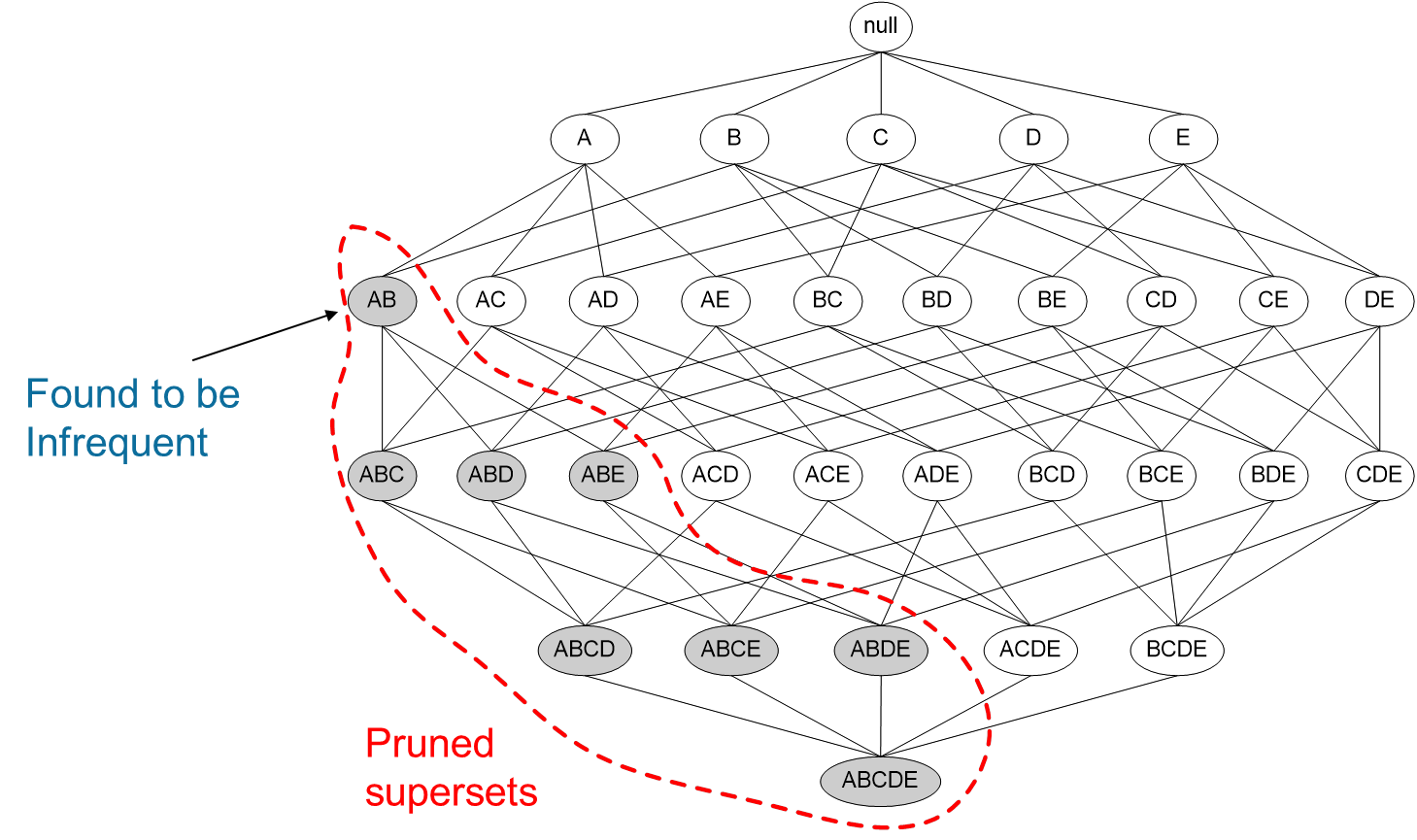

Yes, that's right. You can indeed find all frequent itemsets by traversing every combination. The problem is that there are too many combinations, so the traversal takes too long and is far too inefficient: with N items, you need to compute the support of 2^N - 1 combinations in total. Each additional item roughly doubles that number, so the work grows exponentially. Apriori is an efficient algorithm for finding frequent itemsets. Its core idea is the following sentence:

If an itemset is frequent, then all of its subsets are also frequent.

On its own this sentence doesn't look very useful, but turned around it is extremely useful:

If an itemset is not frequent, then all of its supersets are not frequent either.

As shown in the figure, once we find that the itemset {A, B} is not frequent, its supersets {A, B, C}, {A, B, D}, and so on are also infrequent, so we can skip them without computing their support.

Using this idea, the Apriori algorithm discards many infrequent itemsets up front, greatly reducing the amount of computation.
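To get a feel for how much this pruning saves, here is a rough sketch. It lists every non-empty combination of a small item list (the brute-force approach) and then counts how many of those combinations contain a pair already known to be infrequent. The items A to E and the choice of {A, B} as the infrequent pair are illustrative assumptions.

```python
from itertools import combinations

items = ["A", "B", "C", "D", "E"]  # hypothetical item list

# Brute force: every non-empty subset of the items is a candidate itemset.
all_itemsets = [
    frozenset(combo)
    for size in range(1, len(items) + 1)
    for combo in combinations(items, size)
]
print(len(all_itemsets))  # 2**5 - 1 = 31

# Suppose {A, B} turned out to be infrequent. Every superset of it must be
# infrequent too, so none of them needs its support computed.
infrequent = frozenset({"A", "B"})
skipped = [s for s in all_itemsets if infrequent < s]
print(len(skipped))  # 7 proper supersets of {A, B}
```

With only five items, a single infrequent pair already rules out 7 of the 31 candidates, and the saving grows quickly as the number of items increases.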

2.3 Apriori algorithm flow

To use the Apriori algorithm we need to provide two parameters: the dataset and the minimum support. We already know that Apriori works its way through combinations of items, but how exactly? The answer is level by level, with each round building on the previous one. First it goes over the single-item sets and weeds out those whose support is below the minimum support. Then it combines the remaining items into two-item sets and again removes the combinations that do not meet the minimum. This repeats with larger and larger combinations until no more items can be combined.
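The flow described above can be sketched in plain Python, written here as a loop over itemset sizes. This is a simplified illustration, not mlxtend's implementation: the function name `find_frequent_itemsets` and the tiny dataset are assumptions made for the example, and no attempt is made at efficiency.

```python
from itertools import combinations

def find_frequent_itemsets(transactions, min_support):
    """Level-wise search: return {itemset: support} for all frequent itemsets."""
    records = [frozenset(t) for t in transactions]
    n = len(records)

    def support(itemset):
        return sum(1 for r in records if itemset <= r) / n

    # Level 1: single items that meet the minimum support.
    singles = {frozenset([item]) for r in records for item in r}
    current = {s: support(s) for s in singles}
    current = {s: v for s, v in current.items() if v >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        # Join step: combine frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: drop candidates that have any infrequent (k-1)-subset.
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k - 1))
        }
        # Count step: keep only the candidates that meet the minimum support.
        current = {c: support(c) for c in candidates}
        current = {c: v for c, v in current.items() if v >= min_support}
        frequent.update(current)
        k += 1
    return frequent

# Hypothetical transactions for illustration.
data = [
    ["milk", "eggs", "bread"],
    ["milk", "eggs"],
    ["milk", "bread"],
    ["eggs", "bread"],
    ["eggs"],
]
result = find_frequent_itemsets(data, min_support=0.4)
for itemset, value in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), value)
```

The prune step is where the superset rule pays off: a candidate is only counted against the data if every one of its smaller subsets survived the previous round.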

Now let's put the Apriori algorithm into practice.

III. Apriori in practice

Let's try Apriori on a simple example. The library used here is mlxtend.

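Before showing the code, let's go over the parameters of the apriori function.

def apriori(df, min_support=0.5,
            use_colnames=False,
            max_len=None)

The parameters are:

df: needless to say, this is our dataset.

min_support: the given minimum support.

use_colnames: defaults to False, in which case the returned item combinations are shown as column indices; if True, the item names are shown directly.

max_len: the maximum number of items in a combination. The default is None, meaning no limit. If you only need combinations of up to two items, set this to 2.

OK, next let's use a simple example to see how to find frequent itemsets with the Apriori algorithm.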

import pandas as pd
from mlxtend.preprocessing import TransactionEncoder
from mlxtend.frequent_patterns import apriori

# Set up the dataset: one list of purchased items per transaction
dataset = [['Milk', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'],
           ['Dill', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'],
           ['Milk', 'Apple', 'Kidney Beans', 'Eggs'],
           ['Milk', 'Unicorn', 'Corn', 'Kidney Beans', 'Yogurt'],
           ['Corn', 'Onion', 'Onion', 'Kidney Beans', 'Ice cream', 'Eggs']]

te = TransactionEncoder()

# One-hot encode the transactions into a boolean array
te_ary = te.fit(dataset).transform(dataset)
df = pd.DataFrame(te_ary, columns=te.columns_)

# Use Apriori to find the frequent itemsets
freq = apriori(df, min_support=0.6, use_colnames=True)

First, the items need to be one-hot encoded, with each value represented as a boolean. Intuitively, one-hot encoding uses as many bits as there are states, with a bit set to one only where the state applies and zero everywhere else. Here that means one column per item and one row per transaction. For example, ice cream appears only in the last of the five transactions and in none of the others, so the Ice cream column can be written as [0, 0, 0, 0, 1].

The encoded data looks like this:

   Apple   Corn   Dill   Eggs  Ice cream  Kidney Beans   Milk  Nutmeg  Onion  Unicorn  Yogurt
0  False  False  False   True      False          True   True    True   True    False    True
1  False  False   True   True      False          True  False    True   True    False    True
2   True  False  False   True      False          True   True   False  False    False   False
3  False   True  False  False      False          True   True   False  False     True    True
4  False   True  False   True       True          True  False   False   True    False   False

We set the minimum support to 0.6, so only item combinations with a support of at least 0.6 count as frequent itemsets. The final result is:

    support                     itemsets
0       0.8                       (Eggs)
1       1.0               (Kidney Beans)
2       0.6                       (Milk)
3       0.6                      (Onion)
4       0.6                     (Yogurt)
5       0.8         (Eggs, Kidney Beans)
6       0.6                (Eggs, Onion)
7       0.6         (Kidney Beans, Milk)
8       0.6        (Kidney Beans, Onion)
9       0.6       (Kidney Beans, Yogurt)
10      0.6  (Eggs, Kidney Beans, Onion)

IV. Summary

Today we introduced the concepts used in association analysis: support, confidence, and lift. We then covered what the Apriori algorithm does and how it finds frequent itemsets efficiently.

Finally, we worked through an example of using Apriori to find frequent itemsets.

That's all for today ~
