Google has launched the EfficientNet-EdgeTPU algorithm to speed up AI performance on edge devices. EfficientNet-EdgeTPU is optimized for the Edge TPU tensor processor found on boards such as the Coral Dev Board and Tinker Edge T, and can improve neural network computation performance by up to 10 times. For edge devices with limited energy and computing budgets, this is an important breakthrough that opens up more application possibilities.

Making up for the slowdown of Moore's Law through AI

Moore's Law was proposed by Intel co-founder Gordon Moore, who predicted that the number of transistors an integrated circuit can hold would double every two years. Over the past few decades, the development of computers has followed this law quite closely.

Google noted on its official AI research blog that as semiconductor manufacturing processes become more advanced, shrinking transistors further is harder than ever. As a result, the information industry is gradually shifting its focus to hardware acceleration for specific application domains in order to keep driving progress.

The same trend is visible in AI and machine learning, where many R&D teams are working on accelerators for neural networks (NN). Ironically, even though neural network accelerators are increasingly common in both data centers and edge devices, algorithms are rarely designed and optimized specifically for this hardware.

To solve this problem, Google published EfficientNet-EdgeTPU, a family of image classification models. As the name suggests, it is derived from Google's own open-source EfficientNets models and optimized for the Edge TPU, so that edge devices can run AI workloads faster.

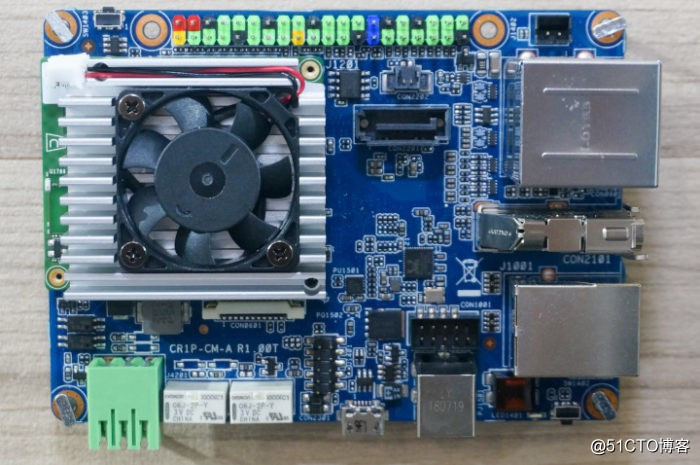

▲ The Tinker Edge T development board launched by ASUS is also equipped with an Edge TPU.

Optimized for Edge TPU

To optimize EfficientNets, Google's R&D team used the AutoML MNAS framework and adjusted the neural network search space to suit the Edge TPU. The team also integrated a latency prediction module so that the time a candidate model takes to run on the Edge TPU can be estimated during the search.
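
To make the idea of a latency-aware search more concrete, here is a minimal sketch of how predicted latency can be folded into a search objective. It assumes the soft-constraint reward described in the MnasNet paper, accuracy × (latency / target)^w with w = -0.07; whether EfficientNet-EdgeTPU used exactly this form is an assumption. The function name, the candidate list, and every number in it are hypothetical and only illustrate the mechanics.

```python
def latency_aware_reward(accuracy, latency_ms, target_ms, w=-0.07):
    """Scale accuracy by a soft latency penalty (MnasNet-style, assumed here).

    Because w is negative, models slower than the target score below their
    accuracy, while models faster than the target get a small bonus.
    """
    return accuracy * (latency_ms / target_ms) ** w


# Hypothetical candidates: in a real search the latency values would come
# from a latency predictor, not from hand-written numbers like these.
candidates = [
    {"name": "candidate_a", "accuracy": 0.75, "latency_ms": 4.0},
    {"name": "candidate_b", "accuracy": 0.77, "latency_ms": 10.0},
    {"name": "candidate_c", "accuracy": 0.70, "latency_ms": 2.0},
]

TARGET_MS = 5.0  # hypothetical latency budget

# Pick the candidate with the best accuracy/latency trade-off.
best = max(
    candidates,
    key=lambda c: latency_aware_reward(c["accuracy"], c["latency_ms"], TARGET_MS),
)
print(best["name"])
```

With these made-up numbers the most accurate candidate is not selected, because its predicted latency is twice the budget.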

In terms of operations, EfficientNets rely mainly on depthwise-separable convolutions. These reduce the amount of computation, but their structure is not well suited to the Edge TPU. EfficientNet-EdgeTPU therefore shifts to regular convolutions: the amount of computation increases, but overall computing performance is better.
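
The difference in computation between the two kinds of convolution can be illustrated with a quick count of multiply-accumulate operations (MACs). This is a rough sketch using the standard textbook formulas, assuming stride 1 and "same" padding; the layer shape below is hypothetical and not taken from EfficientNet-EdgeTPU.

```python
def regular_conv_macs(h, w, c_in, c_out, k):
    """MACs for one k x k regular convolution over an h x w feature map."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k):
    """MACs for a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 56 x 56 feature map, 64 -> 128 channels, 3 x 3 kernel.
h, w, c_in, c_out, k = 56, 56, 64, 128, 3

regular = regular_conv_macs(h, w, c_in, c_out, k)
separable = depthwise_separable_macs(h, w, c_in, c_out, k)
ratio = regular / separable

print(f"regular:   {regular:,} MACs")
print(f"separable: {separable:,} MACs")
print(f"regular / separable: {ratio:.2f}x")
```

For this layer the regular convolution needs roughly eight times as many MACs. A lower operation count does not automatically mean lower latency, though: what matters is how well the accelerator can keep its compute units busy with a given operation, which is the trade-off the paragraph above describes.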

In validation tests, EfficientNet-EdgeTPU-S serves as the base model, while the -M and -L models are produced by compound scaling, which also adjusts the input image to an optimal resolution. They are larger and more accurate models that trade higher latency for higher accuracy. According to the reported results, all three models deliver strong performance and accuracy: they run significantly faster than ResNet-50 and are much more accurate than MobileNet V2.