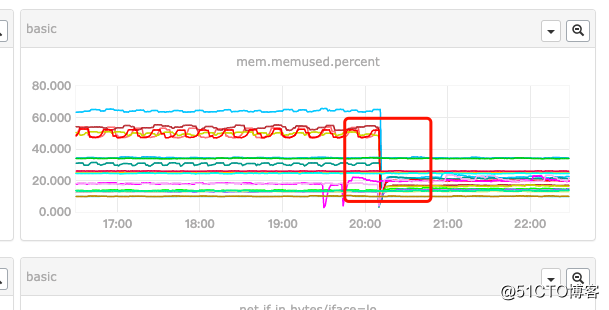

Our company's monitoring system is based mainly on Xiaomi's open-source Open-Falcon. With about a million physical machines, plus virtual machines and containers, storage and performance are serious challenges for the monitoring system. We chose OpenTSDB as the underlying storage layer and developed an internal tsdb-proxy module that writes data to Kafka and OpenTSDB. Soon after it went live, we found that memory usage on the servers stayed high, and adding machines did not improve it significantly, so we turned to optimizing the code. Before looking at the code, take a look at the related monitoring data.

The graph shows memory usage on the falcon tsdb-proxy cluster machines; the drop toward the end is where we rolled out the upgraded version. On most 4C8G machines (4 cores, 8 GB of RAM), memory usage fell from over 50% to a little over 20%, a clear improvement.
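Taking the rounded percentages at face value (the graph only supports "over 50%" and "a little over 20%"), the memory freed per 4C8G machine is roughly:

$$
8\ \text{GB} \times (0.50 - 0.20) = 8\ \text{GB} \times 0.30 = 2.4\ \text{GB}
$$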

The first version below is the code that was running in production. Its two functions, convert2TsdbItem and convert2KafkaItem, each perform the secondary calculation of the monitoring value (the per-second rate for COUNTER and DERIVE items) and assemble the result into a TSDB or a Kafka struct. All of this happens in memory, and the same value is calculated twice for every data point, so memory usage is high.

```go
type Tsdb int

func (this *Tsdb) Send(items []*cmodel.MetaData, resp *cmodel.SimpleRpcResponse) error {
	go handleItems(items)
	return nil
}

// HandleItems is the interface for external callers to process received data.
func HandleItems(items []*cmodel.MetaData) error {
	handleItems(items)
	return nil
}

func handleItems(items []*cmodel.MetaData) {
	if items == nil {
		return
	}

	count := len(items)
	if count == 0 {
		return
	}

	cfg := g.Config()

	for i := 0; i < count; i++ {
		if items[i] == nil {
			continue
		}

		endpoint := items[i].Endpoint
		if !g.IsValidString(endpoint) {
			if cfg.Debug {
				log.Printf("invalid endpoint: %s", endpoint)
			}
			pfc.Meter("invalidEnpoint", 1)
			continue
		}

		counter := cutils.Counter(items[i].Metric, items[i].Tags)
		if !g.IsValidString(counter) {
			if cfg.Debug {
				log.Printf("invalid counter: %s/%s", endpoint, counter)
			}
			pfc.Meter("invalidCounter", 1)
			continue
		}

		dsType := items[i].CounterType
		step := items[i].Step
		checksum := items[i].Checksum()
		key := g.FormRrdCacheKey(checksum, dsType, step)

		// statistics
		proc.TsdbRpcRecvCnt.Incr()

		// To tsdb
		//first := store.TsdbItems.First(key)
		first := store.GetLastItem(key)
		// Skip points that are not newer than the last one stored for this
		// series; the nil check comes first so a new series is not dereferenced.
		if first != nil && items[i].Timestamp <= first.Timestamp {
			continue
		}

		// Each converter computes the value on its own.
		tsdbItem, ok := convert2TsdbItem(first, items[i])
		if ok {
			isSuccess := sender.TsdbQueue.PushFront(tsdbItem)
			if !isSuccess {
				proc.SendToTsdbDropCnt.Incr()
			}
		}

		kafkaItem, ok := convert2KafkaItem(first, items[i])
		if ok {
			isSuccess := sender.KafkaQueue.PushFront(kafkaItem)
			if !isSuccess {
				proc.SendToKafkaDropCnt.Incr()
			}
		}

		//store.TsdbItems.PushFront(key, items[i], checksum, cfg)

		// To Index
		index.ReceiveItem(items[i], checksum)

		// To History
		store.AddItem(key, items[i])
	}
}

// Delete removes counters from the in-memory index and MySQL, and deletes the corresponding rrd files from disk.
func (this *Tsdb) Delete(params []*cmodel.GraphDeleteParam, resp *cmodel.GraphDeleteResp) error {
	resp = &cmodel.GraphDeleteResp{}
	for _, param := range params {
		err, tags := cutils.SplitTagsString(param.Tags)
		if err != nil {
			log.Error("invalid tags:", param.Tags, "error:", err)
			continue
		}

		var item *cmodel.MetaData = &cmodel.MetaData{
			Endpoint:    param.Endpoint,
			Metric:      param.Metric,
			Tags:        tags,
			CounterType: param.DsType,
			Step:        int64(param.Step),
		}
		index.RemoveItem(item)
	}
	return nil
}

func convert2TsdbItem(f *cmodel.MetaData, d *cmodel.MetaData) (*cmodel.TsdbItem, bool) {
	t := cmodel.TsdbItem{Tags: make(map[string]string)}
	for k, v := range d.Tags {
		t.Tags[k] = v
	}

	// Enrich the tags with host metadata when the endpoint is known.
	host, exists := store.Hosts.Get(d.Endpoint)
	if exists {
		if host.AppName != "" {
			t.Tags["app_name"] = host.AppName
		}
		if host.GrpName != "" {
			t.Tags["grp_name"] = host.GrpName
		}
		if host.Room != "" {
			t.Tags["room"] = host.Room
		}
		if host.Env != "" {
			t.Tags["env"] = host.Env
		}
	}

	t.Tags["endpoint"] = d.Endpoint
	t.Metric = d.Metric
	t.Timestamp = d.Timestamp

	// COUNTER: per-second rate over one step, rounded to three decimals.
	// Dropped when there is no previous point, the value decreased,
	// or the gap since the previous point exceeds 1.5 steps.
	if d.CounterType == g.COUNTER {
		if f == nil {
			return &t, false
		}
		if f.Endpoint == "" {
			return &t, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return &t, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return &t, false
		}
		fv := float64(value) / float64(d.Step)
		t.Value = math.Floor(fv*1e3+0.5) * 1e-3
		return &t, true
	}

	// DERIVE: same checks and arithmetic as COUNTER.
	if d.CounterType == g.DERIVE {
		if f == nil {
			return &t, false
		}
		if f.Endpoint == "" {
			return &t, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return &t, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return &t, false
		}
		fv := float64(value) / float64(d.Step)
		t.Value = math.Floor(fv*1e3+0.5) * 1e-3
		return &t, true
	}

	// GAUGE and other types pass the raw value through.
	t.Value = d.Value
	return &t, true
}

func convert2KafkaItem(f *cmodel.MetaData, d *cmodel.MetaData) (*cmodel.KafkaItem, bool) {
	t := cmodel.KafkaItem{Tags: make(map[string]string)}
	for k, v := range d.Tags {
		t.Tags[k] = v
	}

	host, exists := store.Hosts.Get(d.Endpoint)
	if exists {
		t.AppName = host.AppName
		t.GrpName = host.GrpName
		t.Room = host.Room
		t.Env = host.Env
	}

	t.Endpoint = d.Endpoint
	t.Metric = d.Metric
	t.Timestamp = d.Timestamp
	t.Step = d.Step

	// The value calculation below repeats the one in convert2TsdbItem.
	if d.CounterType == g.COUNTER {
		if f == nil {
			return &t, false
		}
		if f.Endpoint == "" {
			return &t, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return &t, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return &t, false
		}
		fv := float64(value) / float64(d.Step)
		t.Value = math.Floor(fv*1e3+0.5) * 1e-3
		return &t, true
	}

	if d.CounterType == g.DERIVE {
		if f == nil {
			return &t, false
		}
		if f.Endpoint == "" {
			return &t, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return &t, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return &t, false
		}
		fv := float64(value) / float64(d.Step)
		t.Value = math.Floor(fv*1e3+0.5) * 1e-3
		return &t, true
	}

	t.Value = d.Value
	return &t, true
}
```

The second version is the optimized one. The idea is to compute the value once in advance, in computerValue, and have convert2TsdbItem and convert2KafkaItem simply assign it, since both use the same value.

```go
type Tsdb int

func (this *Tsdb) Send(items []*cmodel.MetaData, resp *cmodel.SimpleRpcResponse) error {
	go handleItems(items)
	return nil
}

// HandleItems is the interface for external callers to process received data.
func HandleItems(items []*cmodel.MetaData) error {
	handleItems(items)
	return nil
}

func handleItems(items []*cmodel.MetaData) {
	if items == nil {
		return
	}

	count := len(items)
	if count == 0 {
		return
	}

	cfg := g.Config()

	for i := 0; i < count; i++ {
		if items[i] == nil {
			continue
		}

		endpoint := items[i].Endpoint
		if !g.IsValidString(endpoint) {
			if cfg.Debug {
				log.Printf("invalid endpoint: %s", endpoint)
			}
			pfc.Meter("invalidEnpoint", 1)
			continue
		}

		counter := cutils.Counter(items[i].Metric, items[i].Tags)
		if !g.IsValidString(counter) {
			if cfg.Debug {
				log.Printf("invalid counter: %s/%s", endpoint, counter)
			}
			pfc.Meter("invalidCounter", 1)
			continue
		}

		dsType := items[i].CounterType
		step := items[i].Step
		checksum := items[i].Checksum()
		key := g.FormRrdCacheKey(checksum, dsType, step)

		// statistics
		proc.TsdbRpcRecvCnt.Incr()

		// To tsdb
		//first := store.TsdbItems.First(key)
		first := store.GetLastItem(key)
		// Skip points that are not newer than the last one stored for this
		// series; the nil check comes first so a new series is not dereferenced.
		if first != nil && items[i].Timestamp <= first.Timestamp {
			continue
		}

		// Compute the value once; both structs reuse it.
		var value float64
		if items[i].CounterType == g.GAUGE {
			value = items[i].Value
		} else {
			v, ok := computerValue(first, items[i])
			if !ok {
				// Skip only this item, not the rest of the batch.
				continue
			}
			value = v
		}

		// Assemble the TSDB struct
		tsdbItem, ok := convert2TsdbItem(value, items[i])
		if ok {
			isSuccess := sender.TsdbQueue.PushFront(tsdbItem)
			if !isSuccess {
				proc.SendToTsdbDropCnt.Incr()
			}
		}

		// Assemble the Kafka struct
		kafkaItem, ok := convert2KafkaItem(value, items[i])
		if ok {
			isSuccess := sender.KafkaQueue.PushFront(kafkaItem)
			if !isSuccess {
				proc.SendToKafkaDropCnt.Incr()
			}
		}

		//store.TsdbItems.PushFront(key, items[i], checksum, cfg)

		// To Index
		index.ReceiveItem(items[i], checksum)

		// To History
		store.AddItem(key, items[i])
	}
}

// Delete removes counters from the in-memory index and MySQL, and deletes the corresponding rrd files from disk.
func (this *Tsdb) Delete(params []*cmodel.GraphDeleteParam, resp *cmodel.GraphDeleteResp) error {
	resp = &cmodel.GraphDeleteResp{}
	for _, param := range params {
		err, tags := cutils.SplitTagsString(param.Tags)
		if err != nil {
			log.Error("invalid tags:", param.Tags, "error:", err)
			continue
		}

		var item *cmodel.MetaData = &cmodel.MetaData{
			Endpoint:    param.Endpoint,
			Metric:      param.Metric,
			Tags:        tags,
			CounterType: param.DsType,
			Step:        int64(param.Step),
		}
		index.RemoveItem(item)
	}
	return nil
}

// computerValue computes the value and returns it; the TSDB and Kafka
// structs are then assembled from the result.
func computerValue(f *cmodel.MetaData, d *cmodel.MetaData) (float64, bool) {
	resultvalue := 0.0

	// COUNTER: per-second rate over one step, rounded to three decimals.
	if d.CounterType == g.COUNTER {
		if f == nil {
			return resultvalue, false
		}
		if f.Endpoint == "" {
			return resultvalue, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return resultvalue, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return resultvalue, false
		}
		fv := float64(value) / float64(d.Step)
		resultvalue = math.Floor(fv*1e3+0.5) * 1e-3
		return resultvalue, true
	}

	// DERIVE: same checks and arithmetic as COUNTER.
	if d.CounterType == g.DERIVE {
		if f == nil {
			return resultvalue, false
		}
		if f.Endpoint == "" {
			return resultvalue, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return resultvalue, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return resultvalue, false
		}
		fv := float64(value) / float64(d.Step)
		resultvalue = math.Floor(fv*1e3+0.5) * 1e-3
		return resultvalue, true
	}

	// GAUGE and other types pass the raw value through.
	resultvalue = d.Value
	return resultvalue, true
}

func convert2TsdbItem(resultvalue float64, d *cmodel.MetaData) (*cmodel.TsdbItem, bool) {
	t := cmodel.TsdbItem{Tags: make(map[string]string)}
	for k, v := range d.Tags {
		t.Tags[k] = v
	}

	host, exists := store.Hosts.Get(d.Endpoint)
	if exists {
		if host.AppName != "" {
			t.Tags["app_name"] = host.AppName
		}
		if host.GrpName != "" {
			t.Tags["grp_name"] = host.GrpName
		}
		if host.Room != "" {
			t.Tags["room"] = host.Room
		}
		if host.Env != "" {
			t.Tags["env"] = host.Env
		}
	}

	t.Tags["endpoint"] = d.Endpoint
	t.Metric = d.Metric
	t.Timestamp = d.Timestamp
	t.Value = resultvalue
	return &t, true
}

func convert2KafkaItem(resultvalue float64, d *cmodel.MetaData) (*cmodel.KafkaItem, bool) {
	t := cmodel.KafkaItem{Tags: make(map[string]string)}
	for k, v := range d.Tags {
		t.Tags[k] = v
	}

	host, exists := store.Hosts.Get(d.Endpoint)
	if exists {
		t.AppName = host.AppName
		t.GrpName = host.GrpName
		t.Room = host.Room
		t.Env = host.Env
	}

	t.Endpoint = d.Endpoint
	t.Metric = d.Metric
	t.Timestamp = d.Timestamp
	t.Step = d.Step
	t.Value = resultvalue
	return &t, true
}
```
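The second version's idea, compute once and assign twice, can be shown in isolation. This is a minimal sketch with hypothetical, heavily simplified types: `MetaData`, `TsdbItem` and `KafkaItem` here are not the real cmodel structs, and `computeValue` is a placeholder for computerValue that just returns the raw value. A package-level counter records how many times the calculation runs.

```go
package main

// Hypothetical, simplified stand-ins for cmodel.MetaData, cmodel.TsdbItem
// and cmodel.KafkaItem; only a few fields are kept.
type MetaData struct {
	Endpoint string
	Metric   string
	Value    float64
}

type TsdbItem struct {
	Metric string
	Value  float64
	Tags   map[string]string
}

type KafkaItem struct {
	Endpoint string
	Metric   string
	Value    float64
}

// computeCalls counts how often the value calculation runs.
var computeCalls int

// computeValue is a placeholder for computerValue (the real one derives a
// rate for COUNTER/DERIVE items); here it simply returns the raw value.
func computeValue(d *MetaData) float64 {
	computeCalls++
	return d.Value
}

// The converters only copy fields and assign the precomputed value.
func toTsdb(v float64, d *MetaData) *TsdbItem {
	return &TsdbItem{Metric: d.Metric, Value: v, Tags: map[string]string{"endpoint": d.Endpoint}}
}

func toKafka(v float64, d *MetaData) *KafkaItem {
	return &KafkaItem{Endpoint: d.Endpoint, Metric: d.Metric, Value: v}
}

// handle computes the value once per data point and reuses it for both outputs.
func handle(d *MetaData) (*TsdbItem, *KafkaItem) {
	v := computeValue(d)
	return toTsdb(v, d), toKafka(v, d)
}
```

Because both outputs receive the same `v`, they cannot disagree, and any future change to the calculation only has to be made in one place.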

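The third version is the code currently running in production. It drops the two converter functions and builds both structs directly inside handleItems, using a single host lookup.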

```go
type Tsdb int

func (this *Tsdb) Send(items []*cmodel.MetaData, resp *cmodel.SimpleRpcResponse) error {
	go handleItems(items)
	return nil
}

// HandleItems is the interface for external callers to process received data.
func HandleItems(items []*cmodel.MetaData) error {
	handleItems(items)
	return nil
}

func handleItems(items []*cmodel.MetaData) {
	if items == nil {
		return
	}

	count := len(items)
	if count == 0 {
		return
	}

	cfg := g.Config()

	for i := 0; i < count; i++ {
		if items[i] == nil {
			continue
		}

		endpoint := items[i].Endpoint
		if !g.IsValidString(endpoint) {
			if cfg.Debug {
				log.Printf("invalid endpoint: %s", endpoint)
			}
			pfc.Meter("invalidEnpoint", 1)
			continue
		}

		counter := cutils.Counter(items[i].Metric, items[i].Tags)
		if !g.IsValidString(counter) {
			if cfg.Debug {
				log.Printf("invalid counter: %s/%s", endpoint, counter)
			}
			pfc.Meter("invalidCounter", 1)
			continue
		}

		dsType := items[i].CounterType
		step := items[i].Step
		checksum := items[i].Checksum()
		key := g.FormRrdCacheKey(checksum, dsType, step)

		// statistics
		proc.TsdbRpcRecvCnt.Incr()

		// To tsdb
		//first := store.TsdbItems.First(key)
		first := store.GetLastItem(key)
		// Skip points that are not newer than the last one stored for this
		// series; the nil check comes first so a new series is not dereferenced.
		if first != nil && items[i].Timestamp <= first.Timestamp {
			continue
		}

		// Compute the value once; both structs reuse it.
		value, ok := computerValue(first, items[i])
		if !ok {
			continue
		}

		tsdbItem := &cmodel.TsdbItem{
			Metric:    items[i].Metric,
			Value:     value,
			Timestamp: items[i].Timestamp,
			Tags:      make(map[string]string),
		}
		tsdbItem.Tags["endpoint"] = items[i].Endpoint
		for k, v := range items[i].Tags {
			tsdbItem.Tags[k] = v
		}

		kafkaItem := &cmodel.KafkaItem{
			Metric:    items[i].Metric,
			Value:     value,
			Timestamp: items[i].Timestamp,
			Step:      items[i].Step,
			Endpoint:  items[i].Endpoint,
		}

		// A single host lookup feeds both structs.
		host, exists := store.Hosts.Get(items[i].Endpoint)
		if exists {
			kafkaItem.AppName = host.AppName
			kafkaItem.GrpName = host.GrpName
			kafkaItem.Room = host.Room
			kafkaItem.Env = host.Env
			if host.AppName != "" {
				tsdbItem.Tags["app_name"] = host.AppName
			}
			if host.GrpName != "" {
				tsdbItem.Tags["grp_name"] = host.GrpName
			}
			if host.Room != "" {
				tsdbItem.Tags["room"] = host.Room
			}
			if host.Env != "" {
				tsdbItem.Tags["env"] = host.Env
			}
		}

		isSuccess := sender.TsdbQueue.PushFront(tsdbItem)
		if !isSuccess {
			proc.SendToTsdbDropCnt.Incr()
		}

		isSuccess = sender.KafkaQueue.PushFront(kafkaItem)
		if !isSuccess {
			proc.SendToKafkaDropCnt.Incr()
		}

		//store.TsdbItems.PushFront(key, items[i], checksum, cfg)

		// To Index
		index.ReceiveItem(items[i], checksum)

		// To History
		store.AddItem(key, items[i])
	}
}

// Delete removes counters from the in-memory index and MySQL, and deletes the corresponding rrd files from disk.
func (this *Tsdb) Delete(params []*cmodel.GraphDeleteParam, resp *cmodel.GraphDeleteResp) error {
	resp = &cmodel.GraphDeleteResp{}
	for _, param := range params {
		err, tags := cutils.SplitTagsString(param.Tags)
		if err != nil {
			log.Error("invalid tags:", param.Tags, "error:", err)
			continue
		}

		var item *cmodel.MetaData = &cmodel.MetaData{
			Endpoint:    param.Endpoint,
			Metric:      param.Metric,
			Tags:        tags,
			CounterType: param.DsType,
			Step:        int64(param.Step),
		}
		index.RemoveItem(item)
	}
	return nil
}

// computerValue computes the value and returns it; the TSDB and Kafka
// structs are then assembled from the result.
func computerValue(f *cmodel.MetaData, d *cmodel.MetaData) (float64, bool) {
	resultvalue := 0.0

	// COUNTER: per-second rate over one step, rounded to three decimals.
	if d.CounterType == g.COUNTER {
		if f == nil {
			return resultvalue, false
		}
		if f.Endpoint == "" {
			return resultvalue, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return resultvalue, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return resultvalue, false
		}
		fv := float64(value) / float64(d.Step)
		resultvalue = math.Floor(fv*1e3+0.5) * 1e-3
		return resultvalue, true
	}

	// DERIVE: same checks and arithmetic as COUNTER.
	if d.CounterType == g.DERIVE {
		if f == nil {
			return resultvalue, false
		}
		if f.Endpoint == "" {
			return resultvalue, false
		}
		if d.Timestamp-f.Timestamp > d.Step+d.Step/2 {
			return resultvalue, false
		}
		value := d.Value - f.Value
		if value < 0 {
			return resultvalue, false
		}
		fv := float64(value) / float64(d.Step)
		resultvalue = math.Floor(fv*1e3+0.5) * 1e-3
		return resultvalue, true
	}

	// GAUGE and other types pass the raw value through.
	resultvalue = d.Value
	return resultvalue, true
}
```
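Whether a refactor like this reduces memory can be checked before deployment by counting heap allocations per data point. The sketch below shows one way to do that with `testing.AllocsPerRun` from Go's standard library. All types and functions in it are hypothetical simplifications. The two variants differ only in tag maps, mirroring the fact that the first two versions build a separate tag map for the Kafka item while the third version builds its `KafkaItem` without one. It is a measuring aid, not a profile of the real service.

```go
package main

import "testing"

// Hypothetical, simplified output types; only the tag map matters here.
type tsdbItem struct {
	Value float64
	Tags  map[string]string
}

type kafkaItem struct {
	Value float64
	Tags  map[string]string
}

// Package-level sinks force the items onto the heap, as they would be
// when pushed onto the sender queues.
var sinkTsdb *tsdbItem
var sinkKafka *kafkaItem

func copyTags(src map[string]string) map[string]string {
	m := make(map[string]string, len(src)+1)
	for k, v := range src {
		m[k] = v
	}
	return m
}

// buildCopying gives each output its own copy of the tags.
func buildCopying(tags map[string]string, v float64) {
	sinkTsdb = &tsdbItem{Value: v, Tags: copyTags(tags)}
	sinkKafka = &kafkaItem{Value: v, Tags: copyTags(tags)}
}

// buildSingleCopy copies the tags only for the TSDB item.
func buildSingleCopy(tags map[string]string, v float64) {
	sinkTsdb = &tsdbItem{Value: v, Tags: copyTags(tags)}
	sinkKafka = &kafkaItem{Value: v}
}

// allocsPerItem reports the average number of heap allocations per call.
func allocsPerItem(build func(map[string]string, float64)) float64 {
	tags := map[string]string{"cpu": "0", "mode": "idle"}
	return testing.AllocsPerRun(1000, func() { build(tags, 1.5) })
}
```

Pointing the same harness at the real conversion code, with a realistic tag set, would give the per-item allocation count of each version.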