Pen tip and cap detection 1: Pen tip and cap detection data set (including download link)

Table of contents


2. Handwriting detection data set

(4) Hand-Pen-voc handwriting detection data set

(5) Visualization effect of handwritten target frame

3. Pen tip and cap key point detection data set

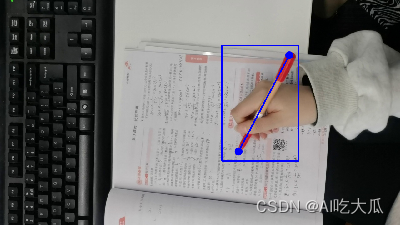

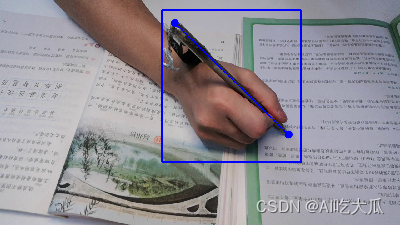

(2) Visualization effect of key points of pen tip and cap

5. Pen tip and cap key point detection (Python/C++/Android)

6. Special Edition: Pen Tip Fingertip Detection

1. Introduction

In AI-based smart education, one popular product feature is fingertip reading or pen tip reading. Its core algorithm obtains the position of the pen tip or fingertip with a deep learning method, recognizes the text through OCR, and finally converts the text into speech (TTS). OCR and TTS algorithms are already very mature, and there are also some open source projects for fingertip or pen tip detection that can serve as a reference. This project implements a pen tip and cap key point detection algorithm: the YOLOv5 model is used for hand detection (detection of the hand holding a pen), and the HRNet, LiteHRNet and Mobilenet-v2 models are used for pen tip and cap key point detection. The project is divided into several chapters, covering data annotation, model training and Android deployment. This article, part of the "Pen tip and cap detection" series, describes the pen tip and cap detection data sets.

The project collected a handwriting detection data set and a pen tip and cap key point detection data set:

- Hand-Pen Detection Dataset: four data sets were collected, Hand-voc1, Hand-voc2, Hand-voc3 and Hand-Pen-voc, containing about 70,000 images in total; the annotation format is uniformly converted to VOC format, the hand target box is labeled hand, and the target box of a hand holding a pen is labeled hand_pen; the data can be used to develop deep learning hand detection models.

- Pen-tip Keypoints Dataset: one data set was collected, dataset-pen2, which annotates the hand_pen target area and both ends of the pen (pen tip and pen cap); it is divided into a test set (Test) with 1075 pictures and a training set (Train) with 28603 pictures; the annotation format is uniformly converted to COCO format, and the data can be used to train deep learning pen tip and cap key point detection models.

- Data collection and annotation are very complicated and time-consuming work. Please respect the fruits of my labor.

[Please respect originality and indicate the source when reprinting] https://blog.csdn.net/guyuealian/article/details/134070255

Android pen tip and cap key point detection APP demo:

https://download.csdn.net/download/guyuealian/88535143

For more articles in the series "Pen tip and cap detection" please refer to:

- Pen tip and cap detection 1: Pen tip and cap detection data set (including download link) https://blog.csdn.net/guyuealian/article/details/134070255

- Pen tip and cap detection 2: Pytorch implements pen tip and cap detection algorithm (including training code and data set) https://blog.csdn.net/guyuealian/article/details/134070483

- Pen tip and cap detection 3: Android implements pen tip and cap detection algorithm (source code included, supports real-time detection) https://blog.csdn.net/guyuealian/article/details/134070497

- Pen tip and cap detection 4: C++ implements pen tip and cap detection algorithm (source code included, supports real-time detection) https://blog.csdn.net/guyuealian/article/details/134070516

2. Handwriting detection data set

The project has collected four Hand-Pen Detection Datasets: Hand-voc1, Hand-voc2, Hand-voc3 and Hand-Pen-voc, about 70,000 pictures in total.

(1) Hand-voc1

The Hand-voc1 hand detection data set comes from foreign open source data sets. Most of the data consists of posed hand images captured by indoor cameras; the images do not include other body parts, and each picture contains only one hand. The data is divided into two subsets: a training set (Train) with more than 30,000 pictures and a test set (Test) with 2560 pictures. The hand area of every picture has been annotated with a target box using labelme, the label name is hand, and the annotation format is uniformly converted to VOC format, so the data can be used directly to train deep learning object detection models.


(2) Hand-voc2

The Hand-voc2 hand detection data set comes from domestic open source data sets. The images include body parts and multiple people, and each picture contains one or more hands, which is closer to the business scenario of reading and writing at a desk at home. The data set currently contains only 980 pictures. The hand area of every picture has been annotated with a target box using labelme, the label name is hand, and the annotation format is uniformly converted to VOC format, so the data can be used directly to train deep learning object detection models.


(3) Hand-voc3

The Hand-voc3 hand detection data set comes from the foreign HaGRID gesture recognition data set. The original HaGRID data set is very large, with about 550,000 images covering 18 common gestures. Hand-voc3 is randomly sampled from HaGRID, with 2000 pictures of each gesture, for a total of 18x2000=36000 pictures. The annotation format is uniformly converted to VOC format and the label name is hand, so the data can be used directly to train deep learning object detection models.

For more about the HaGRID data set, please refer to the article: HaGRID gesture recognition dataset usage instructions and download
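The per-gesture random sampling described above can be sketched as follows. This is only an illustration under stated assumptions, not the script used to build Hand-voc3: the function name `sample_per_class`, the placeholder gesture names and the synthetic image ids are all hypothetical.

```python
import random
from collections import defaultdict


def sample_per_class(items, per_class, seed=0):
    """Randomly keep at most `per_class` images for every gesture.

    items: iterable of (image_id, gesture) pairs.
    Returns a list of (image_id, gesture) pairs.
    """
    by_class = defaultdict(list)
    for image_id, gesture in items:
        by_class[gesture].append(image_id)
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    subset = []
    for gesture in sorted(by_class):
        ids = by_class[gesture]
        k = min(per_class, len(ids))
        subset.extend((image_id, gesture) for image_id in rng.sample(ids, k))
    return subset


if __name__ == "__main__":
    # Synthetic stand-in: 18 placeholder gesture names with 5000 image ids each
    gestures = ["gesture_{:02d}".format(i) for i in range(18)]
    items = [("{}_{:05d}".format(g, n), g) for g in gestures for n in range(5000)]
    subset = sample_per_class(items, per_class=2000)
    print(len(subset))  # 18 gestures x 2000 images
```

Sampling each gesture separately keeps the subset balanced across gestures, which a single random draw over the whole data set would not guarantee.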


(4) Hand-Pen-voc handwriting detection data set

The Hand-Pen-voc handwriting detection data set was collected specifically for this project and contains hands and writing tools (pens). Most pictures contain one hand that is holding a pen and practicing writing. The types of pens include fountain pens, pencils, gel pens, markers, etc., which closely matches scenarios such as students writing, taking notes and doing homework. The data set currently contains 16457 pictures. Every picture has been annotated with two kinds of target boxes, hand and hand_pen, using labelme, and the annotation format is uniformly converted to VOC format, so the data can be used directly to train deep learning object detection models.

- Target box hand: hand target box, used only when the hand is not holding a pen

- Target box hand_pen: hand-with-pen target box, used when the hand is holding a pen and writing normally; because the hand is holding the pen, the box is drawn slightly larger than the actual hand so that it also includes the pen area
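For readers who want to read the VOC annotations without extra packages, here is a minimal sketch using only the Python standard library. The sample XML (file name, image size, coordinates) is made up for illustration, and `parse_voc` is a hypothetical helper, not part of the project code.

```python
import xml.etree.ElementTree as ET

# Hypothetical VOC annotation; the file name and coordinates are made up.
SAMPLE_XML = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>hand</name>
    <bndbox><xmin>50</xmin><ymin>60</ymin><xmax>200</xmax><ymax>220</ymax></bndbox>
  </object>
  <object>
    <name>hand_pen</name>
    <bndbox><xmin>300</xmin><ymin>100</ymin><xmax>520</xmax><ymax>400</ymax></bndbox>
  </object>
</annotation>
"""

CLASS_NAMES = ["hand", "hand_pen"]


def parse_voc(xml_text, class_names=CLASS_NAMES):
    """Return a list of (xmin, ymin, xmax, ymax, class_index) tuples."""
    root = ET.fromstring(xml_text.strip())
    targets = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        if name not in class_names:
            continue  # skip labels outside the classes of interest
        box = obj.find("bndbox")
        coords = [int(float(box.findtext(key))) for key in ("xmin", "ymin", "xmax", "ymax")]
        targets.append((*coords, class_names.index(name)))
    return targets


if __name__ == "__main__":
    for target in parse_voc(SAMPLE_XML):
        print(target)
```

To train a detector for hands only, or for hand_pen only, pass a shorter `class_names` list and the other label is skipped.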


(5) Visualization effect of handwritten target frame

Install the pybaseutils package with pip, then use parser_voc to draw the hand target boxes:

    pip install pybaseutils

Then run the following script:

    import os
    from pybaseutils.dataloader import parser_voc

    if __name__ == "__main__":
        # Change this to the path of your own data set
        filename = "/path/to/dataset/Hand-voc3/train.txt"
        class_name = ['hand', 'hand_pen']
        dataset = parser_voc.VOCDataset(filename=filename,
                                        data_root=None,
                                        anno_dir=None,
                                        image_dir=None,
                                        class_name=class_name,
                                        transform=None,
                                        use_rgb=False,
                                        check=False,
                                        shuffle=False)
        print("have num:{}".format(len(dataset)))
        class_name = dataset.class_name
        for i in range(len(dataset)):
            data = dataset.__getitem__(i)
            image, targets, image_id = data["image"], data["target"], data["image_id"]
            print(image_id)
            # Columns 0-3 are the box coordinates, column 4 is the label
            bboxes, labels = targets[:, 0:4], targets[:, 4:5]
            parser_voc.show_target_image(image, bboxes, labels, normal=False, transpose=False,
                                         class_name=class_name, use_rgb=False, thickness=3, fontScale=1.2)

3. Pen tip and cap key point detection data set

There are many types of pens, with different materials and colors, but a pen is basically a long, thin object. The project therefore does not annotate the pen's bounding rectangle directly; instead it represents the pen by two endpoints, the pen tip (nib) and the pen cap (pen end). The line connecting these two endpoints represents the entire length of the pen body:

- Pen tip (nib) key point: located at the protruding point of the nib, index=0

- Pen cap (pen end) key point: located at the center point of the end of the pen, index=1

- Hand-held pen labeling box: The box contains the area of the pen and the hand. It generally appears when the hand is holding the pen for writing. The pen appears alone. The label name is hand_pen.
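The two key points above can be read from a COCO-style annotation as sketched below. COCO stores key points as a flat list `[x0, y0, v0, x1, y1, v1, ...]`, where `v` is a visibility flag, and stores boxes as `[x, y, width, height]`. The annotation values here are made up, and `decode_keypoints` and `pen_geometry` are hypothetical helpers for illustration only.

```python
import math

# Hypothetical COCO-style annotation for one hand_pen instance; values are made up.
annotation = {
    "bbox": [300.0, 100.0, 220.0, 300.0],              # [x, y, width, height]
    "num_keypoints": 2,
    "keypoints": [320.0, 380.0, 2, 480.0, 140.0, 2],   # [x0, y0, v0, x1, y1, v1]
}

TIP, CAP = 0, 1  # key point indices as defined in the list above


def decode_keypoints(flat):
    """Split the flat COCO list into (x, y, visibility) triples."""
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def pen_geometry(keypoints):
    """Length in pixels and direction in degrees of the cap-to-tip segment.

    Image coordinates are used, so the y axis points downward.
    """
    (tx, ty, _), (cx, cy, _) = keypoints[TIP], keypoints[CAP]
    dx, dy = tx - cx, ty - cy
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx))


if __name__ == "__main__":
    points = decode_keypoints(annotation["keypoints"])
    length, angle = pen_geometry(points)
    print("tip={}, cap={}".format(points[TIP][:2], points[CAP][:2]))
    print("length={:.1f}px, angle={:.1f}deg".format(length, angle))
```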

(1) dataset-pen2

The dataset-pen2 pen tip and cap key point detection data set is collected and expanded from the Hand-Pen-voc handwriting detection data set. It annotates the hand-with-pen (hand_pen) target area and both ends of the pen (pen tip and pen cap). Most pictures contain one hand that is holding a pen and practicing writing. The types of pens include fountain pens, pencils, gel pens, markers, etc., which closely matches scenarios such as students writing, taking notes and doing homework. The data set is divided into a test set (Test) with 1075 pictures and a training set (Train) with 28603 pictures; the annotation format is uniformly converted to COCO format, and the data can be used to train deep learning pen tip and cap key point detection models.
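Before training, it can be useful to check the image and annotation counts of a COCO file against the numbers quoted above. The sketch below uses only the standard library; the sample JSON is a tiny made-up stand-in for a real annotation file, and `summarize` is a hypothetical helper.

```python
import json
from collections import defaultdict

# Tiny made-up stand-in for a COCO key point file; real files are much larger.
SAMPLE_JSON = """
{
  "images": [
    {"id": 1, "file_name": "a.jpg", "width": 640, "height": 480},
    {"id": 2, "file_name": "b.jpg", "width": 640, "height": 480}
  ],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1, "bbox": [300, 100, 220, 300],
     "num_keypoints": 2, "keypoints": [320, 380, 2, 480, 140, 2]}
  ],
  "categories": [{"id": 1, "name": "hand_pen"}]
}
"""


def summarize(coco):
    """Count images, annotations, and images that have no annotation."""
    by_image = defaultdict(list)
    for ann in coco["annotations"]:
        by_image[ann["image_id"]].append(ann)
    return {
        "images": len(coco["images"]),
        "annotations": len(coco["annotations"]),
        "images_without_annotations": sum(
            1 for img in coco["images"] if img["id"] not in by_image),
    }


if __name__ == "__main__":
    # For a real file, replace json.loads(...) with json.load(open(path))
    print(summarize(json.loads(SAMPLE_JSON)))
```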


(2) Visualization effect of key points of pen tip and cap

Install the pybaseutils package with pip, then use parser_coco_kps to draw the hand box and the pen tip and cap key points:

    pip install pybaseutils

Then run the following script:

    import os
    from pybaseutils.dataloader import parser_coco_kps

    if __name__ == "__main__":
        # Change this to the path of your own data set json file
        anno_file = "/path/to/dataset/dataset-pen2/train/coco_kps.json"
        class_name = []
        dataset = parser_coco_kps.CocoKeypoints(anno_file, image_dir="", class_name=class_name, shuffle=False)
        bones = dataset.bones
        for i in range(len(dataset)):
            data = dataset.__getitem__(i)
            image, boxes, labels, keypoints = data['image'], data["boxes"], data["label"], data["keypoints"]
            print("i={},image_id={}".format(i, data["image_id"]))
            parser_coco_kps.show_target_image(image, keypoints, boxes, colors=bones["colors"],
                                              skeleton=bones["skeleton"], thickness=1)

4. Dataset download

Dataset download address: Pen tip and cap detection data set (including download link)

The contents of the data set include:

- Handwriting detection data set: contains Hand-voc1, Hand-voc2, Hand-voc3 and Hand-Pen-voc, about 70,000 images in total; the annotation format is uniformly converted to VOC format, the hand target box is labeled hand, and the target box of a hand holding a pen is labeled hand_pen; the data can be used to develop deep learning hand detection models.

- Pen tip and cap key point detection data set: dataset-pen2, which annotates the hand-with-pen (hand_pen) target area and both ends of the pen (pen tip and pen cap); it is divided into a test set (Test) with 1075 pictures and a training set (Train) with 28603 pictures; the annotation format is uniformly converted to COCO format, and the data can be used to train deep learning pen tip and cap key point detection models.

- Data collection and annotation is a very complicated and time-consuming work. Please respect the fruits of my labor.

5. Pen tip and cap key point detection (Python/C++/Android)

This project uses the Pytorch deep learning framework to implement key point detection for the two ends of a writing tool (pen tip and pen cap). Hand-pen detection uses the YOLOv5 model, and the key point detection is improved from the open source HRNet, forming a complete training and testing pipeline for pen tip and cap key point detection. To facilitate later model engineering and Android deployment, the project also supports training and testing the lightweight LiteHRNet and Mobilenet models, and provides Python, C++ and Android versions.

Android pen tip and cap key point detection APP demo:
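A two-stage design like this (a detector followed by a key point model) needs to map key points predicted on the cropped hand_pen region back to the full image. The sketch below shows one common way to do that in plain Python. It is not the project's implementation: the helper names, the 1.2 box expansion factor, the 192x256 input size, and the assumption that the crop is stretched to the input size without keeping its aspect ratio are all illustrative choices.

```python
def expand_box(box, scale, image_w, image_h):
    """Enlarge an (xmin, ymin, xmax, ymax) box about its centre and clip it to the image."""
    xmin, ymin, xmax, ymax = box
    cx, cy = (xmin + xmax) / 2.0, (ymin + ymax) / 2.0
    half_w = (xmax - xmin) * scale / 2.0
    half_h = (ymax - ymin) * scale / 2.0
    return (max(0.0, cx - half_w), max(0.0, cy - half_h),
            min(float(image_w), cx + half_w), min(float(image_h), cy + half_h))


def crop_to_image_coords(keypoints, box, input_size):
    """Map (x, y) key points predicted on the resized crop back to image coordinates.

    Assumes the crop was stretched to input_size = (width, height)
    without preserving its aspect ratio.
    """
    xmin, ymin, xmax, ymax = box
    in_w, in_h = input_size
    sx, sy = (xmax - xmin) / in_w, (ymax - ymin) / in_h
    return [(x * sx + xmin, y * sy + ymin) for x, y in keypoints]


if __name__ == "__main__":
    # Made-up detector output: one hand_pen box in a 640x480 image
    box = expand_box((300, 100, 500, 400), scale=1.2, image_w=640, image_h=480)
    # Made-up key points (tip, cap) in the 192x256 crop given to the key point model
    points = crop_to_image_coords([(96, 128), (0, 0)], box, input_size=(192, 256))
    print(box)
    print(points)
```

Expanding the detected box slightly before cropping leaves a margin around the hand, so a pen end lying near the box border is less likely to be cut off.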

https://download.csdn.net/download/guyuealian/88535143

6. Special Edition: Pen Tip Fingertip Detection

Due to length, this article only covers pen tip and cap key point detection. In practice, to implement a fingertip reading or pen tip reading function, pen cap detection may not be needed; what is needed is pen tip + fingertip detection, whose implementation is similar to pen tip and cap key point detection.

The following is a demo of the pen tip + fingertip detection algorithm that has been deployed in a product. Its detection accuracy and speed are better than those of pen tip and cap detection.