1. Background

JDK 21 was officially released on September 19, 2023, bringing many highlights. Among them, virtual threads have attracted the most attention. It is no exaggeration to say that they change the way high-throughput code is written: with only small changes, the throughput of today's IO-intensive programs can be improved, so writing high-throughput code is no longer difficult.

This article describes in detail the use cases and implementation principles of virtual threads, along with stress-test results for an IO-intensive service.

2. Optimizations we have made to improve throughput

Before talking about virtual threads, let's first review some of the optimizations we have already made to improve throughput.

Serial mode

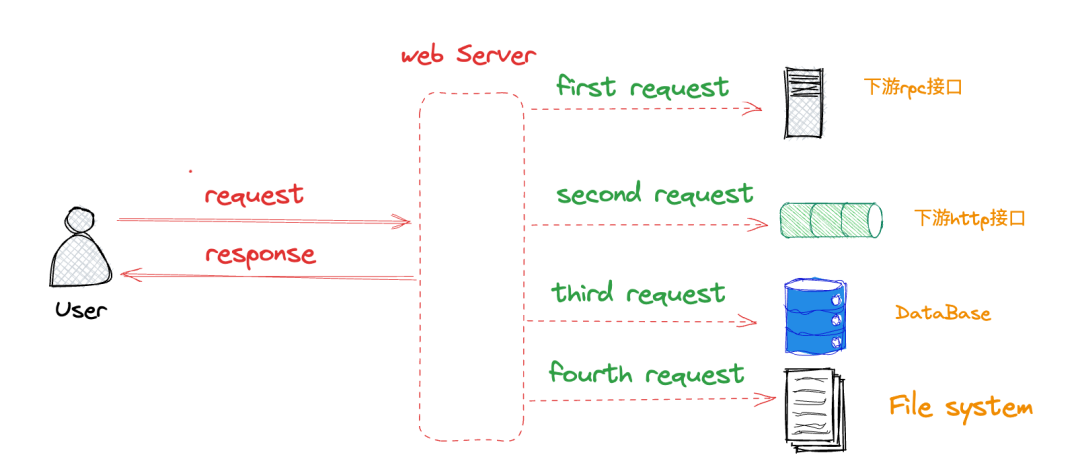

Under a microservice architecture, handling a single user/upstream request often requires multiple calls to downstream services, databases, file systems, and so on, after which all the retrieved data is processed and the final result is returned upstream.

In serial mode, the database, the downstream Dubbo/HTTP interfaces, and the file system are queried one after another to complete a request, so the total latency of the interface is the sum of the latencies of every downstream call. Although this style is simple to write, the interface is slow and performs poorly, and it cannot meet the requirements of high-QPS consumer-facing (C-side) scenarios.
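To make the latency arithmetic concrete, here is a minimal sketch of serial mode. The three downstream calls (`queryDb`, `callRpc`, `readFile`) are hypothetical stand-ins that only sleep for 50, 80 and 30 ms; because each call starts after the previous one returns, the request takes at least 50 + 80 + 30 = 160 ms.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical downstream calls; Thread.sleep stands in for network/disk latency.
class SerialCalls {
    static String queryDb() throws InterruptedException {
        Thread.sleep(50);   // simulated database query
        return "db";
    }

    static String callRpc() throws InterruptedException {
        Thread.sleep(80);   // simulated Dubbo/HTTP call
        return "rpc";
    }

    static String readFile() throws InterruptedException {
        Thread.sleep(30);   // simulated file-system read
        return "file";
    }

    /** Calls each downstream one after another and returns the elapsed milliseconds. */
    static long handleRequestSerially() throws InterruptedException {
        long start = System.nanoTime();
        String result = queryDb() + "+" + callRpc() + "+" + readFile();
        long elapsedMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        System.out.println(result + " took ~" + elapsedMs + " ms");
        return elapsedMs;
    }

    public static void main(String[] args) throws InterruptedException {
        handleRequestSerially();
    }
}
```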

Thread pool + Future asynchronous call

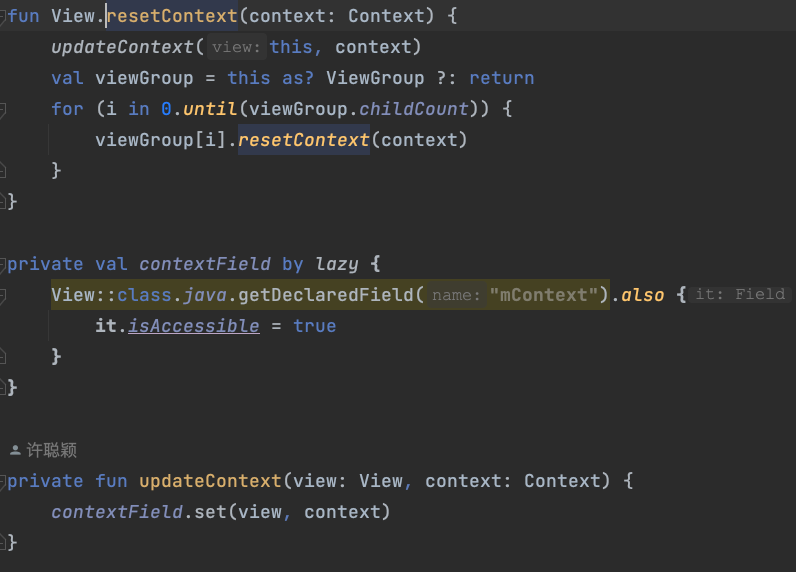

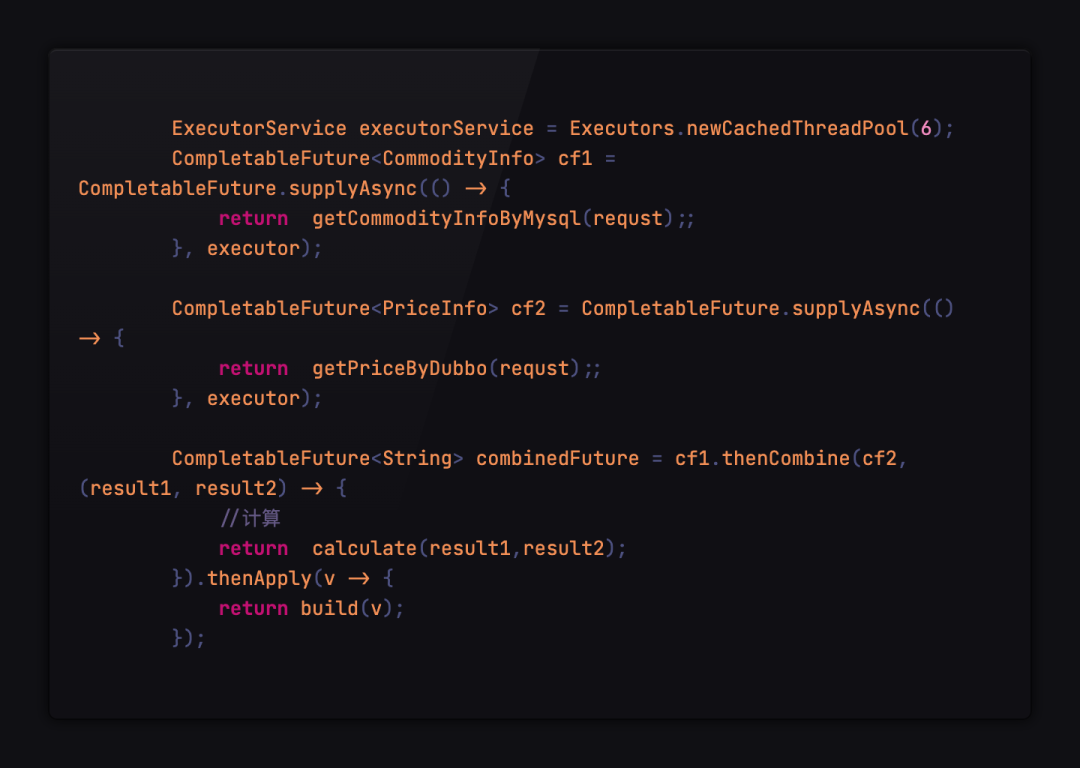

To solve the poor performance of serial calls, we turn to parallel asynchronous calls. The simplest way is to make the calls in parallel with a thread pool + Future. Typical code is as follows:

Although this approach solves the poor performance of serial calls in most scenarios, it has a serious drawback: because futures depend on one another, when a scenario involves many dependencies, a large amount of thread resources and CPU time is wasted on blocking waits, resulting in low resource utilization.
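A minimal sketch of the thread pool + Future pattern and its drawback. The downstream call is a hypothetical `call(name, latencyMs)` that sleeps; `db` and `rpc` run in parallel, but the dependent `enriched` step has to call `db.get()`, which parks a pool thread doing nothing until `db` finishes, and the request thread itself blocks on every `get()`.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureCalls {
    // Hypothetical downstream call: sleeps to simulate latency, then returns its name
    static String call(String name, long latencyMs) throws InterruptedException {
        Thread.sleep(latencyMs);
        return name;
    }

    static String handleRequest(ExecutorService pool) throws Exception {
        // Independent calls run in parallel on the pool...
        Future<String> db = pool.submit(() -> call("db", 100));
        Future<String> rpc = pool.submit(() -> call("rpc", 100));

        // ...but a step that depends on db blocks a pool thread inside db.get()
        Future<String> enriched = pool.submit(() -> call(db.get() + "-enriched", 50));

        // The request thread also blocks on each get()
        return enriched.get() + "+" + rpc.get();
    }

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            System.out.println(handleRequest(pool));
        } finally {
            pool.shutdown();
        }
    }
}
```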

Thread pool + CompletableFuture asynchronous call

To reduce the time the CPU spends blocked waiting and improve resource utilization, we use CompletableFuture to orchestrate the call flow and reduce blocking between dependent steps.

CompletableFuture was introduced in Java 8. Before Java 8, asynchronous code was generally written with Future, which represents the result of an asynchronous computation. If steps depend on one another, the result can only be obtained by blocking or polling. Moreover, the native Future does not support registering callbacks. Before Java 8, Guava's ListenableFuture could be used to register callbacks, but introducing callbacks leads to callback hell, and the code becomes almost unreadable.

CompletableFuture extends Future. It natively supports handling computation results through callbacks and also supports composing and orchestrating operations, which solves the callback-hell problem to some extent.
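The same hypothetical flow orchestrated with CompletableFuture, as a sketch: the dependent step is attached with `thenApplyAsync`, the two branches are merged with `thenCombine`, and only the final `join()` blocks the caller, so no pool thread sits inside a `get()` waiting for another task. The `call` helper is again a hypothetical sleeping stand-in for a downstream call.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class CompletableFutureCalls {
    // Hypothetical downstream call: sleeps to simulate latency, then returns its name
    static String call(String name, long latencyMs) {
        try {
            Thread.sleep(latencyMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return name;
    }

    static String handleRequest(ExecutorService pool) {
        CompletableFuture<String> db = CompletableFuture.supplyAsync(() -> call("db", 100), pool);
        CompletableFuture<String> rpc = CompletableFuture.supplyAsync(() -> call("rpc", 100), pool);

        // The dependent step is chained as a callback instead of blocking a thread on db.get()
        CompletableFuture<String> enriched =
                db.thenApplyAsync(d -> call(d + "-enriched", 50), pool);

        // Merge both branches; only this final join() blocks the caller
        return enriched.thenCombine(rpc, (e, r) -> e + "+" + r).join();
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            System.out.println(handleRequest(pool));
        } finally {
            pool.shutdown();
        }
    }
}
```

Note that the calls themselves still block their pool threads while they wait on IO, which is exactly the limitation discussed next.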

Although CompletableFuture alleviates, to some extent, the waste of CPU resources on blocking waits, it only alleviates the problem; the core problems remain unsolved. The following two problems prevent the CPU from being fully utilized, so system throughput easily hits a bottleneck:

- Thread resources are always bottlenecked on IO waits, which keeps CPU utilization low. Most services today are IO-intensive: most of a request's processing time is spent waiting for downstream RPCs and database queries, and during that time the thread can only block until the result returns.

- The number of threads is limited. To increase concurrency we configure larger thread pools, but threads cannot be added without bound. Java's threading model maps each Java thread 1:1 to an OS thread, so Java threads are expensive to create and cannot be increased indefinitely. In addition, as the number of threads the CPU must schedule grows, resource contention becomes more severe and precious CPU time is wasted on context switching.

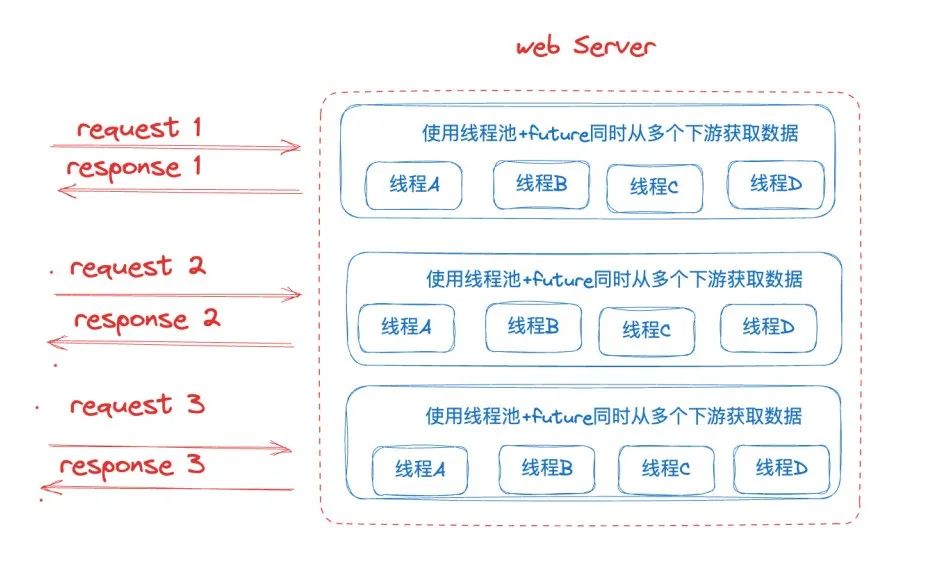

3. The one-request-one-thread model

Before giving the final solution, let's first talk about the one-request-one-thread model common in web applications.

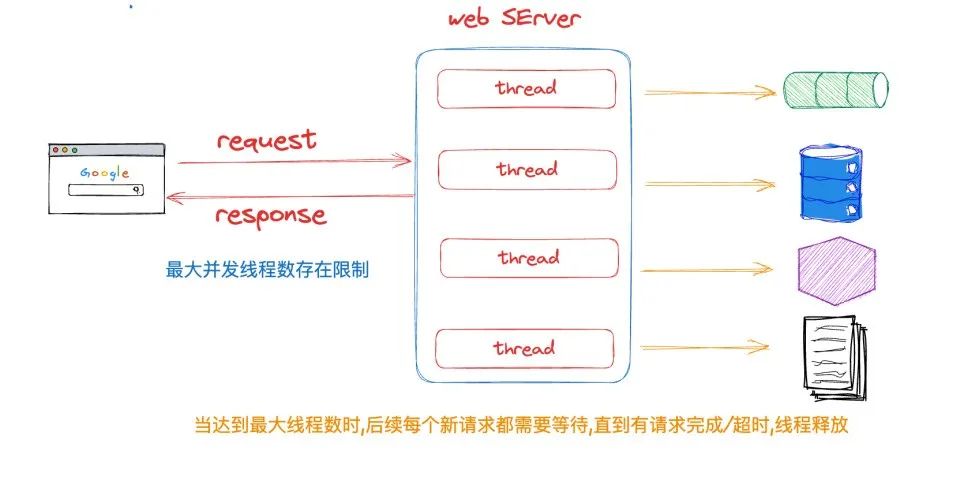

The most common request model on the web is one-request-one-thread, where each request is handled by its own thread. This model is easy to understand and implement, and it keeps code readable and easy to debug. However, it has a shortcoming: when a thread performs a blocking operation (such as querying a database or making a network call), it stays blocked until the operation completes and cannot handle any other request in the meantime.

When major promotions or sudden traffic spikes increase the number of requests the service must handle, we usually adopt the following solutions to ensure each request returns as quickly as possible and to reduce waiting time:

- Increase the maximum number of service threads. This is simple and effective, but the number of platform threads is limited and cannot be increased substantially, for the following reasons:

  - Limited system resources cap the total number of OS threads, which in turn caps the number of platform threads mapped onto them.

  - Platform threads are scheduled by the operating system's thread scheduler. When too many are created, a large amount of resources is spent on thread context switching.

  - Each platform thread reserves a private stack of about 1 MB by default, so a large number of platform threads occupies a large amount of memory.

- Scale up (upgrade machine specs) or scale out (add service nodes), commonly called upgrading and expanding capacity. This is effective and the most common solution, but it increases cost, and in some scenarios adding capacity cannot fully solve the problem.

- Adopt asynchronous/reactive programming, such as asynchronous RPC over NIO, WebFlux, RxJava, and other non-blocking frameworks based on the Reactor model. They use event-driven processing to handle requests at high throughput with a small number of threads, giving better performance and excellent resource utilization. The drawbacks are a steep learning curve, significant compatibility issues, and a coding style very different from the one-request-one-thread model, which makes the code hard to understand and hard to debug.

So is there an approach that is easy to write and migrate to, matches everyday coding habits, performs well, and makes high use of CPU resources?

Virtual threads in JDK 21 may provide the answer. The JDK offers the virtual thread, an abstraction used exactly like Thread, to deal with this kind of frequent blocking. Blocking still blocks, but what gets blocked changes: instead of an expensive platform thread, a very cheap virtual thread blocks. When code calls a blocking API such as IO, a lock wait, or sleep, the JVM automatically unmounts the virtual thread from its platform thread, and the platform thread moves on to the next virtual thread. This raises platform-thread utilization so that platform threads no longer sit blocked, and a small number of underlying platform threads can handle a large number of requests, improving service throughput and CPU utilization.
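A small sketch of this effect (class and method names are hypothetical): it runs 10,000 tasks that each block in `Thread.sleep` for 100 ms, one virtual thread per task. Run one after another on a single thread, this would take 1,000 s; because each sleeping virtual thread is unmounted and its carrier moves on to other work, the whole batch typically finishes in a small fraction of that on a handful of carrier threads.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class ManySleepers {
    /** Runs `tasks` blocking tasks, each sleeping `sleepMs`, one virtual thread per task. */
    static long runMillis(int tasks, long sleepMs) {
        AtomicInteger done = new AtomicInteger();
        long start = System.nanoTime();
        // close() (via try-with-resources) waits for all submitted tasks to finish
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    // The blocking sleep unmounts the virtual thread; its carrier runs other tasks
                    Thread.sleep(Duration.ofMillis(sleepMs));
                    return done.incrementAndGet();
                });
            }
        }
        if (done.get() != tasks) {
            throw new IllegalStateException("only " + done.get() + " tasks finished");
        }
        return Duration.ofNanos(System.nanoTime() - start).toMillis();
    }

    public static void main(String[] args) {
        // 10,000 tasks x 100 ms would take 1,000 s if run serially on one thread
        System.out.println("elapsed ms: " + runMillis(10_000, 100));
    }
}
```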

4. Virtual threads

Thread terminology

Operating system thread (OS thread): managed by the operating system; it is the basic unit of OS scheduling.

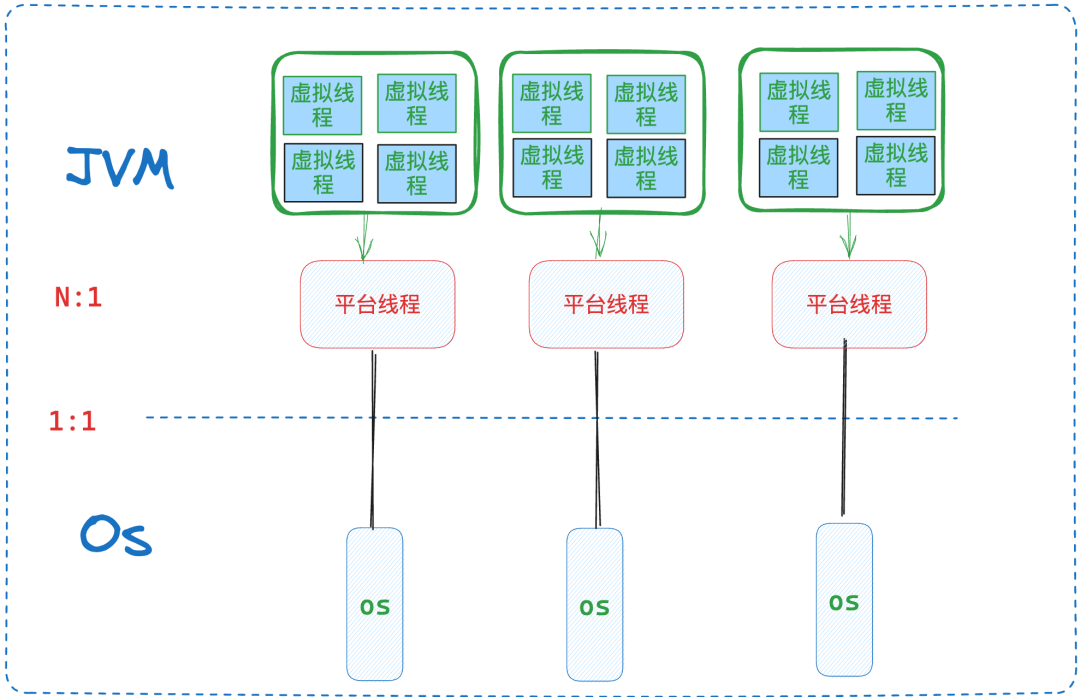

Platform thread: every java.lang.Thread created the traditional way is a platform thread, a Java wrapper around an OS thread with a 1:1 mapping to it.

Virtual thread: a lightweight thread managed by the JVM. Its implementation class is java.lang.VirtualThread.

Carrier thread: the platform thread that actually executes a virtual thread's task. When a virtual thread is mounted on a platform thread, that platform thread is called the virtual thread's carrier thread.

Virtual thread definition

Before virtual threads, every instance of java.lang.Thread in the JDK was a platform thread. A platform thread runs Java code on an underlying OS thread and occupies that OS thread exclusively for the code's entire lifetime. Platform threads are scheduled by the kernel's thread scheduler, and their number is limited by the number of OS threads.

A virtual thread is not bound to a specific OS thread. It runs Java code on a platform thread but does not monopolize that platform thread for the code's entire lifetime. **This means that many virtual threads can run their Java code on the same platform thread, sharing it.** At the same time, virtual threads are very cheap, so there can be far more virtual threads than platform threads.

Virtual thread creation

Method 1: Directly create a virtual thread

```java
// Create and immediately start a virtual thread
Thread vt = Thread.startVirtualThread(() -> {
    System.out.println("hello world virtual thread");
});
```

Method 2: Create a virtual thread without starting it, then call start() manually to run it.

```java
// unstarted() returns the thread without running it
Thread vt = Thread.ofVirtual().unstarted(() -> {
    System.out.println("hello world virtual thread");
});

vt.start();
```

Method 3: Create a virtual thread through the ThreadFactory of the virtual thread

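```java
import java.util.concurrent.ThreadFactory;

ThreadFactory tf = Thread.ofVirtual().factory();

Thread vt = tf.newThread(() -> {
    System.out.println("Start virtual thread...");
    try {
        // A Runnable cannot throw checked exceptions, so handle InterruptedException here
        Thread.sleep(1000);
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
    }
    System.out.println("End virtual thread.");
});

vt.start();
```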

Method 4: Executors.newVirtualThreadPerTaskExecutor()

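```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// ExecutorService is AutoCloseable since JDK 19; close() waits for submitted tasks
try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
    // Each submitted task runs in a new virtual thread
    executor.submit(() -> {
        System.out.println("Start virtual thread...");
        Thread.sleep(1000); // allowed: this lambda is a Callable, which may throw
        System.out.println("End virtual thread.");
        return true;
    });
}
```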
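Besides the four methods above, the `Thread.Builder` returned by `Thread.ofVirtual()` can also name the threads it creates. The sketch below (class and method names are hypothetical) starts a few named virtual threads and uses `Thread.isVirtual()`, a standard JDK 21 method, to confirm what kind of thread each task ran on.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class VirtualThreadBuilderDemo {
    static List<String> startNamedWorkers(int count) throws InterruptedException {
        List<String> names = Collections.synchronizedList(new ArrayList<>());
        // Threads started by this builder are named worker-0, worker-1, ...
        Thread.Builder builder = Thread.ofVirtual().name("worker-", 0);
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(builder.start(() -> {
                Thread current = Thread.currentThread();
                names.add(current.getName() + (current.isVirtual() ? " (virtual)" : " (platform)"));
            }));
        }
        for (Thread t : threads) {
            t.join(); // wait for every worker before reading the results
        }
        List<String> sorted = new ArrayList<>(names);
        Collections.sort(sorted);
        return sorted;
    }

    public static void main(String[] args) throws InterruptedException {
        startNamedWorkers(3).forEach(System.out::println);
    }
}
```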

Virtual thread implementation principle

Virtual threads are scheduled by the Java virtual machine rather than the operating system. They have a small footprint and are scheduled with lightweight task queues, which avoids the cost of kernel-level context switches between threads, so they can be created and used in large numbers.

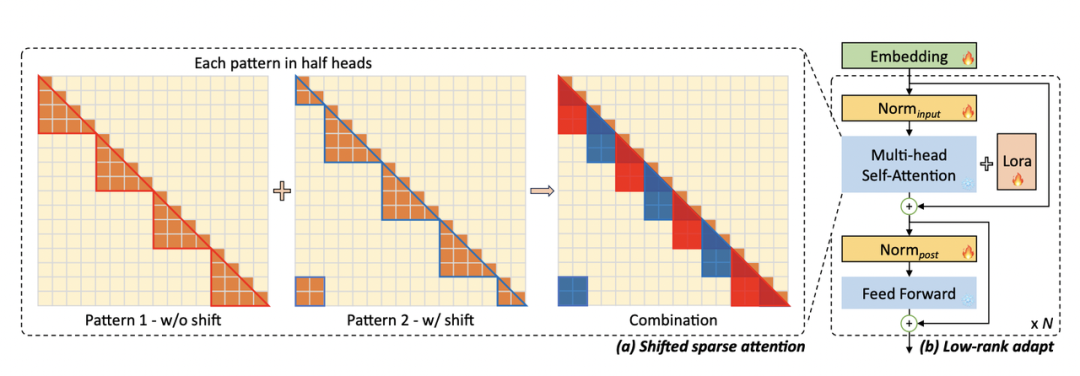

Simply put, a virtual thread is implemented as: virtual thread = Continuation + Scheduler + Runnable.

The virtual thread will wrap the task (java.lang.Runnable instance) into a Continuation instance:

- When a task needs to be blocked and suspended, the yield operation of Continuation will be called to block, and the virtual thread will be unloaded from the platform thread.

- When the task is unblocked and continues execution, calling Continuation.run will continue execution from the blocking point.

The Scheduler is the executor that submits tasks to a carrier-thread pool for execution.

- It is an implementation of java.util.concurrent.Executor.

- The virtual thread framework provides a default FIFO-mode ForkJoinPool for executing virtual thread tasks.

The Runnable is the actual task being wrapped, and the Scheduler is responsible for submitting it to the carrier-thread pool for execution.

Assigning a virtual thread to a platform thread is called mounting, and removing it from the platform thread is called unmounting:

Mount: the virtual thread is mounted on a platform thread, and the stack frames of the Continuation wrapped by the virtual thread are copied onto the platform thread's stack. This is a copy from the heap to the stack.

Unmount: the virtual thread is removed from the platform thread. Since the virtual thread's task has not finished, the stack frames of its Continuation remain in heap memory.

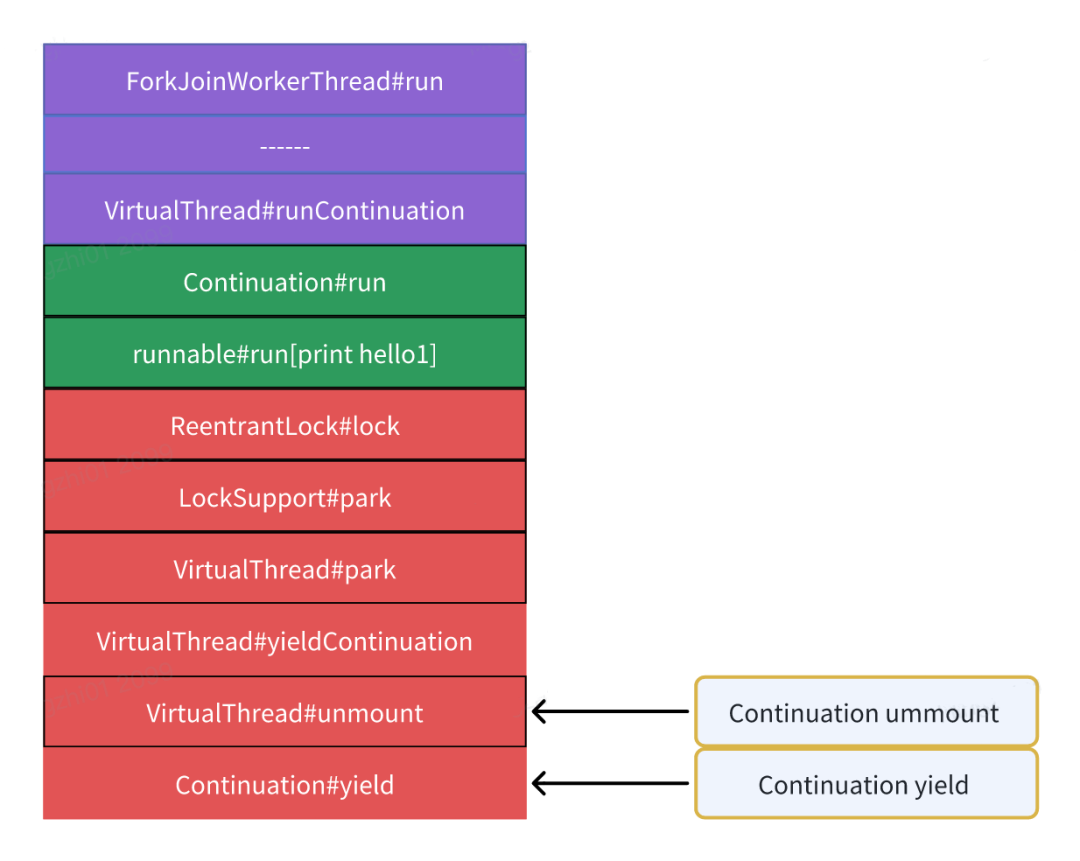

From the perspective of Java code, the fact that a virtual thread and its carrier share an OS thread is invisible: Thread.currentThread() always returns the virtual thread, never the carrier. Consequently, code running in the same virtual thread may be mounted on a different carrier thread each time it resumes. The JDK uses a ForkJoinPool in FIFO mode as the virtual thread scheduler, and with this scheduler a virtual thread task runs roughly as follows:

- The platform threads in the scheduler (thread pool) wait for tasks.

- A virtual thread is assigned a platform thread, which acts as its carrier thread and executes the virtual thread's task.

- The virtual thread runs its Continuation; once mounted on the platform thread, it finally executes the actual user task wrapped by the Runnable.

- When the task completes, the Continuation is marked as terminated, the virtual thread is marked as terminated, its context is cleared and it awaits garbage collection, and the unmounted carrier thread returns to the scheduler (thread pool) to wait for the next task.
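The claim that Java code only ever sees the virtual thread can be checked with a short sketch (the class name is hypothetical): `Thread.currentThread()` returns the same virtual-thread object before and after a blocking sleep, even though the thread may be remounted on a different carrier. In current JDK builds a mounted virtual thread's `toString()` usually includes the carrier's name, which makes a carrier change visible in the printed lines, but that format is an implementation detail and is not relied on here.

```java
class CarrierDemo {
    /** Returns true if Thread.currentThread() is the same virtual thread before and after blocking. */
    static boolean identityStableAcrossSleep() throws InterruptedException {
        boolean[] result = new boolean[1];
        Thread vt = Thread.ofVirtual().start(() -> {
            Thread before = Thread.currentThread();
            System.out.println("before sleep: " + before);
            try {
                Thread.sleep(50); // unmounts; may be remounted on a different carrier
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            Thread after = Thread.currentThread();
            System.out.println("after sleep:  " + after);
            result[0] = before == after && after.isVirtual();
        });
        vt.join(); // join() makes the write to result[0] visible here
        return result[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("same virtual thread: " + identityStableAcrossSleep());
    }
}
```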

The above describes a virtual thread task that never blocks. If the task hits a blocking operation (such as acquiring a Lock), the Continuation's yield operation is triggered to give up control, and the virtual thread waits to be assigned a carrier thread again before continuing. See the following code:

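```java
import java.util.concurrent.locks.ReentrantLock;

public class LockYieldDemo {
    public static void main(String[] args) throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();

        Thread.startVirtualThread(() -> {
            lock.lock(); // acquired and never released in this demo
        });

        // Make sure the lock is already held by the virtual thread above
        Thread.sleep(1000);

        Thread.startVirtualThread(() -> {
            System.out.println("first");
            // Triggers the Continuation's yield operation: this virtual thread parks and unmounts
            lock.lock();
            try {
                System.out.println("second"); // not reached here: the lock is never released
            } finally {
                lock.unlock();
            }
            System.out.println("third");
        });

        Thread.sleep(Long.MAX_VALUE);
    }
}
```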

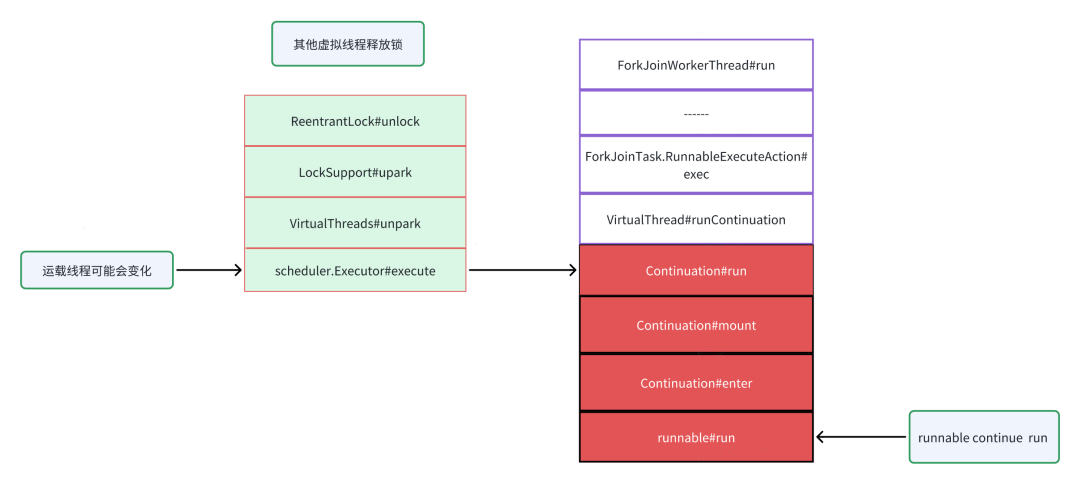

When the virtual thread executes its task, Continuation#run() is called; part of the task code runs first and then tries to acquire the lock. This is a blocking operation, so it triggers the Continuation's yield to give up control. If the yield succeeds, the virtual thread is unmounted from its carrier thread: the carrier's stack data is moved into the Continuation's stack frames and saved in heap memory. The virtual thread's task is suspended but not finished, so neither the virtual thread nor the Continuation is terminated or released, and the carrier thread is returned to the executor to wait for new tasks. If the Continuation's yield fails, park is called on the carrier thread itself, so the virtual thread and the carrier thread are blocked at the same time. Native methods and code inside synchronized blocks or methods cause the yield to fail.

When the lock holder releases the lock, the waiting virtual thread is woken up to acquire it. After it acquires the lock, the virtual thread is mounted again so that its task can continue, possibly on a different carrier thread. The frames in the Continuation's stack are restored onto the carrier thread's stack, and Continuation#run() is called again to resume the task.

When the virtual thread's task finishes, the Continuation is marked as terminated, the virtual thread is marked as terminated, its context variables are cleared, it is unmounted, and the carrier thread returns to the scheduler (thread pool) as a platform thread waiting for the next task.
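The lock example above never releases the lock, so its second virtual thread stays parked forever. The sketch below (hypothetical class name; a CountDownLatch and a 200 ms hold time are added only to make the order deterministic) lets the holder release the lock, so the waiting virtual thread resumes from the point where it parked and runs to completion. The recorded events show that "second" can only happen after the holder's "released".

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.ReentrantLock;

class LockHandoffDemo {
    static List<String> run() throws InterruptedException {
        List<String> events = new CopyOnWriteArrayList<>();
        ReentrantLock lock = new ReentrantLock();
        CountDownLatch held = new CountDownLatch(1);

        // Holder: takes the lock, signals, keeps it for 200 ms, then releases it
        Thread holder = Thread.startVirtualThread(() -> {
            lock.lock();
            try {
                held.countDown();
                Thread.sleep(200);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                events.add("released");
                lock.unlock();
            }
        });
        held.await(); // the holder now owns the lock

        // Waiter: parks inside lock() (its continuation yields) until the holder releases
        Thread waiter = Thread.startVirtualThread(() -> {
            events.add("first");
            lock.lock();
            try {
                events.add("second");
            } finally {
                lock.unlock();
            }
            events.add("third");
        });

        holder.join();
        waiter.join();
        return events;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run());
    }
}
```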

The Continuation component is essential. It not only wraps the user's actual task but also provides the ability to suspend and resume the virtual thread's task, and it carries data between the virtual thread and the platform thread. When the task needs to block and suspend, the Continuation's yield is called; when it can continue, the Continuation's run is called to resume execution.

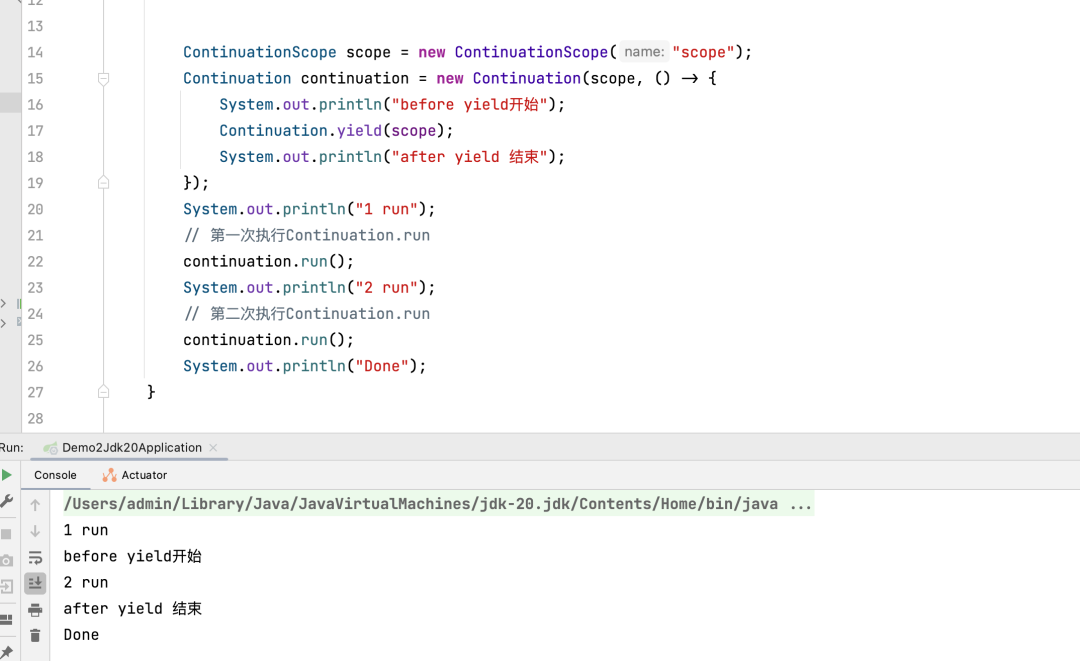

The following code shows the magic of Continuation. Because the jdk.internal.vm package is not exported, the code compiles and runs locally only when --add-exports java.base/jdk.internal.vm=ALL-UNNAMED is passed to both javac and java.

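```java
import jdk.internal.vm.Continuation;
import jdk.internal.vm.ContinuationScope;

public class ContinuationDemo {
    public static void main(String[] args) {
        ContinuationScope scope = new ContinuationScope("scope");
        Continuation continuation = new Continuation(scope, () -> {
            System.out.println("before yield (start)");
            Continuation.yield(scope); // suspend and return control to the caller of run()
            System.out.println("after yield (end)");
        });

        System.out.println("1 run");
        // First call to Continuation.run: executes until the yield
        continuation.run();

        System.out.println("2 run");
        // Second call to Continuation.run: resumes right after the yield
        continuation.run();

        System.out.println("Done");
        // Output: 1 run, before yield (start), 2 run, after yield (end), Done
    }
}
```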

As the example shows, after a Continuation instance yields, calling its run method again resumes execution from the point where yield was called, which makes it possible to suspend and resume a program.

Virtual thread memory usage evaluation

Resource usage of a single platform thread:

- By default, HotSpot reserves about 1 MB of thread stack (the default depends on the platform and can be changed with -Xss).

- Platform thread instances will occupy 2000+ bytes of data.

Resource usage of a single virtual thread:

- The Continuation stack occupies anywhere from a few hundred bytes to several hundred KB and is stored in the Java heap as stack-chunk objects.

- A virtual thread instance will occupy 200 - 240 bytes of data.

Comparing the two, a single platform thread theoretically occupies memory on the order of kilobytes at least, while a single virtual thread instance occupies only bytes. This large gap in memory footprint is why virtual threads can be created in large numbers.
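As a rough back-of-the-envelope check using the figures above for 4,000 threads (assuming the 1 MB default stack reservation and the upper 240-byte estimate per virtual thread object; the virtual threads' heap stack chunks come on top of this and depend on stack depth):

$$
\begin{aligned}
\text{platform: } & 4000 \times 1\,\text{MB} = 4000\,\text{MB} \approx 3.9\,\text{GB of reserved stack} \\
\text{virtual: }  & 4000 \times 240\,\text{B} = 960{,}000\,\text{B} \approx 0.92\,\text{MB of thread objects}
\end{aligned}
$$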

The following is a program to test the memory usage of platform threads and virtual threads:

```java
private static final int COUNT = 4000;

/**
 * -XX:NativeMemoryTracking=detail
 *
 * @param args args
 */
public static void main(String[] args) throws Exception {
    for (int i = 0; i < COUNT; i++) {
        new Thread(() -> {
            try {
                Thread.sleep(Long.MAX_VALUE);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }, String.valueOf(i)).start();
    }
    Thread.sleep(Long.MAX_VALUE);
}
```

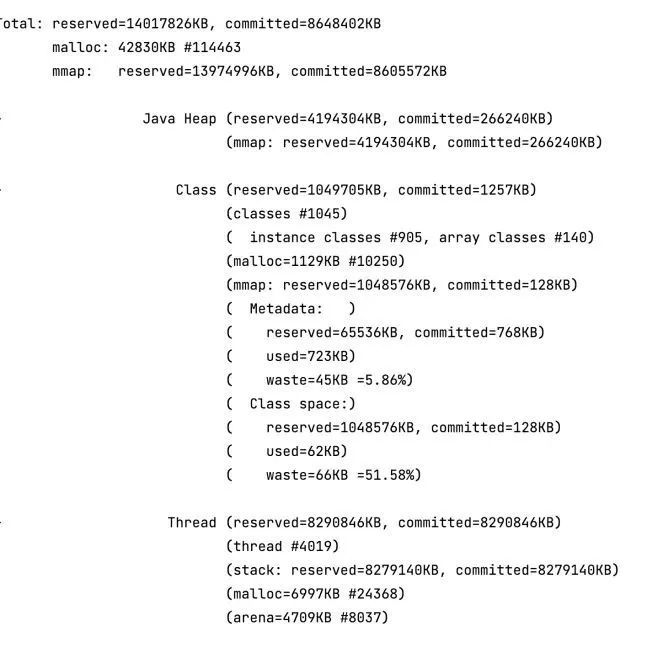

After the program above starts 4,000 platform threads, the memory used by all threads can be viewed with the -XX:NativeMemoryTracking=detail option and the jcmd command. Most of the memory comes from the platform threads themselves: their thread stacks alone take about 8,096 MB, and together the threads account for more than 96% of the total memory used (8,403 MB).

A similar program that starts virtual threads instead:

```java
private static final int COUNT = 4000;

/**
 * -XX:NativeMemoryTracking=detail
 *
 * @param args args
 */
public static void main(String[] args) throws Exception {
    for (int i = 0; i < COUNT; i++) {
        Thread.startVirtualThread(() -> {
            try {
                Thread.sleep(Long.MAX_VALUE);
            } catch (Exception e) {
                e.printStackTrace();
            }
        });
    }
    Thread.sleep(Long.MAX_VALUE);
}
```

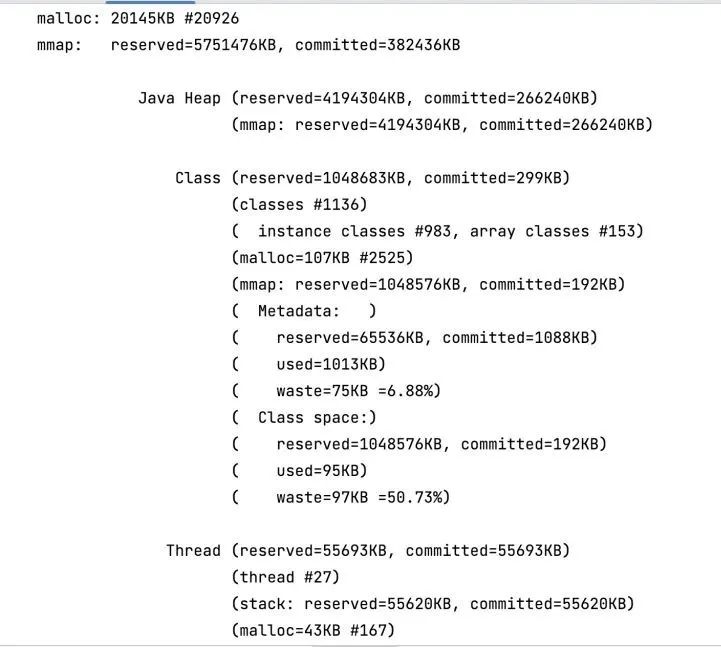

After the program above starts 4,000 virtual threads, both the actual heap usage and the total memory usage stay below 300 MB. This shows that even a large number of virtual threads does not consume much memory. Moreover, a virtual thread's stack is stored in the Java heap as stack-chunk objects that the GC can reclaim, which further reduces its footprint.

Limitations and usage suggestions of virtual threads

- If a virtual thread is executing a native method or a foreign function (Foreign Function & Memory API, JEP 424), it cannot yield, and its carrier thread is blocked.

- While running inside a synchronized block or method, a virtual thread cannot yield, so its carrier thread is blocked as well. ReentrantLock is recommended instead.

- ThreadLocal issues: virtual threads still support ThreadLocal, but because there can be a huge number of virtual threads, a lot of data may end up stored in ThreadLocals, requiring frequent GC to clean up and hurting performance. The official recommendation is to use ThreadLocal as little as possible and not to store large objects in a virtual thread's ThreadLocal. The JDK intends ScopedValue to replace ThreadLocal for many uses, but in JDK 21 it is only a preview API (JEP 446). This may be a major obstacle to large-scale adoption of virtual threads.

- Do not pool virtual threads. Virtual threads use very few resources, so they can be created in large numbers without pooling. Platform threads are expensive to create, so we usually pool them for sharing, but pooling itself introduces extra overhead, and for virtual threads it is not worth it. With virtual threads, drop the pooling mindset: create one when needed and discard it after use.
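A sketch of two of these recommendations together, in JDK 21 terms (class, record, and method names are hypothetical): every task gets a fresh virtual thread instead of coming from a pool, a Semaphore rather than a pool size limits how many tasks are inside the hypothetical downstream call at once, and shared state is guarded by a ReentrantLock rather than synchronized, so a thread waiting for it parks instead of pinning its carrier.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

class BoundedVirtualCalls {
    /** Shared counter guarded by a ReentrantLock rather than synchronized. */
    static final class Counter {
        private final ReentrantLock lock = new ReentrantLock();
        private long value; // guarded by lock

        void increment() {
            lock.lock();
            try {
                value++;
            } finally {
                lock.unlock();
            }
        }

        long get() {
            lock.lock();
            try {
                return value;
            } finally {
                lock.unlock();
            }
        }
    }

    record Result(int peakConcurrency, long completed) {}

    /** One new virtual thread per task; a Semaphore, not a pool, caps concurrent downstream calls. */
    static Result run(int tasks, int maxConcurrent) {
        Semaphore permits = new Semaphore(maxConcurrent);
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        Counter completed = new Counter();

        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    permits.acquire(); // waiting virtual threads park instead of holding a carrier
                    try {
                        peak.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
                        Thread.sleep(10); // hypothetical blocking downstream call
                    } finally {
                        inFlight.decrementAndGet();
                        permits.release();
                    }
                    completed.increment();
                    return null;
                });
            }
        } // close() waits for every task to finish
        return new Result(peak.get(), completed.get());
    }

    public static void main(String[] args) {
        System.out.println(run(200, 10));
    }
}
```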

Applicable scenarios for virtual threads

- A large number of IO blocking waiting tasks, such as downstream RPC calls, DB queries, etc.

- Large numbers of tasks that each need only a short processing time.

- Thread-per-request (one-request-one-thread) applications, such as those built on the mainstream Tomcat threading model or on Spring MVC, which uses a similar model. These applications can gain a large throughput improvement with only small changes.

5. Virtual thread stress test performance analysis

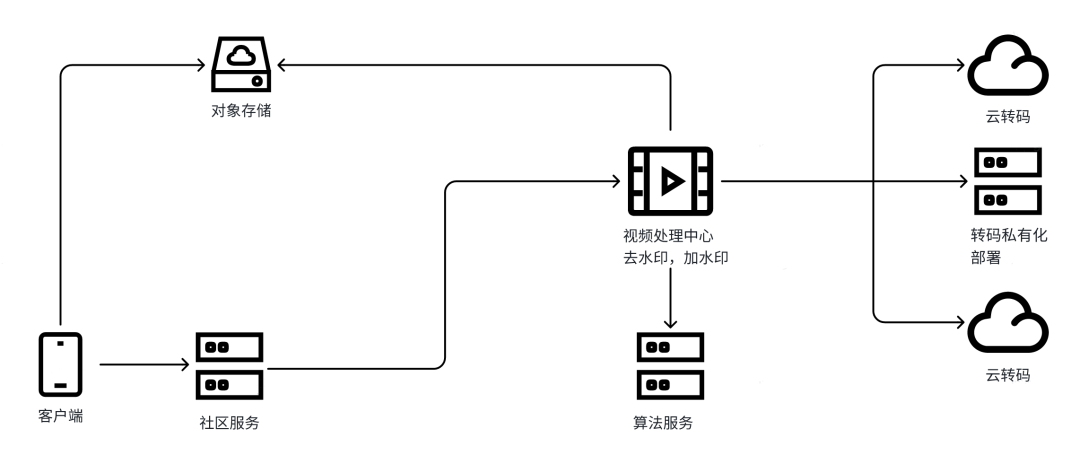

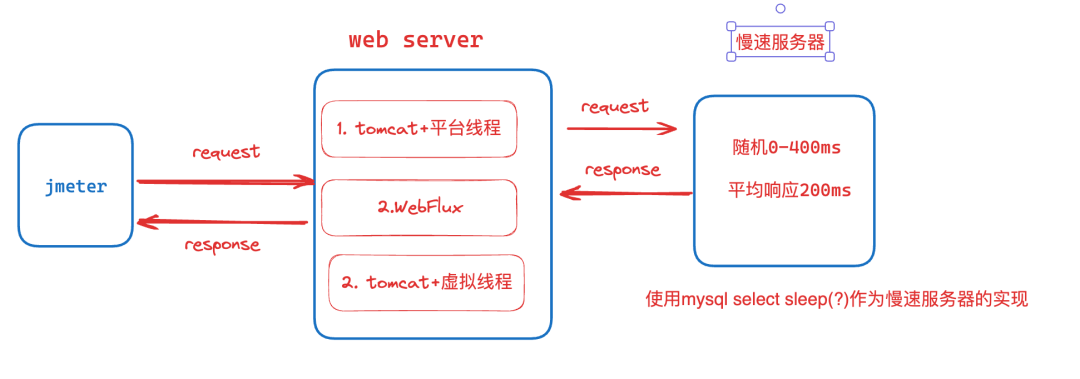

In the following tests, we simulate the most common scenario: a web container handling HTTP requests.

Scenario 1: Use the embedded Tomcat in Spring Boot to process HTTP requests, with the default platform thread pool as Tomcat's request-processing pool.

Scenario 2: Use Spring WebFlux to build an application based on the event-loop model that processes requests reactively.

Scenario 3: Use the embedded Tomcat in Spring Boot to process HTTP requests, with a virtual-thread executor as Tomcat's request-processing pool (Tomcat already supports virtual threads).

Test process

- JMeter starts 500 threads that send requests in parallel. Each thread waits for the response before sending its next request. The timeout for a single request is 10 s, and each test lasts 60 s.

- The web server under test accepts JMeter's requests, calls the slow server to obtain a response, and returns it.

- The slow server responds after a random delay, with a maximum response time of 1000 ms and an average of 500 ms.
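The article does not show how the slow server is implemented. A hypothetical stand-in using the JDK's built-in `com.sun.net.httpserver.HttpServer` might look like this: each request sleeps for a uniformly random 0 to 1000 ms (mean 500 ms, matching the parameters above) and then returns an empty JSON object. Serving it from a virtual-thread executor is our own choice for the sketch; port 8000 matches the URL used by the clients later in the article.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadLocalRandom;

class SlowServer {
    /** Starts a hypothetical slow server; port 0 picks any free port. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        server.createContext("/", exchange -> {
            try {
                // Uniform random delay in [0, 1000] ms: max 1000 ms, mean 500 ms
                Thread.sleep(ThreadLocalRandom.current().nextLong(0, 1001));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            byte[] body = "{}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        // One virtual thread per exchange, so sleeping handlers do not tie up platform threads
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8000);
        System.out.println("slow server on http://127.0.0.1:" + server.getAddress().getPort());
    }
}
```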

Metrics

Throughput and average response time: the higher the throughput and the lower the average response time, the better the performance.

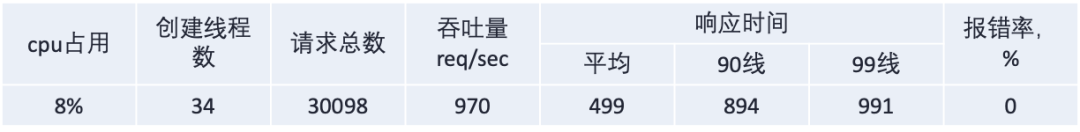

Tomcat + platform thread pool

By default, Tomcat uses the one-request-one-thread model. When Tomcat receives a request, it takes a thread from the thread pool to process it, and that thread stays occupied until the request completes. When no threads are free, requests wait in the queue until a thread is released. The default queue length is Integer.MAX_VALUE.

Default thread pool

By default, the thread pool contains at most 200 threads, so at most 200 requests can be processed at any moment. Each request calls the slow server in a blocking manner with an average RT of 500 ms, so a throughput of about 400 requests per second can be expected. The measured result, 388 req/sec, is very close to this expectation.
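The expectation above is an application of Little's law: throughput X equals the number of requests in flight N divided by the average response time R. Assuming every one of the 200 threads is always busy with a 0.5 s call:

$$
X = \frac{N}{R} = \frac{200}{0.5\,\text{s}} = 400\ \text{req/s}
$$

The same formula bounds every scenario in this test: JMeter keeps at most 500 requests in flight, so even a server that never queues cannot exceed 500 / 0.5 s = 1000 req/s.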

Increase thread pool

For the sake of throughput, production environments generally do not use the default and raise the pool size to server.tomcat.threads.max=500 or more. The stress-test results after raising it to 500+ are as follows:

Throughput grows in proportion to the number of threads. With more threads, requests wait less, and the average RT approaches the slow server's average response time.

Note, however, that platform-thread creation is limited by memory and by Java's thread-mapping model, so it cannot scale indefinitely. A large number of threads also makes the CPU spend much of its time on context switching, which reduces overall performance.

WebFlux

WebFlux differs from the traditional Tomcat threading model: it does not dedicate a thread to each request. Instead, it uses an event-loop model with non-blocking I/O to process many requests at once, which lets a limited number of threads handle a large number of concurrent requests.

In the stress-test scenario, WebClient makes non-blocking HTTP calls to the slow server, and a RouterFunction handles request mapping and processing.

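```java
import static org.springframework.web.reactive.function.server.RequestPredicates.GET;
import static org.springframework.web.reactive.function.server.RouterFunctions.route;
import static org.springframework.web.reactive.function.server.ServerResponse.ok;

import org.springframework.context.annotation.Bean;
import org.springframework.web.reactive.function.client.WebClient;
import org.springframework.web.reactive.function.server.RouterFunction;
import org.springframework.web.reactive.function.server.ServerRequest;
import org.springframework.web.reactive.function.server.ServerResponse;

// Non-blocking HTTP client pointing at the slow server
@Bean
public WebClient slowServerClient() {
    return WebClient.builder()
            .baseUrl("http://127.0.0.1:8000")
            .build();
}

// Map GET / to a handler that streams the slow server's response without blocking
@Bean
public RouterFunction<ServerResponse> routes(WebClient slowServerClient) {
    return route(GET("/"), (ServerRequest req) -> ok()
            .body(
                    slowServerClient
                            .get()
                            .exchangeToFlux(resp -> resp.bodyToFlux(Object.class)),
                    Object.class
            ));
}
```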

The WebFlux stress test results are as follows:

WebFlux requests are not blocked at all: only 25 threads achieve a throughput of 964 req/sec.

Tomcat + virtual thread pool

Compared with platform threads, virtual threads have a much smaller memory footprint, so a program can create a large number of them without exhausting system resources. Moreover, when a virtual thread calls Thread.sleep(), blocks on CompletableFuture.get(), waits for I/O, or waits for a lock, it is automatically unmounted, and the JVM switches to another waiting virtual thread. This raises the utilization of each platform thread and keeps platform threads from being wasted on pointless blocking.

In JDK 21, virtual threads are a standard feature (JEP 444), so --enable-preview is no longer needed at startup; it was only required in JDK 19 and 20, when virtual threads were a preview feature. Tomcat 10 already supports virtual threads, so we only need to replace Tomcat's platform thread pool with a virtual-thread executor.

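```java
import java.util.concurrent.Executors;

import org.springframework.boot.web.embedded.tomcat.TomcatProtocolHandlerCustomizer;
import org.springframework.context.annotation.Bean;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.client.RestTemplate;

// Replace Tomcat's platform thread pool with one virtual thread per request
@Bean
public TomcatProtocolHandlerCustomizer<?> protocolHandler() {
    return protocolHandler ->
            protocolHandler.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
}

// In the controller: an ordinary blocking call to the slow server
private final RestTemplate restTemplate;

@GetMapping
public ResponseEntity<Object> callSlowServer() {
    return restTemplate.getForEntity("http://127.0.0.1:8000", Object.class);
}
```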

The final stress-test results are as follows:

The virtual-thread results are essentially the same as those of WebFlux, yet we did not use any complex reactive programming techniques, and calls to the slow server still use the ordinary blocking RestTemplate. All we did was replace the thread pool with a virtual-thread executor, and that matched the more complex WebFlux approach.

The overall stress-test results are as follows:

From these results, we can draw the following conclusions:

- The traditional thread-pool approach performs poorly. Throughput can be improved by adding threads, but system capacity and resource limits must be taken into account. Even so, for most scenarios, using a thread pool to handle blocking operations remains a mainstream and sound choice.

- WebFlux performs very well, but it requires development entirely in a reactive style, so the cost and difficulty are relatively high. WebFlux also has compatibility issues with some mainstream frameworks: for example, the official MySQL driver does not support NIO, and there are ThreadLocal compatibility issues. Migrating an existing application basically means rewriting all of its code, with an uncontrollable amount of change and risk.

- Virtual threads perform very well, and their biggest advantage is that we did not change the business code or use any reactive techniques; the only change was replacing the thread pool with virtual threads. Despite this small change, performance improved significantly compared with the thread pool.

Based on these results, we can be optimistic that virtual threads will transform how our services and frameworks handle requests.

6. Summary

For a long time, server-side applications have handled each request with a dedicated thread, with requests independent of one another. This is the one-request-one-thread model. It is easy to understand and implement, and also easy to debug and performance-tune.

However, the one-request-one-thread style cannot simply be implemented with platform threads, because platform threads wrap OS threads, which are costly to create and limited in number. **For a server-side application that must handle a large number of requests concurrently, creating a platform thread per request is unrealistic.** This led to a number of non-blocking I/O and asynchronous programming frameworks, such as WebFlux and RxJava. When a request waits for an I/O operation, it temporarily gives up its thread and continues once the I/O completes, so a small number of threads can handle a large number of requests concurrently. These frameworks improve system throughput, but they require developers to be familiar with the underlying framework and to write code in a reactive style. The difficulty of debugging, the learning cost, and the compatibility issues of reactive frameworks discourage most people. Virtual threads change all of this: developers can write code in the way they find most comfortable, while virtual threads deliver high performance and high throughput automatically, greatly reducing the difficulty of writing highly concurrent server applications.


Text: creed

This article is original to Dewu Technology. For more exciting articles, please see: Dewu Technology official website

Reprinting without the permission of Dewu Technology is strictly prohibited, otherwise legal liability will be pursued according to law!
