1. Description

2. Real-time AI done right: timely insights and actions at scale

In my previous blog post, I laid out the challenges of current AI architectures and the inherent limitations they impose on data scientists and developers. These challenges hinder our ability to capitalize on real-time data growth, meet growing consumer expectations, adapt to an increasingly dynamic marketplace, and deliver proven, real-time business results. In short, even though AI brings some great benefits, it still falls short for the following reasons:

- Targeted forecasts based on broad demographic data fail to provide the insights needed to change behavior and drive impact.

- Batch processing and historical analysis cannot keep up with rapidly evolving consumer demands.

- Time, cost, and complexity delay how quickly we can train new models and act on changing market demands.

- The lack of visibility between teams and the various tool stacks further complicates and delays AI practitioners when trying to build applications that provide value.

Simply put, this outdated model of bringing data into ML/AI systems fails to deliver the types of results needed at the speed that businesses and consumers demand. But there is a better way to deliver real-time impact and drive value through AI.

Now, let's explore how organizations can transcend these limitations and join the ranks of enterprises that bring ML to their data, delivering smarter applications and more accurate AI predictions at the exact moment they can have maximum business impact.

3. Rethink data architecture and use AI to drive influence

With real-time AI, developers can now build AI-driven applications that not only predict behavior but also drive action at the exact moment it can have the greatest impact. The possibilities range from changing consumer behavior on an individual level, to gaining insights into user intent and context, to taking preventive measures that protect manufacturing uptime and supply chain resiliency. Move away from a world where you predict the percentage of users who will churn based on a broad demographic understanding of your users, and toward a model where you understand specific individuals and drive decisions, in the moment, that increase engagement and spend.

Achieving this shift will require changes in how AI systems are built. Most artificial intelligence today is based on large amounts of historical data, collected in daily or sometimes weekly batches. The result is a rear-view mirror look at historical behavior based on broad patterns and demographics. With real-time AI, you can connect to a series of events as they unfold, allowing you to spot key moments, signals, and outcomes as they happen. The result is a highly personalized understanding of consumer behavior, security threats, system performance, and more, along with the ability to intervene to change outcomes.

For example, the goal of a music app is not just to provide the right content, but to provide personalized, engaging content that keeps users listening longer, exploring new areas of music, new artists, and new genres, and engaging so much that they renew their subscription. The app needs to serve music based not just on the user's history, but on the user's intent while they are actively engaged in the app.

Think about the listener who has been listening to productivity-boosting instrumental music all morning, but now it's noon and they're heading to the gym. They want music that energizes them. If the app continues to offer Beethoven when the user wants to exercise to Beyoncé, the app is falling short, burdening the user with searching and potential frustration.

So, how does an app detect a change in intent while the user is in the app? This can be revealed through real-time behavior and actions. It could be that the user left a song they had been listening to and scrolled through the selection based on the ranking algorithm, but did not make a selection. The user then goes to one of the carousels provided by the app, but again does not select any options since they are all podcasts. Maybe they'll play a song from their old workout playlist. Additionally, context is important; it can be gleaned from factors such as the day of the week or time of day or even the user's location. Now, with intent and context, real-time ML models can more accurately predict what is needed in the current session.
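As a rough illustration of the signals described above, the sketch below summarizes a session's events into a few intent and context features and flags a likely change of intent. The event kinds, the `tag` field, and the threshold are all hypothetical names chosen for this example; a production system would feed features like these into a trained model rather than a fixed rule.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    """One in-app action. The event kinds used here are hypothetical labels."""
    kind: str            # "skip", "scroll_no_select", "carousel_no_select", or "play"
    timestamp: datetime
    tag: str = ""        # e.g. the playlist type of a played track


# Actions where the user turned down what the app offered (assumed set).
REJECTIONS = {"skip", "scroll_no_select", "carousel_no_select"}


def session_features(events: list[Event]) -> dict:
    """Summarize the current session into intent and context features."""
    plays = [e for e in events if e.kind == "play"]
    last = events[-1].timestamp if events else None
    return {
        "rejected_actions": sum(e.kind in REJECTIONS for e in events),
        "last_played_tag": plays[-1].tag if plays else None,
        "hour_of_day": last.hour if last else None,
        "day_of_week": last.weekday() if last else None,  # Monday == 0
    }


def intent_shift(features: dict, earlier_tag: str, threshold: int = 2) -> bool:
    """Flag a likely intent change: several rejected suggestions, then a
    play that differs from what the user was listening to earlier."""
    return (features["rejected_actions"] >= threshold
            and features["last_played_tag"] not in (None, earlier_tag))
```

In the gym scenario, a skip, an abandoned scroll, an abandoned carousel, and then a play from a workout playlist would yield three rejected actions and a last-played tag that differs from the morning's instrumental music, so the rule would fire.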

Once deployed to an application, real-time AI relies on feature freshness and low latency: how quickly features are updated and how quickly the application can then take action. The right infrastructure eliminates response lag and makes more efficient use of resources by computing these features in real time and making predictions only for active users. This experience gives listeners what they need, when they need it, with the ultimate goal of driving engagement and subscription renewals.

4. Flexibility and responsiveness

Real-time AI is built for speed and scale; it delivers the right data on the right infrastructure at the right time. This in turn lets you capture opportunities in context and train models on both historical and real-time data to make more accurate and timely decisions.

At its most advanced, real-time AI can monitor the performance of ML models. If a model's performance degrades, automatic retraining is triggered. Alternatively, "shadow" models can be trained alongside production models, and if they start to perform better than the models in production, they can be swapped in to replace the underperforming ones.
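The monitoring loop described here could be sketched roughly as follows. This is a minimal illustration, not a prescribed design: the rolling-accuracy metric, the window size, the accuracy floor, and the promotion margin are all assumptions made for the example.

```python
from collections import deque


class RollingAccuracy:
    """Accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int = 100):
        self.hits = deque(maxlen=window)  # old results fall off automatically

    def record(self, predicted, actual) -> None:
        self.hits.append(predicted == actual)

    def value(self) -> float:
        return sum(self.hits) / len(self.hits) if self.hits else 0.0


def next_action(prod: RollingAccuracy, shadow: RollingAccuracy,
                floor: float = 0.8, margin: float = 0.02) -> str:
    """Decide what to do given recent production and shadow accuracy.

    `floor` and `margin` are illustrative thresholds, not recommended values.
    """
    if shadow.value() > prod.value() + margin:
        return "promote_shadow"   # shadow clearly outperforms production
    if prod.value() < floor:
        return "retrain"          # production degraded, no better shadow
    return "keep"
```

Both trackers are fed the same live outcomes, so the comparison always reflects current traffic rather than an offline test set.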

Real-time AI can also make it easier to ensure all demographics are treated equally, because you can monitor and adjust for unintended consequences in real time. Imagine a model in production that starts to fail fairness and bias tests. In real time, enterprises can pull that model out of production and fall back to rules-based systems or models that represent business standards and guidelines. This approach provides the flexibility and responsiveness needed in a dynamically changing, high-risk environment.
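One way to picture such a fallback is a check on recent decisions that routes traffic to a rules-based scorer when a fairness metric drifts out of bounds. The sketch below uses the gap in positive-decision rates between groups (a demographic parity gap) purely as an example; the metric, the `max_gap` threshold, and the function names are assumptions, and real deployments typically track several fairness measures.

```python
def positive_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions per group, from (group, decision) pairs."""
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for group, decision in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def passes_parity(decisions: list[tuple[str, bool]], max_gap: float = 0.1) -> bool:
    """True when the largest gap in positive rates between groups is within bounds."""
    rates = positive_rates(decisions)
    return not rates or max(rates.values()) - min(rates.values()) <= max_gap


def choose_scorer(recent_decisions, model, rules, max_gap: float = 0.1):
    """Serve the model while it passes the check; otherwise fall back to rules."""
    return model if passes_parity(recent_decisions, max_gap) else rules
```

Because the check runs on a recent window of decisions, the switch back to the model can happen just as quickly once a retrained version passes again.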

5. Reduce time, cost and complexity

One of the main limitations of traditional AI is the significant effort and cost associated with data transfer and storage. In contrast, bringing ML capabilities to the data itself eliminates these transfers, saving time, cost, and complexity: a simplified architecture gives you a single environment to ingest data and process events, features, and models instantly at scale. Speed and productivity also increase because the lineage of features and training datasets becomes easier to understand. In other words, you get a much clearer picture of what's happening across multiple pipelines, lakes, views, and transformations. With direct access to data sources, you can accelerate understanding, especially when developing capabilities for a team or individual.

Since many customers are not active every day, there is no need to generate daily predictions across the entire customer base, which yields further cost savings. For example, if an organization uses a batch processing system and has 100,000 active users per day and 10 billion active users per month, there is no need to rescore all 10 billion customers every night. Doing so adds unnecessary and substantial compute cost, and the cost of storing the resulting data keeps growing.
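To make the arithmetic concrete, the short sketch below compares a nightly full rescore with scoring only that day's active users, using the figures from the example above. It assumes one prediction per user per day, which is a simplification; the function names are illustrative.

```python
def rescoring_overhead(daily_active: int, monthly_active: int) -> float:
    """How many times more predictions a nightly full rescore makes
    than scoring only the users who are active that day."""
    return monthly_active / daily_active


def unused_fraction(daily_active: int, monthly_active: int) -> float:
    """Fraction of nightly batch predictions made for users who are not active that day."""
    return (monthly_active - daily_active) / monthly_active


daily = 100_000              # active users per day (figure from the text)
monthly = 10_000_000_000     # active users per month (figure from the text)

overhead = rescoring_overhead(daily, monthly)   # 100000.0
wasted = unused_fraction(daily, monthly)        # 0.99999
```

With these figures, the batch approach computes 100,000 times more predictions each night than the active users actually need.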

Retraining a model to incorporate behavioral changes requires preserving, or being able to reproduce, each training dataset so that you can troubleshoot and audit. To reduce the amount of data copied and stored over time, each time a model is retrained you should be able to access the original, untransformed data and reproduce the training dataset using the feature versions that were used during training. This is very difficult with batch processing systems because the data has gone through multiple transformations across multiple systems and languages. In real-time systems, expressing the complete feature computation for training and production directly from raw events reduces the duplication of data that would otherwise need to be stored in multiple locations.

Bringing AI/ML to data sources creates a single way to express transformations. You know you're connected to the correct source, you know the exact definition of the transformation, and you can express what you need from the data more succinctly in a few lines of code, rather than passing it from pipeline to pipeline to pipeline, where multiple hand-offs introduce confusion.

6. Reduce friction and anxiety

As I pointed out in a previous blog post, team members across the data, ML, and application stacks often don't have broad visibility into or a deep understanding of ML projects. The tools they use vary greatly depending on where each team member sits in the stack.

These silos inject friction into the process and create confusion around questions like, “Is this ready for production? Once we actually go into production, will this work?” Or, “Despite millions of dollars invested, are these models having only minimal impact, falling far short of the promise or vision of delivering value?”

Bringing AI/ML to data enables a unified interface with a set of abstractions that support both training and production. The same feature definition can generate any number of training and test datasets and keep the feature store up to date as new data streams in. Additionally, a declarative framework lets you export feature definitions and the resource definitions they depend on, so you can check them into a code repository or CI/CD pipeline and spin up new environments with new data in different regions without moving the data out of its region. This makes it easy to integrate best practices, simplifies testing, and shortens the learning curve. It also inherently reduces friction and, through a more standardized format, provides greater visibility and a clearer understanding of what a specific piece of code is doing.
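The idea of one feature definition serving both training and production can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any particular product's API: `FeatureDef`, the example features, and the event format (dicts with a `kind` key) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FeatureDef:
    """A named, versioned feature computed directly from raw events (hypothetical)."""
    name: str
    version: int
    compute: Callable[[list[dict]], float]


# Declared once; these definitions could be exported and checked into a repository.
FEATURES = [
    FeatureDef("plays_count", 1,
               lambda evs: float(sum(e["kind"] == "play" for e in evs))),
    FeatureDef("skip_rate", 1,
               lambda evs: sum(e["kind"] == "skip" for e in evs) / len(evs) if evs else 0.0),
]


def feature_vector(events: list[dict]) -> dict[str, float]:
    """Apply every definition to one user's raw events; keys carry the version."""
    return {f"{f.name}_v{f.version}": f.compute(events) for f in FEATURES}


def training_rows(events_by_user: dict[str, list[dict]]) -> list[dict]:
    """Offline path: build a training dataset from historical raw events."""
    return [{"user": user, **feature_vector(evs)} for user, evs in events_by_user.items()]


def online_features(events_so_far: list[dict]) -> dict[str, float]:
    """Online path: the same definitions applied to the live session."""
    return feature_vector(events_so_far)
```

Because both paths call the same `feature_vector`, a training dataset can be regenerated from raw events for any recorded feature version, and the values served in production match the values the model was trained on.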

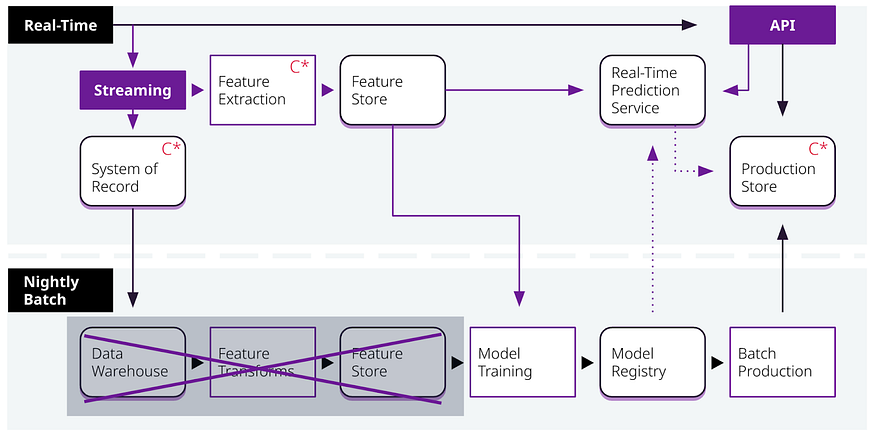

7. Real-time AI done right

Real-time AI solutions take a new approach by combining real-time data at scale with integrated machine learning in a complete, open data stack built for developers. With the right stack and the right abstractions, there is incredible promise for creating a new generation of applications that drive more accurate business decisions, predictive operations, and more engaging consumer experiences. This has the potential to unlock the value and promise of AI in a way that has so far been beyond the reach of all but a few businesses. It will yield instant access to large-scale data in all its forms. With integrated intelligence, real-time AI will provide timely insights into globally distributed operations, data, and users.

In an upcoming blog post, I will discuss the critical role of Apache Cassandra® in this real-time AI stack and some of the exciting new things you can expect from DataStax.