Introduction: This article is compiled from "Artificial Intelligence in the GPT Era," a talk given by Zhang Bo, academician of the Chinese Academy of Sciences, professor in the Department of Computer Science at Tsinghua University, and honorary dean of the Institute for Artificial Intelligence at Tsinghua University, at the "New Generation AI·New Era Finance" forum of the World Artificial Intelligence Conference on July 8, under the theme of "Intelligent Security." Academician Zhang Bo believes that financial intelligence is an inevitable trend, but that it requires top-level planning and deployment, and that a safe, mutually trusting system must be re-established. Humanity is a community with a shared future: only if the whole world unites to develop AI together, and pursues development and governance at the same time, can the healthy development of AI be ensured.

The following is the transcript of Academician Zhang Bo’s speech:

AI Safety in the GPT Era

Our era is called the ChatGPT era, the GPT era for short, or the AIGC (AI-generated content) era. In 2014, researchers proposed that neural networks could be used to generate a wide variety of content. This was a major breakthrough in artificial intelligence: we can now generate the text, images, speech, and video we need with neural networks, which has completely changed the face of artificial intelligence. In 2017, the Transformer model was proposed, another major breakthrough in artificial intelligence.

In 2018, OpenAI combined the Transformer with another technique: semantic representation of text based on word embeddings. These two breakthroughs were the fruit of 60 years of hard work. OpenAI then added a third technique, self-supervised learning, in which the model learns by predicting the next word. These three technologies together made ChatGPT possible. What is the significance of ChatGPT? Some people say that ChatGPT is general artificial intelligence; some experts say it is not general artificial intelligence at all. In my view, both statements are wrong. The correct statement is that ChatGPT takes a step toward general artificial intelligence. Microsoft describes it as a spark, a prototype, or the dawn of general artificial intelligence, and that characterization is correct.

Why is it a step toward general artificial intelligence? Because it exhibits two characteristics of general artificial intelligence:

1. In dialogue, ChatGPT has achieved the goal of artificial intelligence. This is the goal set by behaviorism, and also the goal set by materialist philosophy: artificial intelligence should make machine behavior similar to human behavior, without requiring the machine's internal working mechanism to match that of the human brain. This is a mainstream school of thought in artificial intelligence. By the standard of this materialist, or behaviorist, school, ChatGPT meets the criteria for artificial intelligence. Why? Because our conversations with ChatGPT are very close to conversations between humans.

2. Open domains and multiple tasks. This has never before been possible in the history of artificial intelligence. All past successes of artificial intelligence were in limited domains and single tasks, whereas ChatGPT's dialogue is independent of domain. In other words, for the first time in artificial intelligence, you can ask it any question. In the past, you had to specify clearly which domain a problem belonged to, and the dialogue was tied to that domain. These two forms of generality are remarkable.

Why can't we call it general artificial intelligence yet? Because it meets these requirements only in dialogue, or chat; in many other fields, artificial intelligence cannot yet do so. Whether it will in the future still depends on our efforts. Our conclusion is that ChatGPT is a very important step toward general artificial intelligence.

What does it do best? For example, I asked it: "I'm giving a report today. Please share your views on AI security in the banking industry." This is exactly what it is best at. Its answer will be more comprehensive than we could have imagined. With this foundation in place, we can see the future.

ChatGPT has produced three major changes:

1. It will certainly bring about a revolution in science and technology. Why? In the past, compared with information technology, the troublesome problem for artificial intelligence was that it had no theory, and no theory could be established. The main reason was that artificial intelligence could only solve problems in limited domains and single tasks, and a general theory cannot be built on problems confined to limited domains. ChatGPT has cleared this obstacle. At least in natural language processing, a general theory is now possible, because the approach is independent of domain. A general theory of artificial intelligence can only be established if it is independent of domain, and this will inevitably lead to a revolution in science and technology.

2. It will certainly bring about changes in industry. Comparing the artificial intelligence industry with the information industry, we find that the information industry has developed rapidly and continuously, while the artificial intelligence industry has developed slowly and tortuously. The reason is that before the information industry was established, its theories were already mature, including computer theory, communication theory, and control theory, and under their guidance information technology developed very smoothly. Artificial intelligence still has no such theory, and all its achievements are confined to particular domains. As a result, its hardware and software are all specialized and their markets are very small, unlike computer hardware and software, which are general-purpose and have very large markets. In the past 30 to 40 years, the information industry produced Intel, IBM, and Microsoft. Artificial intelligence has been developing for more than 60 years, yet we have basically never seen an artificial intelligence counterpart of Intel, IBM, or Microsoft. ChatGPT has the potential to clear this obstacle because it is general, cross-domain, and open-domain.

Building applications on top of foundation models will inevitably produce qualitative changes. Take customer service: all the customer service systems we build now, including banks' customer service systems, amount to teaching an "idiot" to do customer service. Why? Because the computer starts with nothing. If you teach it to do customer service, aren't you teaching an "idiot"? That is why these systems make very low-level mistakes. Now, building on ChatGPT, we start from at least the level of a high school student. Teaching a high school student to do customer service will certainly give different results from teaching an "idiot" as in the past. This change will definitely happen.

3. It will make artificial intelligence governance unavoidable. The most basic learning method we use now is called "Next token prediction," which is completely different from the way humans learn. How does it learn a text? It uses the preceding text to predict the next word. This learning method inevitably brings one result: the output is uncertain, because a prediction based on probabilities is strongly affected by the prompt.
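To make the idea concrete, here is a minimal sketch of next-token sampling, assuming a hypothetical lookup table (`TOY_SCORES`) in place of a neural network. The scores are turned into probabilities with a softmax, and a token is then drawn at random, so the same prompt can yield different continuations, while a different prompt shifts the whole distribution.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw next-token scores into probabilities (higher temperature = flatter)."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy "model": a lookup table of next-token scores keyed by the prompt.
# A real model computes such scores with a neural network over the whole context.
TOY_SCORES = {
    "The bank approved the": {"loan": 2.0, "request": 1.5, "account": 1.0},
    "The river bank was": {"muddy": 2.0, "steep": 1.2, "loan": -1.0},
}

def next_token_distribution(prompt, temperature=1.0):
    scores = TOY_SCORES[prompt]
    tokens = list(scores)
    probs = softmax([scores[t] for t in tokens], temperature)
    return dict(zip(tokens, probs))

def sample_next_token(prompt, rng, temperature=1.0):
    """Draw one token at random, weighted by its predicted probability."""
    dist = next_token_distribution(prompt, temperature)
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]

rng = random.Random(0)
print(next_token_distribution("The bank approved the"))
print([sample_next_token("The bank approved the", rng) for _ in range(8)])
print(next_token_distribution("The river bank was"))
```

Even with a fixed prompt the sampled sequence varies from draw to draw, and changing the prompt changes which tokens are likely at all.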

Everyone will ask: why do it this way, if the output is uncertain? I can tell you that this uncertainty creates the necessary conditions for emergence. Emergence is impossible without uncertainty; uncertainty is the price we must pay to make the model creative. If we don't want to pay this price, there will be no emergence and no creativity.
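The trade-off between uncertainty and creativity can be illustrated with sampling temperature, a standard decoding setting that the talk itself does not mention. In this sketch, with four hypothetical token scores, lowering the temperature concentrates probability on one token and drives the entropy toward zero, approaching deterministic greedy decoding; raising it spreads probability across alternatives, which is where variety, and also error, comes from.

```python
import math

def softmax(scores, temperature):
    """Probabilities from scores; dividing by the temperature sharpens or flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits: 0 means the next token is fully predictable."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def greedy(scores):
    """The temperature -> 0 limit: always pick the highest-scoring token (deterministic)."""
    return max(range(len(scores)), key=lambda i: scores[i])

scores = [3.0, 1.5, 1.0, 0.2]  # hypothetical scores for four candidate tokens

for t in (0.1, 0.5, 1.0, 2.0):
    probs = softmax(scores, t)
    print(f"T={t}: top-token prob={max(probs):.3f}, entropy={entropy_bits(probs):.3f} bits")
```

Removing the randomness entirely (greedy decoding) makes the output repeatable but also removes the alternatives from which novel answers can emerge.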

This uncertainty inevitably leads to three consequences:

1. Mistakes are inevitable. To be creative, it cannot avoid making mistakes. When using ChatGPT, you will see that sometimes it produces great results and sometimes complete nonsense. Everyone is surprised that such an intelligent system can talk nonsense, but nonsense is a given.

2. Robustness is very poor. It is easily misled by prompts: we can use different prompts to make it generate different results, which means it can be used to do both good and bad things.

3. It lacks self-knowledge. It doesn't know when it is wrong. Even if you tell it that it is wrong, it cannot correct itself; humans must help correct it behind the scenes.

These three problems give rise to artificial intelligence security issues. Of course, security also depends heavily on data quality.

For example, I asked it: "A man is standing facing south, and the sun is on his right. Is it morning, noon, or evening?" It answered correctly: "It is evening." This requires imagination and reasoning. I said: "No, it's morning." It immediately replied: "Sorry, I was wrong just now. It should be morning." This shows that it doesn't really know whether its answer is right or wrong. When I deliberately steered it toward the wrong answer, it immediately admitted an "error" and abandoned its original, correct answer. Note that this behavior stems from the "Next token prediction" method just mentioned.
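The correct answer follows from two explicit steps, sketched below under the stated assumption of a Northern Hemisphere location outside the tropics: facing south, your right-hand side is west, and a sun in the west means evening. A rule-based derivation like this returns the same answer no matter what the user asserts afterwards, which is exactly the stability the model lacked.

```python
# Compass headings in degrees, measured clockwise from north.
HEADINGS = {"north": 0, "east": 90, "south": 180, "west": 270}
DIRECTION_AT = {deg: name for name, deg in HEADINGS.items()}

def right_hand_side(facing):
    """Your right-hand side is 90 degrees clockwise from the way you face."""
    return DIRECTION_AT[(HEADINGS[facing] + 90) % 360]

def time_of_day(sun_direction):
    """Coarse rule, assuming the Northern Hemisphere outside the tropics:
    the sun rises in the east, is due south at noon, and sets in the west."""
    return {"east": "morning", "south": "noon", "west": "evening"}.get(sun_direction, "unknown")

sun = right_hand_side("south")
print(sun, time_of_day(sun))  # west evening
```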

In addition, there are value problems caused by the data. For example, when asked to write a novel about the epidemic, it wrote that "the epidemic came from China." Its data contain many Western viewpoints, and this kind of bias will certainly cause problems. What if you ask it how to pick a lock? It first says that this is illegal, but then goes on to teach you how to pick one.

In other words, it has none of the moral, ethical, or political standards we require. This is also a very big problem. In addition, it is vulnerable to attacks such as adversarial examples.

Governance therefore has two parts. First, governance of the model itself.

Large models generate all kinds of audio, video, and text, but the generated content will not necessarily meet our requirements or our ethical, moral, or political standards, and this is inevitable. Don't think this happens because we designed the model badly. As long as we use this kind of model, the foundation model behind ChatGPT, we will certainly get this result. It is the price we pay for creativity and diversity.

What can be done? An important governance approach is AI alignment, in which humans help the model through supervised learning and reinforcement learning from human feedback, the so-called "RLHF." Today, ChatGPT-style models in China and abroad all undergo a great deal of governance work before launch; otherwise they would not be usable at all.
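RLHF typically begins by training a reward model on human preference pairs. The sketch below is a deliberately tiny stand-in: a linear reward over three hypothetical hand-made features, trained with the Bradley-Terry pairwise loss so that labeler-preferred answers score higher. Real systems use a neural reward model and then fine-tune the language model against it with reinforcement learning (for example PPO), which is not shown here.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def softplus(z):
    """log(1 + e^z), computed without overflow."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))

def reward(weights, features):
    """Linear reward: a stand-in for a neural reward model."""
    return sum(w * f for w, f in zip(weights, features))

def preference_loss(weights, chosen, rejected):
    """Bradley-Terry loss: -log P(chosen is preferred over rejected)."""
    return softplus(-(reward(weights, chosen) - reward(weights, rejected)))

def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Plain gradient descent on the pairwise loss, one pair at a time."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            step = lr * sigmoid(-margin)  # steps shrink once the preferred answer clearly wins
            weights = [w + step * (c - r) for w, c, r in zip(weights, chosen, rejected)]
    return weights

# Hypothetical features per answer: [helpfulness, declines_illegal_request, gives_harmful_detail]
pairs = [
    ([0.8, 1.0, 0.0], [0.9, 0.0, 1.0]),  # labeler prefers declining to explain lock-picking
    ([0.9, 0.0, 0.0], [0.2, 0.0, 0.0]),  # labeler prefers the more helpful harmless answer
]
w = train_reward_model(pairs, dim=3)
print([round(x, 2) for x in w])
```

The learned weights encode the labelers' judgments; this is the sense in which human feedback, rather than the pre-training data alone, shapes what the aligned model is steered toward.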

Second, governance of users, to prevent abuse and misuse.

Hong Kong primary schools have a third-grade composition titled "Flying House." I asked ChatGPT to write "Flying House" as a third-grade student. The result was very good, almost the same as what a third grader would write. What if primary and secondary school students use it to do their homework? People can take content generated by a large model and claim that they painted the picture or wrote the article themselves. The question is how to identify such texts and images: how can we prove that a text, image, photo, or piece of music was generated by a machine? I can tell you that it is very difficult. Some flaws can perhaps be found in portrait photos, but it is hard to tell whether images, and especially text, were produced by a machine or a human. This also raises the issue of copyright ownership.

Do articles, drawings, and songs produced by machines have copyrights? And to whom should the copyright belong? The Americans adopted a very simple approach: the copyright belongs to the user. In discussions in China, many people felt this was very unreasonable. Why? Three French students used such software to create a painting and auctioned it for 430,000 US dollars, and all of that money went to them. Many lawyers pointed out that this is unreasonable and that the interests of the software developers and platform providers should be considered; otherwise, who would still be willing to build platforms? So why does the United States give copyright to users? Because there is no way to determine whether a painting was drawn by a person or by a computer, so it simply assigns the copyright to the user. This is their approach; we have not yet worked out a good one.

There are many more examples of the dangers of abuse. Criminals use AI to fake identities, causing property damage and economic losses, and can also use it to slander others. Computers can now write text at basically the same level as humans. In the future, 90% of the posts on the Internet will be written by machines; will we still be able to see the truth about an incident? When an incident occurs and most people online oppose something, does that reflect the opinion of the majority, or was it written by a small number of people manipulating machines? Since ChatGPT came out, this problem has become a real threat.

There are also fraud problems in China, especially financial fraud; many frauds use fake identities, and AI now makes such fraud relatively easy. The materials just mentioned were all provided by Ruilai Intelligence (RealAI), a company that focuses on artificial intelligence security. Next, let's talk about issues related to finance.

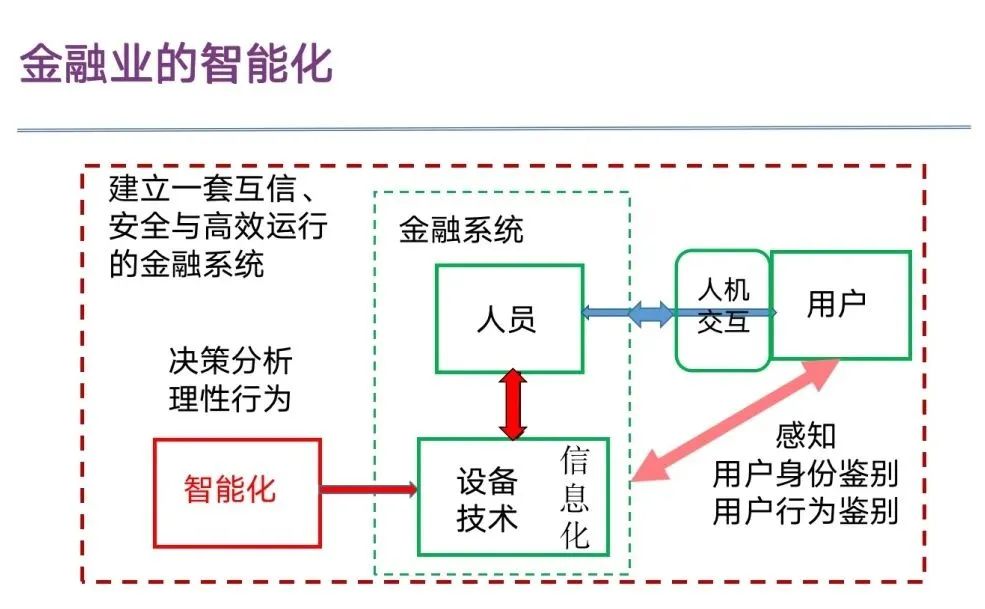

From the perspective of financial services, informatization has brought great changes, allowing financial institutions to provide high-quality services anytime and anywhere. Despite all these technological changes, the relationship between staff and equipment, and between staff and technology, has not changed: we still serve customers through staff who operate equipment and use technology. The problem is that informatization brought one very important change. When financial staff serve users, they are often no longer face to face; the interaction has become "back to back," that is, human-computer interaction. This immediately creates a demand for intelligence.

First, determining the user's identity: who are you? Face to face, we never had this problem; back to back, we certainly do. More important still is identifying user behavior: is this behavior legal? Is it money laundering? Is it a scam? In the past this was relatively easy face to face, but back to back it is much harder. Because users are dealing with machines, user behavior identification becomes a problem.
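Behavior identification in practice combines many signals and models. As one simple baseline (an illustrative assumption, not a production anti-money-laundering system), the sketch below flags transactions whose amounts lie far from a customer's history, using the median and median absolute deviation (MAD) so that a few past outliers do not distort the baseline. The history values and the 3.5 cut-off are hypothetical.

```python
import statistics

def robust_z_scores(history, new_amounts):
    """Score new amounts against past ones using the median and MAD instead of mean/std."""
    med = statistics.median(history)
    mad = statistics.median([abs(x - med) for x in history])
    # 1.4826 * MAD estimates the standard deviation for normally distributed data;
    # fall back to 1.0 if the history has no spread at all.
    scale = 1.4826 * mad if mad > 0 else 1.0
    return [(x - med) / scale for x in new_amounts]

def flag_unusual(history, new_amounts, threshold=3.5):
    """Return the new amounts whose robust z-score exceeds the threshold."""
    scores = robust_z_scores(history, new_amounts)
    return [x for x, z in zip(new_amounts, scores) if abs(z) > threshold]

history = [120, 80, 95, 110, 130, 100, 90, 105]  # hypothetical past transaction amounts
print(flag_unusual(history, [115, 98, 5000]))  # [5000]
```

A flag like this only says "unusual for this customer"; deciding whether it is fraud or laundering still requires further checks.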

The relationship between staff and equipment has also changed in an important way. In the past it was one-directional: staff used technology and equipment. Now technology and equipment also take part in decision-making and analysis. For example, lenders use data-driven loan models built with deep learning to decide whether to lend to a given customer. This raises a very important issue: we must re-establish a financial operating system that is mutually trusting, secure, and efficient. In the past, finance meant dealing with people, and credit was based on trust in people. Now everything is done by machines. Can we trust them? Will they be fair? Let me tell you: whether you use large models or data-driven models, this is certainly unsafe and unfair. Trust has to be re-established here.
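Whether such a loan model "would be fair" can at least be measured. One common check, shown below on hypothetical decisions for two groups, is the demographic parity gap: the difference in approval rates between groups. It is only one of several fairness criteria, and a small gap does not by itself prove that a model is fair.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns the approval rate per group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions):
    """Largest difference in approval rate between any two groups (0 = equal rates)."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions for applicants from two groups, A and B.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]
print(approval_rates(decisions))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```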

I just discussed this issue with the leaders attending the meeting. Financial intelligence is an inevitable trend, but we should not pursue it piecemeal; we must have top-level planning and deployment. Without it, it will be difficult to re-establish a safe, mutually trusting system. In the past, security and mutual trust relied on people; now we must recognize the following trend. The problem that informatization and intelligence bring to any industry is that systems become increasingly fragile and vulnerable to attack: the more informatized and intelligent a system is, the more fragile it is. As we all know, attack is relatively easy: an attack that succeeds once in 100 attempts is a good attack. Defense is difficult: a defense that is broken once in 100 attempts is useless. The two requirements are not symmetric at all. Defense must be foolproof, while an attack only needs to succeed once. Because more informatized and intelligent systems are more fragile and more vulnerable, security must come first. Higher informatization and higher intelligence are better only when safety is ensured.
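The asymmetry between attack and defense is easy to quantify if we assume each attempt is independent. Reading the talk's figures as a 1% chance of success per attempt, an attacker who tries 100 times breaks through with probability 1 - (1 - 0.01)^100, about 0.634. Conversely, to keep the overall chance of a breach over 100 attempts below 1%, each individual attempt may succeed with probability of only about 0.01%.

```python
def chance_of_at_least_one_success(p_per_attempt, attempts):
    """Probability that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p_per_attempt) ** attempts

def required_per_attempt_failure_rate(max_overall_breach, attempts):
    """Largest per-attempt breach probability a defense can tolerate so that, over
    `attempts` independent attacks, the overall breach chance stays at the target."""
    return 1.0 - (1.0 - max_overall_breach) ** (1.0 / attempts)

print(round(chance_of_at_least_one_success(0.01, 100), 3))    # 0.634
print(f"{required_per_attempt_failure_rate(0.01, 100):.2e}")  # 1.00e-04
```

The independence assumption is generous to the defender; a real attacker adapts after each failure, which makes the asymmetry worse.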

We now have many identity verification methods, but most of them are insecure, as I believe everyone has experienced. Take face recognition: if the person on the left is not the person on the right, of course they cannot get in. But once the person on the left puts on a pair of specially crafted glasses, the computer mistakes them for the user on the right. The model recognizes faces in a completely different way from humans. Although its recognition rate is very high, it is very fragile and vulnerable to attack: local texture changes can alter the recognition result.
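The glasses attack targets a deep face-recognition network, but the underlying effect can be shown on a much simpler model. The sketch below assumes a hypothetical 20-feature logistic matcher and applies the fast gradient sign method (FGSM), a standard adversarial-example technique; it is not claimed to be the method used in the glasses demonstration. Each feature moves by at most 0.25, yet because every nudge pushes the score the same way, the impostor's match probability rises from about 0.12 to about 0.62.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def match_probability(weights, bias, features):
    """Toy logistic 'face matcher': probability that `features` belong to the enrolled user."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def fgsm_toward_match(weights, features, epsilon):
    """Fast gradient sign method for a linear score: the gradient of the score with
    respect to the input is just `weights`, so nudge every feature by epsilon in the
    direction of sign(weight) to raise the match score as much as possible."""
    return [x + epsilon * (1 if w > 0 else -1 if w < 0 else 0)
            for w, x in zip(weights, features)]

# Hypothetical 20-dimensional model and impostor input.
weights = [0.5, -0.5] * 10
bias = 0.0
impostor = [-0.2, 0.2] * 10

adversarial = fgsm_toward_match(weights, impostor, epsilon=0.25)
print(round(match_probability(weights, bias, impostor), 3))     # 0.119
print(round(match_probability(weights, bias, adversarial), 3))  # 0.622
```

In high-dimensional inputs such as images, many tiny coordinated changes add up in the same way, which is why a local texture pattern can flip a confident recognition result.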

Besides adversarial example attacks, deepfakes can also break the system. The face on the left is used to replace the victim's face on the right, and the swapped face can even shake its head and blink, so it easily gets in. This happens in real applications: face swapping can completely fool the system. We have studied security detection methods that determine whether a face is fake or real, to deal with this risk.

Although the Next token prediction model brings us many benefits, it inevitably brings problems such as uncertainty, errors, poor robustness, lack of interpretability, and lack of self-knowledge. The uncertainty and limitations of financial data themselves add further effects, so we created an artificial intelligence security platform to address these problems.

In conclusion:

1. Humanity is a community with a shared future. Only when the whole world unites to jointly develop artificial intelligence can the healthy development of artificial intelligence be ensured.

2. Development and governance must be addressed simultaneously to ensure the healthy development of artificial intelligence.
