The memory management subsystem may be the most complex subsystem in the Linux kernel. It supports many functional requirements, such as page mapping, page allocation, page reclaim, page swapping, hot and cold pages, emergency pages, page fragmentation management, the page cache and page statistics, and it also has demanding performance requirements. This article looks at memory management from three angles: the memory management hardware architecture, the division of the address space and the memory management software architecture. It attempts a high-level analysis and summary of how the hardware and software architectures fit together.

Memory management hardware architecture

Because memory management is a core function of the kernel, its performance is improved not only through software but also through extensive optimization in the hardware architecture. The figure below shows the memory hierarchy of a current mainstream processor.

As the figure shows, the hardware provides three optimization paths for reading and writing memory through the cache hierarchy.

1) First, the L1 cache can be looked up by virtual address. The virtual address (VA) issued by the CPU is used to search the L1 cache directly, without first translating it into a physical address (PA), which speeds up the lookup. Looking up a cache by VA does have drawbacks, including protection (security) issues and address aliasing, and the CPU needs special design to compensate for them. For details, see "Computer Architecture: A Quantitative Approach".

2) If the L1 cache misses, the VA must be translated into a PA. Linux implements memory mapping with page tables, but the page tables themselves live in memory. If every translation had to read them from memory, translation would be very slow. The CPU therefore speeds up translation with the TLB (translation lookaside buffer), a hardware unit in the MMU that caches recent translations.

3) Once the PA is known, the L2 cache is searched. The L2 cache is generally an order of magnitude larger than the L1 cache, so it holds much more data and catches many of the accesses that miss in L1. On a hit, the data is returned without accessing main memory.

In short, modern processors add hardware such as multi-level caches and the TLB to make memory access more efficient.

Memory mapping space division

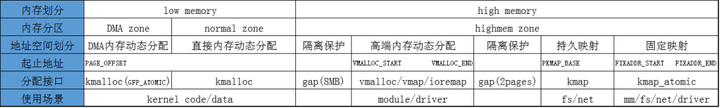

The kernel divides the address space it maps into several regions, according to how and where the memory is used. Each region has its own start and end addresses, allocation interfaces and usage scenarios. The figure below shows a common 32-bit address space layout.

- DMA dynamic allocation space:

Some DMA devices cannot reach all of memory because of their limited addressing capability. For example, early ISA devices could only perform DMA within a 24-bit address space, that is, only in the first 16 MB of memory. The kernel therefore sets aside a separate region for dynamic DMA allocations, the DMA zone. Memory from it is requested through the kmalloc interface with the GFP_DMA flag.

- Direct-mapped dynamic allocation space:

For efficiency, the kernel maps memory with a simple linear mapping. However, a 32-bit CPU can address only 4 GB, and the kernel address space starts at 3 GB, so the kernel has only 1 GB of address space. When physical memory exceeds about 1 GB (896 MB on x86 with the default layout), not all of it can be mapped directly. The physical memory that cannot be mapped directly forms the highmem zone. The region between the DMA zone and the highmem zone is the normal zone, which is mainly used for the kernel's dynamic memory allocations. Memory from it is requested through the kmalloc interface.

- High memory dynamic allocation space:

Memory allocated from this space is virtually contiguous but physically non-contiguous. It is generally used for modules and drivers that the kernel loads dynamically. After the kernel has been running for a long time, physical memory becomes badly fragmented. A request for a large physically contiguous block is then hard to satisfy and can easily fail. Several interfaces serve different needs:

vmalloc: the caller specifies only the size; the physical pages and the virtual address are allocated implicitly;

vmap: the caller specifies an array of pages; the virtual address is allocated implicitly;

ioremap: the caller specifies a physical address and size; the virtual address is allocated implicitly.

- Persistent mapping space:

Context switches are accompanied by TLB flushes, which degrade performance, yet some modules that use high memory have strict performance requirements. The TLB entries for the persistent mapping space are not flushed on a context switch, so high memory mapped there can be accessed more efficiently. Mappings in this space are obtained through the kmap interface. kmap and vmap differ in scope. vmap maps a group of physically non-contiguous pages into a contiguous virtual range. kmap maps only a single page into the virtual address space. kmap is used mainly in subsystems such as fs and net that need fast access to high memory.

- Fixed mapping space:

The problem with persistent mapping is that kmap may sleep. It therefore cannot be used where blocking is not allowed, such as in interrupt context or inside spinlock critical sections. To solve this, the kernel reserves a fixed mapping space whose interfaces never sleep. Pages are mapped into it through the kmap_atomic interface. kmap_atomic is used in situations similar to kmap, mainly in subsystems such as mm, fs and net that need fast access to high memory and cannot sleep.

Different CPU architectures divide the address space differently. The memory allocation interfaces, however, keep the same semantics on every architecture, so these differences stay invisible to other modules.

Because 64-bit CPUs generally do not need high memory (though they can support it), their address space division differs considerably from that of 32-bit CPUs. The following figure shows the x86_64 kernel address space layout:


Memory management software architecture

The core job of kernel memory management is allocating and reclaiming memory, organized into two systems: page management and object management. Page management is a two-level hierarchy, and object management is a three-level hierarchy. Moving from the top level down, the allocation cost and the negative impact on the CPU cache and TLB both increase.

Page management hierarchy: a two-level structure consisting of the hot/cold (per-CPU) page cache and the buddy system, responsible for caching, allocating and reclaiming memory pages.

Object management hierarchy: a three-level structure consisting of the per-CPU cache, the slab cache and the buddy system, responsible for caching, allocating and reclaiming objects. An object here is a memory block smaller than a page.

Memory release follows the same hierarchy as allocation. A freed object goes first to the per-CPU cache, then to the slab cache, and finally back to the buddy system.

The block diagram contains three main modules: the buddy system, the slab allocator and the per-CPU (hot/cold) cache. Their comparative analysis is as follows.

Original author: Geek Rebirth