1 Understand time complexity

1.1 What is time complexity?

Time complexity is a function that qualitatively describes the running time of an algorithm. In software development, it helps developers estimate how long a program will run. The time consumed is usually represented by the number of basic operations the algorithm performs, assuming by default that each operation takes the same amount of CPU time. If the problem size of the algorithm is n, the number of operations can be written as a function f(n). As the data size n increases, the growth rate of the execution time follows the growth rate of f(n). This relationship is called the asymptotic time complexity of the algorithm, or time complexity for short, and is denoted O(f(n)), where n is the problem size.
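To make f(n) concrete, here is a minimal sketch that counts the operations a simple loop performs. The helper name `sumWithCount` and the choice to count one operation per loop iteration are assumptions made for illustration only.

```javascript
// Hypothetical helper: sums 1..n and counts how many times the loop body runs.
function sumWithCount(n) {
  let sum = 0;
  let ops = 0; // number of operation units executed
  for (let i = 1; i <= n; i++) {
    sum += i;
    ops++;
  }
  return { sum, ops };
}

console.log(sumWithCount(10).ops);   // 10
console.log(sumWithCount(1000).ops); // 1000
```

Here f(n) = n, so the operation count grows in step with the input size and the loop is O(n).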

1.2 What is big O

Big O represents an upper bound on an algorithm's execution time. It can also be understood as the running time in the worst case. Both the amount and the order of the input data strongly affect execution time; the worst case refers to the input for which the algorithm runs longer than for any other input of the same size.

We usually say that the time complexity of insertion sort is O(n^2), but it depends heavily on the input data. If the input is already sorted, insertion sort runs in O(n); if the input is in completely reverse order, it runs in O(n^2). Because the worst case is O(n^2), we say that the time complexity of insertion sort is O(n^2).
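The gap between the best and worst case can be observed by counting comparisons. The sketch below is an illustration only: `countInsertionComparisons` is a hypothetical helper, and counting comparisons is just one way to measure the work done.

```javascript
// Hypothetical helper: insertion sort that also counts element comparisons.
function countInsertionComparisons(input) {
  const arr = input.slice(); // work on a copy so the caller's array is untouched
  let comparisons = 0;
  for (let i = 1; i < arr.length; i++) {
    const curr = arr[i];
    let end = i;
    while (end > 0) {
      comparisons++;
      if (curr >= arr[end - 1]) break; // curr is already in place
      arr[end] = arr[end - 1];         // shift the larger element right
      end--;
    }
    arr[end] = curr;
  }
  return comparisons;
}

const size = 8;
const sorted = Array.from({ length: size }, (_, i) => i); // [0, 1, ..., 7]
const reversed = sorted.slice().reverse();                // [7, 6, ..., 0]

console.log(countInsertionComparisons(sorted));   // 7  (n - 1)
console.log(countInsertionComparisons(reversed)); // 28 (n * (n - 1) / 2)
```

For 8 elements the sorted input needs n - 1 comparisons, while the reversed input needs n(n - 1)/2, which is where O(n) and O(n^2) come from.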

Quick sort is usually quoted as O(nlogn). Its worst-case time complexity is O(n^2), while its typical case is O(nlogn). Strictly by the definition of big O, the time complexity of quick sort should be O(n^2), but we still say that it is O(nlogn); this is the default convention in the industry.

The time complexity of binary search is O(logn): the data size is halved at each step until it is reduced to 1. This is equivalent to asking which power of 2 equals n, that is, how many times n can be divided by 2, which is logn times (logarithm base 2).
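The halving argument can be checked directly. This is a small sketch under the assumption that each step keeps half of the data, rounded down; `countHalvings` is a hypothetical name.

```javascript
// Hypothetical helper: how many times can n be halved before it reaches 1?
function countHalvings(n) {
  let steps = 0;
  while (n > 1) {
    n = Math.floor(n / 2);
    steps++;
  }
  return steps;
}

console.log(countHalvings(8));    // 3
console.log(countHalvings(1024)); // 10
console.log(countHalvings(1000)); // 9
```

The step count equals the base-2 logarithm of n, rounded down, so doubling the input adds only one more step.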

The time complexity of merge sort is O(nlogn). In top-down merge sort, splitting the data from size n down to size 1 takes O(logn) levels, and the merging done at each level on the way back up costs O(n), so the overall time complexity is O(nlogn).
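The text does not include a merge sort implementation, so the following is only a minimal top-down sketch written for illustration. The function names `merge` and `mergeSort` are assumptions, and this version returns a new array rather than sorting in place.

```javascript
// Merge two already sorted arrays into one sorted array: O(n) work.
function merge(left, right) {
  const result = [];
  let i = 0;
  let j = 0;
  while (i < left.length && j < right.length) {
    if (left[i] <= right[j]) {
      result.push(left[i++]);
    } else {
      result.push(right[j++]);
    }
  }
  // Append whatever remains on either side.
  while (i < left.length) result.push(left[i++]);
  while (j < right.length) result.push(right[j++]);
  return result;
}

// Split in half recursively (O(logn) levels), then merge on the way back up.
function mergeSort(arr) {
  if (arr.length <= 1) return arr.slice();
  const mid = Math.floor(arr.length / 2);
  return merge(mergeSort(arr.slice(0, mid)), mergeSort(arr.slice(mid)));
}

console.log(mergeSort([5, 2, 9, 1, 5, 6])); // [ 1, 2, 5, 5, 6, 9 ]
```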

Traversing a tree is generally O(n), where n is the number of nodes in the tree, and the time complexity of selection sort is O(n^2). We will analyze the complexity of each data structure and algorithm in the corresponding chapters. For more on analyzing and deriving time complexity, refer to the master theorem.

1.3 Common time complexity

1.3.1 O(1): constant complexity

let n = 100;

1.3.2 O(logn): logarithmic complexity

// Binary search (iterative)

var search = function (nums, target) {

let left = 0,

right = nums.length - 1;

while (left <= right) {

let mid = Math.floor((left + right) / 2);

if (nums[mid] === target) {

return mid;

} else if (target < nums[mid]) {

right = mid - 1;

} else {

left = mid + 1;

}

}

return -1;

};

1.3.3 O(n): linear time complexity

for (let i = 1; i <= n; i++) {

console.log(i);

}

1.3.4 O(n^2): square (nested loop)

for (let i = 1; i <= n; i++) {

for (let j = 1; j <= n; j++) {

console.log(i);

}

}

for (let i = 1; i <= n; i++) {

for (let j = 1; j <= 30; j++) {

// If the inner loop bound does not depend on n, the nested loops are not O(n^2); here they are O(n)

console.log(i);

}

}

1.3.5 O(2^n): exponential complexity

for (let i = 1; i <= Math.pow(2, n); i++) {

console.log(i);

}

1.3.6 O(n!): factorial

for (let i = 1; i <= factorial(n); i++) {

console.log(i);

}
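The loop above calls `factorial`, which the text does not define. A minimal sketch of such a helper, assuming a plain iterative definition for non-negative integers, could look like this:

```javascript
// Assumed helper: n! = 1 * 2 * ... * n, with 0! = 1.
function factorial(n) {
  let result = 1;
  for (let i = 2; i <= n; i++) {
    result *= i;
  }
  return result;
}

console.log(factorial(5));  // 120
console.log(factorial(10)); // 3628800
```

Even for n = 10 the loop would already run more than three million times, which shows how quickly factorial growth becomes impractical.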

2 Common sorting algorithms

2.1 Selection sort

Algorithm steps:

1. Find the smallest (or largest) element in the unsorted sequence and store it at the starting position of the sorted sequence.

2. Find the smallest (or largest) element among the remaining unsorted elements and put it at the end of the sorted sequence.

3. Repeat step two until all elements are sorted.

Selection sort is a simple and intuitive sorting algorithm. Whatever the input, its time complexity is O(n²), so it is best suited to small data sizes. Its only advantage may be that it uses no additional memory.

function selectSort(arr) {

for (let i=0;i<arr.length-1;i++) {

let min = i;

for (let j=min+1;j<arr.length;j++) {

if (arr[min] > arr[j]) {

min = j;

}

}

let temp = arr[i];

arr[i] = arr[min]

arr[min] = temp;

}

return arr;

}
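The claim that selection sort costs O(n²) regardless of input can be checked by counting comparisons. This is an illustrative sketch; `countSelectionComparisons` is a hypothetical helper that mirrors the loops above on a copy of the array.

```javascript
// Hypothetical helper: selection sort on a copy, counting element comparisons.
function countSelectionComparisons(input) {
  const arr = input.slice();
  let comparisons = 0;
  for (let i = 0; i < arr.length - 1; i++) {
    let min = i;
    for (let j = i + 1; j < arr.length; j++) {
      comparisons++;
      if (arr[min] > arr[j]) {
        min = j;
      }
    }
    const temp = arr[i];
    arr[i] = arr[min];
    arr[min] = temp;
  }
  return comparisons;
}

console.log(countSelectionComparisons([1, 2, 3, 4, 5])); // 10
console.log(countSelectionComparisons([5, 4, 3, 2, 1])); // 10
```

Both the sorted and the reversed input need n(n - 1)/2 comparisons, so the order of the data makes no difference.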

2.2 Bubble sort

Compare adjacent elements. If the first is greater than the second, swap them.

The first pass covers positions 0 to n, where n is the last index, and moves the largest value to position n, like a bubble rising to the top.

The second pass covers positions 0 to n-1 and settles the value at position n-1.

The third pass covers positions 0 to n-2 and settles the value at position n-2, and so on.

function bubbleSort(arr) {

for (let i = 0;i<arr.length-1;i++) {

// pass number

for (let j=0;j< arr.length-i-1;j++) {

// comparisons in this pass

if (arr[j] > arr[j+1]) {

// compare adjacent elements

let temp = arr[j]

arr[j] = arr[j+1]

arr[j+1] = temp

}

}

}

return arr;

}
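To see how each pass settles one more value at the end, the sketch below records the array after every pass. `bubbleSortPasses` is a hypothetical helper that uses the same loops as the function above but works on a copy and returns the snapshots.

```javascript
// Hypothetical helper: bubble sort that returns a snapshot after each pass.
function bubbleSortPasses(input) {
  const arr = input.slice();
  const snapshots = [];
  for (let i = 0; i < arr.length - 1; i++) {
    for (let j = 0; j < arr.length - i - 1; j++) {
      if (arr[j] > arr[j + 1]) {
        const temp = arr[j];
        arr[j] = arr[j + 1];
        arr[j + 1] = temp;
      }
    }
    snapshots.push(arr.slice());
  }
  return snapshots;
}

console.log(bubbleSortPasses([3, 1, 4, 2]));
// [ [ 1, 3, 2, 4 ], [ 1, 2, 3, 4 ], [ 1, 2, 3, 4 ] ]
```

After the first pass the largest value 4 is in its final position, and after the second pass 3 is as well.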

2.3 Insertion sort

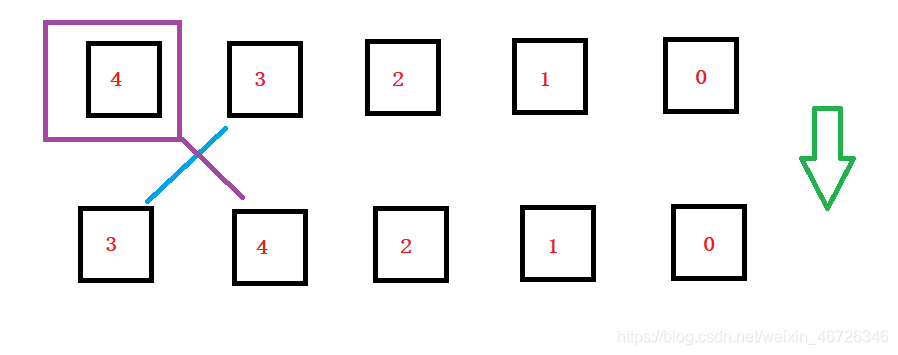

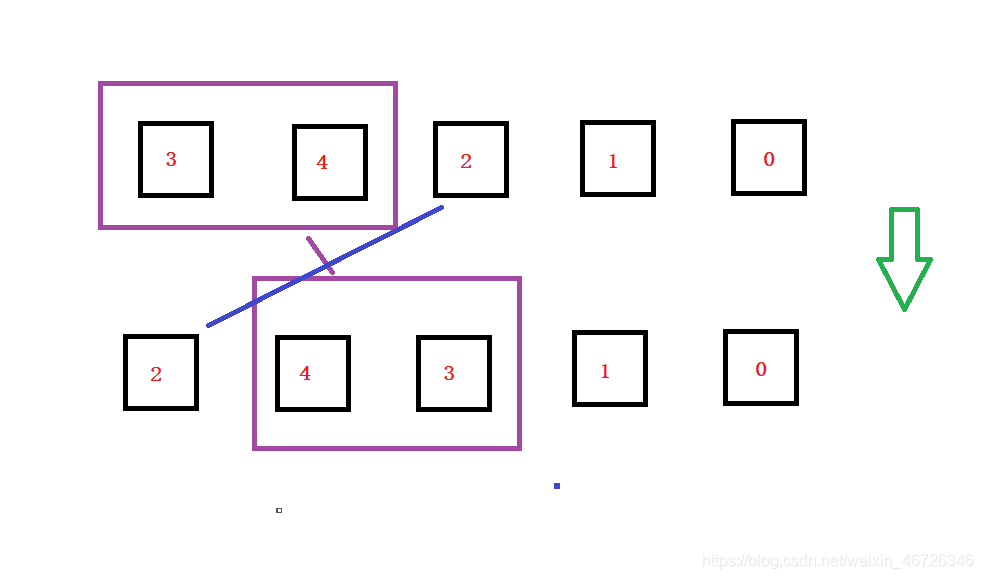

The first pass sorts the first two values.

The second pass sorts the first three values: the new value is compared with the already sorted values before it, starting from the last one, and is inserted at its correct position.

function insertSort(arr) {

for (let i = 1;i<arr.length;i++) {

// pass number

let end = i;

let curr = arr[i]

while(end > 0 && curr < arr[end-1]) {

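// stop at the first position, or once curr is no longer smaller than the element before it

arr[end] = arr[end - 1] // shift the element larger than curr one position to the right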

end--

}

arr[end] = curr // insert the current value

}

return arr;

}

2.4 XOR operation

Each bit of the result is 1 if and only if exactly one of the two corresponding bits of the operands is 1; otherwise that bit is 0. Simply put: 0 where the bits are the same, 1 where they differ.

Syntax: result = expression1 ^ expression2

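Bitwise XOR is applied to two expressions: both values are first converted to binary and then XORed bit by bit. A result bit is 1 wherever the two bits differ and 0 otherwise.

For example: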

let a = 5;

let b = 8;

let c = a ^ b;

console.log(c) // 13

Explanation:

a in binary: 0101

b in binary: 1000

Bitwise XOR of the two gives 1101 (0 where the bits are the same, 1 where they differ), so the value of c is 13.

Features

1. XOR is commutative

a ^ b == b ^ a

2. The XOR operation of two identical numbers must result in 0

a^a === 0

3. The XOR of 0 and any number is that number

0^a === a
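The three properties can be checked with a few concrete values. The sketch below uses arbitrary sample numbers; the last line also relies on associativity, which is not listed above but holds for XOR as well.

```javascript
const a = 5;
const b = 8;

console.log((a ^ b) === (b ^ a)); // true: commutative
console.log((a ^ a) === 0);       // true: identical numbers cancel to 0
console.log((0 ^ a) === a);       // true: 0 leaves the other number unchanged

// Combining the properties: the two copies of a cancel, leaving b.
console.log(a ^ b ^ a); // 8
```

This cancelling of equal pairs is why XOR is useful for finding a value that has no partner in a list.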

2.5 Exercises

1. Given a non-empty integer array in which every element appears twice except for one element that appears only once, find the element that appears only once.

let arr = [1, 3, 1, 2, 2, 7, 3, 6, 7]

// ES5-style solution (plain loop)

function fnc(arr){

let result = 0;

for(let i = 0; i < arr.length; i++){

result = result ^ arr[i];

}

return result;

}

// ES6 solution (reduce)

function fnc(arr){

return arr.reduce((a, b) => a ^ b, 0);

}
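As a quick check, the sketch below runs both approaches on the sample array. Because the two solutions above share the name `fnc`, they are given distinct hypothetical names here (`findSingleLoop` and `findSingleReduce`) so both can exist side by side.

```javascript
// Loop version: XOR every element into an accumulator that starts at 0.
function findSingleLoop(arr) {
  let result = 0;
  for (let i = 0; i < arr.length; i++) {
    result = result ^ arr[i];
  }
  return result;
}

// reduce version: the same accumulation expressed with Array.prototype.reduce.
function findSingleReduce(arr) {
  return arr.reduce((acc, value) => acc ^ value, 0);
}

const sample = [1, 3, 1, 2, 2, 7, 3, 6, 7];
console.log(findSingleLoop(sample));   // 6
console.log(findSingleReduce(sample)); // 6
```

Every paired value cancels to 0, so only the unpaired 6 remains. Both versions take O(n) time and O(1) extra space.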