Article directory

Preface

In general, expanding the vocabulary can speed up decoding. For models that are not friendly to Chinese support (such as llama), expanding the vocabulary can also improve the performance of the model in Chinese.

environment

jsonlines==3.1.0

sentencepiece==0.1.99

transformers==4.28.1

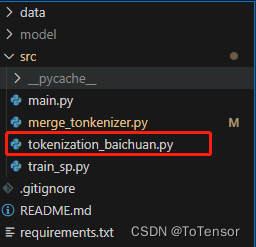

project structure

Among them, tokenization_baichuan.pythey were copied directly from the Baichuan model folder.

1. Steps to use

pip install -r requirements.txt

cd src

python main.py

2. Training vocabulary

Not much code for training

def train_sp(train_file, domain_sp_model_name):

max_sentence_length = 200000

pad_id = 3

model_type = "BPE"

vocab_size = 8000

spm.SentencePieceTrainer.train(

input=train_file,

model_prefix=domain_sp_model_name,

shuffle_input_sentence=False,

train_extremely_large_corpus=True,

max_sentence_length=max_sentence_length,

pad_id=pad_id,

model_type=model_type,

vocab_size=vocab_size,

split_digits=True,

split_by_unicode_script=True,

byte_fallback=True,

allow_whitespace_only_pieces=True,

remove_extra_whitespaces=False,

normalization_rule_name="nfkc",

num_threads=16

)

Parameter Description:

--input(以逗号分隔的输入句子列表) type: std::string default: ""

--input_format(输入格式。支持的格式为 `text` 或 `tsv`.) type: std::string default: ""

--model_prefix(输出模型前缀) type: std::string default: ""

--model_type(模型算法:unigram、bpe、word 或 char) type: std::string 默认值:"unigram"

--vocab_size(词汇量大小) 类型:int32 默认值:8000

--accept_language(以逗号分隔的该模型可接受的语言列表 type: std::string 默认值: ""

--self_test_sample_size(自我测试样本的大小) type: int32 default: 0

--character_coverage(用于确定最小符号的字符覆盖率) 类型: double 默认值: 0.9995

--input_sentence_size(训练器加载句子的最大大小) 类型: std::uint64_t 默认值: 0

--shuffle_input_sentence(提前随机抽样输入句子。当--input_sentence_size > 0时有效) type: bool 默认值: true

--seed_sentencepiece_size(种子句子片段的大小) 类型: int32 默认值:1000000

--shrinking_factor(相对于损失,保留收缩因子最高的句子类型:double 默认值:0.75

--num_threads(训练的线程数) 类型: int32 默认值: 16

--num_sub_iterations(EM 子迭代次数) 类型: int32 默认值: 2

--max_sentencepiece_length(句子片段的最大长度) 类型: int32 默认值: 16

--max_sentence_length(以字节为单位的句子最大长度) 类型: int32 默认值: 4192

--split_by_unicode_script(使用 Unicode 脚本分割句子类型:bool 默认值:true

--split_by_number(按数字(0-9)分割词块) 类型:bool 默认值:true

--split_by_whitespace(使用空格分割句子类型:bool 默认值:true

--split_digits(将所有数字(0-9)分割成独立片段) 类型:bool 默认值:false

--treat_whitespace_as_suffix(将空白标记视为后缀而非前缀) type: bool 默认值: false

--allow_whitespace_only_piece(允许只包含(连续)空白标记的片段 type: bool 默认值: false

--control_symbols(以逗号分隔的控制符号列表 type: std::string 默认值: ""

--control_symbols_file(从文件加载控制符号) type: std::string default: ""

--user_defined_symbols(以逗号分隔的用户自定义符号列表) type: std::string default: ""

--user_defined_symbols_file(从文件加载用户定义的符号) type: std::string default: ""

--required_chars(无论 --character_coverage 为何,此标志中的 UTF8 字符始终用于字符集) type: std::string 默认值: ""

--required_chars_file(从文件加载 required_chars) type: std::string default: ""

--byte_fallback(将未知片段分解为 UTF-8 字节片段) type: bool default: false

--vocabulary_output_piece_score(在词汇文件中定义分数) type: bool default: true

--normalization_rule_name(规范化规则名称,可从 nfkc 或 identity 中选择 type: std::string default: "nmt_nfkc"

--normalization_rule_tsv(规范化规则 TSV 文件) type: std::string default: ""

--denormalization_rule_tsv(去规范化规则 TSV 文件) type: std::string default: ""

--add_dummy_prefix(在文本开头添加虚假空白) type: bool default: true

--remove_extra_whitespaces(删除前导、尾部和重复的内部空白) type: bool 默认值: true

--hard_vocab_limit(如果设置为 false,--vocab_size 将被视为软限制) type: bool 默认值: true

--use_all_vocab(如果设置为 true,则使用所有标记作为词汇表。 对单词/字符模型有效。

--unk_id(覆盖 UNK (<unk>) id。)类型:int32 默认值:0

--bos_id(覆盖 BOS (<s>) id。设置 -1 可禁用 BOS。) 类型:int32 默认值:1

--eos_id (覆盖 EOS (</s>) id。设置 -1 可禁用 EOS。) 类型:int32 默认值:2

--pad_id (覆盖 PAD (<pad>) id。设置 -1 可禁用 PAD。) 类型:int32 默认值:-1

--unk_piece(覆盖 UNK (<unk>) piece。)类型:std::string 默认值:"<unk>"

--bos_piece (覆盖 BOS (<s>) piece.) 类型: std::string 默认值: "<s>"

--eos_piece(覆盖 EOS (</s>) piece。) 类型:std::string 默认值:"</s>"

--pad_piece (覆盖 PAD (<pad>) 部件。) 类型:std::string 默认值:"<pad>"

--unk_surface (<unk>的虚拟表面字符串。在解码时,<unk> 被解码为 `unk_surface`.) type: std::string default: " ⁇ "

--train_extremely_large_corpus(增加单字节标记化的位深度。) type: bool default: false

--random_seed (随机生成器的种子值) 类型: uint32 默认值: 4294967295

--enable_differential_privacy(是否在训练时添加 DP。目前仅 UNIGRAM 模型支持。) 类型:bool 默认值:false

--differential_privacy_noise_level(为 DP 增加的噪声量) type: float default: 0

--differential_privacy_clipping_threshold(为 DP 削除计数的阈值) type: std::uint64_t default: 0

Third, the combined vocabulary

def merge_vocab(src_tokenizer_dir, domain_sp_model_file, output_sp_dir, output_hf_dir, domain_vocab_file=None):

# load

src_tokenizer = AutoTokenizer.from_pretrained(src_tokenizer_dir, trust_remote_code=True)

domain_sp_model = spm.SentencePieceProcessor()

domain_sp_model.Load(domain_sp_model_file)

src_tokenizer_spm = sp_pb2_model.ModelProto()

src_tokenizer_spm.ParseFromString(src_tokenizer.sp_model.serialized_model_proto())

domain_spm = sp_pb2_model.ModelProto()

domain_spm.ParseFromString(domain_sp_model.serialized_model_proto())

print("原始词表大小: ", len(src_tokenizer))

print("用自己的数据训练出来的词表大小", len(domain_sp_model))

# 原始词表集合

src_tokenizer_spm_tokens_set = set(p.piece for p in src_tokenizer_spm.pieces)

print("原始词表 example: ", list(src_tokenizer_spm_tokens_set)[:10])

# 拓展词表

added_set = set()

for p in domain_spm.pieces:

piece = p.piece

if piece not in src_tokenizer_spm_tokens_set:

# print('新增词:', piece)

new_p = sp_pb2_model.ModelProto().SentencePiece()

new_p.piece = piece

new_p.score = 0

src_tokenizer_spm.pieces.append(new_p)

added_set.add(piece)

print(f"加了sp训练出来的词表后,新词表大小: {

len(src_tokenizer_spm.pieces)}")

# 加载自定义的词表

if domain_vocab_file:

word_freqs = load_user_vocab(domain_vocab_file)

domain_vocab_set = set([i[0] for i in word_freqs if i])

print('自定义的词表 example:', list(domain_vocab_set)[:10])

print('自定义的词表大小:', len(domain_vocab_set))

for p in domain_vocab_set:

piece = p

if piece not in src_tokenizer_spm_tokens_set and piece not in added_set:

# print('新增词:', piece)

new_p = sp_pb2_model.ModelProto().SentencePiece()

new_p.piece = piece

new_p.score = 0

src_tokenizer_spm.pieces.append(new_p)

print(f"加了自定义的词表后,新词表大小: {

len(src_tokenizer_spm.pieces)}")

os.makedirs(output_sp_dir, exist_ok=True)

with open(output_sp_dir + '/new_tokenizer.model', 'wb') as f:

f.write(src_tokenizer_spm.SerializeToString())

tokenizer = BaichuanTokenizer(vocab_file=output_sp_dir + '/new_tokenizer.model')

tokenizer.save_pretrained(output_hf_dir)

print(f"new tokenizer has been saved to {

output_hf_dir}")

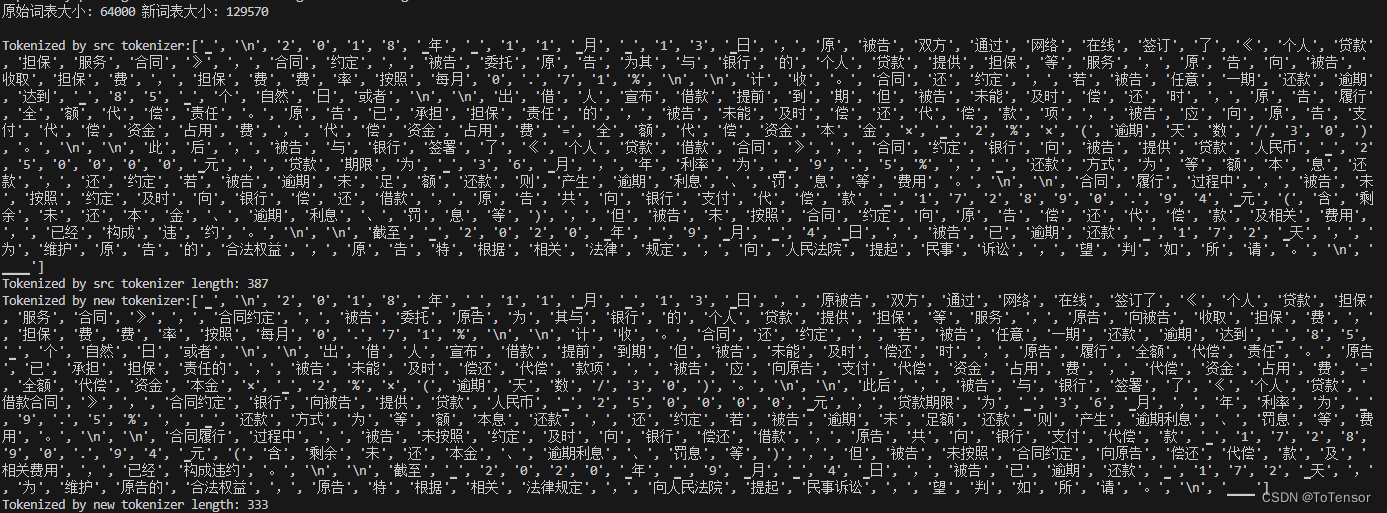

4. Effect

The original vocabulary size is expanded from 64000 to 129570, which is doubled.

The same goes for expanding the vocabulary of other models. Just tokenization_baichuan.pychange it to another model and modify the corresponding code.

All codes have been uploaded to Github : https://github.com/seanzhang-zhichen/expand-baichuan-tokenizer