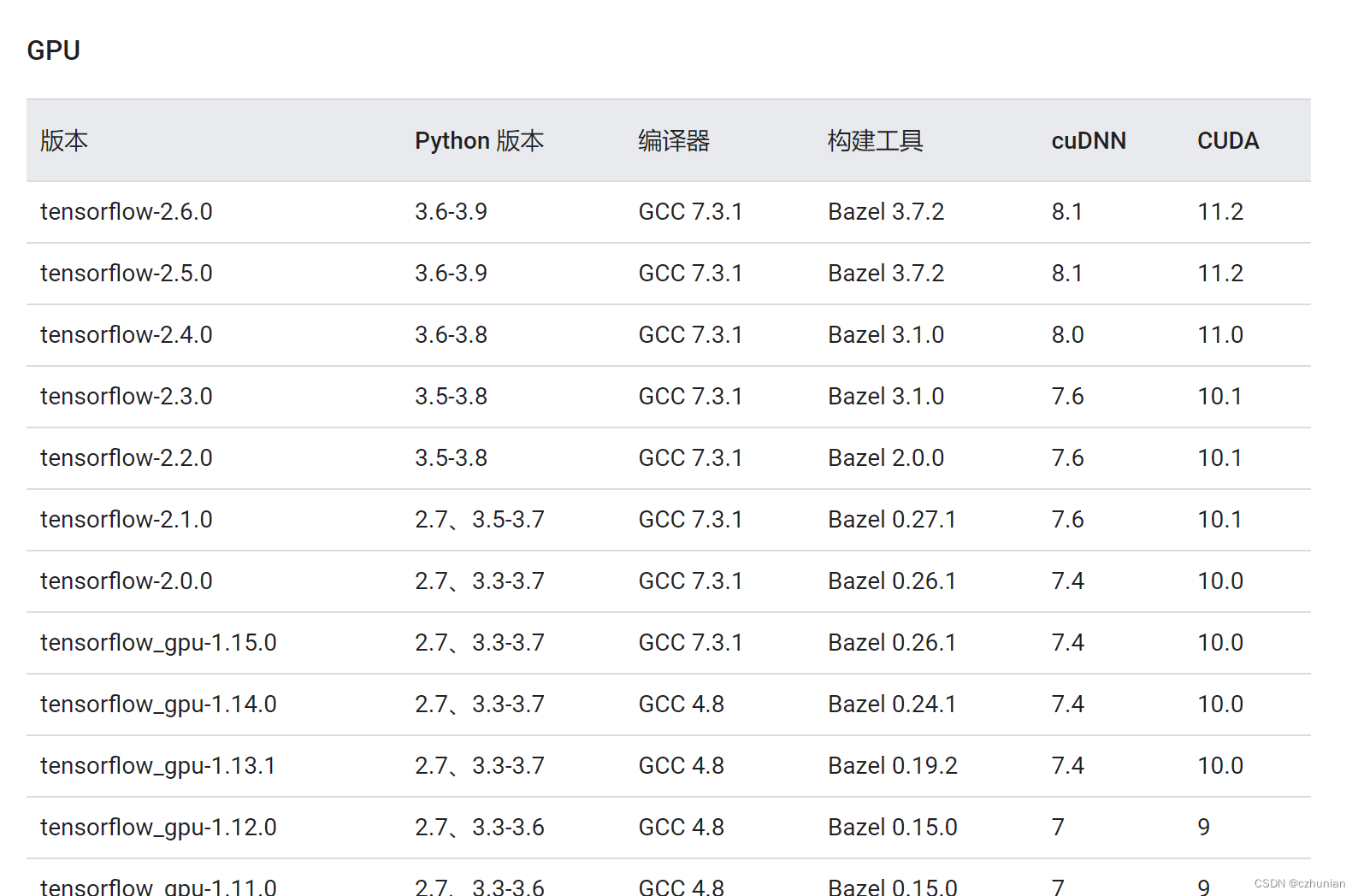

1. CUDA installation. The CUDA version must correspond to the TensorFlow version; see the tested build configurations: https://www.tensorflow.org/install/source#tested_source_configurations

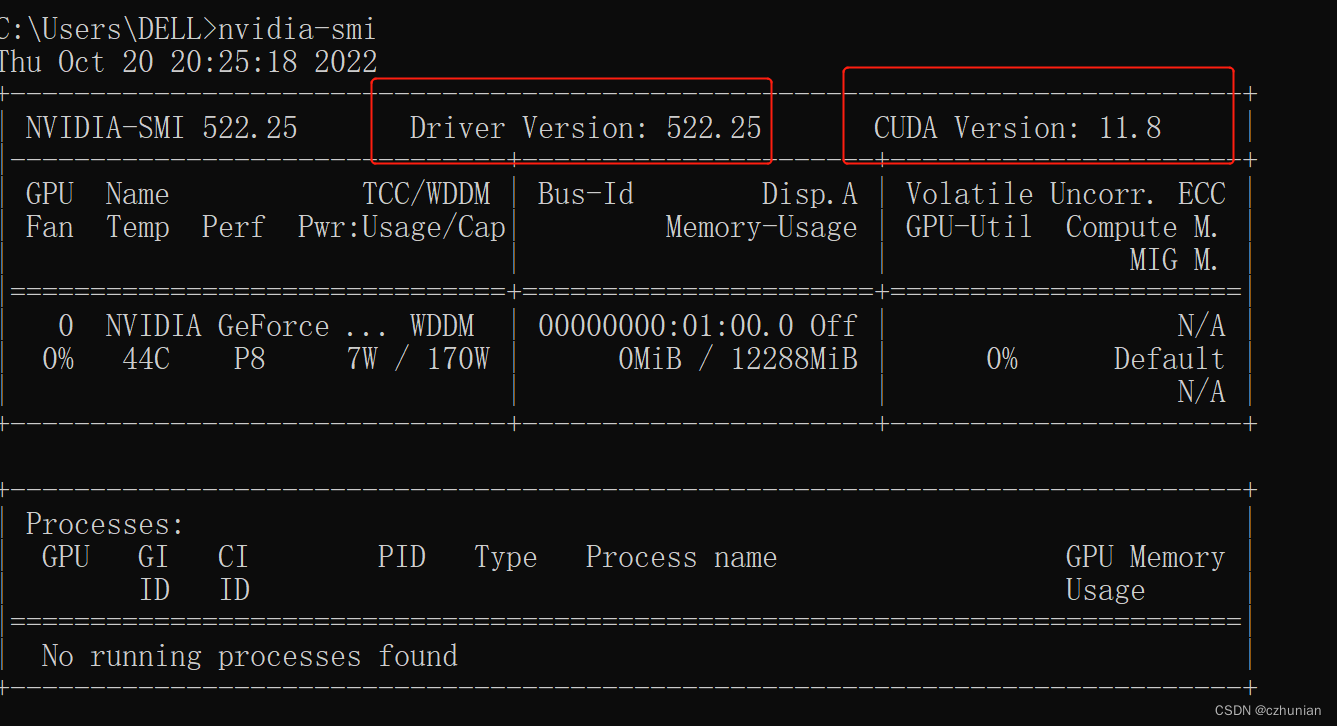

1. Check the driver version currently installed with `nvidia-smi`.

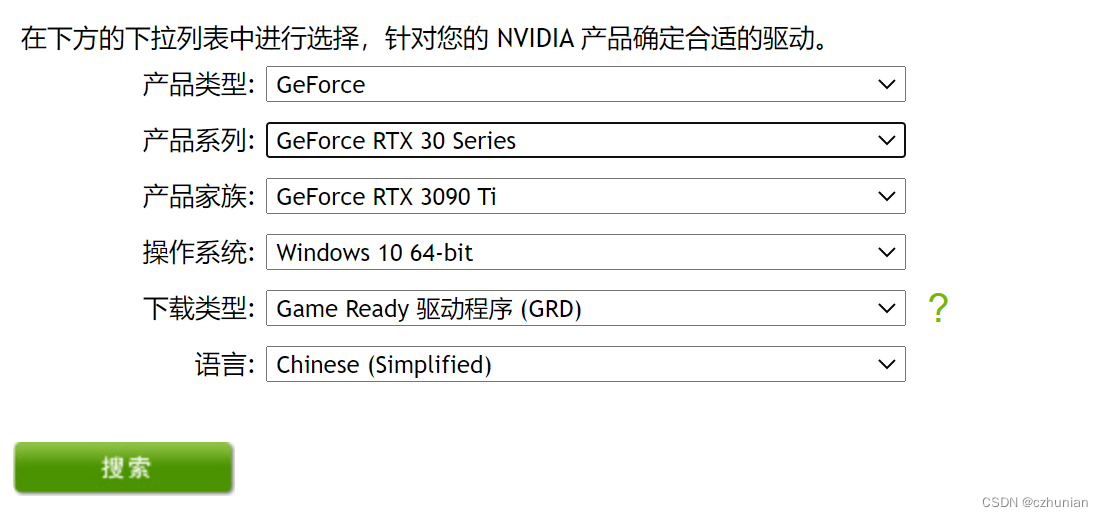
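To read the driver version from a script rather than from the `nvidia-smi` table, a minimal sketch is below. It assumes `nvidia-smi` is on `PATH` and uses its `--query-gpu=driver_version --format=csv,noheader` query; the helper names `parse_driver_versions` and `query_driver_versions` are hypothetical.

```python
import shutil
import subprocess
from typing import List


def parse_driver_versions(csv_output: str) -> List[str]:
    """Parse `nvidia-smi --query-gpu=driver_version --format=csv,noheader` output.

    The query prints one line per GPU, for example "466.11".
    """
    return [line.strip() for line in csv_output.splitlines() if line.strip()]


def query_driver_versions() -> List[str]:
    """Run nvidia-smi and return the driver version reported for each GPU."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found; is the NVIDIA driver installed?")
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_driver_versions(result.stdout)
```

On a machine with the driver installed, `query_driver_versions()` returns one version string per GPU; the parsing is kept separate so it can be checked without a GPU.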

2. Install the required CUDA version; download it from the CUDA Toolkit Archive | NVIDIA Developer.

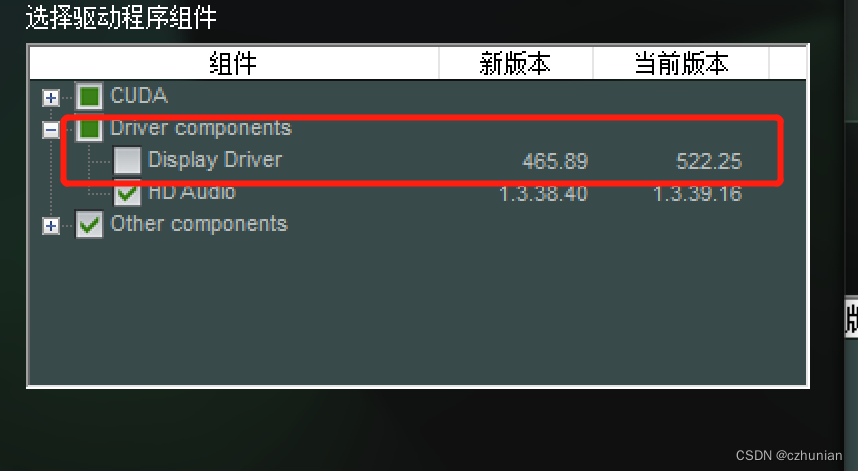

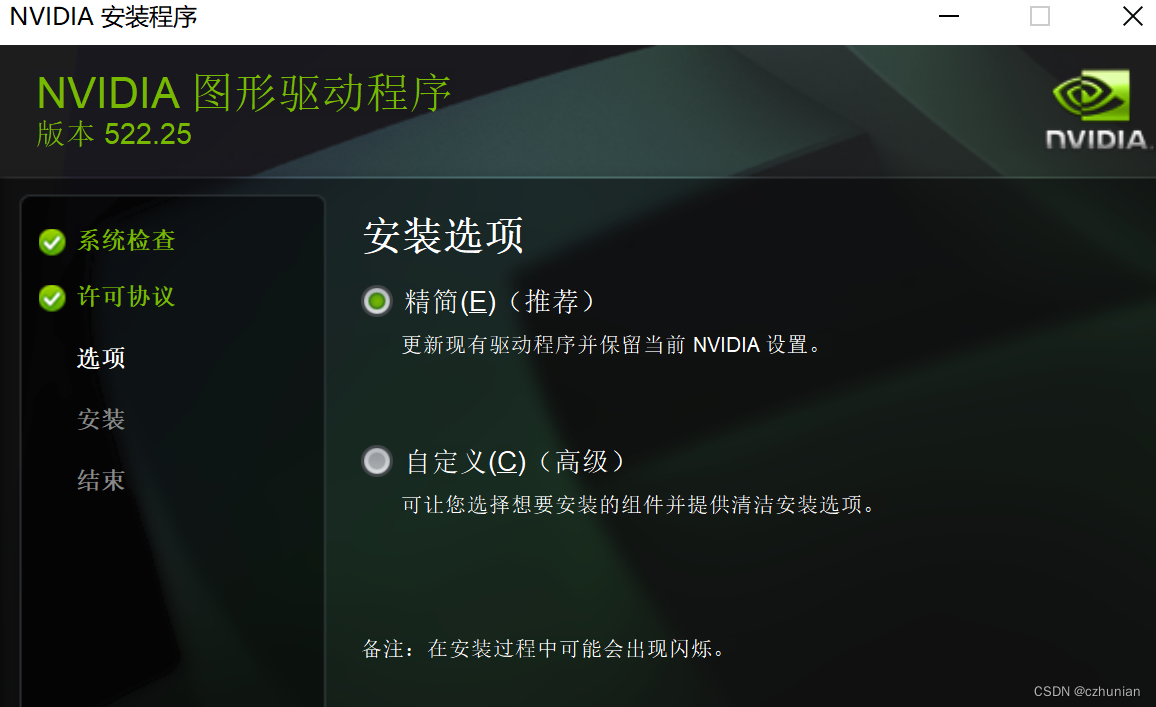

Find the required version, download the installer for your environment, and choose a custom installation. Check the version of the driver bundled with the installer: if it is not newer than the driver you already have, do not install it.
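The "only install the bundled driver if it is newer" rule needs a numeric comparison, because comparing version strings character by character can give the wrong order (for example "9.10" sorts before "9.9" as text). A small sketch, with hypothetical helper names and example version numbers:

```python
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as "466.11" into (466, 11) for numeric comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def should_install_bundled_driver(current: str, bundled: str) -> bool:
    """Install the driver shipped with the CUDA installer only if it is newer than the current one."""
    return version_tuple(bundled) > version_tuple(current)
```

For instance, `should_install_bundled_driver("466.11", "465.89")` is `False`, so the driver component would be deselected in the custom installation.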

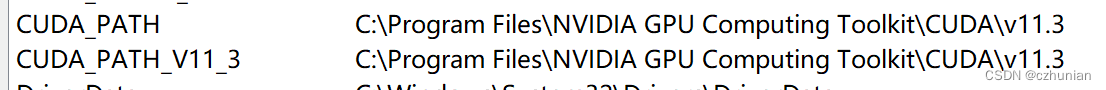

After a successful installation, configure the environment variables and note down the installation path.
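A quick way to confirm the environment variables is sketched below. It assumes the Windows installer convention that `CUDA_PATH` points at the toolkit root (for example the `v11.3` folder) and that its `bin` subfolder must appear in `PATH`; `check_cuda_env` is a hypothetical helper, and the environment is passed in as a mapping so it can be tested without a real installation.

```python
import os
from typing import List, Mapping


def check_cuda_env(env: Mapping[str, str], sep: str = os.pathsep) -> List[str]:
    """Return a list of problems found in the CUDA-related environment variables.

    Assumption: CUDA_PATH is the toolkit root and <CUDA_PATH>/bin must be on PATH.
    """
    cuda_path = env.get("CUDA_PATH")
    if not cuda_path:
        return ["CUDA_PATH is not set"]
    problems = []
    bin_dir = os.path.normcase(os.path.join(cuda_path, "bin"))
    # Normalise case/separators and drop trailing slashes before comparing entries.
    path_entries = [
        os.path.normcase(entry.rstrip("\\/"))
        for entry in env.get("PATH", "").split(sep)
        if entry
    ]
    if bin_dir not in path_entries:
        problems.append(f"{bin_dir} is not on PATH")
    return problems
```

Calling `check_cuda_env(os.environ)` after installation should return an empty list if both variables are set as assumed above.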

(If the installation fails, uninstall the driver and install again; this usually solves the problem. You can use an uninstaller tool for this, extraction code: 1233.)

(If the CUDA Toolkit installs successfully but the graphics card cannot be found, install a driver from the NVIDIA official website.)

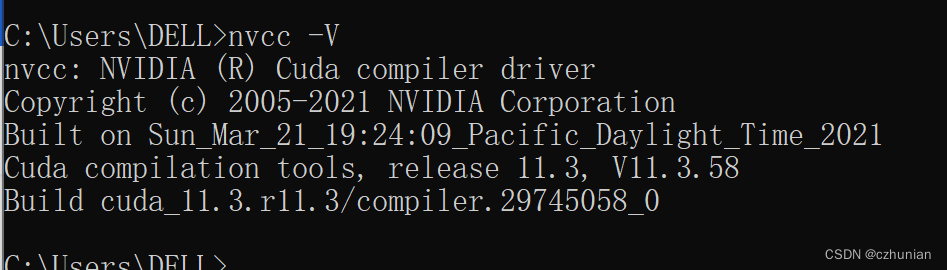

Check the installed CUDA version with `nvcc -V`.

This version can differ from the CUDA version shown by `nvidia-smi`; see the explanation in point 4 below.
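If you want the toolkit (runtime) version programmatically, a sketch that extracts it from `nvcc -V` is below. It assumes `nvcc` is reachable through `PATH` and that its output contains a line like "Cuda compilation tools, release 11.3, V11.3.109"; the function names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(nvcc_output: str) -> Optional[str]:
    """Extract the toolkit version (e.g. "11.3") from `nvcc -V` output, or None if absent."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_toolkit_version() -> Optional[str]:
    """Run `nvcc -V` and return the toolkit version, or None if nvcc is not on PATH."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "-V"], capture_output=True, text=True, check=True)
    return parse_nvcc_release(result.stdout)
```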

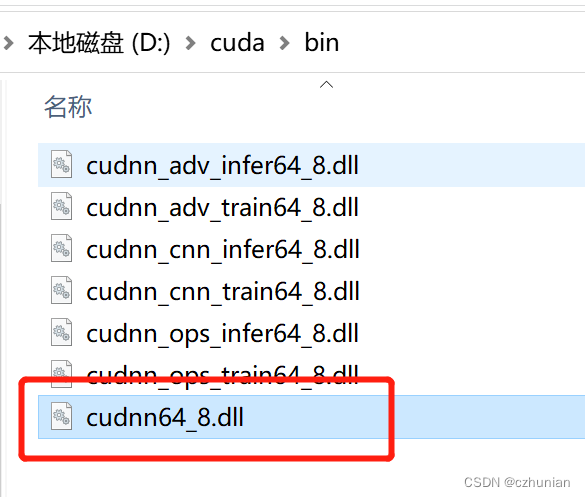

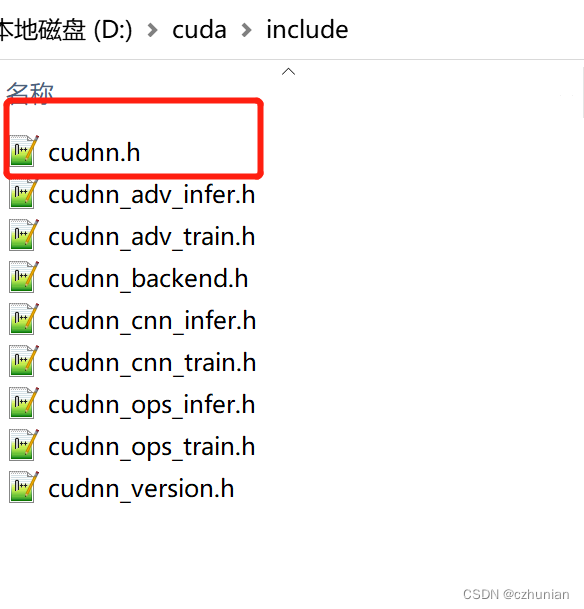

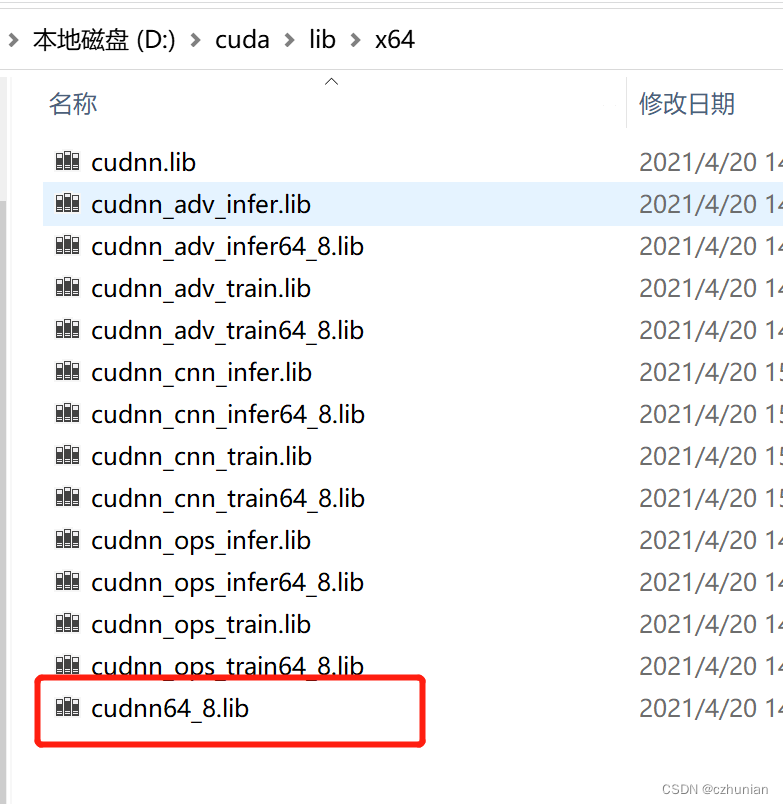

2. Installing the cuDNN neural network acceleration library

cuDNN is not an installer program but a set of files; after downloading, you simply copy them into the CUDA directory.

Download cuDNN from the cuDNN download page, choosing the version that corresponds to your CUDA and TensorFlow versions (see above).

cuDNN is essentially an add-on library for CUDA that provides optimized routines for deep learning operations.

Copy the files in the include, lib, and bin folders into the corresponding folders under the CUDA v11.3 directory.

Alternatively, copy these three folders directly into the v11.3 directory, merging them without overwriting existing files.
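The "merge without overwriting" copy can be scripted as sketched below. It assumes the extracted cuDNN archive has `bin`, `include`, and `lib` folders (possibly with subfolders such as `lib/x64`); `merge_cudnn_into_cuda` is a hypothetical helper, and writing into the CUDA folder under Program Files typically requires an administrator prompt.

```python
import shutil
from pathlib import Path
from typing import List


def merge_cudnn_into_cuda(cudnn_root: Path, cuda_root: Path) -> List[Path]:
    """Copy cudnn_root/{bin,include,lib} into cuda_root/{bin,include,lib}.

    Files that already exist in the CUDA directory are left untouched;
    the list of newly copied destination paths is returned.
    """
    copied = []
    for folder in ("bin", "include", "lib"):
        src_dir = cudnn_root / folder
        if not src_dir.is_dir():
            continue
        for src in src_dir.rglob("*"):  # recurse, e.g. into lib/x64
            if not src.is_file():
                continue
            dst = cuda_root / folder / src.relative_to(src_dir)
            if dst.exists():
                continue  # do not overwrite files already in the CUDA directory
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)
            copied.append(dst)
    return copied
```

Example call with hypothetical paths: `merge_cudnn_into_cuda(Path(r"C:\Downloads\cudnn\cuda"), Path(r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3"))`.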

3. Installing the TensorFlow 2.5 GPU version

1. Install the specified version, TensorFlow 2.5. Here the wheel file is downloaded directly and installed into a virtual environment created with conda (tutorial: "conda install Pytorch (GPU)", czhunian's blog on CSDN).

2. Download the wheel from https://github.com/fo40225/tensorflow-windows-wheel

Select the corresponding version and download all the files under this version.

3. Activate the virtual environment with `conda activate tf2`, change to the folder containing the wheel file, and install it with `pip install`.

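4. Test the installation; an output of True means the installation succeeded.

```python
import tensorflow as tf
print(tf.test.is_gpu_available())  # True: TensorFlow found a usable GPU (deprecated in TF 2.x)
print(tf.config.list_physical_devices('GPU'))  # recommended replacement: lists the visible GPUs
```

4. Relationship between CUDA and NVIDIA driver versions, link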

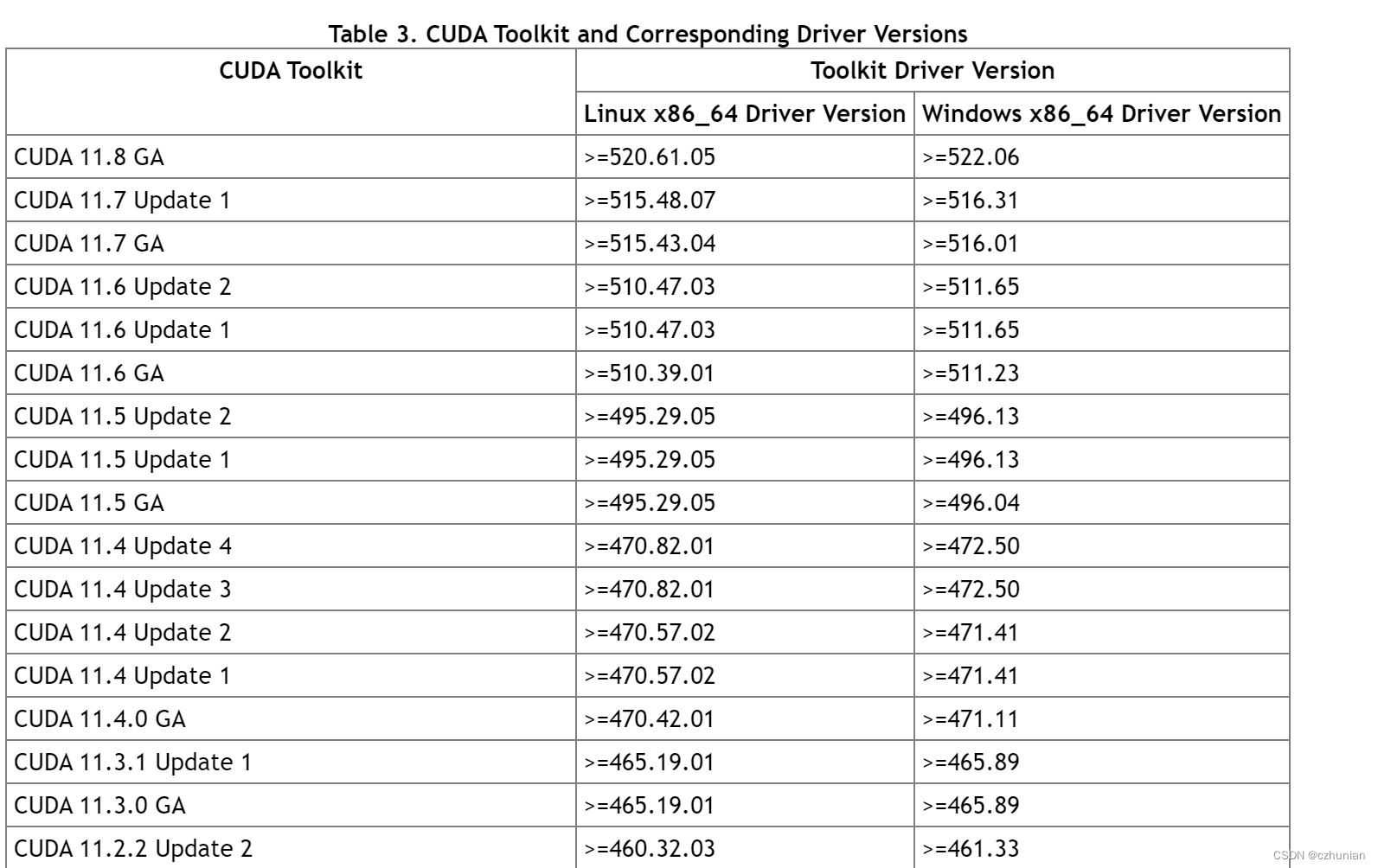

The key part is Table 3, which lists the relationship between CUDA versions and driver versions.

CUDA driver version: determined by the installed NVIDIA GPU driver; view it with `nvidia-smi`.

CUDA runtime version: determined by the CUDA Toolkit you installed yourself; view it with `nvcc -V`.

- The files needed to support the driver API (e.g. `libcuda.so`) are installed by the GPU driver installer. `nvidia-smi` belongs to this category.

- The files needed to support the runtime API (e.g. `libcudart.so` and `nvcc`) are installed by the CUDA Toolkit installer. (The CUDA Toolkit installer may also bundle a GPU driver installer.) `nvcc` is the CUDA compiler driver installed with the CUDA Toolkit, and it only knows the CUDA runtime version it was built with. It does not know which GPU driver version is installed, or even whether a GPU driver is installed at all.

Therefore, if the CUDA versions reported for the driver API and the runtime API differ, it is likely because you installed the GPU driver with a standalone driver installer rather than the one bundled with the CUDA Toolkit installer.

In short, different driver and runtime versions do not conflict, as long as the runtime version is not higher than the CUDA version the driver supports. The CUDA Toolkit (runtime) is essentially just a toolkit: several CUDA Toolkit versions can even be installed side by side, and you choose which one to use by modifying the environment variables.
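The rule "the runtime version should not be greater than the driver version" can be expressed as a small check. The sketch below assumes the driver side is taken from the "CUDA Version: X.Y" field in the `nvidia-smi` header (the highest CUDA version the driver supports) and the runtime side is the `nvcc -V` release number; both helper names are hypothetical.

```python
import re
from typing import Optional, Tuple


def driver_cuda_version(nvidia_smi_output: str) -> Optional[str]:
    """Extract the "CUDA Version: X.Y" value from the nvidia-smi header, or None."""
    match = re.search(r"CUDA Version:\s*(\d+\.\d+)", nvidia_smi_output)
    return match.group(1) if match else None


def _as_tuple(version: str) -> Tuple[int, ...]:
    # Compare numerically so that "11.10" > "11.9".
    return tuple(int(part) for part in version.split("."))


def runtime_supported(runtime_version: str, driver_version: str) -> bool:
    """True when the toolkit (runtime) version does not exceed what the driver supports."""
    return _as_tuple(runtime_version) <= _as_tuple(driver_version)
```

A `False` result corresponds to the situation where the driver must be upgraded before the installed toolkit can be used.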

5. Summary

The graphics card driver must support the CUDA Toolkit version (driver >= CUDA Toolkit), and the CUDA Toolkit version must match the one the TensorFlow version requires; CUDA is the bridge between the two.

1. Determine the tensorflow version to be installed.

2. Based on the TensorFlow version, determine the CUDA Toolkit version and the cuDNN version.

3. Based on the CUDA version, determine the required graphics card driver version: upgrade the driver if it is too old, otherwise leave it as it is.
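The three steps can be sketched as a small planning function. Assumptions: only two rows of the TensorFlow tested build configurations table are copied in (extend it from that page for other versions), and the driver is judged by the "CUDA Version" shown in the `nvidia-smi` header. `TESTED_CONFIGS` and `plan_install` are hypothetical names, not part of any library.

```python
from typing import Dict, Tuple

# TensorFlow version -> (CUDA version, cuDNN version) from the tested build configurations.
# Only two rows are included here; add others from the official table as needed.
TESTED_CONFIGS: Dict[str, Tuple[str, str]] = {
    "2.4.0": ("11.0", "8.0"),
    "2.5.0": ("11.2", "8.1"),
}


def _as_tuple(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def plan_install(tf_version: str, driver_cuda_version: str) -> Dict[str, str]:
    """Step 1 fixes tf_version; step 2 looks up CUDA/cuDNN; step 3 checks the driver.

    driver_cuda_version is the highest CUDA version the installed driver supports.
    Raises KeyError for TensorFlow versions missing from TESTED_CONFIGS.
    """
    cuda, cudnn = TESTED_CONFIGS[tf_version]
    driver_ok = _as_tuple(driver_cuda_version) >= _as_tuple(cuda)
    return {
        "tensorflow": tf_version,
        "cuda": cuda,
        "cudnn": cudnn,
        "driver": "keep current driver" if driver_ok else "upgrade driver",
    }
```

For example, `plan_install("2.5.0", "11.0")` reports "upgrade driver", because a driver that supports only CUDA 11.0 is older than the CUDA 11.2 build TensorFlow 2.5 was tested with.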