This article explains how to use HuggingFace's Transformers to quantize Meta AI's LLaMA2 large model so that it can run with only about 5 GB of video memory.

Preface

In the previous two articles, "Using Docker to Get Started with the Official LLaMA2 Open Source Model" and "Using Docker to Get Started with the Chinese Version of LLaMA2 Open Source Model", we covered how to quickly get started with the freshly released Meta AI LLaMA2 model.

In actual testing, both the original model (English) and the Chinese version (bilingual) need 13 to 14 GB of video memory to run.

To let more people play with the LLaMA2 model, I tried quantizing it with HuggingFace's Transformers. The quantized model needs only about 5 GB of video memory to run.

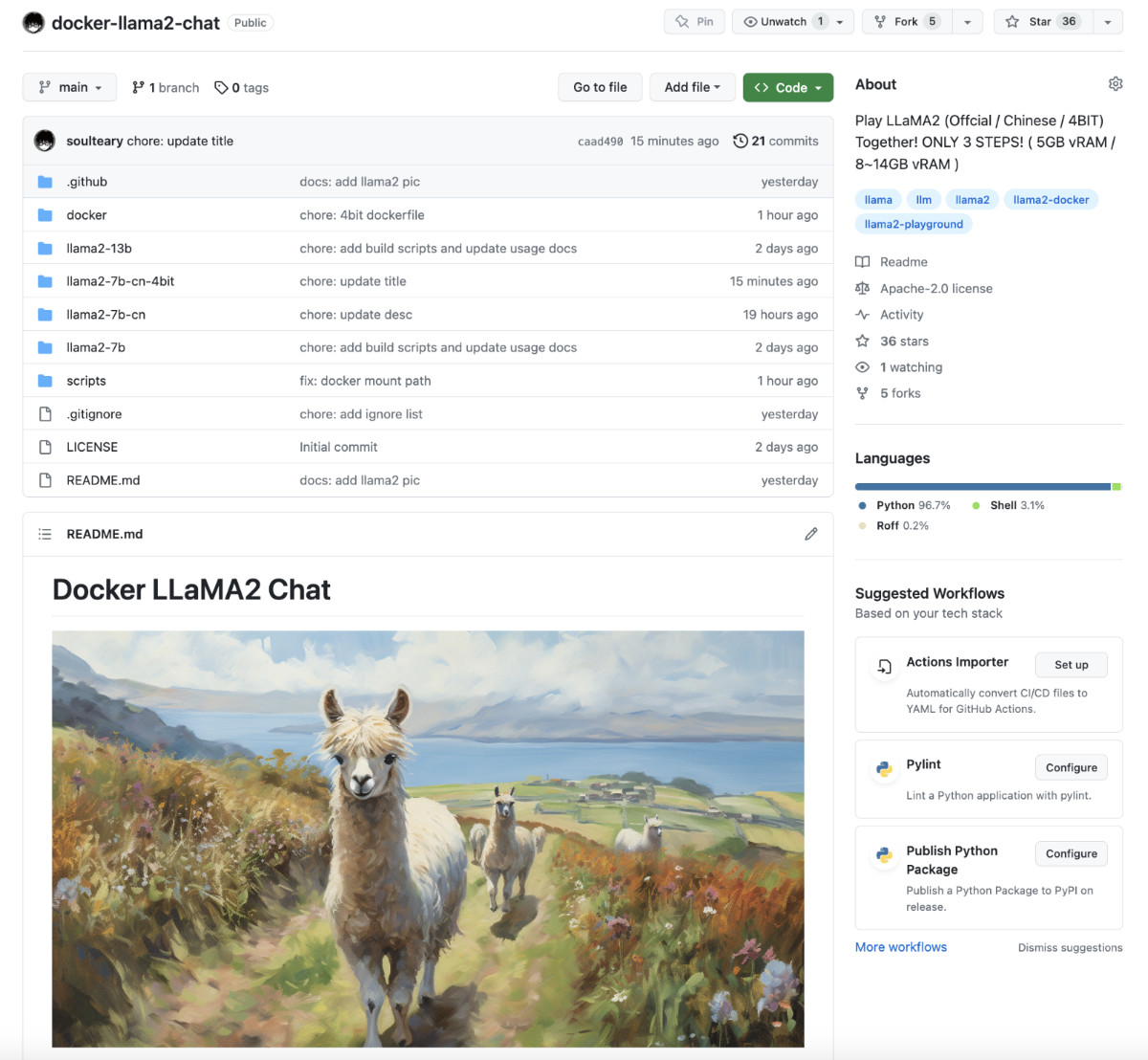

I have uploaded the complete code and model to GitHub and HuggingFace; interested readers can get them there.

Preparation

All the methods in this article can also be used outside Docker containers.

To save trouble, you can follow the first two articles to quickly get the operating environment and Docker image for the original or Chinese version of the LLaMA2 model. If your local environment is already complete, ignore the Docker-related commands and run the specific program commands directly in Bash.

Next, we will use the LLaMA2 Chinese model image as an example to quantize the model.

In the previous article, we used the following command to quickly start a LLaMA2 Chinese model application:

docker run --gpus all --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 --rm -it -v `pwd`/LinkSoul:/app/LinkSoul -p 7860:7860 soulteary/llama2:7b-cn bash

Because the quantized model needs to be saved, we first make a simple adjustment to the above command and add a parameter:

docker run --gpus all --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 --rm -it -v `pwd`/LinkSoul:/app/LinkSoul -v `pwd`/soulteary:/app/soulteary -p 7860:7860 soulteary/llama2:7b-cn bash

Here we add an extra parameter, -v `pwd`/soulteary:/app/soulteary, which maps the soulteary directory under the directory where the command is executed to /app/soulteary in the container, where the quantized model files will be saved.

After executing the command, we'll be in an interactive command line environment inside a fully-fledged Docker container.

Quantization of LLaMA2 using Transformers

Here, we only use Transformers produced by HuggingFace to complete all the required work without introducing other open source projects.

The core configuration of the Transformers quantization model

The quantization feature of Transformers is implemented by calling bitsandbytes. To invoke this library correctly for quantization, you need to set the quantization_config parameter of the AutoModelForCausalLM.from_pretrained method.

In the Transformers source code at utils/quantization_config.py#L37, we can see how the feature works and how its parameters are defined. The simplest 4-bit quantization configuration is as follows:

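model = AutoModelForCausalLM.from_pretrained(
    # Name of the model to load
    model_id,
    # Use local model files only; do not download the model over the network
    local_files_only=True,
    # Model precision, kept the same as `model.py` in the previous articles
    torch_dtype=torch.float16,
    # Quantization configuration
    quantization_config=BitsAndBytesConfig(
        # Quantization data type
        bnb_4bit_quant_type="nf4",
        # Compute data type used with the quantized data
        bnb_4bit_compute_dtype=torch.bfloat16
    ),
    # Automatically assign device resources
    device_map='auto'
)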

The reason bnb_4bit_quant_type is set to nf4 here is that, in HuggingFace's QLoRA large-model quantization practice, the new nf4 (NormalFloat) data type saves as much memory as possible without sacrificing performance.
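To make the idea of 4-bit quantization more concrete, here is a minimal sketch of block-wise absmax quantization to 16 levels. It is only an illustration under simplifying assumptions: it uses an evenly spaced grid rather than the actual nf4 codebook, and the function names quantize_block and dequantize_block, as well as the sample weights, are hypothetical and not part of Transformers or bitsandbytes.

```python
def quantize_block(values, levels=16):
    """Map floats to integer codes 0..levels-1 using absmax scaling."""
    # The largest magnitude in the block becomes the scale (1.0 if all zeros)
    scale = max(abs(v) for v in values) or 1.0
    # Spacing of an evenly spaced grid on [-1, 1] (assumption: not the nf4 grid)
    step = 2.0 / (levels - 1)
    codes = [round((v / scale + 1.0) / step) for v in values]
    return codes, scale


def dequantize_block(codes, scale, levels=16):
    """Turn integer codes back into approximate floats."""
    step = 2.0 / (levels - 1)
    return [(c * step - 1.0) * scale for c in codes]


# Hypothetical sample weights, for illustration only
weights = [0.12, -0.53, 0.98, -0.07, 0.33, -1.20, 0.61, 0.0]

codes, scale = quantize_block(weights)
restored = dequantize_block(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)          # each code fits in 4 bits
print("max error:", max_error)  # bounded by half a grid step times the scale
```

Each weight is stored as a 4-bit code plus one shared scale per block, which is where the memory saving comes from. A non-uniform grid such as nf4 places its 16 levels differently, but the store-codes-and-scale structure is the same idea.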

bnb_4bit_compute_dtype is set to torch.bfloat16 because, according to another HuggingFace write-up, this newer data format reduces the "wasted space" of traditional FP32 and avoids the potential overflow problem when converting FP32 to FP16.
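The overflow concern can be illustrated without a GPU. The following sketch uses only Python's standard struct module to round-trip a value through FP16 and through a simulated bfloat16. The helper names to_float16 and to_bfloat16 are hypothetical, and the bfloat16 conversion simply truncates the low 16 bits of the FP32 encoding, which is a simplification of how real hardware rounds.

```python
import struct


def to_float16(x: float) -> float:
    """Round-trip x through IEEE 754 half precision (FP16)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bfloat16(x: float) -> float:
    """Round-trip x through bfloat16 by keeping the top 16 bits of FP32.

    Assumption: plain truncation, not round-to-nearest.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


value = 70000.0  # larger than the biggest finite FP16 value (65504)

try:
    fp16_result = to_float16(value)
except OverflowError:
    # struct refuses to pack values that do not fit in FP16
    fp16_result = None

bf16_result = to_bfloat16(value)

print("FP16:", fp16_result)
print("bfloat16:", bf16_result)
```

bfloat16 keeps the 8-bit exponent of FP32, so it covers the same range with fewer mantissa bits: the value survives, but only approximately.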

Write a model quantization program

To sum up, it is not hard to write a simple program of fewer than 30 lines that quantizes the LLaMA2 model (I uploaded the program to soulteary/docker-llama2-chat/llama2-7b-cn-4bit/quantization_4bit.py):

import os

import torch
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

# Use the Chinese version
model_id = 'LinkSoul/Chinese-Llama-2-7b'
# Or, use the original version
# model_id = 'meta-llama/Llama-2-7b-chat-hf'

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    local_files_only=True,
    torch_dtype=torch.float16,
    quantization_config=BitsAndBytesConfig(
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.bfloat16
    ),
    device_map='auto'
)

# Create the output directory if it does not exist, then save the model
output = "soulteary/Chinese-Llama-2-7b-4bit"
os.makedirs(output, exist_ok=True)
model.save_pretrained(output)
print("done")

Perform quantization operations on the model

We save the above content as quantization_4bit.py, place it in the same directory as the LLaMA2 model directory (meta-llama or LinkSoul), and then run python quantization_4bit.py to start quantizing the model:

# python quantization_4bit.py
Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████████████| 3/3 [00:15<00:00, 5.01s/it]
done

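After a short wait, you will find the newly created model directory soulteary/Chinese-Llama-2-7b-4bit/ under the current program directory. Note that it still takes about 13 GB on disk; the drop to about 5 GB of video memory shows up when the model is loaded with load_in_4bit=True in the steps below: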

# du -hs soulteary/Chinese-Llama-2-7b-4bit/
13G soulteary/Chinese-Llama-2-7b-4bit/

# ls -al soulteary/Chinese-Llama-2-7b-4bit/
total 13161144
drwxr-xr-x 2 root root       4096 Jul 21 18:12 .
drwxr-xr-x 3 root root       4096 Jul 21 18:11 ..
-rw-r--r-- 1 root root        629 Jul 21 18:11 config.json
-rw-r--r-- 1 root root        132 Jul 21 18:11 generation_config.json
-rw-r--r-- 1 root root 9976638098 Jul 21 18:12 pytorch_model-00001-of-00002.bin
-rw-r--r-- 1 root root 3500316839 Jul 21 18:12 pytorch_model-00002-of-00002.bin
-rw-r--r-- 1 root root      26788 Jul 21 18:12 pytorch_model.bin.index.json
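As a quick sanity check on the listing above, the two weight shards can be added up to see how the 13G reported by du comes about. This is a small arithmetic sketch; the shard sizes are copied from the listing, and treating "7b" as exactly 7e9 parameters is an assumption made only for a rough per-parameter estimate.

```python
# Shard sizes in bytes, copied from the `ls -al` listing
shard_sizes = {
    "pytorch_model-00001-of-00002.bin": 9976638098,
    "pytorch_model-00002-of-00002.bin": 3500316839,
}

total_bytes = sum(shard_sizes.values())
total_gib = total_bytes / 2**30

# Assumption: "7b" is treated as exactly 7e9 parameters for a rough estimate
approx_params = 7e9
bytes_per_param = total_bytes / approx_params

print(f"total: {total_bytes} bytes = {total_gib:.2f} GiB")
print(f"about {bytes_per_param:.2f} bytes per parameter")
```

The total is about 12.55 GiB, which du -h rounds up to 13G. That works out to close to 2 bytes per parameter, the size one would expect for 16-bit weights on disk.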

Complete the model run file

The quantization step is done, but the model cannot be used yet because the tokenizer-related files are missing. Just as the official and Chinese versions of LLaMA2 are compatible with each other, the quantized model is compatible with the model before quantization.

Solving this is simple: we only need to copy the tokenizer files from the pre-quantization model into the new model directory:

cp LinkSoul/Chinese-Llama-2-7b/tokenizer.model soulteary/Chinese-Llama-2-7b-4bit/
cp LinkSoul/Chinese-Llama-2-7b/special_tokens_map.json soulteary/Chinese-Llama-2-7b-4bit/
cp LinkSoul/Chinese-Llama-2-7b/tokenizer_config.json soulteary/Chinese-Llama-2-7b-4bit/
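If you would rather do this copy step from Python, for example at the end of the quantization script, it could look roughly like the sketch below. The helper name copy_tokenizer_files and the TOKENIZER_FILES constant are hypothetical; the file names are the three from the cp commands above.

```python
import os
import shutil

# The three tokenizer files copied by the cp commands
TOKENIZER_FILES = (
    "tokenizer.model",
    "special_tokens_map.json",
    "tokenizer_config.json",
)


def copy_tokenizer_files(src_dir, dst_dir, names=TOKENIZER_FILES):
    """Copy tokenizer files from src_dir to dst_dir; return the names copied."""
    os.makedirs(dst_dir, exist_ok=True)
    copied = []
    for name in names:
        src_path = os.path.join(src_dir, name)
        # Skip any file that the source model directory does not contain
        if os.path.isfile(src_path):
            shutil.copy2(src_path, os.path.join(dst_dir, name))
            copied.append(name)
    return copied


# Example call (paths as used in this article):
# copy_tokenizer_files("LinkSoul/Chinese-Llama-2-7b",
#                      "soulteary/Chinese-Llama-2-7b-4bit")
```

Returning the list of copied names makes it easy to notice when a file was missing from the source directory.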

Adjust the model program

As mentioned above, the quantized model is used in the same way as the original one, so most of the program can stay the same. However, because this is a new model file, a few simple adjustments are still needed.

Update the model runner

To load and run the model in 4-bit, we need to adjust two things in the model.py used by the projects in the previous two articles: change the model_id variable, and add load_in_4bit=True to the AutoModelForCausalLM.from_pretrained call:

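import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Point to the directory that now holds the saved model and tokenizer files
model_id = 'soulteary/Chinese-Llama-2-7b-4bit'

if torch.cuda.is_available():
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        # Load the weights in 4-bit through bitsandbytes
        load_in_4bit=True,
        local_files_only=True,
        torch_dtype=torch.float16,
        device_map='auto'
    )
else:
    model = None
tokenizer = AutoTokenizer.from_pretrained(model_id)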

The complete code of this part can be found in soulteary/docker-llama2-chat/llama2-7b-cn-4bit/model.py .

Run the model application

I uploaded the model application to soulteary/docker-llama2-chat/llama2-7b-cn-4bit. Because it does not differ from the previous two articles, I will not go into detail here.

If you choose not to use a container, running python app.py directly will start the model application.

Build a new container image

To build the 4-bit image, run the script as in the previous article and wait for the build to finish:

bash scripts/make-7b-cn-4bit.sh

If you have already followed the first two articles, this should complete within 1 to 2 seconds.

Launch model application using container

Starting the application with the container works the same as in the previous article; just run the following script:

bash scripts/run-7b-cn-4bit.sh

Once the log shows Running on local URL: http://0.0.0.0:7860, the application is ready to use and test.

Memory resource usage

Video memory is the resource everyone cares about most. Starting the model takes a little over 5 GB of video memory.

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 525.125.06   Driver Version: 525.125.06   CUDA Version: 12.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0 Off |                  Off |
| 31%   61C    P2   366W / 450W |   5199MiB / 24564MiB |     99%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1396      G   /usr/lib/xorg/Xorg                167MiB |
|    0   N/A  N/A      1572      G   /usr/bin/gnome-shell               16MiB |
|    0   N/A  N/A      8595      C   python                           5012MiB |
+-----------------------------------------------------------------------------+

After using it for a while, usage still stays under 6 GB, which is quite acceptable.

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 525.125.06   Driver Version: 525.125.06   CUDA Version: 12.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0 Off |                  Off |
| 32%   50C    P8    35W / 450W |   5725MiB / 24564MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1402      G   /usr/lib/xorg/Xorg                167MiB |
|    0   N/A  N/A      1608      G   /usr/bin/gnome-shell               16MiB |
|    0   N/A  N/A     24950      C   python                           5538MiB |
+-----------------------------------------------------------------------------+
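To put these numbers in perspective, a rough back-of-the-envelope estimate of the weight memory at different bit widths can be sketched as follows. The function name weight_memory_gib is hypothetical, and treating "7b" as exactly 7e9 parameters is an assumption; the observed figure is the python process memory from the first nvidia-smi table.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Return the memory needed for num_params weights at a bit width, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


# Assumption: "7b" is treated as 7e9 parameters; the real count differs slightly
NUM_PARAMS = 7e9

fp16_gib = weight_memory_gib(NUM_PARAMS, 16)
int4_gib = weight_memory_gib(NUM_PARAMS, 4)

# Memory of the python process right after startup, from nvidia-smi (5012MiB)
observed_gib = 5012 / 1024

print(f"16-bit weights: {fp16_gib:.2f} GiB")
print(f" 4-bit weights: {int4_gib:.2f} GiB")
print(f"observed:       {observed_gib:.2f} GiB")
```

The 16-bit estimate of roughly 13 GiB lines up with the 13 to 14 GB seen before quantization. The observed 4-bit usage is higher than the bare weight estimate, which is probably explained by parts of the model kept at higher precision, quantization metadata, and runtime buffers, although this article does not measure that breakdown.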

Finally

Not everyone has one or more 4090s or A100s, so even though quantization costs some quality, that is better than being unable to run the model or join in because of insufficient video memory.

Moreover, even with reduced quality, the model is still suitable for many scenarios. We will expand on this in a following article.

The art of engineering lies in "trade-offs". A new friend happened to mention this a while ago, and it awakened something that had long been buried in my heart.

This article is licensed under the "Attribution 4.0 International (CC BY 4.0)" license. You are welcome to reprint or reuse it, but you need to credit the source.

Author of this article: Su Yang

Creation time: July 22, 2023

Link to this article: https://soulteary.com/2023/07/22/quantizing-meta-ai-llama2-chinese-version-large-models-using-transformers.html