1. What is a depth camera


A conventional RGB camera is a 2D camera: it captures the objects within its field of view but records no distance from those objects to the camera, so distance can only be judged by eye rather than measured. A depth camera measures the distance from each point on an object to the camera. Combined with the point's X and Y coordinates in the 2D image plane, this gives the 3D coordinates of every point. Typical applications therefore include 3D reconstruction, target positioning, and recognition.
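As a rough illustration of how a depth value and a 2D pixel position combine into a 3D point, here is a minimal sketch that assumes an ideal pinhole camera model. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the sample pixel are hypothetical values chosen for the example, not taken from any particular camera.

```python
def pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a measured depth into camera-frame XYZ.

    Assumes an ideal pinhole model with no lens distortion.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 sensor.
fx = fy = 500.0
cx, cy = 320.0, 240.0

# A pixel 100 columns right of the image centre, measured at 2 m depth.
point = pixel_to_point(420, 240, 2.0, fx, fy, cx, cy)
print(point)  # roughly 0.4 m to the right, 0 m vertically, 2 m away
```

A real camera would also need its lens distortion corrected before this step.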

2. Introduction of Depth Camera

There are three types of depth camera solutions currently on the market.

(1) Structured light: representative companies and products include Orbbec, Apple (PrimeSense), Microsoft Kinect-1, Intel RealSense, and Mantis Vision.

(2) Binocular vision (stereo): representative companies and products include Leap Motion, ZED, and DJI.

(3) Optical time-of-flight (TOF): representative companies and products include Microsoft Kinect-2, PMD, SoftKinetic, and Lenovo Phab.

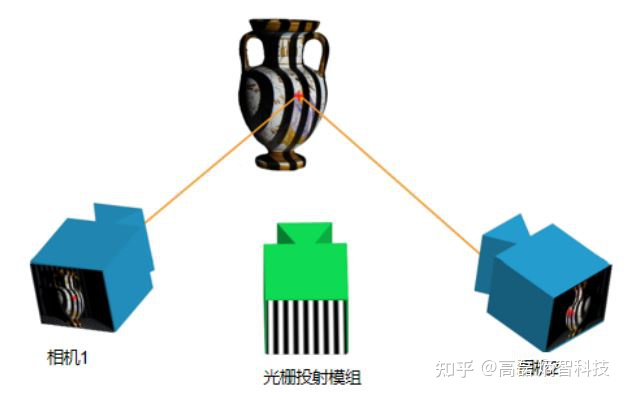

1. Structured light depth camera

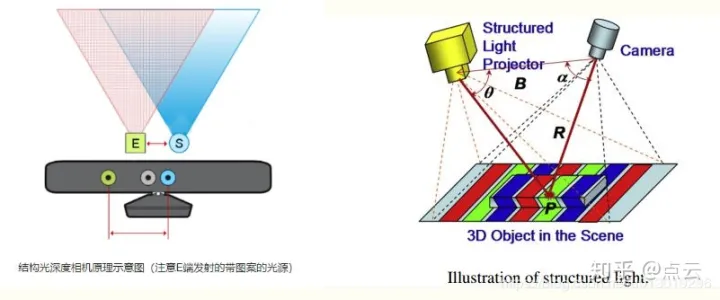

Structured Light Camera Principle

The basic principle is that light with known structural characteristics is projected onto the subject by a near-infrared laser and then captured by a dedicated infrared camera. Because different regions of the subject lie at different depths, the captured pattern carries different image phase information. A computing unit converts this change in the pattern into depth information and recovers the three-dimensional structure. (Changes in the light structure map to depth information.)

Put simply, the three-dimensional structure of the subject is obtained by optical means, and that information is then put to further use. The light source is usually an invisible infrared laser of a specific wavelength. The emitted light is encoded and projected onto the object, and an algorithm computes the distortion of the returned coded pattern to obtain the object's position and depth.

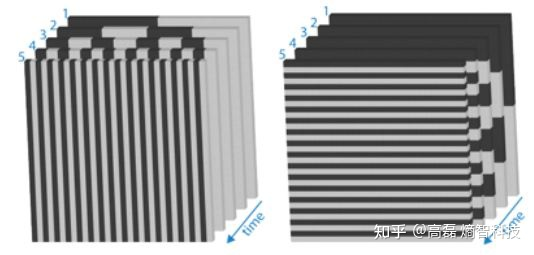

Infrared Laser Encoding for Structured Light Cameras

Structured light is generally classified by coding pattern: stripe structured light (representative sensor: EnShape), coded structured light (representative sensors: Mantis Vision, RealSense F200), and speckle structured light (representative sensors: Apple (PrimeSense), Orbbec).

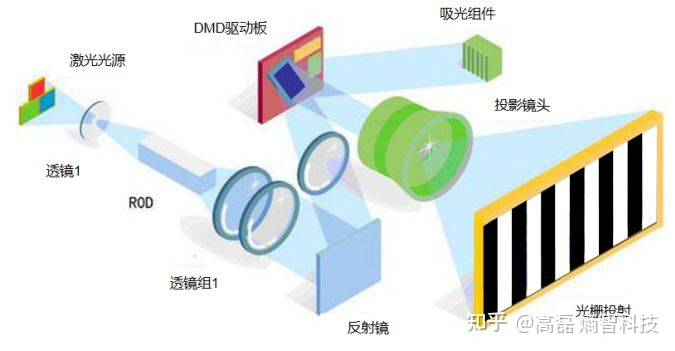


Structured Light Camera Features

The main advantages of structured light (speckle) are:

1) The solution is mature, and the camera baseline can be made relatively small, which is convenient for miniaturization.

2) Resource consumption is low: the depth map can be computed from a single IR frame, and power consumption is low.

3) The light source is active, so the camera also works at night.

4) Precision and resolution are high within a certain range. The resolution can reach 1280x1024 and the frame rate can reach 60 FPS.

The disadvantages of speckle structured light are the same as those of structured light in general:

1) It is easily disturbed by ambient light, so outdoor performance is poor.

2) Accuracy deteriorates as the detection distance increases.
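To give a feel for how quickly accuracy falls off with distance, the sketch below uses a common first-order approximation for triangulation-based sensors: the depth error grows roughly with the square of the distance. The focal length, baseline, and pattern-matching error used here are hypothetical values, and the formula is a simplification rather than a model of any specific product.

```python
def depth_error(z_m, focal_px, baseline_m, disparity_error_px=0.1):
    """Approximate depth uncertainty for a triangulation-based sensor.

    First-order model: dz ~= z^2 * dd / (f * b), where dd is the error
    in locating the pattern (in pixels), f the focal length in pixels,
    and b the projector-camera baseline in metres.
    """
    return z_m * z_m * disparity_error_px / (focal_px * baseline_m)


# Hypothetical sensor: 600 px focal length, 5 cm baseline.
for z in (0.5, 1.0, 2.0, 4.0):
    err_mm = depth_error(z, 600.0, 0.05) * 1000.0
    print(f"{z:.1f} m -> about {err_mm:.1f} mm of depth error")
```

In this model, doubling the distance quadruples the error, which is one reason a small baseline helps miniaturization but costs accuracy at range.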

2. TOF

TOF principle

As the name implies, TOF measures the time of flight of light to obtain distance. The camera continuously emits light pulses toward the target, receives the reflected light on its sensor, and derives the target distance from the round-trip flight time. Because light travels so fast, timing the flight directly is not practical, so the time is generally obtained by detecting the phase shift of a modulated light wave.

According to the modulation method, TOF is generally divided into two types: pulsed modulation and continuous-wave modulation. Pulsed modulation requires a very high-precision clock and must emit high-frequency, high-intensity laser light, so most current products detect the phase offset instead. Put simply, the camera emits modulated light, the light is reflected back when it hits an object, and the round-trip time is captured. Because the speed of light and the wavelength of the modulated light are known, the distance to the object can be calculated quickly and accurately.
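The phase-shift approach can be sketched as follows. This is a simplified simulation of continuous-wave TOF with four samples per modulation period, not the processing pipeline of any real sensor. The 20 MHz modulation frequency, the signal amplitude and offset, and the sampling sign convention are all assumptions made for the example; real devices differ in these details.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_to_distance(phase_rad, mod_freq_hz):
    """Distance from round-trip phase shift: d = c * phi / (4 * pi * f)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def four_bucket_phase(a0, a1, a2, a3):
    """Recover the phase from four samples taken 90 degrees apart."""
    return math.atan2(a1 - a3, a0 - a2) % (2.0 * math.pi)


def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Simulated sensor samples for a target at a known distance (no noise)."""
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / C
    return [offset + amplitude * math.cos(phase - k * math.pi / 2.0)
            for k in range(4)]


mod_freq = 20e6  # hypothetical 20 MHz modulation
samples = simulate_samples(3.0, mod_freq)
estimate = phase_to_distance(four_bucket_phase(*samples), mod_freq)

# Beyond this distance the phase wraps around and becomes ambiguous.
unambiguous_range = C / (2.0 * mod_freq)
print(f"estimated distance: {estimate:.3f} m")
print(f"unambiguous range:  {unambiguous_range:.2f} m")
```

The four samples per measurement are one example of the repeated sampling that makes phase-based TOF comparatively expensive to compute.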

TOF Features

Because TOF is not based on feature matching, its accuracy does not drop rapidly as the measurement distance grows. At present, autonomous driving and some high-end consumer lidars are mostly implemented with this method. The main advantages of TOF are:

1) Long detection distance. With sufficient laser energy, it can reach tens of meters.

2) Less interference from ambient light.

But TOF also has some obvious problems:

1) High requirements on equipment, especially the time measurement module.

2) Large resource consumption. This scheme needs multiple sampling integrations when detecting the phase offset, and the computation load is large.

3) The edge precision is low.

4) Limited by resource consumption and filtering, the frame rate and resolution cannot be very high.

Comparison between structured light and TOF

By comparison, structured light consumes less power and is more mature, which makes it better suited to static scenes. TOF has lower noise at long distances and a higher frame rate, which makes it better suited to dynamic scenes.

At present, structured light is mainly used for unlocking and secure payment, and its working distance is limited. TOF is mainly used in the rear cameras of smartphones and is also useful in AR, VR, and related fields (including 3D photography and motion-sensing games).

3. Binocular stereo vision

Binocular stereo vision is an important form of machine vision. Based on the principle of parallax, it uses imaging equipment to capture two images of the measured object from different positions and recovers the object's three-dimensional geometry by calculating the positional offset (disparity) between corresponding points in the two images.
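For a rectified stereo pair, the parallax principle reduces to a simple relation between disparity and depth. The snippet below is a minimal sketch of that relation. The focal length, baseline, and disparity values are hypothetical, and it assumes the two cameras are identical, parallel, and already calibrated.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth from disparity for a rectified stereo pair: z = f * b / d.

    A disparity of zero (or less) means the point is effectively at
    infinity or the match is invalid, so infinity is returned.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm between the cameras.
depth = disparity_to_depth(35.0, 700.0, 0.12)
print(f"depth: {depth:.2f} m")  # about 2.4 m
```

Nearby objects produce large disparities and distant objects small ones, so depth resolution is finest close to the camera.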

Of course, a complete binocular depth calculation is quite complicated. It mainly involves matching features between the left and right cameras, and the computation consumes a lot of resources. The main advantages of binocular cameras are:

1) No structured light or TOF transmitter and receiver is needed, so hardware requirements and cost are low. Ordinary CMOS cameras will do.

2) Suitable for both indoor and outdoor use, as long as the lighting is adequate and not too dim.

But the disadvantages of binocular are also very obvious:

1) Very sensitive to ambient light. Changes in lighting cause large differences between the images, which can lead to matching failures or low accuracy.

2) Not suitable for monotonous scenes that lack texture. Binocular vision matches images by visual features, so a scene without features causes matching to fail.

3) High computational complexity. This is a purely visual method, which places high demands on the algorithm and requires a large amount of computation.

4) The baseline limits the measurement range. The measurement range is proportional to the baseline (the distance between the two cameras), which makes miniaturization difficult.
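The dependence on texture and the matching cost mentioned in the list above can be seen in a toy example. The sketch below runs a sum-of-absolute-differences (SAD) block match along a single scanline. SAD is just one simple matching cost picked for illustration, the pixel values are made up, and real stereo pipelines are considerably more elaborate.

```python
def best_disparity(left, right, x, half_window, max_disparity):
    """Find the disparity at left-image column x by SAD block matching.

    Returns (best_disparity, costs), where costs[d] is the matching cost
    of shifting the window d pixels to the left in the right image.
    """
    costs = []
    for d in range(max_disparity + 1):
        cost = 0
        for k in range(-half_window, half_window + 1):
            cost += abs(left[x + k] - right[x + k - d])
        costs.append(cost)
    best = min(range(len(costs)), key=costs.__getitem__)
    return best, costs


# Textured scanline: the left view is the right view shifted by 3 pixels.
right_textured = [12, 80, 45, 200, 33, 150, 90, 10,
                  220, 60, 130, 5, 175, 95, 40, 210]
true_disparity = 3
left_textured = [0] * true_disparity + right_textured[:-true_disparity]

d_textured, costs_textured = best_disparity(left_textured, right_textured, 10, 2, 5)
print("textured:", d_textured, costs_textured)

# Textureless scanline: every candidate disparity matches equally well.
flat = [100] * 16
d_flat, costs_flat = best_disparity(flat, flat, 10, 2, 5)
print("flat:    ", d_flat, costs_flat)
```

On the textured line the cost has a single clear minimum at the true shift. On the flat line every candidate costs the same, so the returned disparity is arbitrary. Even this toy version evaluates every candidate shift for each pixel, which hints at the computational load of a full image.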

3. Summary

Each of the three mainstream 3D imaging solutions above has its own advantages and disadvantages. In practical applications outside autonomous driving, however, structured light, and speckle structured light in particular, is the most widely used, because neither binocular vision nor TOF strikes as good a balance of accuracy, resolution, and range of application scenarios. Structured light is admittedly easily disturbed by ambient light, especially sunlight. However, since this type of camera already has an infrared laser emission module, it is easy to convert it into an active binocular camera to compensate for this weakness.

Disclaimer: This article is a compilation of several reference articles. Any infringing content will be removed immediately.