Paper: Taming Transformers for High-Resolution Image Synthesis

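VQGAN (Vector Quantized Generative Adversarial Network) is a generative model that encodes images into discrete codes and decodes those codes back into high-quality images, using adversarial training to keep the results sharp. It was introduced by Patrick Esser, Robin Rombach, and Björn Ommer of Heidelberg University in the paper above, which appeared on arXiv in December 2020 and was presented at CVPR 2021.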

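The VQGAN model has two core parts: Vector Quantization (VQ) and a GAN. VQ is a compression technique that represents continuous data with entries from a finite codebook of vectors. In VQGAN, an encoder maps an input image to a grid of continuous feature vectors, and each vector is replaced by its nearest codebook entry. The decoder, which plays the role of the GAN generator, reconstructs the image from these discrete codes. To generate new images, the paper trains a transformer to model sequences of code indices, which the decoder then turns into an image.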
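The quantization step described above can be illustrated with a minimal NumPy sketch. The function name `quantize`, the toy codebook, and the toy feature vectors are all made up for illustration; a real VQGAN learns its codebook and runs this lookup on a grid of encoder outputs.

```python
import numpy as np

def quantize(features, codebook):
    """Replace each feature vector with its nearest codebook entry.

    features: array of shape (n, d), stand-ins for continuous encoder outputs.
    codebook: array of shape (k, d), stand-ins for learned code vectors.
    Returns (indices, quantized) with shapes (n,) and (n, d).
    """
    # Squared Euclidean distance between every feature and every code: shape (n, k).
    diffs = features[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    # Index of the closest code for each feature vector.
    indices = np.argmin(dists, axis=1)
    return indices, codebook[indices]

# Toy data (hypothetical values, chosen only to make the lookup easy to follow).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]])
features = np.array([[0.9, 1.2], [-0.1, 0.2], [-0.8, 0.7]])

indices, quantized = quantize(features, codebook)
print(indices.tolist())  # [1, 0, 2]
```

Only the integer indices need to be stored or modeled afterwards, which is what makes the representation discrete.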

VQGAN models can be used for tasks such as image generation, image editing, and image retrieval. Training a VQGAN requires a large image dataset and some preprocessing, such as data augmentation and image cropping. During training, the model optimizes two main loss terms: a quantization loss (the error between the encoder's continuous outputs and the codebook vectors they are mapped to) and an adversarial loss between the generator and the discriminator. These are combined with a reconstruction loss, which the paper implements as a perceptual loss.
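As a rough guide to how these terms fit together, the objective can be written as follows. This uses the standard VQ-VAE form of the quantization loss; the symbols are notation assumed here rather than taken from the text above: $E$ is the encoder, $G$ the decoder (generator), $D$ the discriminator, $\mathcal{Z}$ the codebook, $z_q$ the quantized code, $\hat{x} = G(z_q)$ the reconstruction, $\operatorname{sg}[\cdot]$ the stop-gradient operator, and $\beta$, $\lambda$ weighting factors.

$$
\begin{aligned}
\mathcal{L}_{\text{VQ}} &= \lVert x - \hat{x} \rVert^2
  + \lVert \operatorname{sg}[E(x)] - z_q \rVert_2^2
  + \beta \, \lVert \operatorname{sg}[z_q] - E(x) \rVert_2^2 \\
\mathcal{L}_{\text{GAN}} &= \log D(x) + \log\bigl(1 - D(\hat{x})\bigr) \\
\text{objective:} \quad & \min_{E,\,G,\,\mathcal{Z}} \; \max_{D} \;\; \mathcal{L}_{\text{VQ}} + \lambda \, \mathcal{L}_{\text{GAN}}
\end{aligned}
$$

The first term of $\mathcal{L}_{\text{VQ}}$ is the reconstruction error, the second pulls codebook vectors toward the encoder outputs, and the third keeps the encoder outputs close to the codes they select.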

In practice, VQGAN can be used for many interesting tasks, such as image-to-image translation, image editing, style transfer, and image generation from text (typically by pairing it with a separate text-image model such as CLIP). VQGAN marked a step forward for image generation and attracted a lot of attention on social media.

Its main technical details are as follows:

- Vector Quantization: VQGAN uses Vector Quantization (VQ) to represent continuous features as discrete codes. The input image is first encoded into a grid of continuous vectors, and each vector is then replaced by its nearest neighbor in a learned codebook, the VQ space. The encoder and decoder are ordinary convolutional networks; the discretization happens in the quantization step between them.

- Generative Adversarial Networks: VQGAN uses the structure of a GAN to generate images. A GAN consists of two models, the generator and the discriminator. The generator produces images, and the discriminator judges whether an image is real or generated. During training, the two are optimized against each other, which pushes the generated images closer to real ones. In VQGAN, the decoder acts as the generator, and the paper uses a patch-based discriminator.

- Multi-Scale Architecture: VQGAN's encoder and decoder are convolutional networks that work at several resolutions. The encoder applies stacked convolutional layers with downsampling to extract features at progressively coarser scales. The decoder restores these features to an image through upsampling and convolutional layers. This structure helps VQGAN generate more detailed images.

- Adversarial Training and Vector Quantization: during training, VQGAN optimizes a quantization loss (the error between the encoder's continuous outputs and the codebook vectors they are mapped to) together with the adversarial loss between the generator and the discriminator. These losses are optimized jointly rather than in separate stages.

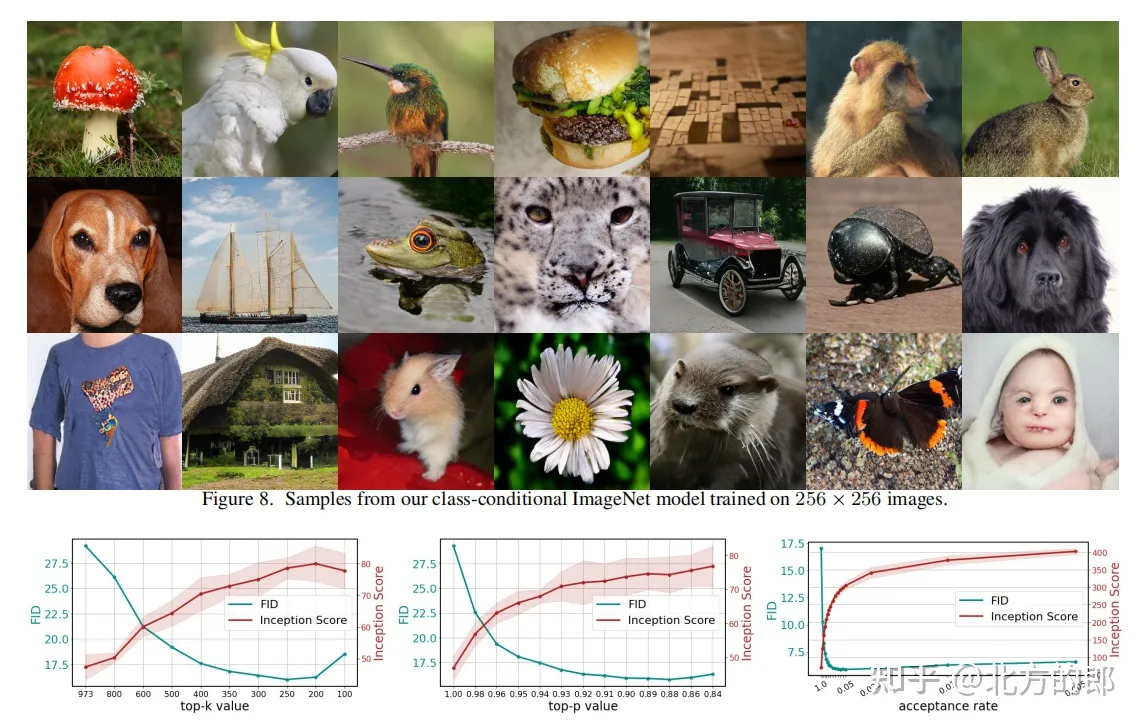

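- Conditional Generation: VQGAN also supports conditional generation, that is, supplying extra information when generating images, such as a class label or a segmentation map. In the paper, this conditioning is handled by the transformer that models the code sequence. Generating images from a text description is typically done by combining VQGAN with a separate text-image model such as CLIP. Conditioning broadens the range of applications for VQGAN.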
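To make the downsampling and conditioning items above more concrete, here is a small sketch of the bookkeeping involved. It assumes each downsampling stage halves both spatial dimensions, and that conditioning is done by prepending condition tokens to the raster-scan sequence of code indices, which is the approach the paper describes for its transformer. The helper names `latent_grid` and `build_sequence` and the token values are hypothetical, and a real implementation would also need to decide how condition and image tokens share a vocabulary.

```python
import numpy as np

def latent_grid(height, width, num_downsamples):
    """Return (rows, cols, num_tokens) for the grid of discrete codes.

    Assumes every downsampling stage halves height and width, so the
    total reduction factor is 2 ** num_downsamples.
    """
    factor = 2 ** num_downsamples
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    rows, cols = height // factor, width // factor
    return rows, cols, rows * cols

def build_sequence(code_grid, condition_tokens):
    """Flatten a 2D grid of code indices row by row and prepend condition tokens."""
    # reshape(-1) on a C-ordered array walks the grid in raster-scan order.
    image_tokens = np.asarray(code_grid).reshape(-1)
    return np.concatenate([np.asarray(condition_tokens), image_tokens])

# A 256x256 image with 4 halving stages becomes a 16x16 grid of 256 codes.
print(latent_grid(256, 256, 4))  # (16, 16, 256)

# Toy 2x2 grid of codebook indices with a made-up class-label token in front.
code_grid = np.array([[5, 2], [7, 1]])
class_token = [42]
sequence = build_sequence(code_grid, class_token)
print(sequence.tolist())  # [42, 5, 2, 7, 1]
```

An autoregressive model trained on such sequences predicts each image token given the condition tokens and the image tokens before it, and the decoder then maps the predicted grid back to pixels.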

Overall, VQGAN achieves high-quality image generation by using VQ techniques and GAN structures, as well as techniques such as multi-scale architectures and conditional generation.