Introduction to Generative Adversarial Networks

This blog will introduce Generative Adversarial Networks (GANs), various GAN variants, and interesting applications to solve real world problems. While most of the examples in this post are about using GANs for art and design, the same technique can be easily adapted and applied to many other fields: medicine, agriculture, and climate change. GANs are powerful and versatile.

Generative Adversarial Networks (GANs) Generative Adversarial Networks

This is the first article in the GAN series of tutorials:

- Introduction to Generative Adversarial Networks (this blog)

- Getting Started: DCGAN for Fashionable MNIST

- GAN Training Challenge: DCGAN on Color Images

1. Principle

1. How GANs work

GANs are generative models that observe many sample distributions and generate more samples from the same distribution. Other generative models include variational autoencoders (VAE variational autoencoders) and autoregressive models (Autoregressive models).

2. GAN architecture

In the basic GAN architecture there are two networks: the generator model and the discriminator model. GANs have the word "adversarial" in their name because the two networks are trained simultaneously and compete against each other, as in a zero-sum game like chess.

The generator model generates new images. The goal of the generator is to produce images that look so real that they fool the discriminator. In the simplest GAN architecture for image synthesis, the input is usually random noise and its output is the generated image.

A discriminator is just a binary image classifier whose job it is to classify whether an image is real or fake. In more complex GANs, the discriminator can be conditioned with images or text for image-to-image translation or text-to-image generation (Image-to-Image translation or Text-to-Image generation) .

On the whole, the basic architecture of GAN is as follows: the generator generates fake images; the real images (training data set) and fake images are input into the discriminator in batches. Then, the discriminator tells whether the image is real or fake.

3. Training GANs

Minimax game: G vs. D

Most deep learning models (such as image classification) are based on optimization: finding low values of the cost function. GANs are different because of two networks: the generator and the discriminator, each with its own cost and opposite goals: the generator

tries to trick the discriminator into seeing fake images as real and the

discriminator tries to correctly classify Classifying real and fake images

The following mathematical function of the minimax game illustrates this adversarial dynamic during training. Don't worry too much if you don't understand the math, I will explain it in more detail when encoding the G loss and D loss in a future DCGAN article.

During training, both the generator and the discriminator improve over time. Generators are getting better at generating images similar to the training data, while discriminators are getting better at distinguishing real images from fake ones.

GANs are trained to find an equilibrium in the game when

the data generated by the generator looks almost identical to the training data.

The discriminator is no longer able to tell the difference between fake and real images.

4. Artists and critics

Imitating masterpieces is a great way to learn about art - "How Artists Reproduced Masterpieces in World Famous Museums". As a human artist who imitates a masterpiece, I find an artwork I like as inspiration and replicate as much of it as I can: outlines, colors, composition and brushwork, and so on. Then a critic took one look at the book and told whether it looked like a true masterpiece.

GANs are trained similarly to this process by thinking of the generator as an artist and the discriminator as a critic. Note the difference in analogy between human and machine artists, though: the generator cannot access or see the masterpiece it is trying to replicate. Instead, it relies solely on feedback from the discriminator to improve the images it generates.

5. Evaluation Indicators

A good GAN model should have good image quality - e.g. not blurry and similar to the training images; diversity: a wide variety of images are generated that approximate the distribution of the training dataset.

To evaluate GAN models, the generated images can be visually inspected during training or by inference with the generator model. If you want to quantitatively evaluate GANs, here are two popular evaluation metrics:

- Inception Score captures the quality and diversity of generated images

- Fréchet Inception Distance compares real and fake images instead of just evaluating generated images in isolation

6. GAN variants

Since the original GAN paper by Ian Goodfellow et al. in 2014, many GAN variants have emerged. They tend to build upon each other, either to solve specific training problems or to create new GANs architectures that allow for finer control over GANs or better images.

Below are some of these breakthrough variants, laying the groundwork for future GAN advancements. Anyway, this is not a complete list of all GAN variants.

-

DCGAN (Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks) is the first GAN proposal to use a Convolutional Neural Network (CNN) in its network architecture. Most current GAN variants are based on DCGAN to some extent. Therefore, DCGAN is likely to be your first GAN tutorial, learn the "Hello World" of GAN.

-

WGAN (Wasserstein GAN) and WGAN-GP (wasserstein GAN) were created to address GAN training challenges such as mode collapse - when the generator repeatedly generates the same image or a small portion (of a training image). Instead of weight clipping to improve training stability, WGAN is improved.

-

cGAN (Conditional Generative Adversarial Networks) first introduced the concept of generating images based on a condition, which could be an image class label, an image, or text, as in more complex GANs. Both Pix2Pix and CycleGAN are conditional GANs that use images as conditions for image-to-image translation.

-

Pix2PixHD (High-Resolution Image Synthesis and Semantic Operations with Conditional GANs) removes the influence of multiple input conditions, and as shown in the paper example: Controlling the color, texture, and shape of generated clothing images for fashion design. Furthermore, it can generate realistic 2k high-resolution images.

-

SAGAN (Self-Attention Generative Adversarial Network) improves image synthesis quality: By applying self-attention modules (a concept in NLP models) to neural networks, details are generated using cues from all feature locations. Google DeepMind expanded the scale of SAGAN and created BigGAN.

-

BigGAN (Large-Scale GAN Training for High-Fidelity Natural Image Synthesis) can create high-resolution and high-fidelity images.

-

ProGAN, StyleGAN, and StyleGAN2 can all create high-resolution images.

-

ProGAN (Progressive Growth of GANs for Quality, Stability, and Variation) makes networks grow incrementally.

-

StyleGAN (a style-based generator architecture for generative adversarial networks) introduced by NVIDIA Research uses the progressive growth of ProGAN with Adaptive Instance Normalization (AdaIN) plus image style transfer, and the ability to control the style of generated images .

-

StyleGAN2 (Analyzing and Improving Image Quality of StyleGAN) improves on the original StyleGAN by making some improvements in the areas of normalization, progressive growth, and regularization techniques.

7. GAN application

GANs are general and can be used in various applications.

8. Image synthesis

Image composition can be fun and serve practical purposes, such as image enhancement in machine learning (ML) training or to help create artwork and design assets.

GANs can be used to create images that never existed before, and this is perhaps what GANs are best known for. They can create unseen new faces, cat figures and artwork, and more. I've included some high-fidelity images below that I generated from the StyleGAN2 powered website. Go to these links, experiment for yourself, and see what images you get from experimenting.

Zalando Research uses GANs to generate stylish designs based on color, shape, and texture (disentangling multiple conditional inputs in GANs).

Fashion++, a Facebook study, goes beyond creating fashion to recommending fashion-changing advice: "What is fashion?"

GANs can also help train reinforcers. For example, NVIDIA's GameGAN simulates a gaming environment.

9. Image-to-image translation

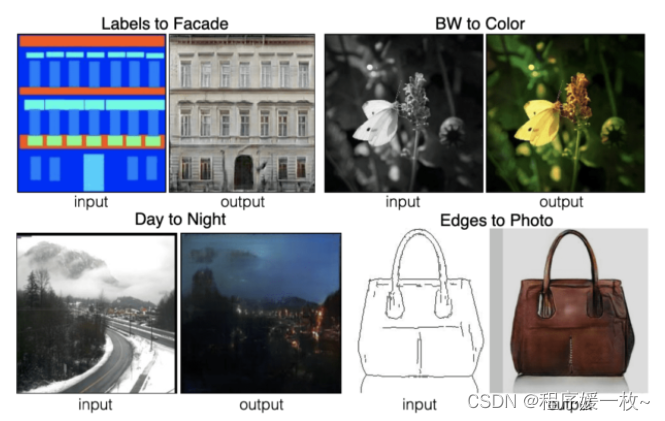

Image-to-image translation is a computer vision task that transforms an input image into another domain (e.g., color or style) while preserving the original image content. This is perhaps one of the most important tasks for using GANs in art and design.

Pix2Pix (Image-to-Image Translation with Conditional Adversarial Networks) is a conditional GAN, probably the most famous image-to-image translation GAN. However, a major drawback of Pix2Pix is that it requires a dataset of paired training images.

Image-to-Image Translation Using Conditional Adversarial Networks

investigates the image-to-image translation problem with conditional adversarial networks as a general solution. These networks not only learn a mapping from an input image to an output image, but also learn a loss function to train this mapping. This makes it possible to apply the same general approach to problems that traditionally require very different loss formulations. We demonstrate that this approach is effective for synthesizing images from label maps, reconstructing objects from edge maps, and colorizing images, among other tasks. In fact, since the release of the pix2pix software this paper, a large number of netizens (among them artists) have posted their own experiments with the system, further demonstrating its broad applicability and ease of adoption without tuning parameters. As this work shows it is possible to obtain one of the reasonable result functions without manually designing the loss.

Entanglement of Multiple Conditional Inputs in GANs

In this paper, we propose a method to disentangle the influence of multiple input conditioning in generative adversarial networks (GANs). In particular, methods for controlling the color, texture, and shape of generated clothing images for computer-aided fashion design are demonstrated. To untangle the influence of input attributes, we tailor a conditional GAN with a consistency loss function. Experiments adjust one input at a time and show that the network can be guided to generate novel and realistic images of clothing. Also presented is a garment design process that estimates input properties of existing garments and modifies them using a generator.

CycleGAN builds on Pix2Pix and only requires unpaired images, which are more readily available in the real world. It can convert images of apples to oranges, day to night, horses to zebras...well. These may not be real-world use cases; since then, many other image-to-image Gans have been developed for art and design.

Now you can translate your selfies into manga, drawing, cartoon or any other style you can imagine. For example, using White-box Cartoon GAN (White-box CartoonGAN) to turn my selfie into a cartoon version.

Colorization can be applied not only to black and white photos, but also to artwork or design assets. During artwork making or UI/UX design, we start with an outline or silhouette and then color it. Automatic coloring can provide inspiration for artists and designers.

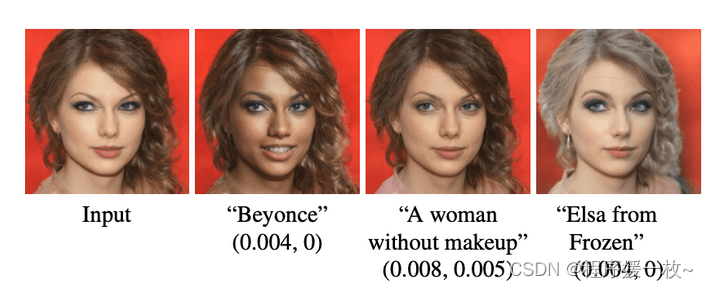

10. Text-to-Image

We have seen many examples of image-to-image translation by GANs. It is also possible to use words as conditions to generate images, which is more flexible and intuitive than using class labels as conditions.

The combination of NLP and computer vision has become a hot research field in recent years. Here are a few examples: StyleCLIP and Taming Transformers for High-Resolution Image Synthesis (StyleCLIP and Taming Transformers for High-Resolution Image Synthesis).

We show how to (i) use CNNs to learn context-rich image lexical components, and then (ii) utilize Transformers to efficiently model composition in high-resolution images. Our method is readily applicable to conditional synthesis tasks, where both non-spatial information such as object class and spatial information such as segmentation can control the generated images. In particular, we show the state-of-the-art on using Transformers for semantic-guided synthesis of megapixel images and obtaining class-conditional autoregressive models on ImageNet.

11. Go beyond images

GANs can be used not only for images, but also for music and video. For example, GANSynth in the Magenta project can make music. Here is an interesting example of GAN video action transfer called "Everyone Dance Now" (YouTube | Paper). I've always enjoyed watching this fascinating video of a professional dancer's dance moves being transferred to an amateur.

12. Other GAN applications

Here are some other GAN applications:

-

Image Repair: Replace missing parts in an image.

-

Image uncropping or stretching: This may help simulate camera parameters in virtual reality.

Unbounded: Generative Adversarial Networks for Image Expansion

Image expansion models have broad applications in image editing, computational photography, and computer graphics. While image inpainting has been extensively studied in the literature, it is challenging to directly apply state-of-the-art inpainting methods to image extensions as they tend to generate semantically inconsistent blurred or repeated pixels. We introduce semantic conditioning into the discriminator of generative adversarial networks (GANs), and achieve promising results on image extension with coherent semantics and visually pleasing colors and textures. We also show promising results in extreme extensions, such as panorama generation.

- Super-resolution (SRGAN and ESRGAN): Enhance images from low-resolution to high-resolution. This can be very helpful for photo editing or medical image enhancement.

Photorealistic single-image super-resolution using generative adversarial networks

Despite breakthroughs in the accuracy and speed of single-image super-resolution using faster and deeper convolutional neural networks, a core problem remains Unsolved: How can finer texture details be recovered when super-resolution is performed at massive upscaling factors? The behavior of optimization-based super-resolution methods is mainly driven by the choice of objective function. Most recent work has focused on minimizing the mean squared reconstruction error. The resulting estimates have high peak signal-to-noise ratios, but they tend to lack high-frequency detail and are perceptually unpleasing because they cannot match the fidelity expected at higher resolutions. In this paper, we propose SRGAN, a generative adversarial network (GAN) for image super-resolution (SR). To the best of our knowledge, this is the first framework capable of inferring photorealistic natural images with a 4x magnification factor. To achieve this, we propose a perceptual loss function consisting of an adversarial loss and a content loss. The adversarial loss pushes our solution onto the natural image manifold using a discriminator network trained to distinguish super-resolution images from raw photo-realistic images. Furthermore, a content loss driven by perceptual similarity rather than similarity in pixel space is used. Deep residual networks are able to recover photorealistic textures from massively downsampled images on public benchmarks. An extensive Mean Opinion Score (MOS) test shows a very significant improvement in perceptual quality using SRGAN. The MOS scores obtained with SRGAN are closer to those of the original high-resolution images than those obtained with any state-of-the-art method.

Here is an example of how GANs can be used to combat climate change. Earth Intelligence Engine is an FDL (Frontier Development Lab) 2020 project that uses Pix2PixHD to simulate what an area would look like after a flood.

Earth Intelligence Engine is an FDL 2020 project to help urban planners communicate flood risk more effectively and intuitively to support the development of climate-resilient infrastructure.

We create the first physically consistent, photorealistic visualization engine of coastal flooding and reforestation by combining generative computer vision methods with climate science models. We achieve this through novel methods for ensuring the physical consistency of GAN-generated images, and a modular approach using multiple vision and climate models.

We've seen demonstrations of GANs from papers and research labs. and open source projects. These days, we're starting to see real commercial applications using GANs. Designers are familiar with using the design resources in Icons8. Take a look at their website and you'll notice applications of GANs: from Smart Upscaler, Generated Photos to Face Generator.