1. What is the rendering pipeline

The rendering pipeline (also called the graphics pipeline) is the sequence of stages that turns model data into an image on screen. Its process is: model data -> vertex shader -> tessellation shader -> geometry shader -> clipping -> screen mapping -> triangle setup -> triangle traversal -> fragment shader -> per-fragment operations -> final image

2. Stages in detail

1. Vertex Shader

The vertex shader is fully programmable, and its input comes from the model data sent by the CPU. As the name implies, the vertex shader processes vertices: it is invoked once for every vertex in the input data.

The vertex shader cannot create or destroy vertices, and it cannot access the relationships between vertices (for example, whether two vertices belong to the same triangle).

Its main tasks are coordinate transformation (from model space to clip space), per-vertex lighting, and outputting the data that later stages need.
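As a rough illustration of the coordinate-transformation task, the Python sketch below does on the CPU what a vertex shader does for each vertex. It multiplies the vertex position (with w = 1) by a combined model-view-projection matrix to get a clip-space position, and it passes the normal through for later stages. Several details are assumptions made for this example: the names `vertex_shader` and `mat_vec_mul`, the row-major nested-list matrix layout, and the single combined `mvp` matrix. Per-vertex lighting is left out.

```python
def mat_vec_mul(m, v):
    """Multiply a 4x4 matrix (row-major nested lists) by a 4-component column vector."""
    return tuple(sum(m[row][col] * v[col] for col in range(4)) for row in range(4))


def vertex_shader(position, normal, mvp):
    """Hypothetical per-vertex function: called once for every input vertex.

    It sees only this one vertex, so it cannot create or destroy vertices
    or look at neighboring vertices.
    """
    x, y, z = position
    clip_pos = mat_vec_mul(mvp, (x, y, z, 1.0))  # w = 1 because this is a point
    # Output the data later stages need: clip-space position plus the normal.
    return {"clip_pos": clip_pos, "normal": normal}


# Usage: a matrix that only translates by (2, 0, -5).
translate = [[1, 0, 0, 2],
             [0, 1, 0, 0],
             [0, 0, 1, -5],
             [0, 0, 0, 1]]
out = vertex_shader((1.0, 1.0, 0.0), (0.0, 0.0, 1.0), translate)
print(out["clip_pos"])  # (3.0, 1.0, -5.0, 1.0)
```

In a real pipeline the same function body would run on the GPU for every vertex in parallel. This works because no invocation depends on any other.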

2. Tessellation shader (optional; used to subdivide primitives)
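To make "subdividing primitives" concrete, here is a minimal sketch of uniform midpoint subdivision. Each pass splits every triangle into four by connecting its edge midpoints. This only illustrates the idea. Real hardware tessellation is driven by programmable control/evaluation (hull/domain) stages and tessellation factors, and this sketch models none of that. The function names are hypothetical.

```python
def midpoint(a, b):
    """Midpoint of two points of any dimension."""
    return tuple((ai + bi) / 2 for ai, bi in zip(a, b))


def subdivide(tri):
    """Split one triangle into four smaller ones, keeping the original winding."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(tri, levels):
    """Apply `levels` rounds of subdivision; the result has 4**levels triangles."""
    tris = [tri]
    for _ in range(levels):
        tris = [small for t in tris for small in subdivide(t)]
    return tris


print(len(tessellate(((0.0, 0.0), (1.0, 0.0), (0.0, 1.0)), 2)))  # 16
```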

3. Geometry shader (optional; operates on whole primitives)
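Unlike the vertex shader, a geometry shader receives a whole primitive at once and may output zero or more primitives. The sketch below is a hypothetical CPU-side stand-in. It takes one triangle and computes its face normal, which a vertex shader cannot do because it never sees the other vertices. It then discards degenerate triangles and emits the triangle pushed out along that normal, similar to an "explode" effect. The function names and the `offset` parameter are assumptions for illustration.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def geometry_shader(tri, offset):
    """Takes one triangle (three 3D points) and returns a list of output triangles."""
    a, b, c = tri
    n = cross(sub(b, a), sub(c, a))           # face normal: needs all three vertices
    length = sum(x * x for x in n) ** 0.5
    if length == 0.0:
        return []                             # degenerate triangle: emit nothing
    n = tuple(x / length for x in n)
    # Move every vertex of the triangle along the unit face normal.
    moved = tuple(tuple(p + offset * nx for p, nx in zip(v, n)) for v in tri)
    return [moved]


print(geometry_shader(((0, 0, 0), (1, 0, 0), (0, 1, 0)), 2.0))
# [((0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0))]
```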

4. Clipping

Scenes are usually very large, while the camera's view volume is comparatively small. Some objects lie completely inside the camera's view volume, some only partially, and some completely outside it. Objects completely outside are discarded and objects completely inside are passed on unchanged. Objects that are only partially visible must be cut at the boundary: wherever an edge crosses the boundary of the view volume, a new vertex is generated at the intersection point to replace the vertex that will not be displayed. This step is called clipping, and the clipped data is passed on to the next stage of the pipeline.
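The "new vertex at the intersection" idea can be sketched with one pass of Sutherland-Hodgman polygon clipping, here against a single boundary x <= x_max in 2D. This is a simplified stand-in chosen for illustration. Real GPUs clip in homogeneous clip space against every plane of the view volume, and a full 2D clipper would repeat this pass once per boundary. The function name is hypothetical.

```python
def clip_polygon_x_max(polygon, x_max):
    """One Sutherland-Hodgman pass: keep the part of `polygon` with x <= x_max."""
    out = []
    for i in range(len(polygon)):
        cur = polygon[i]
        prev = polygon[i - 1]          # wraps around to the last vertex when i == 0
        cur_in = cur[0] <= x_max
        prev_in = prev[0] <= x_max
        if cur_in != prev_in:
            # The edge prev -> cur crosses the boundary: create a vertex there.
            t = (x_max - prev[0]) / (cur[0] - prev[0])
            out.append((x_max, prev[1] + t * (cur[1] - prev[1])))
        if cur_in:
            out.append(cur)            # vertices inside the boundary are kept
    return out


print(clip_polygon_x_max([(0, 0), (2, 0), (0, 2)], 1))
# [(0, 0), (1, 0.0), (1, 1.0), (0, 2)]
```

The three cases from the text fall out directly. A polygon fully inside comes back unchanged, and one fully outside comes back empty. A polygon that straddles the boundary gains new vertices at the crossing points.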

5. Screen mapping

The job of screen mapping is to convert the x and y coordinates of each primitive into the screen coordinate system. The screen coordinate system is a two-dimensional coordinate system, and its range is determined by the resolution of the screen (or window) being rendered to.
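Below is a minimal sketch of the mapping. It assumes the coordinates arrive as normalized device coordinates in [-1, 1] (after clipping and the perspective divide) and that the screen is `width` x `height` pixels. OpenGL places the window origin at the bottom-left, while Direct3D uses the top-left, which flips y. The `origin_top_left` flag is a hypothetical parameter covering both conventions. The z value is usually carried along as depth, but it is omitted here.

```python
def screen_map(x_ndc, y_ndc, width, height, origin_top_left=False):
    """Map NDC x, y in [-1, 1] to pixel coordinates on a width x height screen."""
    x_screen = (x_ndc + 1.0) * 0.5 * width
    if origin_top_left:
        y_screen = (1.0 - y_ndc) * 0.5 * height   # y grows downward
    else:
        y_screen = (y_ndc + 1.0) * 0.5 * height   # y grows upward
    return x_screen, y_screen


print(screen_map(0.0, 0.0, 1920, 1080))           # (960.0, 540.0)
print(screen_map(-1.0, 1.0, 1920, 1080, True))    # (0.0, 0.0)
```

The same NDC point lands on different pixels at different resolutions, which is why screen mapping depends on the resolution.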

6. Triangle Setup

This stage marks the start of the rasterization stage. Its input, produced by the previous stage, is each vertex's position in the screen coordinate system plus related per-vertex information (for example: depth, normal direction, and view direction).

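The rasterization stage as a whole has two main tasks: determining which pixels each primitive covers, and computing the colors of those pixels. Triangle setup prepares for the first task. From the three vertices of each triangle, it computes the information needed to rasterize that triangle, such as a representation of the triangle's edges.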
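One common way to represent a triangle's edges for rasterization is with edge functions of the form E(x, y) = A*x + B*y + C, one per edge. The sketch below computes those coefficients during setup, along with twice the signed area and a bounding box. This is an illustrative choice rather than what every GPU does, and `triangle_setup` is a hypothetical name. With counter-clockwise vertices in a y-up frame, a point is inside the triangle when all three edge functions are non-negative.

```python
def edge_coefficients(v0, v1):
    """Coefficients (A, B, C) of E(x, y) = A*x + B*y + C for the edge v0 -> v1.

    E is positive for points to the left of the edge (y-up frame).
    """
    (x0, y0), (x1, y1) = v0, v1
    return (y0 - y1, x1 - x0, x0 * y1 - x1 * y0)


def triangle_setup(v0, v1, v2):
    """Precompute per-triangle data that triangle traversal needs."""
    edges = [edge_coefficients(v0, v1),
             edge_coefficients(v1, v2),
             edge_coefficients(v2, v0)]
    a, b, c = edges[0]
    area2 = a * v2[0] + b * v2[1] + c            # twice the signed area; 0 means degenerate
    xs = (v0[0], v1[0], v2[0])
    ys = (v0[1], v1[1], v2[1])
    bbox = (min(xs), min(ys), max(xs), max(ys))  # only pixels in here need testing
    return {"edges": edges, "area2": area2, "bbox": bbox}


setup = triangle_setup((0, 0), (4, 0), (0, 4))
print(setup["edges"])  # [(0, 4, 0), (-4, -4, 16), (4, 0, 0)]
```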

7. Triangle traversal

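This stage checks, for each pixel, whether it is covered by a triangle of the mesh and, if so, generates a fragment for it. The fragment's attributes, such as depth, are interpolated from the triangle's three vertices.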
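Here is a minimal traversal loop. It assumes screen-space vertices given as (x, y, depth) with positive signed area (counter-clockwise in a y-up frame). The loop visits the pixels in the triangle's bounding box and tests each pixel center with edge functions. For every covered pixel it emits a fragment whose depth is interpolated from barycentric weights. Real rasterizers also apply a tie-breaking fill rule (such as the top-left rule) so that pixels on a shared edge are not generated twice. This sketch skips that rule. `traverse_triangle` is a hypothetical name.

```python
import math


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); positive if p is left of a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def traverse_triangle(v0, v1, v2, width, height):
    """Return fragments (px, py, depth) for pixels whose centers the triangle covers."""
    area = edge(v0, v1, v2)
    if area <= 0:
        return []  # degenerate or clockwise triangle: not handled in this sketch
    xs, ys = (v0[0], v1[0], v2[0]), (v0[1], v1[1], v2[1])
    x_lo, x_hi = max(0, math.floor(min(xs))), min(width - 1, math.ceil(max(xs)))
    y_lo, y_hi = max(0, math.floor(min(ys))), min(height - 1, math.ceil(max(ys)))
    fragments = []
    for py in range(y_lo, y_hi + 1):
        for px in range(x_lo, x_hi + 1):
            p = (px + 0.5, py + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                # Barycentric weights (w / area) interpolate per-vertex attributes.
                depth = (w0 * v0[2] + w1 * v1[2] + w2 * v2[2]) / area
                fragments.append((px, py, depth))
    return fragments


frags = traverse_triangle((0, 0, 0.0), (4, 0, 1.0), (0, 4, 0.0), 4, 4)
print(len(frags))  # 10
print(frags[0])    # (0, 0, 0.125)
```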

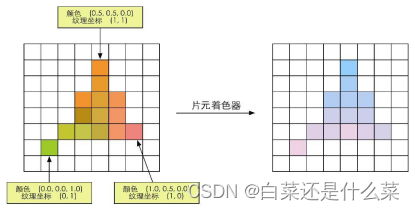

8. Fragment shader

This is a programmable stage. Its input is the fragments generated by triangle traversal, and its output is one or more color values per fragment, as illustrated in the figure.
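As one example of what a fragment shader might compute, the sketch below outputs a single RGB color per fragment, using Lambertian (diffuse) lighting from the fragment's interpolated normal. The lighting model, the function names, and the inputs `light_dir` and `albedo` are all assumptions for illustration. A real fragment shader can output several colors (for multiple render targets) and often samples textures.

```python
def normalize(v):
    """Scale a non-zero vector to unit length."""
    length = sum(c * c for c in v) ** 0.5
    return tuple(c / length for c in v)


def fragment_shader(normal, light_dir, albedo):
    """Hypothetical per-fragment function returning one RGB color."""
    n = normalize(normal)      # interpolated normals are usually no longer unit length
    l = normalize(light_dir)   # direction from the surface toward the light
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))  # no light from behind
    return tuple(channel * n_dot_l for channel in albedo)


print(fragment_shader((0.0, 0.0, 2.0), (0.0, 0.0, 1.0), (1.0, 0.5, 0.25)))   # (1.0, 0.5, 0.25)
print(fragment_shader((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (1.0, 0.5, 0.25)))  # (0.0, 0.0, 0.0)
```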

9. Per-fragment operations

This stage is also called the output-merger stage (the name used in Direct3D).

Its main tasks are:

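- Determine the visibility of each fragment by running tests on it, such as the stencil test and the depth test.

- If a fragment passes all the tests, merge its data into the buffers, for example by writing or blending its color into the color buffer.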
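The sketch below runs the depth test and a simple blend for one fragment against a tiny framebuffer. It assumes a "less than" depth comparison, a depth buffer cleared to infinity, and standard alpha blending (src * alpha + dst * (1 - alpha)). The stencil test is left out, and the `Framebuffer` class and its `merge` method are hypothetical. Real APIs expose these tests and blend modes as configurable fixed-function state rather than as user code.

```python
class Framebuffer:
    """Hypothetical color + depth buffer for illustrating per-fragment operations."""

    def __init__(self, width, height, clear_color=(0.0, 0.0, 0.0)):
        self.color = [[clear_color] * width for _ in range(height)]
        self.depth = [[float("inf")] * width for _ in range(height)]

    def merge(self, x, y, depth, rgb, alpha=1.0):
        """Depth-test one fragment; if it passes, blend it in. Returns True if written."""
        if not depth < self.depth[y][x]:
            return False  # depth test failed: a closer fragment is already stored
        self.depth[y][x] = depth  # this sketch always writes depth when the test passes
        dst = self.color[y][x]
        self.color[y][x] = tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(rgb, dst))
        return True


fb = Framebuffer(2, 1)
fb.merge(0, 0, 0.5, (1.0, 0.0, 0.0))              # opaque red, passes
fb.merge(0, 0, 0.7, (0.0, 1.0, 0.0))              # farther green, rejected
fb.merge(0, 0, 0.2, (0.0, 0.0, 1.0), alpha=0.5)   # closer half-transparent blue
print(fb.color[0][0])  # (0.5, 0.0, 0.5)
```

Writing depth for a half-transparent fragment is a simplification. Engines commonly disable depth writes when drawing transparent objects so that geometry behind them can still be blended in.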

3. Rendering stages

1. Model data

2. Geometry stage (vertex shader, tessellation shader, geometry shader, clipping, screen mapping)

3. Rasterization stage (triangle setup, triangle traversal, fragment shader, per-fragment operations)