Contents

- Basic structure of graph neural network

- Graph Spectrum and Graph Fourier Transform

- Comparison of GNN based on spectral domain and GNN based on spatial domain

- Task Requirements and Model Requirements for Graph Neural Networks

- A Practical Framework for Graph Neural Networks

- GCN

- Several interview questions for graph neural networks

Basic structure of graph neural network

A graph neural network (GNN) is a class of deep learning models for graph-structured data. It learns representations of the nodes and edges of a graph (and of the graph as a whole), and is suited to a range of graph tasks such as node classification, link prediction, and community detection. The basic structure of a GNN usually consists of the following two parts:

- Node Embedding: map each node of the graph to a low-dimensional vector, usually by means of linear transformations, nonlinear activation functions, and normalization. The purpose of node embedding is to capture each node's features and local structural information.

- Graph Convolution: update node features using the node embeddings together with the graph structure, which in turn yields representations of the entire graph. A graph convolution can generally be viewed as an aggregation over each node's local neighborhood that takes both node features and local topology into account. Its main purpose is to learn representations of the graph structure by iteratively updating the node embeddings.
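A minimal NumPy sketch of the two parts, assuming a hypothetical 3-node graph and random (untrained) weights: the embedding step is a linear map, a ReLU, and row normalization, and the graph convolution step is a simple mean over each node's closed neighborhood. The helper names `embed_nodes` and `aggregate_neighbors` are illustrative only; real models learn their weights and often use other aggregators.

```python
import numpy as np


def embed_nodes(X, W_embed):
    """Node embedding: linear transformation, ReLU, then L2-normalize each row."""
    H = np.maximum(X @ W_embed, 0.0)                 # linear map + nonlinearity
    norms = np.linalg.norm(H, axis=1, keepdims=True)
    return H / np.maximum(norms, 1e-12)              # normalization (guards all-zero rows)


def aggregate_neighbors(A, H):
    """Graph convolution: each node averages its own and its neighbors' embeddings."""
    A_self = A + np.eye(A.shape[0])                  # include the node itself
    deg = A_self.sum(axis=1, keepdims=True)
    return (A_self @ H) / deg                        # mean over the closed neighborhood


# Hypothetical toy graph: a 3-node path 0 - 1 - 2 with 2 input features per node
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
X = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
rng = np.random.default_rng(0)
W_embed = rng.normal(size=(2, 4))                    # random, untrained weights

H = embed_nodes(X, W_embed)          # node embeddings, shape (3, 4)
H_next = aggregate_neighbors(A, H)   # embeddings after one graph convolution step
```

Stacking several such steps lets information travel more than one hop, which is the "iterative updating" described above.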

In addition to these two parts, graph neural networks may also include other modules, such as attention mechanisms, pooling layers, and classifiers, to better handle the characteristics and complexity of graph data.

Note that different types of GNNs may design node embedding and graph convolution differently. For example, GCN derives its graph convolution from the graph Laplacian and the graph Fourier transform (the spectral view), while GAT uses an attention mechanism to fuse information from different nodes.

Graph Spectrum and Graph Fourier Transform

The spectrum is the eigenvalues of the Laplacian matrix of the graph. Specifically, given a graph G=(V,E), where V and E represent the node set and edge set of graph G respectively, assuming that n represents the number of nodes, the adjacency matrix A of graph G is an nxn matrix, if the node If there is an edge connecting i and j, then A ij =1, otherwise A ij =0. The degree matrix of graph G is D=diag(d1, d2,...,dn), where di represents the degree of the i-th node in the graph. Define the Laplacian matrix L = D - A of the graph, and perform eigenvalue decomposition on it:

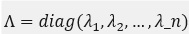

among them,  it is arranged in order of eigenvalues from small to large, and

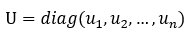

it is arranged in order of eigenvalues from small to large, and  is an orthogonal matrix composed of corresponding eigenvectors. The set of eigenvalues above

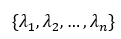

is an orthogonal matrix composed of corresponding eigenvectors. The set of eigenvalues above  is the spectrum of the graph G.

is the spectrum of the graph G.
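As a quick check of these definitions, the sketch below builds L = D - A for a hypothetical 3-node path graph 0 - 1 - 2 and computes its spectrum with NumPy's `numpy.linalg.eigh`, which is meant for symmetric matrices and returns eigenvalues in ascending order, matching the ordering above.

```python
import numpy as np

# Hypothetical path graph with 3 nodes: 0 - 1 - 2
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
D = np.diag(A.sum(axis=1))   # degree matrix
L = D - A                    # graph Laplacian

# L is symmetric, so eigh applies; eigenvalues come back in ascending order
eigvals, U = np.linalg.eigh(L)
print(np.round(eigvals, 6))  # spectrum of the 3-node path: approximately 0, 1, 3

# Check the decomposition L = U diag(lambda) U^T and the orthogonality of U
assert np.allclose(U @ np.diag(eigvals) @ U.T, L)
assert np.allclose(U.T @ U, np.eye(3))
```

The smallest eigenvalue of a Laplacian is always 0, with a constant eigenvector, because every row of L sums to zero.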

The graph Fourier transform is a technique from graph signal processing. It is analogous to the classical Fourier transform, but applies to signals defined on graphs.

The classical Fourier transform converts a continuous or discrete signal into the frequency domain, which reveals the signal's frequency content and is commonly used for filtering, denoising, and compressing signals. Similarly, the graph Fourier transform converts a graph signal (one value per node) into the graph frequency domain for graph structure analysis, filtering, and related operations.

The graph Fourier transform is computed quite differently from the classical one: it first builds the Laplacian matrix of the graph and then uses the Laplacian's eigenvectors and eigenvalues to define the transform. Specifically, for a graph G with n nodes, adjacency matrix A, and degree matrix D, the Laplacian matrix is L = D - A.

Let U be the matrix whose columns are the eigenvectors of L, and λ the vector of the corresponding eigenvalues. The graph Fourier transform is then

$$\hat{f} = U^{T} f$$

where f is the original graph signal and $\hat{f}$ is its frequency-domain representation. Since U is orthogonal, the inverse transform is $f = U \hat{f}$. The eigenvalues play the role of frequencies: eigenvectors with small eigenvalues vary slowly across edges, while those with large eigenvalues vary rapidly.
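The transform pair can be tried on a hypothetical 4-node path graph 0 - 1 - 2 - 3 with NumPy. The sketch also checks that $f^{T} L f$, which sums the squared differences of f across edges, equals $\sum_k \lambda_k \hat{f}_k^2$, which is why small eigenvalues are read as low frequencies. The low-pass step that keeps only the two lowest-frequency components is an illustrative choice, not a prescribed method.

```python
import numpy as np

# Hypothetical 4-node path graph 0 - 1 - 2 - 3
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A
lam, U = np.linalg.eigh(L)            # ascending eigenvalues, orthonormal eigenvectors

f = np.array([1.0, 2.0, 3.0, 4.0])    # a graph signal: one value per node

f_hat = U.T @ f                       # graph Fourier transform
f_rec = U @ f_hat                     # inverse transform recovers f

# Smoothness: sum over edges of (f_i - f_j)^2 equals sum_k lambda_k * f_hat_k^2
energy_vertex = f @ L @ f
energy_spectral = np.sum(lam * f_hat ** 2)

# Low-pass filter: zero out all but the two lowest-frequency components
f_hat_low = f_hat.copy()
f_hat_low[2:] = 0.0
f_smooth = U @ f_hat_low

print(np.allclose(f_rec, f), energy_vertex, energy_spectral)
```

The filtered signal `f_smooth` varies less across edges than `f`, which is exactly what "removing high graph frequencies" means.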

The graph Fourier transform is used in applications such as signal recovery, graph compression, and image analysis, and it applies to both regular graph structures (such as image pixel grids) and irregular ones.

Comparison of GNN based on spectral domain and GNN based on spatial domain

Spectral-domain GNNs and spatial-domain GNNs are currently the two mainstream families of GNN models. They have different characteristics and suit different scenarios. A brief comparison follows:

- Principle

Spectral-domain GNNs rely on the graph Fourier transform: graph signals (node features) are transformed into the frequency domain, filtered there, and transformed back, so feature propagation is essentially carried out in the frequency domain. Spatial-domain GNNs update each node's representation by directly aggregating the features of its neighboring nodes.

- Advantages and disadvantages

Spectral-domain GNNs have strong expressive power and stability, because the graph Fourier transform provides global structural information and can capture essential properties of the graph; they also tend to work well on graphs whose signals are smooth. However, they are computationally expensive: the eigendecomposition of the Laplacian costs O(n³) for a graph with n nodes in the general (dense) case.

Spatial-domain GNNs are computationally efficient and easy to implement, and they work well on graphs with pronounced local structure (such as social networks and semantic networks). However, because each layer only sees a local neighborhood, they have difficulty capturing global structural information, and handling the edge features of complex graphs can also be challenging.

- Applicable scenarios

Spectral-domain GNNs are suited to regular or smooth graphs, such as Molecular Graphs (molecular graph structures) and Image Graphs (graph structures for vision tasks). Spatial-domain GNNs are suited to irregular and complex graphs, such as Social Graphs (social networks) and Textual Graphs (graph structures for text tasks).

In general, whether to choose a GNN based on the spectral domain or a GNN based on the spatial domain needs to be selected and evaluated according to the characteristics of specific application scenarios and tasks.
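The two views coincide for polynomial filters. For example, the frequency response g(λ) = λ gives $U \Lambda U^{T} x = L x$, and each entry of $Lx$ can be computed from a node's immediate neighbors alone. The NumPy sketch below, on a hypothetical 4-node graph, computes the same filter both ways: the spectral route needs a full eigendecomposition, while the spatial route only touches edges.

```python
import numpy as np

# Hypothetical undirected graph given as an edge list
edges = [(0, 1), (1, 2), (2, 3), (1, 3)]
n = 4
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A
x = np.array([0.5, -1.0, 2.0, 0.0])     # one scalar feature per node


def g(t):
    """Filter frequency response; g(lambda) = lambda is a degree-1 polynomial."""
    return t


# Spectral view: transform, scale each frequency by g(lambda), transform back
lam, U = np.linalg.eigh(L)              # O(n^3) for a dense matrix
y_spectral = U @ (g(lam) * (U.T @ x))

# Spatial view: the same filter computed only from each node's neighbors
neighbors = {i: [] for i in range(n)}
for i, j in edges:
    neighbors[i].append(j)
    neighbors[j].append(i)
y_spatial = np.array([len(neighbors[i]) * x[i] - sum(x[j] for j in neighbors[i])
                      for i in range(n)])
```

A degree-K polynomial response likewise reaches only K hops, which is the idea that connects spectral methods such as ChebNet to spatial message passing.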

Task Requirements and Model Requirements for Graph Neural Networks

Task requirements

- Node Classification: given a graph and the labels of some of its nodes, predict the labels of the remaining nodes.

- Link Prediction: given a graph, predict whether an edge exists between two nodes.

- Community Detection: given a graph, partition its nodes into several communities.

- Graph Feature Extraction: extract high-level feature representations from raw graph data for subsequent classification and recognition.

Model requirements

- Permutation invariance: a GNN should be unbiased with respect to the ordering of the nodes in the graph; relabeling the nodes should permute node-level outputs in the same way and leave graph-level outputs unchanged.

- Locality: in each layer, a GNN should consider only the information of a node's neighbors rather than that of the whole graph; information from farther nodes arrives by stacking layers.

- Reproducibility: training the model multiple times on the same graph should give the same results.

- Scalability: a GNN should be able to handle large-scale graph data while avoiding both overfitting and underfitting.

- Robustness: a GNN should be able to handle missing or noisy data and maintain stable performance.
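The first requirement, that a GNN should not depend on how the nodes are ordered, can be checked numerically. The sketch below uses a hypothetical mean-aggregation layer with random weights, relabels the nodes with a random permutation matrix P (so A becomes $P A P^{T}$ and X becomes $P X$), and confirms that node-level outputs are permuted in the same way while a sum over all nodes is unchanged.

```python
import numpy as np


def mean_agg_layer(A, X, W):
    """Hypothetical layer: average over closed neighborhood, then linear map + ReLU."""
    A_hat = A + np.eye(A.shape[0])
    H = (A_hat @ X) / A_hat.sum(axis=1, keepdims=True)
    return np.maximum(H @ W, 0.0)


rng = np.random.default_rng(42)
n, d, k = 5, 3, 2
upper = np.triu(rng.integers(0, 2, size=(n, n)), 1)
A = (upper + upper.T).astype(float)      # random undirected graph, no self-loops
X = rng.normal(size=(n, d))
W = rng.normal(size=(d, k))              # untrained weights

perm = rng.permutation(n)
P = np.eye(n)[perm]                      # row i of P selects node perm[i]

out = mean_agg_layer(A, X, W)
out_perm = mean_agg_layer(P @ A @ P.T, P @ X, W)

# Node-level outputs are permuted the same way (equivariance) ...
equivariant = np.allclose(out_perm, P @ out)
# ... and a sum readout over all nodes is unchanged (invariance)
invariant = np.allclose(out_perm.sum(axis=0), out.sum(axis=0))
print(equivariant, invariant)  # True True
```

Any layer built only from neighborhood aggregations with shared weights passes this check; a layer that, say, concatenated node features in index order would not.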

To meet the above requirements, GNN models usually have the following characteristics:

- They build convolution operations on the adjacency matrix and apply convolution, pooling, and fully connected operations to the nodes.

- A node's representation is updated from its own features and the features of its neighbors, so that it captures the node's context within the whole graph.

- They are usually trained with gradient descent, combined with regularization, dropout, and similar techniques to avoid overfitting.

- For large-scale graph data, they usually use techniques such as sampling and aggregation to reduce computation and storage.

- They usually stack multiple layers, iteratively updating node representations to achieve better performance.
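Sampling is commonly done in the GraphSAGE style: for a mini-batch of target nodes, sample at most a fixed number of neighbors per layer, so that the computation graph stays bounded no matter how large node degrees get. A minimal pure-Python sketch, with a hypothetical adjacency-list graph and hypothetical helper names:

```python
import random


def sample_neighbors(adj_list, nodes, fanout, rng):
    """For each node, sample at most `fanout` neighbors without replacement."""
    return {v: rng.sample(adj_list[v], min(fanout, len(adj_list[v]))) for v in nodes}


def sample_computation_graph(adj_list, batch, fanouts, seed=0):
    """Sample a multi-hop neighborhood around `batch`, one fanout per layer."""
    rng = random.Random(seed)
    layers = [set(batch)]
    frontier = list(batch)
    for fanout in fanouts:
        sampled = sample_neighbors(adj_list, frontier, fanout, rng)
        nxt = {u for nbrs in sampled.values() for u in nbrs}
        layers.append(nxt)
        frontier = sorted(nxt)
    return layers


# Hypothetical toy graph: nodes 0 and 10 are both connected to nodes 1..9
adj_list = {0: list(range(1, 10)), 10: list(range(1, 10))}
for v in range(1, 10):
    adj_list[v] = [0, 10]

layers = sample_computation_graph(adj_list, batch=[0], fanouts=[3, 2])
print([len(layer) for layer in layers])  # [1, 3, 2]
```

Node 0 has nine neighbors, but only three are kept at the first hop; the number of nodes touched per target grows with the product of the fanouts rather than with the true degrees.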

A Practical Framework for Graph Neural Networks

A variety of practical frameworks are currently available for graph neural networks. The following are some of the more popular ones:

- PyTorch Geometric (https://pytorch-geometric.readthedocs.io/en/latest/): a library built on PyTorch for processing graph-structured data. It supports a large number of classic graph algorithms and graph neural network (GNN) models, and is suitable for large-scale, high-dimensional graph-structured data.

- Deep Graph Library (https://www.dgl.ai/): a deep learning library for graph-structured data that supports a variety of models, such as graph convolutional networks and graph attention networks. It is suitable for large-scale and distributed graph-structured data.

- GraphConv (https://github.com/mdeff/cnn_graph): a GNN framework implemented in TensorFlow, particularly suited to graph-structured representations of spatial two-dimensional data. At its core, a graph convolutional network performs convolution over the nodes of the graph to extract features.

- Deep Graph Nets (https://github.com/dsgiitr/dgmc): a dynamic graph neural network (DGN) framework implemented in TensorFlow, which can edit and update graphs dynamically to adapt to more complex scenarios.

The frameworks above provide complete implementations of graph neural network models and algorithms; choose among them according to your specific needs.

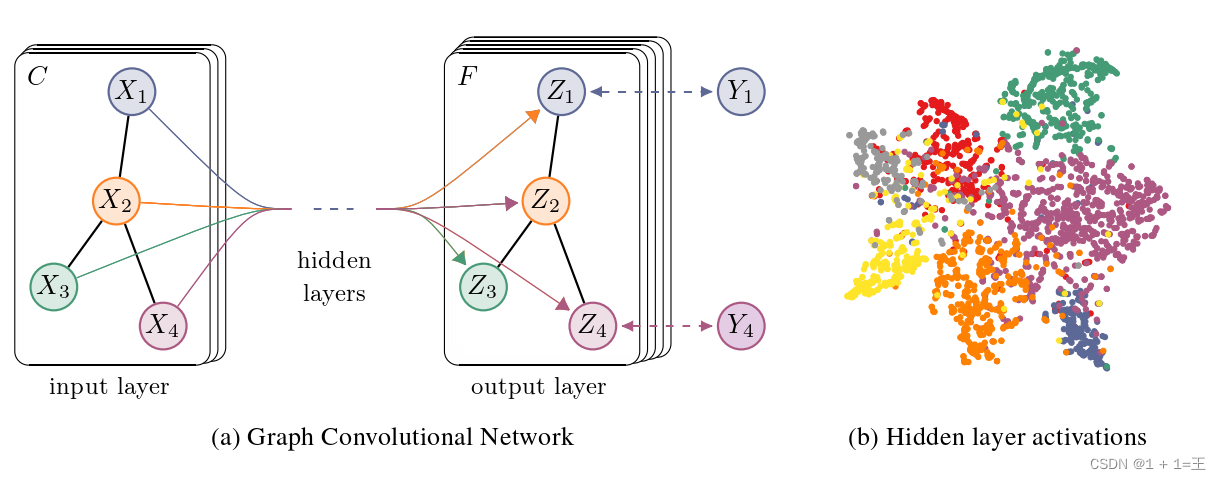

GCN

Consider an input graph with N nodes. The features of the nodes form an N×D feature matrix X, and the connections between nodes form an N×N adjacency matrix A; X and A are the inputs to the model.

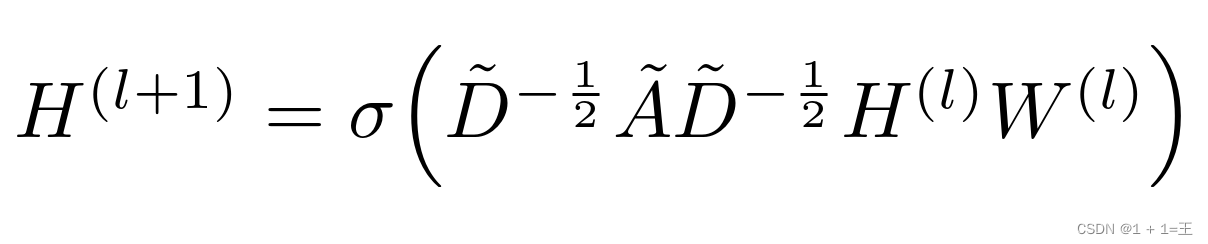

Each GCN layer propagates features according to the following rule:

$$H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)}\right)$$

where:

- $\tilde{A} = A + I$, where A is the adjacency matrix of the input graph and I is the identity matrix (every node gets a self-loop).

- $\tilde{D}$ is the degree matrix of $\tilde{A}$: $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$.

- $H^{(l)}$ is the feature matrix of layer l; for the input layer, $H^{(0)} = X$.

- σ is a nonlinear activation function.

- $W^{(l)}$ is the trainable weight matrix of layer l.
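To make the propagation rule concrete, here is a minimal NumPy sketch of $H^{(l+1)} = \sigma(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)})$, the Kipf and Welling formulation that the definitions above describe, run for two layers on a hypothetical 3-node path graph with random, untrained weights. It uses dense matrices for clarity; libraries such as PyG use sparse operations instead.

```python
import numpy as np


def gcn_layer(A, H, W, activation=lambda z: np.maximum(z, 0.0)):
    """One GCN step: activation(D~^{-1/2} A~ D~^{-1/2} H W); ReLU by default."""
    A_tilde = A + np.eye(A.shape[0])            # add self-loops
    d = A_tilde.sum(axis=1)                     # D~_ii = sum_j A~_ij
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    A_norm = D_inv_sqrt @ A_tilde @ D_inv_sqrt  # symmetric normalization
    return activation(A_norm @ H @ W)


A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
X = np.eye(3)                                   # one-hot features, H^(0) = X
rng = np.random.default_rng(0)
W0 = rng.normal(size=(3, 4))                    # untrained weights, layer 0
W1 = rng.normal(size=(4, 2))                    # untrained weights, layer 1

H1 = gcn_layer(A, X, W0)                          # hidden layer with ReLU
Z = gcn_layer(A, H1, W1, activation=lambda z: z)  # output layer, no activation
print(Z.shape)  # (3, 2)
```

The same A is used in both calls, which is the "shared adjacency matrix" point made below; only H and W change from layer to layer.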

After propagation through several GCN layers, the feature matrix of the input graph is transformed from X to Z, while the adjacency matrix A is shared by all layers, as shown in the figure.

You can use PyG (torch_geometric) to build a GCN network:

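```python
import torch
import torch.nn.functional as F
from torch_geometric.nn import GCNConv


class GCN(torch.nn.Module):
    """Two-layer GCN: input features -> hidden representation -> output scores."""

    def __init__(self, in_channels, hidden_channels, out_channels):
        super().__init__()
        # Each GCNConv performs one propagation step (normalized aggregation + linear transform)
        self.conv1 = GCNConv(in_channels, hidden_channels)
        self.conv2 = GCNConv(hidden_channels, out_channels)

    def forward(self, x, edge_index):
        # x: [num_nodes, in_channels] node features
        # edge_index: [2, num_edges] edges in COO format
        x = self.conv1(x, edge_index)
        x = x.relu()
        # Dropout is only active in training mode (self.training)
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.conv2(x, edge_index)
        return x  # per-node output scores (logits)
```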

Several interview questions for graph neural networks

The following are brief answers to some common interview questions on graph neural networks:

- Please briefly introduce what a graph neural network is and what its application scenarios are.

A graph neural network is a deep learning model for graph-structured data. It is mainly used for node classification, graph classification, link prediction, recommendation systems, and similar tasks, and has recently also been applied to research in chemistry, physics, social networks, and other fields.

- What is the difference between a graph convolutional neural network and a normal convolutional neural network?

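Ordinary convolutional neural networks (CNNs) mainly process data in Euclidean space, such as images on a regular pixel grid, where every position has the same number of neighbors in a fixed order. Graph convolutional networks (GCNs) process data on irregular graphs, where the number and order of neighbors vary; their core is a convolution defined through the graph's adjacency matrix (or Laplacian), which aggregates features over each node's neighbors.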

- What are the adjacency matrix and the feature matrix?

The adjacency matrix describes the connections between the nodes of the graph. It is an n×n matrix, where n is the number of nodes: if nodes i and j are connected, $A_{ij} = 1$; otherwise $A_{ij} = 0$. The feature matrix describes the features of the nodes. It is an n×d matrix, where d is the dimension of the node features, and each row is the feature vector of one node.
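Both matrices can be built from an edge list in a few lines of NumPy; the graph and the feature values below are hypothetical. The last line also derives the 2 x E `edge_index` array (COO format, one column per directed edge) that PyG layers such as `GCNConv` take as input.

```python
import numpy as np

# Hypothetical undirected graph with 4 nodes and 3-dimensional node features
n, d = 4, 3
edges = [(0, 1), (0, 2), (2, 3)]

A = np.zeros((n, n), dtype=int)        # n x n adjacency matrix
for i, j in edges:
    A[i, j] = 1
    A[j, i] = 1                        # undirected graph: keep A symmetric

X = np.arange(n * d, dtype=float).reshape(n, d)   # n x d feature matrix, row i = node i

edge_index = np.vstack(np.nonzero(A))  # 2 x 6: each undirected edge appears in both directions
print(A)
print(X.shape)  # (4, 3)
```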

- Please briefly introduce the message-passing mechanism in graph convolutional networks.

In a graph convolutional network, each node receives information (messages) from its neighbors, aggregates it, and then updates its own feature representation. Specifically, node i receives the feature vector of every neighbor j (every j with $A_{ij} = 1$), aggregates these vectors, for example by a sum weighted by the normalized adjacency matrix, and combines the result with its own features to obtain its updated representation.

- What is a Graph Attention Network (GAT)? How does it differ from the traditional GCN?

A Graph Attention Network (GAT) is a graph neural network model based on a self-attention mechanism: it updates the representation of each node by adaptively weighting information from its neighbors. The biggest difference from the traditional GCN is that the importance of neighboring nodes is no longer fixed (in GCN it is determined by the graph structure through the normalized adjacency matrix) but is learned from the node features.
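A minimal single-head sketch of GAT-style attention in NumPy, assuming random (untrained) parameters `W` and `a` and a hypothetical 3-node graph: scores $e_{ij} = \mathrm{LeakyReLU}(a^{T}[W h_i \,\|\, W h_j])$ are computed for each node and its neighbors (including itself) and normalized with a softmax over the neighborhood. Multi-head attention and the output nonlinearity of the full model are omitted.

```python
import numpy as np


def gat_attention(A, H, W, a, negative_slope=0.2):
    """Single-head GAT-style attention: returns alpha (n x n) and aggregated features."""
    Wh = H @ W                                   # shared linear transform, n x k
    k = Wh.shape[1]
    # e_ij = LeakyReLU(a^T [Wh_i || Wh_j]) = LeakyReLU(a[:k].Wh_i + a[k:].Wh_j)
    scores = (Wh @ a[:k])[:, None] + (Wh @ a[k:])[None, :]
    e = np.where(scores > 0, scores, negative_slope * scores)
    mask = (A + np.eye(A.shape[0])) > 0          # attend to neighbors and to self
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)         # numerical stability
    alpha = np.exp(e)                            # exp(-inf) = 0 for non-neighbors
    alpha /= alpha.sum(axis=1, keepdims=True)    # softmax over each neighborhood
    return alpha, alpha @ Wh


A = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)
rng = np.random.default_rng(0)
H = rng.normal(size=(3, 4))
W = rng.normal(size=(4, 2))        # untrained parameters
a = rng.normal(size=4)             # attention vector of length 2k

alpha, H_new = gat_attention(A, H, W, a)
print(np.round(alpha, 3))
```

In GCN, row i of the weighting matrix would be fixed by node degrees; here it depends on the features through `W` and `a`, which is the "adaptively learned" importance described above.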

- Please briefly describe the calculation process of GraphSAGE.

GraphSAGE is an inductive graph neural network method that computes a node's representation from its sampled neighborhood. At each layer it samples a fixed number of neighbors of the current node, aggregates their vectors with an aggregator function (such as a mean, pooling, or LSTM aggregator), concatenates the aggregated vector with the node's own vector, and passes the result through a neural network layer (a learned weight matrix followed by a nonlinearity). The output is used as the new feature representation of the current node.
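One such layer can be sketched in NumPy with a mean aggregator, assuming a hypothetical toy graph and an untrained weight matrix `W`. For brevity it uses each node's full neighbor list instead of a sampled subset, and it normalizes each output vector to unit length as in the GraphSAGE paper.

```python
import numpy as np


def sage_mean_layer(adj_list, H, W):
    """GraphSAGE-style layer with a mean aggregator (full neighbor lists, no sampling)."""
    out = []
    for v in range(H.shape[0]):
        nbrs = adj_list[v]
        h_nbr = H[nbrs].mean(axis=0) if nbrs else np.zeros(H.shape[1])
        h_cat = np.concatenate([H[v], h_nbr])   # concatenate self and neighborhood
        out.append(np.maximum(W @ h_cat, 0.0))  # linear map + ReLU
    out = np.array(out)
    # L2-normalize each embedding (all-zero rows stay zero)
    return out / np.maximum(np.linalg.norm(out, axis=1, keepdims=True), 1e-12)


adj_list = {0: [1, 2], 1: [0], 2: [0], 3: []}   # hypothetical graph; node 3 is isolated
rng = np.random.default_rng(0)
H = rng.normal(size=(4, 3))
W = rng.normal(size=(5, 6))   # maps [h_v || mean of neighbors] (2 * 3 dims) to 5 dims
H_new = sage_mean_layer(adj_list, H, W)
```

Because `W` is shared by all nodes and does not depend on a fixed node set, the same layer can be applied to nodes that were not seen during training.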

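- What is a Graph Auto-Encoder (GAE)? What are its application scenarios?

A Graph Auto-Encoder (GAE) is a method for unsupervised graph representation learning. An encoder (typically a GCN) maps each node to a low-dimensional embedding, and a decoder (typically an inner product between embeddings) reconstructs the adjacency matrix from those embeddings; the model is trained to reconstruct the graph, so no node labels are needed. Application scenarios include link prediction and node clustering in fields such as social networks and protein structure prediction.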
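The encoder and decoder can be sketched in NumPy as follows, assuming a hypothetical 4-node graph, one-hot input features, and a one-layer linear GCN encoder for brevity (multi-layer encoders are common). The helper names are illustrative, and the gradient-descent training loop that would minimize the loss is omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gcn_encoder(A, X, W):
    """One-layer linear GCN encoder: Z = D~^{-1/2} (A + I) D~^{-1/2} X W."""
    A_t = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_t.sum(axis=1))
    return (d_inv_sqrt[:, None] * A_t * d_inv_sqrt[None, :]) @ X @ W


def inner_product_decoder(Z):
    """Reconstructed edge probabilities: A_hat = sigmoid(Z Z^T)."""
    return sigmoid(Z @ Z.T)


def reconstruction_loss(A, A_hat, eps=1e-9):
    """Binary cross-entropy between the true adjacency matrix and its reconstruction."""
    return -np.mean(A * np.log(A_hat + eps) + (1 - A) * np.log(1 - A_hat + eps))


A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.eye(4)                          # one-hot node features
rng = np.random.default_rng(0)
W = rng.normal(size=(4, 2))            # untrained encoder weights, 2-D embeddings

Z = gcn_encoder(A, X, W)               # node embeddings
A_hat = inner_product_decoder(Z)       # predicted edge probabilities
loss = reconstruction_loss(A, A_hat)
```

For link prediction, a trained model scores an unseen node pair (i, j) by `A_hat[i, j]`.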

- Please briefly describe the graph pooling operation in graph neural networks.

Graph pooling is mainly used to reduce the number of nodes in a graph. Specifically, it merges groups of nodes (for example, the nodes of a subgraph or cluster) into new nodes, producing a smaller graph on which the next round of feature extraction is performed. Pooling all nodes into a single vector (global pooling, or readout) gives a representation of the whole graph.
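Given an assignment of nodes to clusters, the coarsened graph can be computed with two matrix products, $X' = S^{T} X$ and $A' = S^{T} A S$, where S is an n x c assignment matrix. The NumPy sketch below uses a hypothetical fixed hard assignment and averages member features; learned pooling methods such as DiffPool instead produce a soft S with a GNN.

```python
import numpy as np


def pool_by_assignment(A, X, S):
    """Coarsen a graph given an n x c assignment matrix S."""
    sizes = S.sum(axis=0)                     # number of nodes in each cluster
    X_pooled = (S.T @ X) / sizes[:, None]     # mean of member features
    A_pooled = S.T @ A @ S                    # edge counts within and between clusters
    return A_pooled, X_pooled


# Hypothetical 4-node path 0 - 1 - 2 - 3, merged into clusters {0, 1} and {2, 3}
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.array([[1.0], [3.0], [5.0], [7.0]])
S = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)

A_p, X_p = pool_by_assignment(A, X, S)   # diagonal of A_p counts each internal edge twice
graph_vector = X.mean(axis=0)            # global mean pooling (readout) of the whole graph
```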

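- What is ChebNet? How does it differ from the traditional GCN?

ChebNet is a spectral graph convolutional network based on Chebyshev polynomials. Instead of learning a free filter value for every eigenvalue, it approximates the spectral filter with a truncated Chebyshev polynomial expansion of order K in the rescaled graph Laplacian. As a result, each filter is localized to the K-hop neighborhood of a node (larger K gives a wider receptive field), and the number of filter parameters does not depend on the size of the graph. The main difference from the traditional spectral GCN is that the Chebyshev recurrence turns the convolution into repeated (sparse) matrix multiplications by the Laplacian, which avoids the eigendecomposition and reduces computational complexity. The GCN described in the GCN section above is, in turn, a first-order simplification of ChebNet.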
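A minimal NumPy sketch of a Chebyshev filter, assuming the normalized Laplacian $L = I - D^{-1/2} A D^{-1/2}$ (whose eigenvalues lie in [0, 2], so λ_max = 2 is used for rescaling) and hypothetical filter coefficients `theta`. It computes $\sum_k \theta_k T_k(\tilde{L}) x$ with the recurrence $T_k = 2 \tilde{L} T_{k-1} - T_{k-2}$, using only matrix-vector products.

```python
import numpy as np


def normalized_laplacian(A):
    """L = I - D^{-1/2} A D^{-1/2}; assumes no isolated nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return np.eye(A.shape[0]) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def cheb_filter(L, x, theta, lam_max=2.0):
    """Compute sum_k theta[k] * T_k(L_s) @ x, with L_s = 2 L / lam_max - I."""
    L_s = (2.0 / lam_max) * L - np.eye(L.shape[0])   # map spectrum [0, lam_max] to [-1, 1]
    T_prev, T_curr = x, L_s @ x                      # T_0(L_s) x = x, T_1(L_s) x = L_s x
    y = theta[0] * T_prev
    if len(theta) > 1:
        y = y + theta[1] * T_curr
    for k in range(2, len(theta)):
        # T_k = 2 L_s T_{k-1} - T_{k-2}: matrix-vector products only, no eigendecomposition
        T_prev, T_curr = T_curr, 2.0 * (L_s @ T_curr) - T_prev
        y = y + theta[k] * T_curr
    return y


# Hypothetical path graph 0 - 1 - 2 - 3 - 4 with a signal concentrated on node 0
n = 5
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0
L = normalized_laplacian(A)
x = np.zeros(n)
x[0] = 1.0
theta = np.array([0.5, -0.3, 0.2])   # K = 2, so the output is nonzero only within 2 hops
y = cheb_filter(L, x, theta)
```

Nodes 3 and 4 are more than two hops from node 0, so `y[3]` and `y[4]` are zero; this is the K-hop localization described above.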

- What is graph representation learning? Please give an example.

Graph representation learning transforms complex graph structures into low-dimensional vector representations. For example, for a user node in a social network graph, the features of its neighboring nodes can be aggregated and the aggregated result used as the node's new feature representation. As another example, a biomolecule can be modeled as a graph whose nodes are atoms and whose edges are chemical bonds; stacking basic graph convolution layers then transforms it, layer by layer, into a vector representation.