Graph and graph learning

Reference: Baidu PaddlePaddle course

1. The basic representation method of graphs

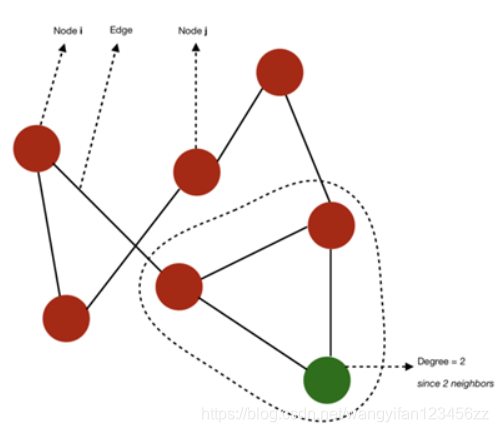

Graph G=(V, E) is composed of the following elements:

- A set of nodes (also called vertices) V = {1, …, n}

- A set of edges E⊆V×V

- Edge (i,j) ∈ E connects nodes i and j

- i and j are called neighbors

- The degree of a node is the number of its adjacent nodes (neighbors)

Schematic diagram of nodes, edges and degrees

- If every node of a graph has n-1 adjacent nodes, the graph is complete. In other words, every node is connected to every other node.

- A path from i to j is a sequence of edges leading from i to j. The length of the path is the number of edges traversed.

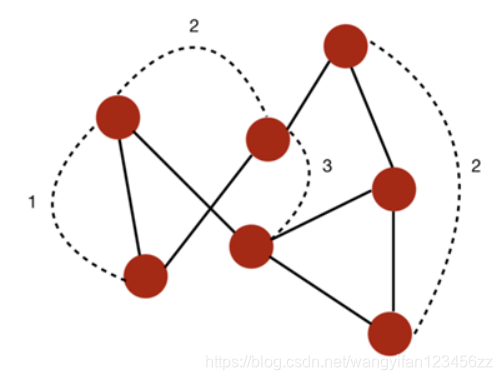

- The diameter of a graph refers to the length of the longest path among all the shortest paths connecting any two nodes.

For example, in the graph above we can compute the shortest path between every pair of nodes. The diameter of this graph is 3, because no shortest path between two nodes is longer than 3.

A geodesic path is a shortest path between two nodes.

A connected component is a maximal set of nodes in which every pair of nodes is joined by some path. If a graph has only one connected component, the graph is connected.
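The definitions above (geodesic path, diameter, connected component) can be computed with breadth-first search on an unweighted, undirected graph. The sketch below is illustrative only: the function names are our own, and the example reuses the small edge list from the storage section of this article.

```python
from collections import deque

def build_adjacency(edges):
    """Undirected adjacency list (dict of sets) built from (i, j) pairs."""
    adj = {}
    for i, j in edges:
        adj.setdefault(i, set()).add(j)
        adj.setdefault(j, set()).add(i)
    return adj

def bfs_distances(adj, source):
    """Length of a geodesic (shortest) path from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:          # first visit = shortest distance in BFS
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def connected_components(adj):
    """Group nodes into connected components by repeated BFS."""
    seen, components = set(), []
    for node in adj:
        if node not in seen:
            comp = set(bfs_distances(adj, node))
            seen |= comp
            components.append(comp)
    return components

def diameter(adj):
    """Longest shortest-path length; only defined here for a connected graph."""
    if len(connected_components(adj)) != 1:
        raise ValueError("diameter is undefined for a disconnected graph")
    return max(max(bfs_distances(adj, s).values()) for s in adj)

edges = [(1, 2), (1, 3), (1, 4), (2, 3), (3, 4)]
adj = build_adjacency(edges)
print(diameter(adj))              # 2
print(connected_components(adj))  # [{1, 2, 3, 4}]
```

Running BFS from every node costs O(n·(n + |E|)), which is fine for small examples; large graphs usually need approximate methods.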

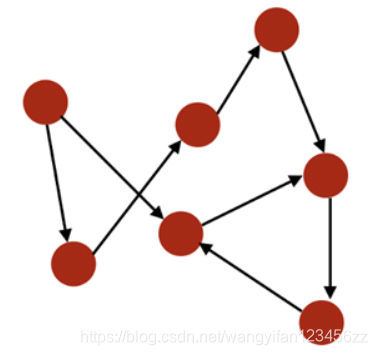

If the edges of a graph are ordered pairs, the graph is directed. The in-degree of node i is the number of edges pointing into i, and the out-degree of i is the number of edges leaving i.

If some node can return to itself by following the edges of the graph, the graph is cyclic. In contrast, if no node can return to itself, the graph is acyclic.

Graphs can be weighted, that is, weights are assigned to nodes or to edges (relationships).

A graph is sparse if it has relatively few edges, for example on the order of the number of nodes, far below the maximum possible number of edges. In contrast, if the number of edges approaches that maximum, the graph is dense.
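For a directed graph given as an edge list, in-degree and out-degree are simple counts, and cyclicity can be checked with a depth-first search that looks for an edge back to a node still on the current search path. This is a minimal sketch with illustrative names, assuming edges are (source, target) pairs.

```python
def degrees(edges):
    """In-degree and out-degree of every node in a directed graph."""
    in_deg, out_deg = {}, {}
    for i, j in edges:                   # edge (i, j) points from i to j
        out_deg[i] = out_deg.get(i, 0) + 1
        in_deg[j] = in_deg.get(j, 0) + 1
        in_deg.setdefault(i, 0)          # make sure every node appears in both dicts
        out_deg.setdefault(j, 0)
    return in_deg, out_deg

def is_cyclic(edges):
    """True if some node can return to itself along directed edges."""
    succ = {}
    for i, j in edges:
        succ.setdefault(i, []).append(j)
        succ.setdefault(j, [])
    WHITE, GREY, BLACK = 0, 1, 2         # unvisited / on current DFS path / finished
    color = {node: WHITE for node in succ}

    def visit(u):                        # recursive DFS; fine for small graphs
        color[u] = GREY
        for v in succ[u]:
            if color[v] == GREY:         # back edge: v is on the current path
                return True
            if color[v] == WHITE and visit(v):
                return True
        color[u] = BLACK
        return False

    return any(color[n] == WHITE and visit(n) for n in succ)

dag = [(1, 2), (1, 3), (2, 3)]
print(is_cyclic(dag))                          # False
print(is_cyclic([(1, 2), (2, 3), (3, 1)]))     # True
```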

2. How to store graphs

There are several common ways to store a graph, depending on how it will be used:

Store as an edge list:

1 2

1 3

1 4

2 3

3 4

…

That is, we store the IDs of every pair of nodes connected by an edge, for example:

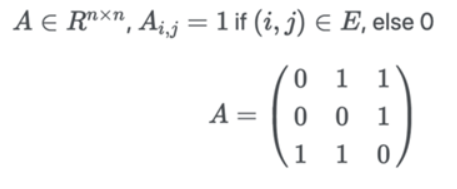

Store as an adjacency matrix A, which is usually kept in memory:

For each possible pair of nodes (i, j), the entry A_ij is set to 1 if the two nodes are connected by an edge, and to 0 otherwise. If the graph is undirected, A is symmetric.
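The conversion from an edge list to an adjacency matrix can be sketched as follows, assuming (as in the edge list above) that nodes are labelled 1..n. The function names are illustrative.

```python
def adjacency_matrix(edges, n, directed=False):
    """n x n 0/1 adjacency matrix for nodes labelled 1..n."""
    A = [[0] * n for _ in range(n)]
    for i, j in edges:
        A[i - 1][j - 1] = 1              # shift 1-based IDs to 0-based indices
        if not directed:
            A[j - 1][i - 1] = 1          # undirected: store both directions
    return A

def is_symmetric(A):
    n = len(A)
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(n))

edges = [(1, 2), (1, 3), (1, 4), (2, 3), (3, 4)]
A = adjacency_matrix(edges, 4)
for row in A:
    print(row)
# [0, 1, 1, 1]
# [1, 0, 1, 0]
# [1, 1, 0, 1]
# [1, 0, 1, 0]
print(is_symmetric(A))                                          # True
print(is_symmetric(adjacency_matrix(edges, 4, directed=True)))  # False
```

For an undirected graph without self-loops, row i sums to the degree of node i. The matrix needs O(n²) memory regardless of the number of edges, whereas the edge list needs O(|E|), which is why the edge list is preferred for large sparse graphs.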

3. Types and properties of graphs

Graphs can be classified according to different criteria. Here we mainly discuss one classification: homogeneous graphs versus heterogeneous graphs. We first review the related but distinct notion of graph isomorphism.

Isomorphic, homogeneous and heterogeneous graphs

Two graphs G and H are isomorphic if G can be obtained from H by relabeling the vertices of H.

If G and H are isomorphic, they have the same order (number of vertices) and the same size (number of edges), and their vertex degrees correspond one to one.

A heterogeneous graph is a newer concept, defined in contrast to a homogeneous graph.

A traditional homogeneous graph contains only one type of node and one type of edge. Therefore, when a graph neural network is built on it, all nodes share the same model parameters and have feature spaces of the same dimension.

A heterogeneous graph can contain more than one type of node and edge, so different types of nodes are allowed to have features or attributes of different dimensions.
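The invariants above (same order, same size, same degree sequence) are necessary but not sufficient for isomorphism. The brute-force sketch below checks them first and then tries every relabeling; it is only practical for tiny graphs and assumes simple undirected graphs without self-loops. All names are illustrative.

```python
from itertools import permutations

def edge_set(edges):
    """Undirected edges as frozensets, so (i, j) and (j, i) are the same edge."""
    return {frozenset(e) for e in edges}

def degree_sequence(nodes, eset):
    deg = {v: 0 for v in nodes}
    for e in eset:
        for v in e:
            deg[v] += 1
    return sorted(deg.values())

def are_isomorphic(nodes_g, edges_g, nodes_h, edges_h):
    """Try every relabeling of H's vertices (O(n!), tiny graphs only)."""
    eg, eh = edge_set(edges_g), edge_set(edges_h)
    # Necessary conditions: same order, same size, same degree sequence.
    if len(nodes_g) != len(nodes_h) or len(eg) != len(eh):
        return False
    if degree_sequence(nodes_g, eg) != degree_sequence(nodes_h, eh):
        return False
    nodes_h = list(nodes_h)
    for perm in permutations(nodes_g):
        relabel = dict(zip(nodes_h, perm))          # H-vertex -> G-vertex
        if {frozenset(relabel[v] for v in e) for e in eh} == eg:
            return True
    return False

# A 6-cycle and two disjoint triangles share all three invariants,
# yet they are not isomorphic (one is connected, the other is not).
cycle6 = [(1, 2), (2, 3), (3, 4), (4, 5), (5, 6), (6, 1)]
triangles = [(1, 2), (2, 3), (3, 1), (4, 5), (5, 6), (6, 4)]
print(are_isomorphic(range(1, 7), cycle6, range(1, 7), triangles))  # False
```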
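One way to store a heterogeneous graph is to keep a separate feature table per node type and a separate edge list per relation, keyed by (source type, relation, destination type). The class below is a hypothetical minimal container written for illustration, not the API of any particular graph library; the "user"/"item"/"buys" names are made up.

```python
from dataclasses import dataclass, field

@dataclass
class HeteroGraph:
    """Hypothetical minimal heterogeneous graph container."""
    # node type -> list of feature vectors; each type may have its own dimension
    node_features: dict = field(default_factory=dict)
    # (source type, relation, destination type) -> list of (src_index, dst_index)
    edges: dict = field(default_factory=dict)

    def add_nodes(self, ntype, features):
        self.node_features.setdefault(ntype, []).extend(features)

    def add_edges(self, src_type, relation, dst_type, pairs):
        n_src = len(self.node_features[src_type])
        n_dst = len(self.node_features[dst_type])
        for s, d in pairs:                   # indices are local to each node type
            if not (0 <= s < n_src and 0 <= d < n_dst):
                raise IndexError(f"edge {(s, d)} out of range for {relation}")
        self.edges.setdefault((src_type, relation, dst_type), []).extend(pairs)

    def feature_dim(self, ntype):
        return len(self.node_features[ntype][0])

g = HeteroGraph()
g.add_nodes("user", [[0.1, 0.2], [0.3, 0.4]])              # 2-dim user features
g.add_nodes("item", [[1.0, 0.0, 5.0], [0.0, 1.0, 3.0]])    # 3-dim item features
g.add_edges("user", "buys", "item", [(0, 1), (1, 0)])
print(g.feature_dim("user"), g.feature_dim("item"))         # 2 3
```

Because each node type keeps its own table, a GNN on this structure would typically use type-specific parameters (for example, one input projection per node type) instead of a single shared weight matrix.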

4. What is a graph neural network?

In the past few years, the rise and application of neural networks has greatly advanced research in pattern recognition and data mining. Many machine learning tasks that once relied heavily on hand-crafted features (such as object detection, machine translation, and speech recognition) have been transformed by end-to-end deep learning paradigms such as convolutional neural networks (CNNs), long short-term memory networks (LSTMs), and autoencoders. Some researchers attribute this wave of artificial intelligence to three conditions:

- The rapid development of computing resources (such as GPUs)

- Availability of large amounts of training data

- The effectiveness of deep learning in extracting latent features from Euclidean spatial data

Although traditional deep learning methods have been very successful at extracting features from Euclidean data, the data in many practical applications comes from non-Euclidean domains, and on such data the performance of traditional deep learning methods remains unsatisfactory. For example, in e-commerce, a graph-based learning system can exploit the interactions between users and products to make highly accurate recommendations, but the complexity of graphs poses major challenges for existing deep learning algorithms. Graphs are irregular: each graph has a variable number of unordered nodes, and each node has a different number of neighbors, so important operations such as convolution, which are easy to compute on images, cannot be applied directly to graphs. In addition, a core assumption of existing deep learning algorithms is that data samples are independent of each other. This does not hold for graphs: every sample (node) is linked by edges to other nodes in the graph, and this information can be used to capture the interdependence between instances.

In recent years, there has been growing interest in extending deep learning methods to graphs. Motivated by the success of deep learning, researchers borrowed ideas from convolutional networks, recurrent networks, and deep autoencoders to define and design neural network architectures for graph data, and a new research hotspot, graph neural networks (GNNs), emerged. This article gives a brief overview of the current state of GNN research.

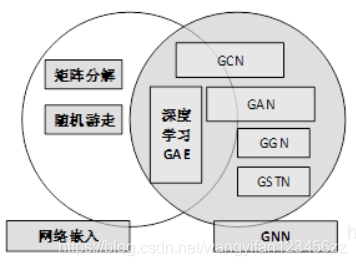

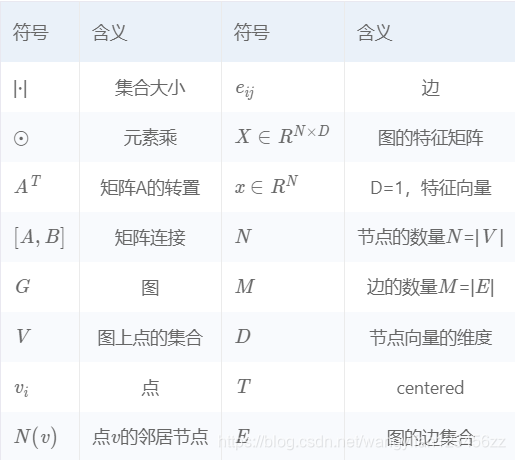

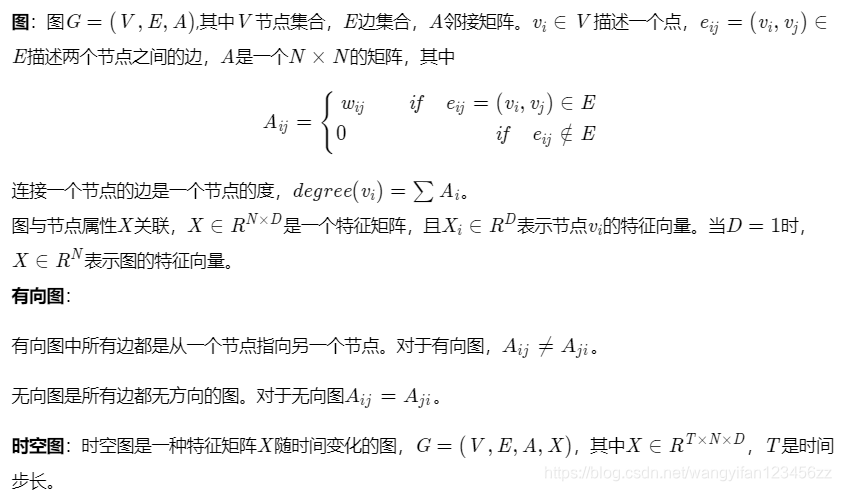

It should be noted that research on graph neural networks is closely related to graph embedding, also called network embedding (readers unfamiliar with graph embedding can refer to the article "Overview of Graph Embedding"). Graph embedding is another topic of growing interest in data mining and machine learning. It aims to represent the vertices of a graph as low-dimensional vectors while preserving the graph's topology and node content information, so that simple machine learning algorithms (for example, support vector machine classifiers) can then be applied. Most graph embedding algorithms are unsupervised and can be roughly divided into three categories: matrix factorization, random walk, and deep learning methods. Deep-learning-based graph embedding methods also count as graph neural networks; they include graph-autoencoder-based algorithms (such as DNGR and SDNE) and graph convolutional networks trained without supervision (such as GraphSAGE). The following figure illustrates the difference between graph embedding and graph neural networks as used in this article.

5. What graph neural networks are there?

In this article, we divide graph neural networks into five categories: graph convolutional networks (GCNs), graph attention networks, graph autoencoders, graph generative networks, and graph spatial-temporal networks.

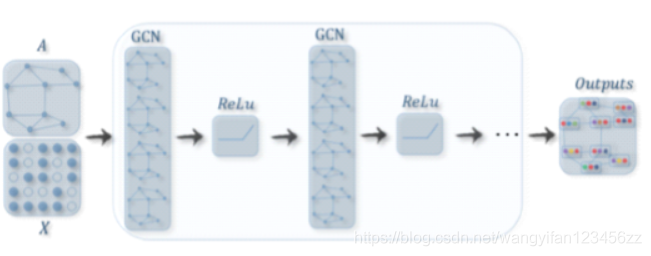

1. Graph Convolutional Networks (GCNs)

GCN methods can be divided into two categories: spectral-based and spatial-based. Spectral-based methods define graph convolution by introducing filters from the perspective of graph signal processing, where the graph convolution operation is interpreted as removing noise from a graph signal. Spatial-based methods express graph convolution as aggregating feature information from a node's neighborhood. Because GCNs operate at the node level, graph pooling modules can be interleaved with graph convolution layers to coarsen the graph into high-level substructures. As shown in the figure below, this architecture can extract graph representations at multiple levels and perform graph classification.

2. Spatial-based GCNs
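For reference, the standard spectral formulation from the graph signal processing literature (not taken from the course) is shown below. A is the adjacency matrix, D the diagonal degree matrix, x a signal with one value per node, U and Λ the eigenvectors and eigenvalues of the normalized Laplacian L, and g_θ a learnable filter. A filter that attenuates large eigenvalues (high graph frequencies) smooths the signal, which is the "noise removal" view described above; the last line is the first-order simplification used by the GCN of Kipf and Welling.

$$
L = I_n - D^{-1/2} A D^{-1/2} = U \Lambda U^{\top}, \qquad \hat{x} = U^{\top} x
$$

$$
x \ast_G g_\theta = U \, g_\theta(\Lambda) \, U^{\top} x
$$

$$
H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)}\right), \qquad \tilde{A} = A + I_n, \quad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}
$$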

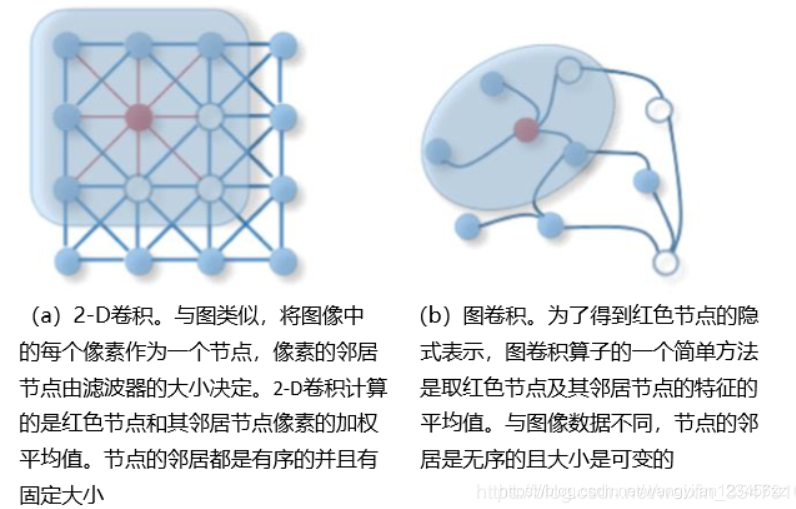

The idea of spatial-based graph convolutional networks derives mainly from the convolution operation that traditional convolutional neural networks apply to images. The difference is that spatial-based GCNs define graph convolution based on the spatial relationships between nodes.

To relate images to graphs, an image can be regarded as a special kind of graph in which each pixel is a node. As shown in Figure a below, each pixel is directly connected to its nearby pixels: with a 3×3 window, the neighborhood of each node is the 8 pixels around it, and the positions of these eight pixels define an ordering of the node's neighbors. A filter is then applied to the 3×3 window by taking a weighted average of the pixel values of the center node and its neighbors on each channel. Because the neighbors have this fixed ordering, the trainable weights can be shared across positions. Similarly, for a general graph, spatial-based graph convolution aggregates the representation of the center node and those of its neighbors to obtain a new representation for the node, as shown in Figure b.
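A minimal sketch of one spatial graph-convolution step: each node averages its own features with those of its neighbors, then applies a weight matrix shared by all nodes and a ReLU. Mean aggregation and ReLU are assumptions chosen for simplicity (one common variant among many), and the function name is illustrative.

```python
def spatial_graph_conv(adj, H, W):
    """One spatial graph-convolution step with mean aggregation.

    adj: dict node -> set of neighbours
    H:   dict node -> feature list of length d_in
    W:   d_in x d_out weight matrix (list of lists), shared by all nodes
    """
    d_in, d_out = len(W), len(W[0])
    out = {}
    for v in adj:
        group = [v] + sorted(adj[v])                  # center node plus its neighbours
        mean = [sum(H[u][k] for u in group) / len(group) for k in range(d_in)]
        h = [sum(mean[k] * W[k][c] for k in range(d_in)) for c in range(d_out)]
        out[v] = [max(0.0, x) for x in h]             # ReLU non-linearity
    return out

adj = {0: {1}, 1: {0, 2}, 2: {1}}                     # path graph 0 - 1 - 2
H = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0]]                          # identity, to make the averaging visible
print(spatial_graph_conv(adj, H, W)[0])               # [0.5, 0.5]
```

Unlike the image case, neighbors here have no canonical order, so an order-independent aggregator (mean, sum, max) replaces position-specific weights.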

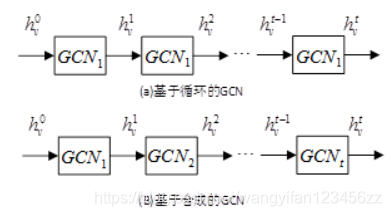

A common practice is to stack multiple graph convolutional layers. Depending on how the layers are stacked, spatial-based GCNs can be further divided into two categories: recurrent-based and composition-based. Recurrent-based methods apply the same graph convolutional layer repeatedly to update the hidden representations, while composition-based methods use a different graph convolutional layer at each step. The figure below illustrates this difference.
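The difference between the two stacking styles comes down to weight sharing across depth. In the sketch below, a toy layer with a single scalar weight and scalar node features stands in for a real graph convolution; real recurrent-based models (such as gated graph neural networks) use richer update rules, so treat this only as an illustration of the stacking pattern.

```python
def aggregate(adj, H, w):
    """Toy layer on scalar features: h_v <- w * mean(h_u for u in {v} and N(v))."""
    return {v: w * sum(H[u] for u in [v, *adj[v]]) / (1 + len(adj[v])) for v in adj}

def recurrent_stack(adj, H, w, steps):
    # Recurrent-based: the SAME layer (same weight w) is applied at every step.
    for _ in range(steps):
        H = aggregate(adj, H, w)
    return H

def composition_stack(adj, H, weights):
    # Composition-based: each depth has its OWN layer (its own weight).
    for w in weights:
        H = aggregate(adj, H, w)
    return H

adj = {0: {1}, 1: {0}}
H = {0: 1.0, 1: 3.0}
print(recurrent_stack(adj, H, 1.0, steps=2))        # {0: 2.0, 1: 2.0}
print(composition_stack(adj, H, [1.0, 0.5]))        # {0: 1.0, 1: 1.0}
```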

3. Comparison of spectral-based and spatial-based GCNs

As the earliest graph convolutional networks, spectral-based models have achieved impressive results in many graph analysis tasks. They have a solid theoretical basis in graph signal processing: by designing a new graph signal filter, one can in principle design a new graph convolutional network. However, spectral-based models have some shortcomings that are hard to overcome. We explain them below from three aspects: efficiency, generality, and flexibility.

In terms of efficiency, the computational cost of spectral-based models grows sharply with the size of the graph, because they either need to compute eigenvectors or process the entire graph at once, which makes them hard to apply to large graphs. Spatial-based models have the potential to handle large graphs because they perform convolution directly in the graph domain by aggregating neighboring nodes. Computation can be carried out on a batch of nodes rather than on the whole graph, and when the number of neighbors grows, sampling techniques can be introduced to improve efficiency.

In terms of generality, spectral-based models assume a fixed graph, which makes it difficult for them to handle new nodes added to the graph. Spatial-based models, in contrast, perform graph convolution locally at each node, so weights can easily be shared across different locations and structures.

In terms of flexibility, spectral-based models work only on undirected graphs. The Laplacian matrix has no clear definition on directed graphs, so the only way to apply a spectral-based model to a directed graph is to convert it into an undirected graph. Spatial-based models handle multi-source inputs more flexibly, because these inputs can be incorporated into the aggregation function. For these reasons, spatial models have attracted increasing attention in recent years.
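The sampling idea mentioned above can be sketched as: for each node in a mini-batch, keep at most k neighbors chosen uniformly at random, so the work per layer is bounded by batch size × k instead of depending on node degree. Uniform sampling without replacement is an assumption here (it resembles the approach popularized by GraphSAGE); the function name is illustrative.

```python
import random

def sample_neighbors(adj, nodes, k, rng):
    """For each node in the batch, keep at most k neighbours, sampled uniformly."""
    sampled = {}
    for v in nodes:
        neigh = sorted(adj[v])                        # sorted for reproducibility
        sampled[v] = neigh if len(neigh) <= k else rng.sample(neigh, k)
    return sampled

# A star graph: node 0 is connected to 100 leaves.
adj = {0: set(range(1, 101))}
adj.update({v: {0} for v in range(1, 101)})
batch = sample_neighbors(adj, [0, 5], k=10, rng=random.Random(0))
print(len(batch[0]), batch[5])                        # 10 [0]
```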
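The conversion step described above can be sketched as symmetrizing the adjacency matrix (keeping an edge if it exists in either direction) and then forming a Laplacian, here the combinatorial Laplacian L = D - A. Edge direction is lost in the process, which is exactly the limitation the text points out. Names are illustrative.

```python
def to_undirected(A):
    """Symmetrize a directed 0/1 adjacency matrix: keep an edge if it exists either way."""
    n = len(A)
    return [[1 if A[i][j] or A[j][i] else 0 for j in range(n)] for i in range(n)]

def laplacian(A):
    """Combinatorial Laplacian L = D - A, where D is the diagonal degree matrix."""
    n = len(A)
    return [[(sum(A[i]) if i == j else 0) - A[i][j] for j in range(n)] for i in range(n)]

directed = [[0, 1, 0],      # edge 0 -> 1
            [0, 0, 1],      # edge 1 -> 2
            [0, 0, 0]]
U = to_undirected(directed)
print(U)              # [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(laplacian(U))   # [[1, -1, 0], [-1, 2, -1], [0, -1, 1]]
```

Only after symmetrization is L guaranteed to be symmetric, which is what allows the real eigendecomposition L = UΛUᵀ that spectral methods rely on.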

4. Graph Attention Networks

The attention mechanism is now widely used in sequence-based tasks. Its advantage is that it amplifies the influence of the most important parts of the data, which has proven useful in many tasks such as machine translation and natural language understanding. A growing number of models incorporate attention, and graph neural networks benefit from it as well: attention is used during aggregation, to integrate the outputs of multiple models, and to generate random walks directed toward important targets.
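Attention in the aggregation step can be sketched in the style of the graph attention network (GAT): project features with W, score each neighbor j of node i with LeakyReLU(aᵀ[Wh_i ‖ Wh_j]), normalize the scores with a softmax over the neighborhood (including i itself), and take the weighted sum. This is a single-head sketch without the final non-linearity; the parameter values in the example are arbitrary.

```python
import math

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def gat_layer(adj, H, W, a):
    """Single-head GAT-style attention layer (no output non-linearity).

    H: dict node -> features (d_in); W: d_in x d_out; a: vector of length 2 * d_out.
    Returns (new features, attention weights per node).
    """
    d_in, d_out = len(W), len(W[0])
    Z = {v: [sum(H[v][k] * W[k][c] for k in range(d_in)) for c in range(d_out)] for v in H}
    out, alphas = {}, {}
    for i in adj:
        group = [i] + sorted(adj[i])                       # attend to self and neighbours
        # Z[i] + Z[j] concatenates the two projected vectors: [W h_i || W h_j]
        scores = [leaky_relu(sum(p * q for p, q in zip(a, Z[i] + Z[j]))) for j in group]
        m = max(scores)                                    # subtract max for stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas[i] = {j: e / total for j, e in zip(group, exps)}
        out[i] = [sum(alphas[i][j] * Z[j][c] for j in group) for c in range(d_out)]
    return out, alphas

adj = {0: {1, 2}, 1: {0}, 2: {0}}
H = {0: [1.0], 1: [2.0], 2: [3.0]}
out, alphas = gat_layer(adj, H, W=[[1.0]], a=[0.5, 1.0])
print(max(alphas[0], key=alphas[0].get))   # 2 (the neighbour with the largest feature)
```

With a = 0 every score is equal and the layer reduces to plain mean aggregation; learning a lets the model weight neighbors unequally.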

5. Graph Autoencoders

A graph autoencoder is a kind of graph embedding method whose purpose is to represent graph vertices as low-dimensional vectors using a neural network. A typical solution uses a multilayer perceptron as the encoder to obtain node embeddings, and a decoder that reconstructs statistics of each node's neighborhood, such as the positive pointwise mutual information (PPMI) or the first- and second-order proximities. Recently, researchers have explored using a GCN as the encoder, combining GCNs with GANs, or combining LSTMs with GANs to design graph autoencoders. We first review GCN-based autoencoders and then summarize the other variants in this category.

At present, the main GCN-based autoencoders are the Graph Autoencoder (GAE) and the Adversarially Regularized Graph Autoencoder (ARGA).

Other variants of the graph autoencoder are:
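The PPMI statistic mentioned above can be computed from any non-negative co-occurrence matrix M as PPMI_ij = max(0, log(M_ij · Σ M / (row_i · col_j))). In DNGR the co-occurrence matrix comes from random surfing on the graph; here M is simply assumed to be given.

```python
import math

def ppmi(M):
    """Positive pointwise mutual information of a non-negative co-occurrence matrix."""
    total = sum(map(sum, M))
    row = [sum(r) for r in M]
    col = [sum(c) for c in zip(*M)]
    out = []
    for i, r in enumerate(M):
        out.append([
            # PMI = log p(i, j) / (p(i) p(j)); negative values are clipped to 0
            max(0.0, math.log(m * total / (row[i] * col[j]))) if m > 0 else 0.0
            for j, m in enumerate(r)
        ])
    return out

P = ppmi([[0, 2], [2, 0]])
print(P[0][1] == math.log(2))   # True
```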

Network Representations with Adversarially Regularized Autoencoders (NetRA)

Deep Neural Networks for Graph Representations (DNGR)

Structural Deep Network Embedding (SDNE)

Deep Recursive Network Embedding (DRNE)

DNGR and SDNE learn node embeddings from the topological structure alone, whereas GAE, ARGA, NetRA, and DRNE learn node embeddings when both topological information and node content features are available. One challenge for graph autoencoders is the sparsity of the adjacency matrix A, which makes the number of positive entries the decoder must reconstruct far smaller than the number of negative entries. To address this, DNGR reconstructs a denser matrix, namely the PPMI matrix; SDNE penalizes the zero entries of the adjacency matrix; GAE reweights the entries of the adjacency matrix; and NetRA linearizes the graph into sequences.
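The sparsity problem and the reweighting remedy can be illustrated with an inner-product decoder, Â_ij = sigmoid(z_i · z_j), and a binary cross-entropy loss in which positive entries are up-weighted by the ratio of negative to positive entries. This ratio is one common reweighting choice and is assumed here for illustration; the embeddings Z would normally come from a GCN encoder.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def inner_product_decoder(Z):
    """Reconstructed edge probabilities A_hat[i][j] = sigmoid(z_i . z_j)."""
    return [[sigmoid(sum(p * q for p, q in zip(zi, zj))) for zj in Z] for zi in Z]

def weighted_bce(A, A_hat, eps=1e-12):
    """Cross-entropy where positives are weighted by (#negative / #positive) entries."""
    n = len(A)
    pos = sum(map(sum, A))
    pos_weight = (n * n - pos) / pos          # > 1 for sparse graphs
    loss = 0.0
    for i in range(n):
        for j in range(n):
            p = A_hat[i][j]
            if A[i][j]:
                loss -= pos_weight * math.log(p + eps)
            else:
                loss -= math.log(1.0 - p + eps)
    return loss / (n * n)

A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]          # path graph: 4 positive, 5 zero entries
Z = [[0.0, 0.0]] * 3                            # untrained embeddings -> every A_hat = 0.5
print(round(weighted_bce(A, inner_product_decoder(Z)), 4))
```

Without the weight, a decoder that predicts "no edge" everywhere would already score well on a sparse A; the weight makes missed edges cost proportionally more.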

6. Graph Generative Networks

The goal of a graph generative network is to generate new graphs given a set of observed graphs. Many graph generative methods are domain-specific. For example, in molecular graph generation, some works model the string representation of molecular graphs, called SMILES. In natural language processing, generating a semantic graph or a knowledge graph is usually conditioned on a given sentence. Recently, several general-purpose approaches have been proposed. Some works factor the generation process as alternately forming nodes and edges, while others use generative adversarial training. These methods either use GCNs as building blocks or use other architectures.

GCN-based graph generative networks mainly include:

Molecular Generative Adversarial Networks (MolGAN): integrates relational GCNs, an improved GAN, and a reinforcement learning (RL) objective to generate graphs with desired properties. A GAN consists of a generator and a discriminator that compete with each other, pushing the generator to produce more realistic samples. In MolGAN, the generator tries to propose a fake graph together with its feature matrix, while the discriminator aims to distinguish the fake samples from the empirical data. In addition, a reward network is introduced in parallel with the discriminator to encourage the generated graphs to possess certain properties, as judged by an external evaluator.

Deep Generative Models of Graphs (DGMG): uses a spatial-based graph convolutional network to obtain hidden representations of existing graphs. The decisions to generate nodes and edges are conditioned on the representation of the whole graph. In short, DGMG recursively adds nodes to a graph until a stopping criterion is met. At each step, after adding a new node, DGMG repeatedly decides whether to add an edge to it, until this decision becomes false. If the decision is true, it evaluates a probability distribution over connecting the new node to each existing node and samples one node from that distribution. After the new node and its edges have been added, DGMG updates the graph representation.

Graph generative networks with other architectures mainly include:

GraphRNN: a deep graph generative model built on a two-level recurrent neural network. The graph-level RNN adds one new node to the node sequence at a time, and the edge-level RNN produces a binary sequence indicating whether the new node is connected to each previously generated node. To linearize a graph into a node sequence for training the graph-level RNN, GraphRNN uses a breadth-first search (BFS) ordering. To model the binary sequences for training the edge-level RNN, GraphRNN assumes they follow a multivariate Bernoulli distribution or a conditional Bernoulli distribution.

NetGAN: combines an LSTM with a Wasserstein GAN and generates graphs through a random-walk-based approach. The GAN framework has two modules, a generator and a discriminator. The generator uses an LSTM to produce plausible random walks, while the discriminator tries to tell fake random walks from real ones. After training, a new graph is obtained by normalizing the co-occurrence matrix of nodes in a set of generated random walks.
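The control flow of DGMG-style sequential generation can be sketched as two nested decision loops. In DGMG each decision is produced by a neural network conditioned on the current graph representation; in this hypothetical sketch those networks are replaced by fixed probabilities and a uniform choice of destination node, and duplicate edges are simply ruled out. Only the loop structure is meant to match the description above.

```python
import random

def generate_graph(p_add_node, p_add_edge, max_nodes, rng):
    """DGMG-style generation loop with hypothetical stand-ins for the learned modules."""
    nodes, edges = [], set()
    while len(nodes) < max_nodes and rng.random() < p_add_node:   # decide: add a node?
        new = len(nodes)
        nodes.append(new)
        candidates = list(range(new))                              # existing nodes
        while candidates and rng.random() < p_add_edge:            # decide: add an edge?
            dst = rng.choice(candidates)        # stand-in for sampling from p(dst | graph)
            candidates.remove(dst)              # assumption: no duplicate edges
            edges.add((dst, new))
        # A real DGMG would update the graph representation here.
    return nodes, edges

nodes, edges = generate_graph(0.9, 0.6, max_nodes=8, rng=random.Random(1))
print(len(nodes), sorted(edges))
```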
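The linearization GraphRNN trains on can be sketched as: order the nodes by BFS, then for the i-th node emit a 0/1 vector saying which of the earlier nodes it connects to. These vectors are what the edge-level RNN models. The sketch assumes a connected undirected graph and breaks BFS ties by sorting neighbors, which is an arbitrary choice; GraphRNN itself considers many BFS orderings.

```python
from collections import deque

def bfs_order(adj, start):
    """Node ordering produced by breadth-first search from start."""
    order, seen, queue = [], {start}, deque([start])
    while queue:
        u = queue.popleft()
        order.append(u)
        for v in sorted(adj[u]):
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return order

def graphrnn_sequence(adj, start):
    """For the i-th node in BFS order, a 0/1 vector over the i earlier nodes."""
    order = bfs_order(adj, start)
    seq = [[1 if order[j] in adj[order[i]] else 0 for j in range(i)]
           for i in range(len(order))]
    return order, seq

adj = {}
for i, j in [(1, 2), (1, 3), (1, 4), (2, 3), (3, 4)]:
    adj.setdefault(i, set()).add(j)
    adj.setdefault(j, set()).add(i)
order, seq = graphrnn_sequence(adj, 1)
print(order)   # [1, 2, 3, 4]
print(seq)     # [[], [1], [1, 1], [1, 0, 1]]
```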
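The final step, turning random walks back into a graph, can be approximated by counting how often each pair of nodes appears consecutively in the walks and keeping the most frequent pairs. This is a simplified stand-in for NetGAN's assembly procedure (which normalizes a score matrix and samples edges from it), not a faithful reimplementation; the walks below are made-up input.

```python
from collections import Counter

def graph_from_walks(walks, num_edges):
    """Keep the num_edges most frequent undirected transitions found in the walks."""
    counts = Counter()
    for walk in walks:
        for u, v in zip(walk, walk[1:]):
            if u != v:
                counts[frozenset((u, v))] += 1
    # Sort by descending count, then by node IDs to make ties deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], sorted(kv[0])))
    return [tuple(sorted(pair)) for pair, _ in ranked[:num_edges]]

walks = [[0, 1, 2, 1, 0], [1, 2, 3], [0, 1, 0]]
print(graph_from_walks(walks, num_edges=2))   # [(0, 1), (1, 2)]
```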

7. Graph Spatial-Temporal Networks

Graph spatial-temporal networks capture the spatial and temporal dependencies of a spatial-temporal graph simultaneously. A spatial-temporal graph has a global graph structure, and the input of each node changes over time. For example, in a traffic network, each sensor is a node that continuously records the traffic speed on a road, and the edges are determined by the distances between pairs of sensors. The goal of a graph spatial-temporal network can be to predict future node values or labels, or to predict labels of the spatial-temporal graph. Recent work has explored using GCNs alone, combining GCNs with RNNs or CNNs, and recurrent architectures tailored to the graph structure.

Current graph spatial-temporal network models mainly include:
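A common way to turn sensor distances into graph edges is a thresholded Gaussian kernel, w_ij = exp(-(d_ij / σ)²), setting small weights to zero so that only nearby sensors are connected; DCRNN builds its traffic graph in a similar spirit. The σ and threshold values and the toy distance matrix below are illustrative assumptions.

```python
import math

def sensor_adjacency(dist, sigma, threshold):
    """Weighted adjacency from pairwise sensor distances via a thresholded Gaussian kernel.

    Road distances can be asymmetric, so the result may describe a directed graph.
    """
    n = len(dist)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                w = math.exp(-(dist[i][j] / sigma) ** 2)
                W[i][j] = w if w >= threshold else 0.0   # drop weak (distant) links
    return W

dist = [[0, 1, 5],
        [1, 0, 4],
        [5, 4, 0]]              # toy distances in km
W = sensor_adjacency(dist, sigma=2.0, threshold=0.1)
print(round(W[0][1], 3), W[1][2], W[0][2])   # 0.779 0.0 0.0
```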

Diffusion Convolutional Recurrent Neural Network (DCRNN)

CNN-GCN

Spatial Temporal GCN (ST-GCN)

Structural-RNN

6. Applications of graph neural networks

1. Computer Vision

One of the largest application areas of graph neural networks is computer vision. Researchers have explored using graph structures in scene graph generation, point cloud classification and segmentation, and action recognition.

In scene graph generation, the semantic relationships between objects help explain the meaning behind a visual scene. Given an image, a scene graph generation model detects and recognizes objects and predicts the semantic relationships between pairs of objects. Another application reverses the process by generating realistic images from a given scene graph. Since natural language can be parsed into a semantic graph in which each word represents an object, this is a promising way to synthesize images from textual descriptions.

In point cloud classification and segmentation, a point cloud is a set of 3D points recorded by a lidar scan. Solving this task lets lidar devices perceive their surroundings, which is typically valuable for autonomous vehicles. To identify the objects a point cloud depicts, the point cloud is converted into a k-nearest-neighbor graph or a superpoint graph, and graph convolutional networks are used to explore its topological structure.

In action recognition, recognizing the human actions in a video helps machines better understand video content. One group of solutions detects the positions of human joints in video clips. Human joints connected by bones naturally form a graph. Given a time series of joint positions, spatial-temporal graph neural networks are applied to learn patterns of human behavior.

In addition, the range of possible applications of graph neural networks in computer vision keeps growing, including human-object interaction, few-shot image classification, semantic segmentation, visual reasoning, and question answering.
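Converting a point cloud into a k-nearest-neighbor graph can be sketched with a brute-force distance search: every point gets a directed edge to each of its k closest other points. This O(n² log n) version is only for illustration; real pipelines use spatial indices such as k-d trees. The points are made-up coordinates.

```python
import math

def knn_graph(points, k):
    """Directed k-NN graph: edge i -> j for the k points j != i closest to point i."""
    edges = []
    for i, p in enumerate(points):
        others = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        edges.extend((i, j) for _, j in others[:k])
    return edges

points = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (10, 10, 10)]
print(knn_graph(points, k=1))   # [(0, 1), (1, 0), (2, 0), (3, 2)]
```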

2. Recommender Systems

A graph-based recommender system treats items and users as nodes. By exploiting item-item, user-user, and user-item relationships as well as content information, graph-based recommender systems can produce high-quality recommendations. The key to a recommender system is scoring how relevant an item is to a user, so the task can be cast as a link prediction problem: predict the missing links between users and items. To solve this problem, some researchers have proposed GCN-based graph autoencoders. Others combine GCNs with RNNs to learn the underlying process that generates users' ratings of items.
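Once user and item embeddings are available (for example from a GCN encoder), link prediction can be as simple as scoring every unseen user-item pair by a dot product and recommending the highest-scoring items. The embeddings and IDs below are made up for illustration, and dot-product scoring is one common choice among several.

```python
def recommend(user_emb, item_emb, interacted, top_k):
    """Rank unseen items per user by dot-product score (link prediction)."""
    recs = {}
    for u, zu in user_emb.items():
        seen = interacted.get(u, set())
        scores = [(sum(a * b for a, b in zip(zu, zi)), i)
                  for i, zi in item_emb.items() if i not in seen]
        scores.sort(key=lambda s: (-s[0], s[1]))      # highest score first
        recs[u] = [i for _, i in scores[:top_k]]
    return recs

users = {"u1": [1.0, 0.0], "u2": [0.0, 1.0]}
items = {"a": [0.9, 0.1], "b": [0.1, 0.9], "c": [0.5, 0.5]}
print(recommend(users, items, {"u1": {"a"}}, top_k=1))   # {'u1': ['c'], 'u2': ['b']}
```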

3. Traffic

Traffic congestion has become a pressing social problem in modern cities. Accurately predicting traffic speed, traffic volume, or road density in a traffic network is essential for route planning and flow control. Some researchers use graph-based spatial-temporal neural networks to address these problems. The input to their models is a spatial-temporal graph in which nodes are sensors placed on roads, edges connect pairs of sensors whose distance-based proximity exceeds a threshold, and each node carries a time series as its features. The goal is to predict the average speed on a road within a time interval. Another interesting application is taxi demand forecasting, which helps intelligent transportation systems use resources efficiently and save energy.

4. Chemistry

In chemistry, researchers use graph neural networks to study the graph structure of molecules. In a molecular graph, atoms are nodes in the graph, and chemical bonds are edges in the graph. Node classification, graph classification, and graph generation are the three main tasks of molecular graphs. They can be used to learn molecular fingerprints, predict molecular properties, infer protein structures, and synthesize compounds.

5. Other

In addition to the four fields above, graph neural networks have also been explored for other problems, such as program verification, program reasoning, social influence prediction, defense against adversarial attacks, electronic health record modeling, brain networks, event detection, and combinatorial optimization.