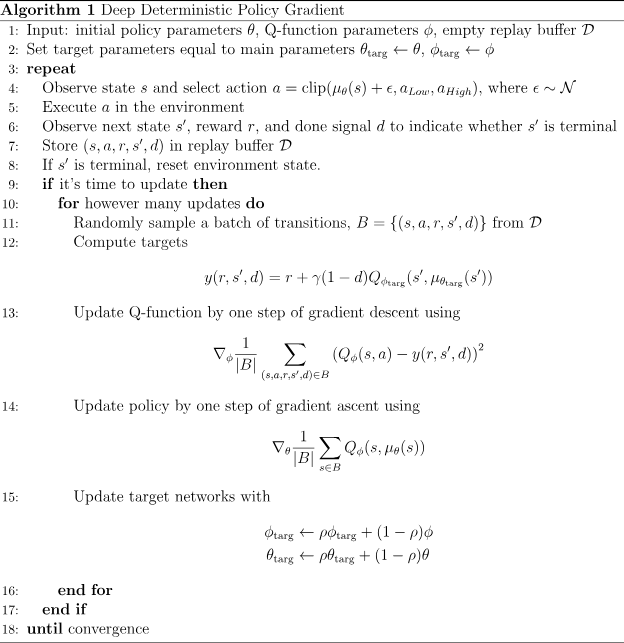

1. DDPG

Compared with the traditional PG algorithm, the DDPG method has three main improvements:

A. off-policy strategy

The traditional PG algorithm generally adopts the on-policy method, which divides the overall reinforcement learning process into multiple epochs, completes an update of the policy model and value model in each epoch, and needs to be re-sampled according to the decision model in each round of epoch to obtain the round of training samples.

But when the cost of interacting with the environment is relatively high, the efficiency of this on-policy approach is not good. Therefore, DDPG proposes an off-policy method, which can use historical samples, assuming that for historical samples , DDPG's off-policy strategy will re-estimate the value according to the current target policy.

Therefore, the goal of DDPG for the value estimation model is

to represent the Batch randomly drawn from all historical samples

The goal of the traditional on-policy strategy is that the following formula can be the cumulative income after MC sampling,

which represents the sampling result of the current epoch round.

B. More Complex Deterministic Continuous Action Policy Scenario Modeling

The traditional PG algorithm models the operation through an action distribution . This action distribution is generally discrete, or the action is modeled as a Gaussian distribution, and the two parameters of the distribution mean and standard deviation are fitted by a neural network.

DDPG employs an action generation network that can output deterministic and continuous action values.

C. Target Networks

DDPG's off-policy strategy will re-estimate the value according to the current target policy, so the estimated value here is calculated through another network Target Networks, mainly to avoid too much change when directly using the target optimization network for estimation And affect the effect.

The traditional DQN will take a longer period of time to synchronize the parameters of the target optimization network to the Target Network as a whole. However, DDPG adopts a smoother method to help the Target Network adapt to the parameters of the target optimization network in a timely manner.

D. Exploration

DDPG is a deterministic action decision, so in order to ensure exploration, DDPG adds a Gaussian noise after sampling actions, and adds truncation to avoid inappropriate action values.

The overall process of DDPG algorithm

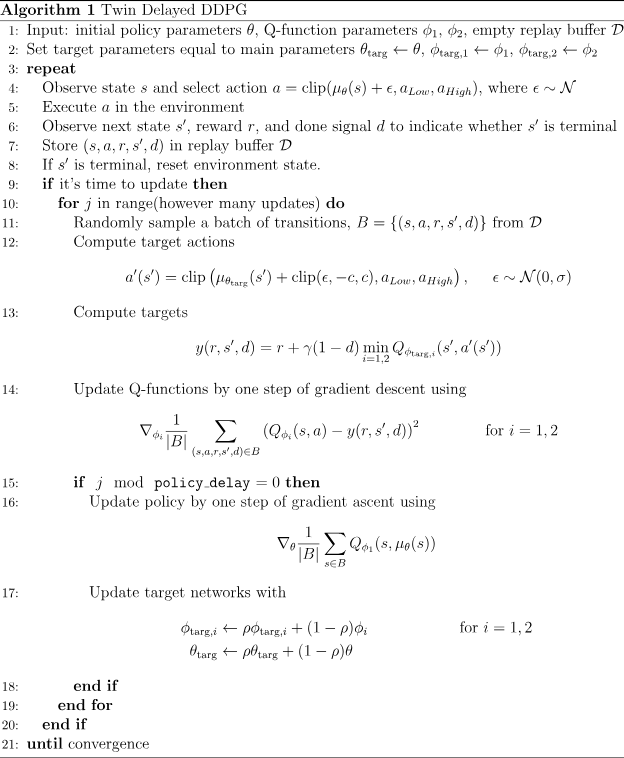

2. Twin Delayed DDPG

Twin Delayed DDPG is also known as the TD3 algorithm, which mainly makes two upgrades on the basis of DDPG:

A. target policy smoothing

As mentioned above, DDPG's off-policy strategy will re-estimate the value based on the current target policy. The action generated by the target policy here does not add noise exploration, because it is only used to estimate the value, not to explore.

However, the TD3 algorithm adds noise to the target policy action here. The main reason is for regularization. This regularization operation smoothes some incorrect action spikes that may appear during training.

B. clipped double-Q learning

DDPG is based on Q-learning. Since it is a definite action that takes the greatest possibility, it may cause a Maximum deviation (simple understanding is that due to the existence of the estimated distribution, the maximum value generally deviates from the expected value), this The problem may be solved by double Q-learning.

Based on DDPG, TD3 applies the idea of double Q-learning, introduces two target value estimation models, generates value estimations respectively, and selects the smallest value as the final estimation value.

There are also two target value models:

However, there is only one set of target decision-making models, and it is only optimized according to a certain target value model:

TD3 algorithm overall process

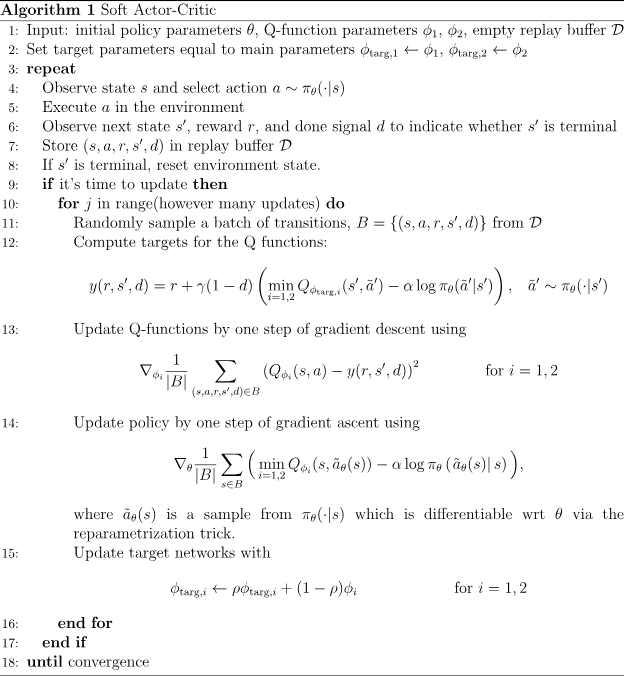

3. Soft Actor-Critic

Since DDPG can only produce deterministic actions, Soft Actor Critic (SAC) implements stochastic policy to produce probabilistic action decisions. Compared with TD3, the SAC algorithm has two main differences:

A. entropy regularization

Entropy regularization Entropy regularization is the core content of SAC, because SAC implements probabilistic action decision-making . The main problem of probabilistic action decision-making is that the generated action probability may be too scattered, so SAC uses entropy regularization to avoid this situation.

At the same time, the policy model update target also adds entropy regularization, but it applies both target models, which is different from TD3.

B. Exploration

SAC has natural exploration characteristics because its action decision function is probabilistic, so it does not have a target network for the action decision model. In addition, the exploration characteristics of the action decision-making model can also be controlled by controlling during training .