Table of contents

1. Common ways to request web pages with the requests library

Hands-on: an HTTP request to the Baidu home page

Before you start, make sure the requests library is installed. You can install it with pip3 from the command prompt (cmd):

pip3 install requests

1. Common ways to request web pages with the requests library

import requests

r = requests.get('https://www.httpbin.org/get')

r = requests.post('https://www.httpbin.org/post')

r = requests.put('https://www.httpbin.org/put')

r = requests.delete('https://www.httpbin.org/delete')

r = requests.patch('https://www.httpbin.org/patch')

1. GET request

- Basic example

import requests

r = requests.get('https://www.httpbin.org/get')

print(r.text)

Output:

{

"args": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate, br",

"Host": "www.httpbin.org",

"User-Agent": "python-requests/2.27.1",

"X-Amzn-Trace-Id": "Root=1-62d27f11-52cfa9120c9cb3430ff3acbf"

},

"origin": "120.238.232.103",

"url": "https://www.httpbin.org/get"

}

The response echoes back the request's args, headers (the request headers), origin (the client IP), url and other information. To add two query parameters, name and age, pass them as a dictionary through the params argument:

import requests

data = {

"name":"LZQ",

"age" : 22

}

r = requests.get('https://www.httpbin.org/get', params=data)

print(r.text)

Output:

{

"args": {

"age": "22",

"name": "LZQ"

},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate, br",

"Host": "www.httpbin.org",

"User-Agent": "python-requests/2.27.1",

"X-Amzn-Trace-Id": "Root=1-62d28029-58d27395661203591b286a70"

},

"origin": "120.238.232.103",

"url": "https://www.httpbin.org/get?name=LZQ&age=22"

}
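To see how the params dictionary ends up in the URL without sending anything, one option is to build the request and only prepare it. This is a minimal sketch, assuming requests is installed; the second dictionary (with the hypothetical keys tag and skip) is made up purely for illustration.

```python
import requests

url = "https://www.httpbin.org/get"
params = {"name": "LZQ", "age": 22}

# Prepare the request without sending it, so the final URL can be inspected offline.
prepared = requests.Request("GET", url, params=params).prepare()
print(prepared.url)  # https://www.httpbin.org/get?name=LZQ&age=22

# Hypothetical extra case: a list value becomes a repeated key,
# and a key whose value is None is left out of the query string.
extra = requests.Request("GET", url, params={"tag": ["a", "b"], "skip": None}).prepare()
print(extra.url)
```

The prepared URL matches the url field that httpbin echoes back in the result above.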

- Add request headers

import requests

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36"}

r = requests.get('https://www.httpbin.org/get', headers=headers)

print(r.text)

Output:

{

"args": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate, br",

"Host": "www.httpbin.org",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",

"X-Amzn-Trace-Id": "Root=1-62d29e95-7f32281c719e7d9b4847c851"

},

"origin": "120.238.218.103",

"url": "https://www.httpbin.org/get"

}
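The effect of the headers argument can also be checked offline. The sketch below, assuming requests is installed, prepares two requests through a Session (without sending them) and compares the User-Agent that would go out by default with the custom one.

```python
import requests

url = "https://www.httpbin.org/get"
browser_ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36"
)

session = requests.Session()

# No custom headers: the session's default User-Agent (python-requests/x.y.z) is used.
default_prepared = session.prepare_request(requests.Request("GET", url))
print(default_prepared.headers["User-Agent"])

# Custom header: the value passed in replaces the session default.
custom_prepared = session.prepare_request(
    requests.Request("GET", url, headers={"User-Agent": browser_ua})
)
# Header lookup is case-insensitive.
print(custom_prepared.headers["user-agent"])
```

Other default headers such as Accept and Accept-Encoding are still present on the custom request, which is consistent with the echoed headers in the result above.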

-

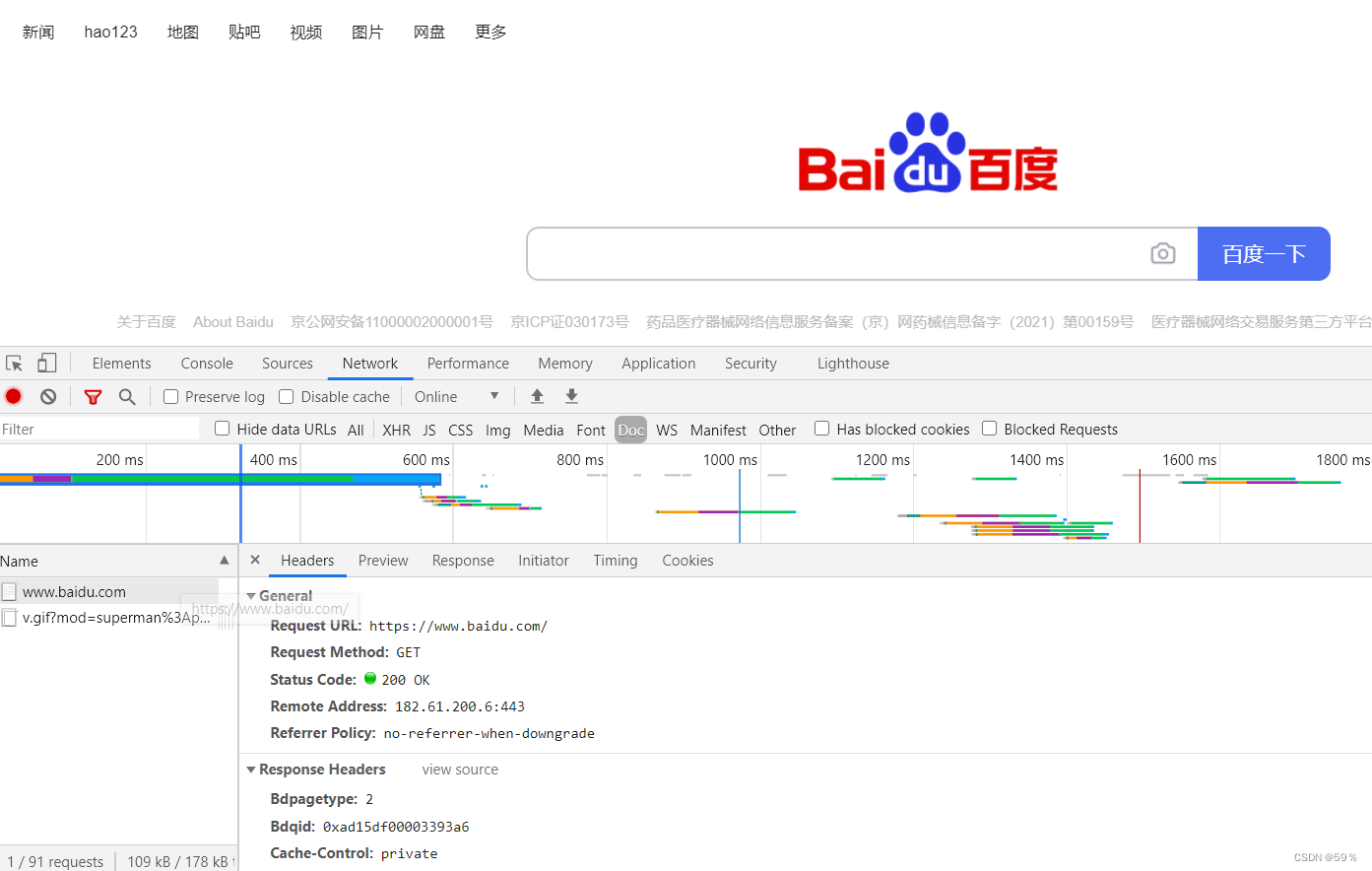

Baidu web page http request actual combat

The simplest GET request is constructed below; the request URL is https://www.baidu.com:

import requests

r = requests.get('https://www.baidu.com/')

print(r.status_code)  # status code

print(r.text)  # response body

print(r.headers)  # response headers

Output:

200

<!DOCTYPE html>

<!--STATUS OK--><html> <head><meta http-equiv=content-type content=text/html;charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content=always name=referrer><link rel=stylesheet type=text/css href=https://ss1.bdstatic.com/5eN1bjq8AAUYm2zgoY3K/r/www/cache/bdorz/baidu.min.css><title>ç¾åº¦ä¸ä¸ï¼ä½ å°±ç¥é</title></head> <body link=#0000cc> <div id=wrapper> <div id=head> <div class=head_wrapper> <div class=s_form> <div class=s_form_wrapper> <div id=lg> <img hidefocus=true src=//www.baidu.com/img/bd_logo1.png width=270 height=129> </div> <form id=form name=f action=//www.baidu.com/s class=fm> <input type=hidden name=bdorz_come value=1> <input type=hidden name=ie value=utf-8> <input type=hidden name=f value=8> <input type=hidden name=rsv_bp value=1> <input type=hidden name=rsv_idx value=1> <input type=hidden name=tn value=baidu><span class="bg s_ipt_wr"><input id=kw name=wd class=s_ipt value maxlength=255 autocomplete=off autofocus=autofocus></span><span class="bg s_btn_wr"><input type=submit id=su value=ç¾åº¦ä¸ä¸ class="bg s_btn" autofocus></span> </form> </div> </div> <div id=u1> <a href=http://news.baidu.com name=tj_trnews class=mnav>æ°é»</a> <a href=https://www.hao123.com name=tj_trhao123 class=mnav>hao123</a> <a href=http://map.baidu.com name=tj_trmap class=mnav>å°å¾</a> <a href=http://v.baidu.com name=tj_trvideo class=mnav>è§é¢</a> <a href=http://tieba.baidu.com name=tj_trtieba class=mnav>è´´å§</a> <noscript> <a href=http://www.baidu.com/bdorz/login.gif?login&tpl=mn&u=http%3A%2F%2Fwww.baidu.com%2f%3fbdorz_come%3d1 name=tj_login class=lb>ç»å½</a> </noscript> <script>document.write('<a href="http://www.baidu.com/bdorz/login.gif?login&tpl=mn&u='+ encodeURIComponent(window.location.href+ (window.location.search === "" ? "?" : "&")+ "bdorz_come=1")+ '" name="tj_login" class="lb">ç»å½</a>');

</script> <a href=//www.baidu.com/more/ name=tj_briicon class=bri style="display: block;">æ´å¤äº§å</a> </div> </div> </div> <div id=ftCon> <div id=ftConw> <p id=lh> <a href=http://home.baidu.com>å³äºç¾åº¦</a> <a href=http://ir.baidu.com>About Baidu</a> </p> <p id=cp>©2017 Baidu <a href=http://www.baidu.com/duty/>使ç¨ç¾åº¦åå¿è¯»</a> <a href=http://jianyi.baidu.com/ class=cp-feedback>æè§åé¦</a> 京ICPè¯030173å· <img src=//www.baidu.com/img/gs.gif> </p> </div> </div> </div> </body> </html>

{'Cache-Control': 'private, no-cache, no-store, proxy-revalidate, no-transform', 'Connection': 'keep-alive', 'Content-Encoding': 'gzip', 'Content-Type': 'text/html', 'Date': 'Sat, 16 Jul 2022 11:15:27 GMT', 'Last-Modified': 'Mon, 23 Jan 2017 13:23:55 GMT', 'Pragma': 'no-cache', 'Server': 'bfe/1.0.8.18', 'Set-Cookie': 'BDORZ=27315; max-age=86400; domain=.baidu.com; path=/', 'Transfer-Encoding': 'chunked'}
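The unreadable page title in the output above looks like an encoding mismatch rather than a broken page: the response's Content-Type is text/html with no charset, and in that situation requests (as far as I know) falls back to ISO-8859-1 when building r.text, while the page itself declares charset=utf-8. The offline sketch below reproduces the effect with the standard library only; the title string is assumed to be what the page really contains.

```python
# Assumed real page title, encoded the way the server sends it (UTF-8).
title = "百度一下，你就知道"
raw = title.encode("utf-8")

# Decoding UTF-8 bytes as ISO-8859-1 yields the kind of garbled text seen above.
garbled = raw.decode("iso-8859-1")
print(repr(garbled))

# ISO-8859-1 maps every byte to one character, so the mistake can be reversed.
repaired = garbled.encode("iso-8859-1").decode("utf-8")
print(repaired)
```

With a live response, setting r.encoding = 'utf-8' (or r.encoding = r.apparent_encoding) before reading r.text should give readable text.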

- Scrape web page data

import requests

import re

r = requests.get("https://ssr1.scrape.center/")

pattern = re.compile('<h2.*?>(.*?)</h2>',re.S)

titles = re.findall(pattern,r.text)

print(titles)

Output:

['Farewell My Concubine- Farewell My Concubine', 'This killer is not too cold- Léon', "Shawshank's Redemption- The Shawshank Redemption", 'Titanic- Titanic', 'Roman Holiday- Roman Holiday', 'Tang Bohu Point Qiu Xiang- Flirting Scholar', 'Gone with the Wind- Gone with the Wind', 'The King of Comedy- The King of Comedy', 'The Truman Show- The Truman Show', 'The Lion King- The Lion King']

The code above uses a regular expression to extract the movie titles from the page. Regular expressions themselves will be covered in a later post.
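For readers who want a quick look at why the pattern is written this way, here is a small offline sketch. The HTML snippet is a made-up stand-in for the real page, not a copy of it. It shows that .*? matches as little as possible and that re.S lets the dot match newlines, which matters when a tag or its text spans several lines.

```python
import re

# Hypothetical HTML fragment; the second <h2> is split across lines on purpose.
html = (
    '<h2 class="m-b-sm">Farewell My Concubine</h2>\n'
    '<h2\n class="m-b-sm">The Shawshank\nRedemption</h2>'
)

# Same pattern as in the article: non-greedy groups, dot also matches newlines.
pattern = re.compile(r'<h2.*?>(.*?)</h2>', re.S)
titles = pattern.findall(html)
print(titles)

# Without re.S the dot stops at newlines, so the multi-line heading is missed.
titles_no_flag = re.findall(r'<h2.*?>(.*?)</h2>', html)
print(titles_no_flag)
```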

- Scrape binary data

Images, audio, video and similar files are essentially binary data, each with its own storage format and matching decoding method. To scrape them, you need to fetch their binary content.
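A small offline sketch may help show why binary files should be handled as bytes rather than text. The raw value below is a hypothetical stand-in for a downloaded image: the standard 8-byte PNG signature followed by a few arbitrary bytes.

```python
# Every PNG file starts with this fixed 8-byte signature.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical stand-in for downloaded image bytes (signature plus arbitrary bytes).
raw = PNG_SIGNATURE + bytes([0, 0, 0, 13])

# As bytes, the format can be recognised reliably.
is_png = raw.startswith(PNG_SIGNATURE)
print(type(raw), is_png)

# Decoding as text replaces bytes that are not valid UTF-8, so information is lost.
as_text = raw.decode("utf-8", errors="replace")
round_trip_ok = as_text.encode("utf-8") == raw
print(repr(as_text), round_trip_ok)
```

Because the text form cannot be turned back into the original bytes, files like this are best read and written in binary form.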

import requests

r = requests.get("https://ssr1.scrape.center/static/img/logo.png")

print(r.text)

print(r.content)

Screenshot of part of the output:

Comparing the two attributes of the response, r.text shows garbled characters, while r.content is printed with a leading b, which marks it as bytes data. Next, save the downloaded data to a file:

import requests

r = requests.get("https://ssr1.scrape.center/static/img/logo.png")

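with open('logo.png', 'wb') as f:  # first argument: the file name; second: open the file for writing in binary mode

    f.write(r.content)

2. POST request

Sending a POST request with requests is just as simple. Note that this example passes the dictionary through params, so the values travel in the URL query string and show up under args in the result, while form stays empty: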

import requests

data = {

"name":"LZQ",

"age" : 22

}

r = requests.post('https://www.httpbin.org/post', params=data)

print(r.text)

Output:

{

"args": {

"age": "22",

"name": "LZQ"

},

"data": "",

"files": {},

"form": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate, br",

"Content-Length": "0",

"Host": "www.httpbin.org",

"User-Agent": "python-requests/2.27.1",

"X-Amzn-Trace-Id": "Root=1-62d29f4b-17b7d6b13b6d5c8d4a65f987"

},

"json": null,

"origin": "120.238.218.103",

"url": "https://www.httpbin.org/post?name=LZQ&age=22"

}
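In the result above, form and json are empty because the values were sent in the query string. To put them in the request body instead, requests also accepts data (form-encoded) and json arguments. The sketch below, assuming requests is installed, prepares both variants without sending them so the body and Content-Type can be inspected offline.

```python
import json

import requests

url = "https://www.httpbin.org/post"
payload = {"name": "LZQ", "age": 22}

# data=... sends the dictionary as a form-encoded request body.
form_req = requests.Request("POST", url, data=payload).prepare()
print(form_req.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form_req.body)  # name=LZQ&age=22

# json=... serialises the dictionary to JSON and sets a JSON Content-Type.
json_req = requests.Request("POST", url, json=payload).prepare()
print(json_req.headers["Content-Type"])  # application/json
print(json.loads(json_req.body))
```

When sent for real, for example with requests.post(url, data=payload), httpbin should echo these values under form (or under json for the second variant) rather than under args.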

3. Response

After a request is sent, a response is returned, from which you can read the status code, response headers, cookies, URL and other information.

import requests

r = requests.get("https://www.baidu.com")

print(type(r.status_code), r.status_code)  # status code

print(type(r.headers), r.headers)  # response headers

print(type(r.cookies), r.cookies)  # cookies

print(type(r.url), r.url)  # final URL

print(type(r.history), r.history)  # redirect history

Output:

<class 'int'> 200

<class 'requests.structures.CaseInsensitiveDict'> {'Cache-Control': 'private, no-cache, no-store, proxy-revalidate, no-transform', 'Connection': 'keep-alive', 'Content-Encoding': 'gzip', 'Content-Type': 'text/html', 'Date': 'Sat, 16 Jul 2022 11:26:22 GMT', 'Last-Modified': 'Mon, 23 Jan 2017 13:23:55 GMT', 'Pragma': 'no-cache', 'Server': 'bfe/1.0.8.18', 'Set-Cookie': 'BDORZ=27315; max-age=86400; domain=.baidu.com; path=/', 'Transfer-Encoding': 'chunked'}

<class 'requests.cookies.RequestsCookieJar'> <RequestsCookieJar[<Cookie BDORZ=27315 for .baidu.com/>]>

<class 'str'> https://www.baidu.com/

<class 'list'> []
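A common next step is to check the status code before using the response. The sketch below, assuming requests is installed, runs offline by filling in a Response object by hand as a stand-in for a failed request. The URL and reason are made up for illustration, and in real code the object would come from requests.get.

```python
import requests

# Hand-built stand-in for a failed response (normally returned by requests.get).
resp = requests.Response()
resp.status_code = 404
resp.reason = "NOT FOUND"
resp.url = "https://www.httpbin.org/status/404"  # hypothetical URL

# requests.codes offers readable names for status codes.
is_not_found = resp.status_code == requests.codes.not_found
print(resp.ok, is_not_found)

# raise_for_status() raises HTTPError for 4xx and 5xx responses.
error_message = None
try:
    resp.raise_for_status()
except requests.HTTPError as exc:
    error_message = str(exc)
print(error_message)
```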