Author: CSDN @ _Yakult_

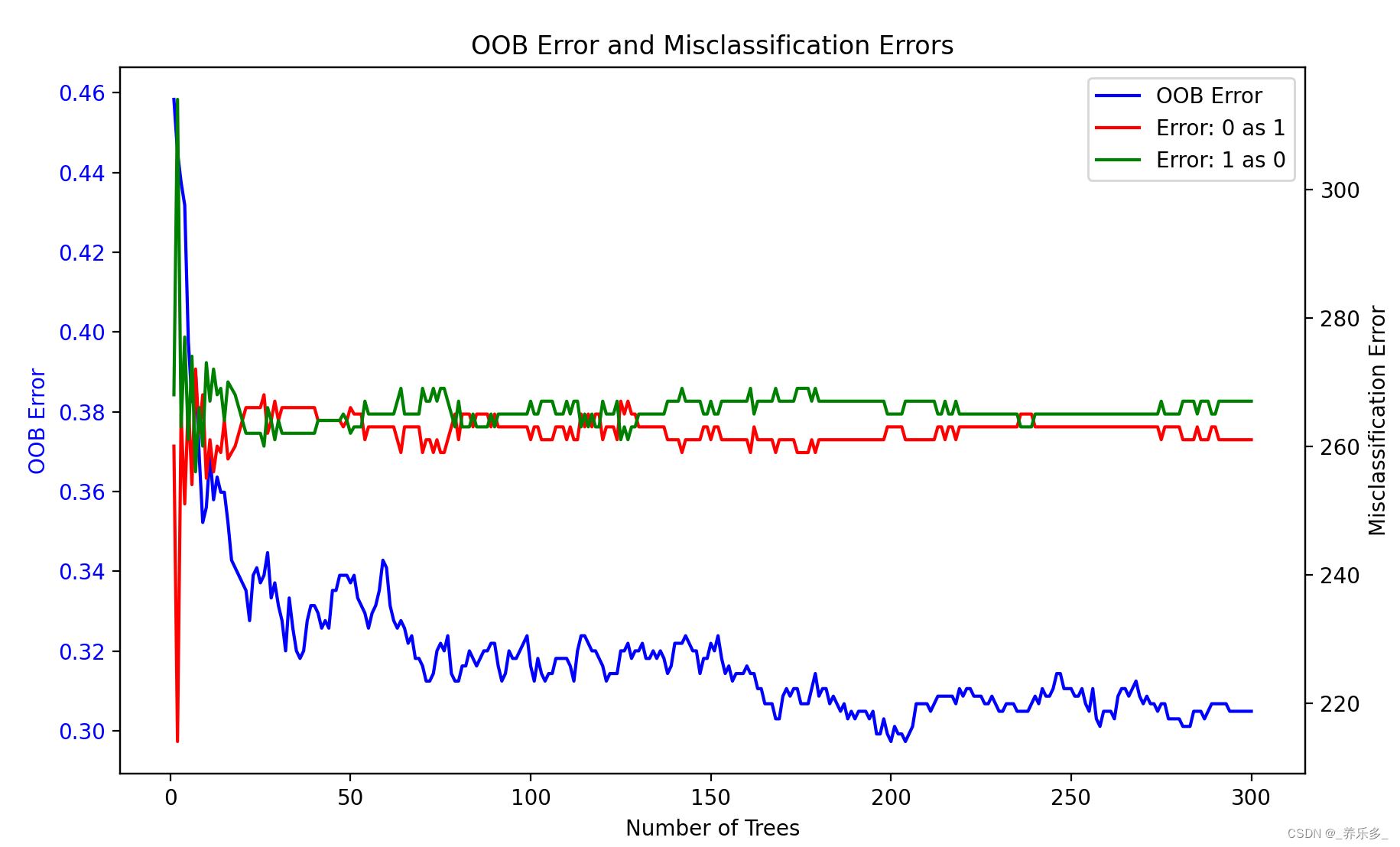

Random Forest is a powerful machine learning algorithm commonly used for classification and regression tasks. It consists of multiple decision trees whose predictions are combined through ensemble learning. In this blog, we will explore how a random forest classifier performs with different numbers of decision trees, and draw the corresponding charts for visual analysis. We track three quantities: the OOB error, the error from misclassifying class 0 as class 1, and the error from misclassifying class 1 as class 0.

Datasets and Models

First, we use an example dataset for the experiments. It contains two features and two classes, with a total of 100 samples. We will use the scikit-learn library to build and train a random forest classifier and evaluate its performance.
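The setup described above can be sketched as follows. The dataset itself is not shown here, so this sketch assumes a synthetic stand-in generated with `make_classification`. The values of `random_state` and `n_estimators` are illustrative choices, not taken from the original experiment.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Assumption: a synthetic stand-in for the example dataset
# (100 samples, 2 features, 2 classes).
X, y = make_classification(
    n_samples=100,
    n_features=2,
    n_informative=2,
    n_redundant=0,
    n_clusters_per_class=1,
    n_classes=2,
    random_state=0,
)

# oob_score=True asks scikit-learn to compute the out-of-bag accuracy
# during fitting; it is exposed afterwards as clf.oob_score_.
clf = RandomForestClassifier(n_estimators=100, oob_score=True, random_state=0)
clf.fit(X, y)

print("data shape:", X.shape)
print("OOB accuracy:", clf.oob_score_)
```

Setting `oob_score=True` at construction time matters here, because the later experiments read the OOB estimate directly from the fitted model instead of holding out a separate test set.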

Variation of OOB error

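We want to observe how the out-of-bag (OOB) error of a random forest classifier varies with the number of decision trees. Each tree is trained on a bootstrap sample of the training set, so some samples are left out of that tree's training data. The OOB error is the error rate obtained when each sample is predicted using only the trees that did not see it during training, which gives an estimate of model performance without a separate validation set.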
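One way to trace this curve is sketched below. It is not necessarily the procedure used for the charts in this post. It assumes the same kind of synthetic data as a stand-in for the example dataset, and `oob_error_curve` is a hypothetical helper name introduced for illustration. With `warm_start=True`, each call to `fit` adds trees to the existing forest instead of retraining from scratch, and the OOB error is read as `1 - oob_score_`.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier


def oob_error_curve(X, y, tree_counts, seed=0):
    """Return a list of (n_trees, oob_error) pairs (hypothetical helper)."""
    # warm_start=True keeps the already-fitted trees and only adds new ones
    # when n_estimators is increased between calls to fit().
    clf = RandomForestClassifier(warm_start=True, oob_score=True, random_state=seed)
    curve = []
    for n in tree_counts:
        clf.set_params(n_estimators=n)
        clf.fit(X, y)
        # oob_score_ is the OOB accuracy, so the OOB error is its complement.
        curve.append((n, 1.0 - clf.oob_score_))
    return curve


# Assumption: synthetic stand-in data (100 samples, 2 features, 2 classes).
X, y = make_classification(
    n_samples=100, n_features=2, n_informative=2, n_redundant=0,
    n_clusters_per_class=1, n_classes=2, random_state=0,
)

# Start at 20 trees: with very few trees, some samples may never be
# out-of-bag, which makes the OOB estimate unreliable.
for n_trees, err in oob_error_curve(X, y, range(20, 201, 20)):
    print(f"{n_trees:4d} trees -> OOB error {err:.3f}")
```

The resulting pairs can be passed directly to a plotting library to draw the OOB error against the number of trees.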