Foreword

Everyone should be familiar with synchronized and ReentrantLock. ReentrantLock is one of the most commonly used local (in-process) locks in Java, and when it first appeared its performance was much better than that of synchronized. Later Java releases optimized synchronized heavily, and since JDK 1.6 the two perform about the same; synchronized, which releases the lock automatically, is often even the more convenient choice.

When asked in an interview how to choose between synchronized and ReentrantLock, many people blurt out that synchronized should be used. Yet when I ask why as the interviewer, very few can answer. Moon wants to say that this is not necessarily the right choice. If you only care about the question in the title, you can skip straight to the end; this is not clickbait~

Synchronized use

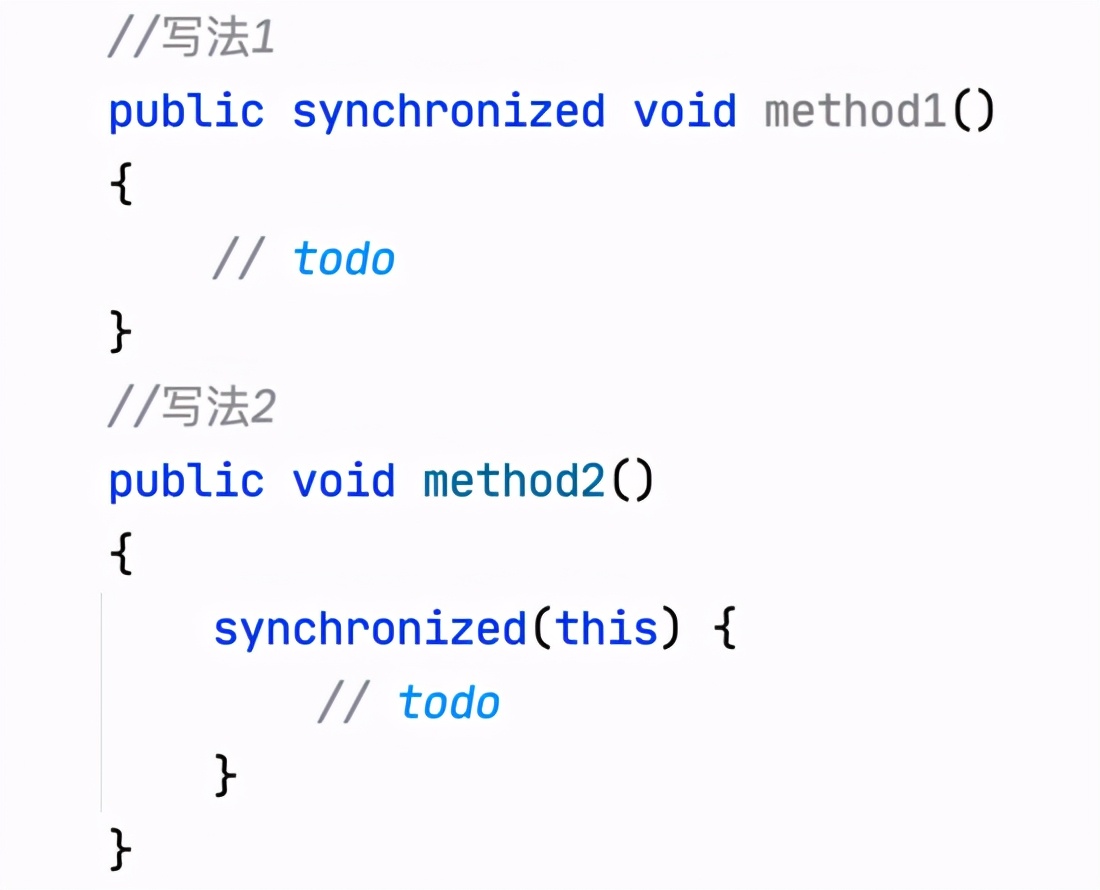

Using synchronized in Java code is very simple:

- 1. Put it directly on a method (an instance method locks the current object, this; a static method locks the Class object of the current class)

- 2. Put it on a code block (the object named in the parentheses is locked)
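The two forms can be sketched as follows. This is a minimal illustration, not code from the article: the class SyncCounter, its fields, and the hammer driver are hypothetical names chosen for the example.

```java
class SyncCounter {
    private static int instances = 0;          // guarded by SyncCounter.class
    private final Object lock = new Object();  // dedicated lock object for the block form
    private int hits = 0;                      // guarded by this
    private int misses = 0;                    // guarded by lock

    SyncCounter() {
        register();
    }

    // synchronized static method: locks the Class object, SyncCounter.class.
    static synchronized void register() {
        instances++;
    }

    static synchronized int instances() {
        return instances;
    }

    // synchronized instance method: locks the current instance (this).
    public synchronized void hit() {
        hits++;
    }

    public synchronized int hits() {
        return hits;
    }

    // synchronized block: locks the object named in the parentheses.
    public void miss() {
        synchronized (lock) {
            misses++;
        }
    }

    public int misses() {
        synchronized (lock) {
            return misses;
        }
    }

    // Hypothetical driver: several threads update one counter concurrently.
    static int[] hammer(int threads, int perThread) {
        SyncCounter counter = new SyncCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.hit();
                    counter.miss();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] { counter.hits(), counter.misses() };
    }
}
```

Because every update happens while holding the matching lock, no increments are lost however the threads interleave.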

What happens to a synchronized block of code while the program is running?

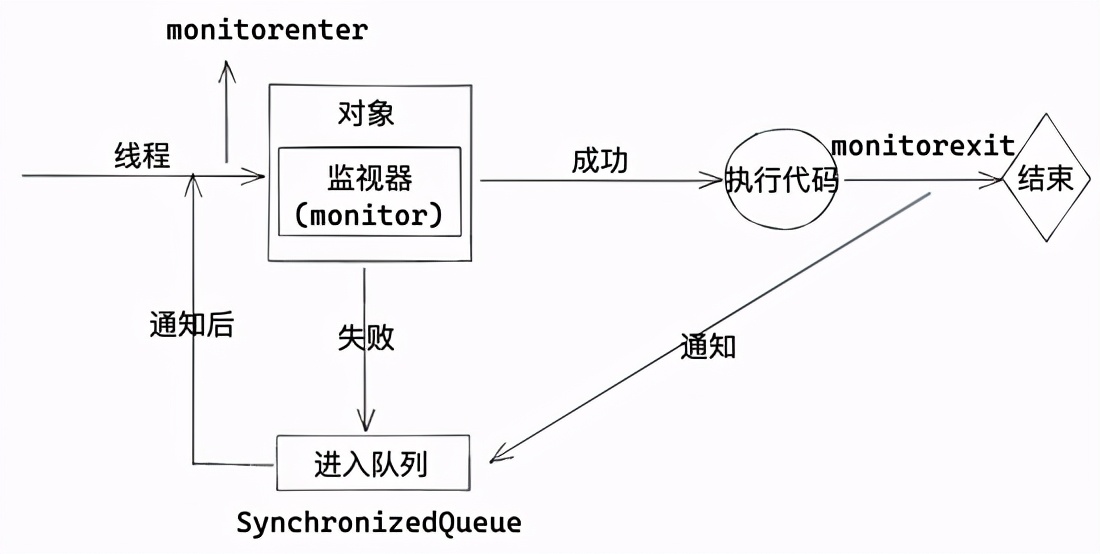

Take a look at the picture above.

When multiple threads are running, each thread first tries to grab the object's monitor. Every object has exactly one monitor, and it works like a key: the thread that grabs it has the right to execute the current code block.

Threads that fail to grab it enter a waiting queue (called SynchronizedQueue in this article) and block until the current thread finishes executing and releases the lock.

Finally, once the current thread has finished, the waiting threads are notified, leave the queue, and compete for the monitor again, repeating the process.

From the JVM's perspective, the monitorenter and monitorexit bytecode instructions mark the entry to and the exit from a synchronized block. A synchronized method is marked with the ACC_SYNCHRONIZED flag instead.

SynchronizedQueue (SynchronousQueue):

The queue described here behaves like the JDK class java.util.concurrent.SynchronousQueue. It is a special queue with no storage; its job is to maintain a set of waiting threads. Each insert operation must wait for a matching remove operation by another thread, and each remove operation must wait for an insert by another thread. There are therefore never any elements in the queue: its capacity is 0, and strictly speaking it is not a container. Because it has no capacity, peek can never return an element (it always returns null); an element exists only at the moment it is handed over. Note that HotSpot's monitor keeps blocked threads in its own internal entry list rather than in this class; the comparison is only about the direct-handoff idea.

For example:

When drinking, first pouring the wine into a decanter and then from the decanter into the glass is a normal queue.

Pouring the wine straight from the bottle into the glass is the SynchronizedQueue.

The example should make the idea clear: the advantage is that the element is delivered directly, with no intermediate step in between.
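The direct-handoff behaviour can be tried out with the JDK class java.util.concurrent.SynchronousQueue (note the spelling). The sketch below is only an illustration; the class HandoffDemo and its method names are made up for this example.

```java
import java.util.concurrent.SynchronousQueue;

class HandoffDemo {
    // One thread puts, another takes: the element goes straight from hand to hand.
    static String handoff() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        Thread producer = new Thread(() -> {
            try {
                queue.put("wine"); // blocks until some thread takes the element
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            String received = queue.take(); // blocks until some thread puts an element
            producer.join();
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // offer() does not wait: with no consumer already waiting, nothing is stored.
    static boolean offerWithoutConsumer() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        return queue.offer("wine");
    }

    // The queue never holds elements, so peek() has nothing to show.
    static boolean looksEmpty() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        return queue.peek() == null && queue.size() == 0;
    }
}
```

The put call returns only once take has received the element, which is the "pour straight into the glass" behaviour from the example.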

The details: the lock upgrade process

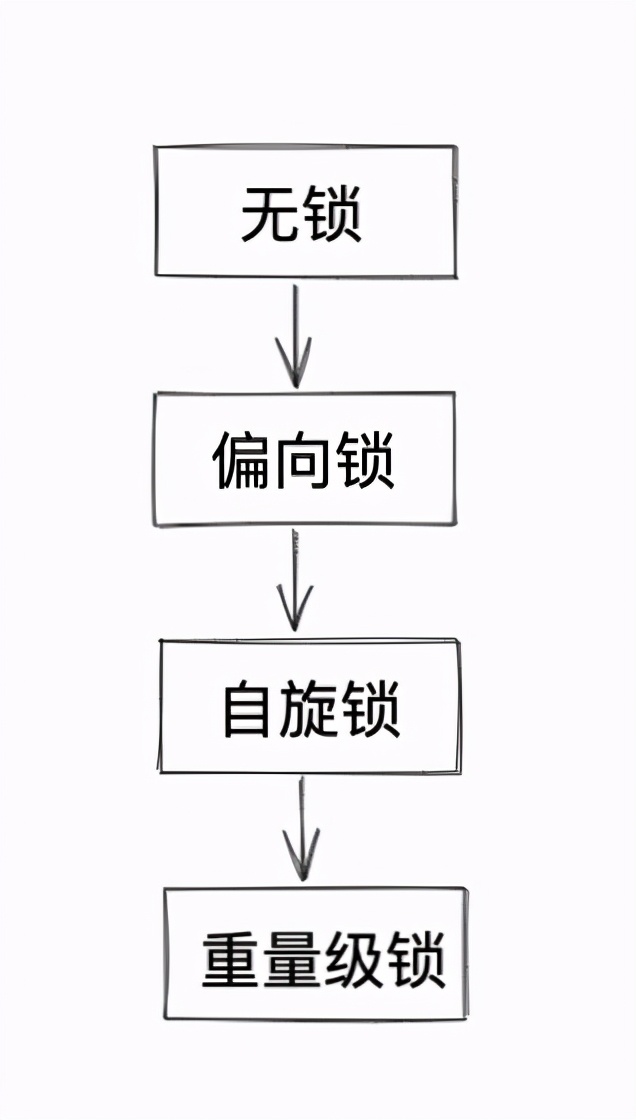

Before JDK 1.6, synchronized was always a heavyweight lock. Again, a picture first.

This is why synchronized was a heavyweight lock: every lock request goes to the operating system, which supplies the underlying mutex, and a thread that cannot get the lock has to be suspended and later woken up by the OS. Each of those steps involves a switch between user mode and kernel mode, which is very time-consuming.

But in JDK 1.6 a lot of optimizations were made at the JVM level, which is what we usually call the lock upgrade process.

Here is the lock upgrade process in brief:

- No lock: the object starts out unlocked.

- Biased lock: it is like sticking a label on the object (my thread id is stored in the object header). The next time I come in and find that the label is mine, I can keep using the object without any further locking.

- Spin lock (lightweight lock): imagine a toilet with only one stall and someone already inside. You want to use it, so you can only wander around and wait until that person comes out. The spinning uses CAS to guarantee atomicity; I won't go into the details of CAS here.

- Heavyweight lock: the lock is requested from the operating system, and the other threads are suspended and wait in a queue.
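To make the "wander and wait" idea concrete, here is a minimal CAS-based spin lock. It is a sketch under stated assumptions, not what HotSpot does internally (the JVM works on the object header rather than on a Java field); the class SpinLock and its count driver are hypothetical. The lock is deliberately not reentrant, and Thread.onSpinWait requires Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicReference;

class SpinLock {
    // null means "free"; otherwise the thread that currently owns the lock.
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread me = Thread.currentThread();
        // Keep retrying the CAS until the owner slot is empty and we claim it.
        while (!owner.compareAndSet(null, me)) {
            Thread.onSpinWait(); // hint to the CPU that this is a busy-wait loop
        }
    }

    void unlock() {
        // Only the owner may release; the CAS fails for anyone else.
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread is not the owner");
        }
    }

    // Hypothetical driver: several threads increment a plain int under the lock.
    static int count(int threads, int perThread) {
        SpinLock lock = new SpinLock();
        int[] total = { 0 };
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        total[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return total[0];
    }
}
```

Spinning burns CPU while it waits, which is why it only pays off when the lock is held briefly; under long waits, blocking the thread is cheaper.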

When does the lock upgrade happen?

- Biased lock: when a thread first acquires the lock, it is upgraded from lock-free to a biased lock.

- Spin lock: when a second thread competes for the lock, the biased lock is upgraded to a spin lock; picture a while(true) loop that keeps retrying.

- Heavyweight lock: when the contention reaches a certain number of attempts or lasts longer than a certain time, the lock is upgraded to a heavyweight lock.

Where is the lock information recorded?

This picture shows the data structure of the mark word in the object header. The lock information is stored here, and the picture shows clearly how it changes as the lock is upgraded. In effect the object is tagged with a binary value in which each bit pattern represents one lock state.
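The "each bit pattern is a state" idea can be sketched as a small decoder. This assumes the classic 64-bit HotSpot layout from the JDK 8 era, where the lowest two bits are the lock bits and the third bit is the biased flag; later JDKs changed this (biased locking was deprecated and disabled by default in JDK 15). Plain Java code cannot read an object's mark word directly, so the long passed in is a hypothetical raw value, and the class MarkWordBits is made up for this example.

```java
class MarkWordBits {
    enum LockState { UNLOCKED, BIASED, LIGHTWEIGHT, HEAVYWEIGHT, GC_MARKED }

    // Decodes the lock state from the three lowest bits of a raw mark word value.
    static LockState decode(long markWord) {
        int lockBits = (int) (markWord & 0b11);
        switch (lockBits) {
            case 0b00:
                return LockState.LIGHTWEIGHT;  // pointer to a lock record on the owner's stack
            case 0b10:
                return LockState.HEAVYWEIGHT;  // pointer to an inflated monitor
            case 0b11:
                return LockState.GC_MARKED;    // used by the garbage collector
            default:
                // Lock bits 01: the biased flag tells unlocked and biased apart.
                boolean biased = (markWord & 0b100) != 0;
                return biased ? LockState.BIASED : LockState.UNLOCKED;
        }
    }
}
```

The lightweight state corresponds to what this article calls the spin lock.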

Since synchronized has a lock upgrade, is there a lock downgrade?

This question has a lot to do with our topic.

HotSpot does have lock downgrade, but it only happens during a stop-the-world (STW) pause, and only the garbage collection thread can observe it. In other words, a lock is never downgraded during normal use; it is downgraded only during GC.

So, do you see the answer to the title question now? Haha, let's keep going.

Use of ReentrantLock

Using ReentrantLock is also very simple. The difference from synchronized is that you must release the lock manually. To guarantee that it is always released, it is normally used together with try/finally.
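A minimal sketch of the lock / try / finally pattern follows. The class LockedCounter and its methods are hypothetical names for illustration; only ReentrantLock itself comes from the JDK.

```java
import java.util.concurrent.locks.ReentrantLock;

class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private int value = 0; // guarded by lock

    int increment() {
        lock.lock(); // acquire before the try, so finally only runs when we hold the lock
        try {
            return ++value;
        } finally {
            lock.unlock(); // always released, even if the body throws
        }
    }

    // Simulates a failure inside the critical section.
    void failWhileLocked() {
        lock.lock();
        try {
            throw new IllegalStateException("simulated failure");
        } finally {
            lock.unlock();
        }
    }

    boolean isLocked() {
        return lock.isLocked();
    }
}
```

Without the finally block, the exception in failWhileLocked would leave the lock held forever and every later caller would block.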

Principle of ReentrantLock

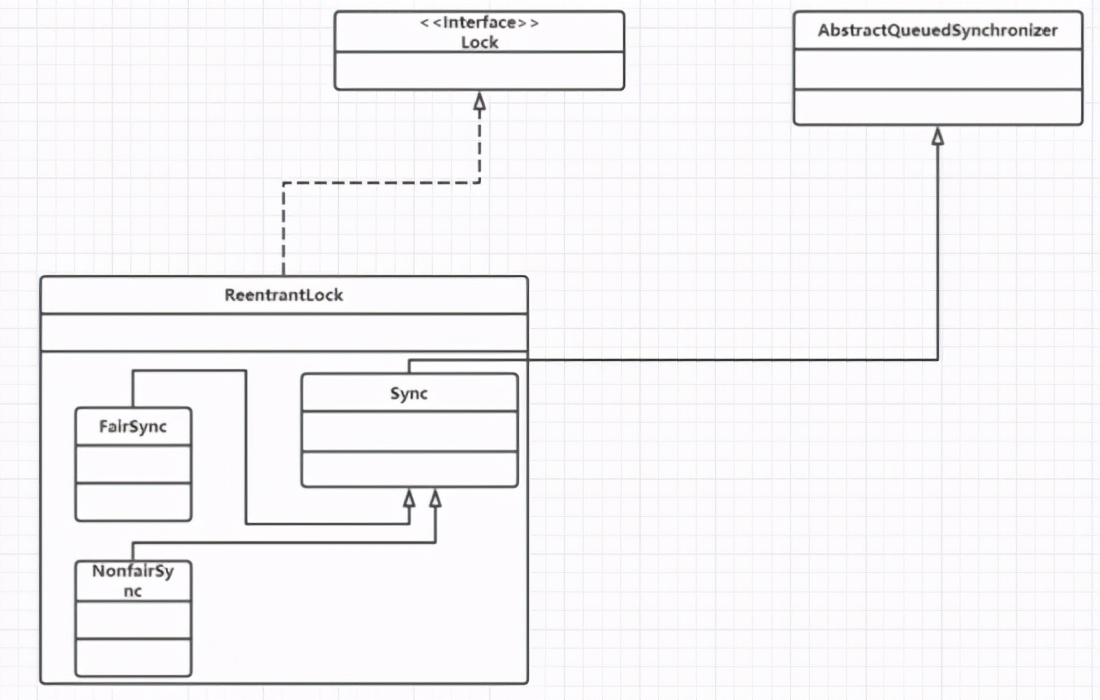

ReentrantLock means reentrant lock: the thread that holds the lock can acquire it again without blocking. You cannot talk about ReentrantLock without talking about AQS, because it is built on top of AQS.

ReentrantLock has two modes: fair and unfair. The default is unfair.

- In fair mode, waiting threads acquire the lock strictly in the order in which they were queued.

- In unfair mode, a newly arriving thread may jump the queue and take the lock ahead of threads that are already waiting.
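The mode is chosen through the ReentrantLock constructor, and reentrancy can be observed through getHoldCount. The wrapper class FairnessDemo below is a hypothetical name used only for this sketch.

```java
import java.util.concurrent.locks.ReentrantLock;

class FairnessDemo {
    // The no-argument constructor creates an unfair lock.
    static ReentrantLock unfairLock() {
        return new ReentrantLock();
    }

    // Passing true asks for the fair (queue-order) policy.
    static ReentrantLock fairLock() {
        return new ReentrantLock(true);
    }

    // The same thread locks twice; the hold count records the re-entry.
    static int nestedHoldCount() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        try {
            lock.lock(); // re-entry by the owner does not block
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }
}
```

Each lock call must be paired with its own unlock; the lock only becomes free when the hold count drops back to zero.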

This is the structure diagram of ReentrantLock. It is actually very simple, because the main implementation is delegated to AQS, so let's focus on AQS below.

AQS

AQS (AbstractQueuedSynchronizer): AQS can be understood as a framework for implementing locks.

A simplified view of the process:

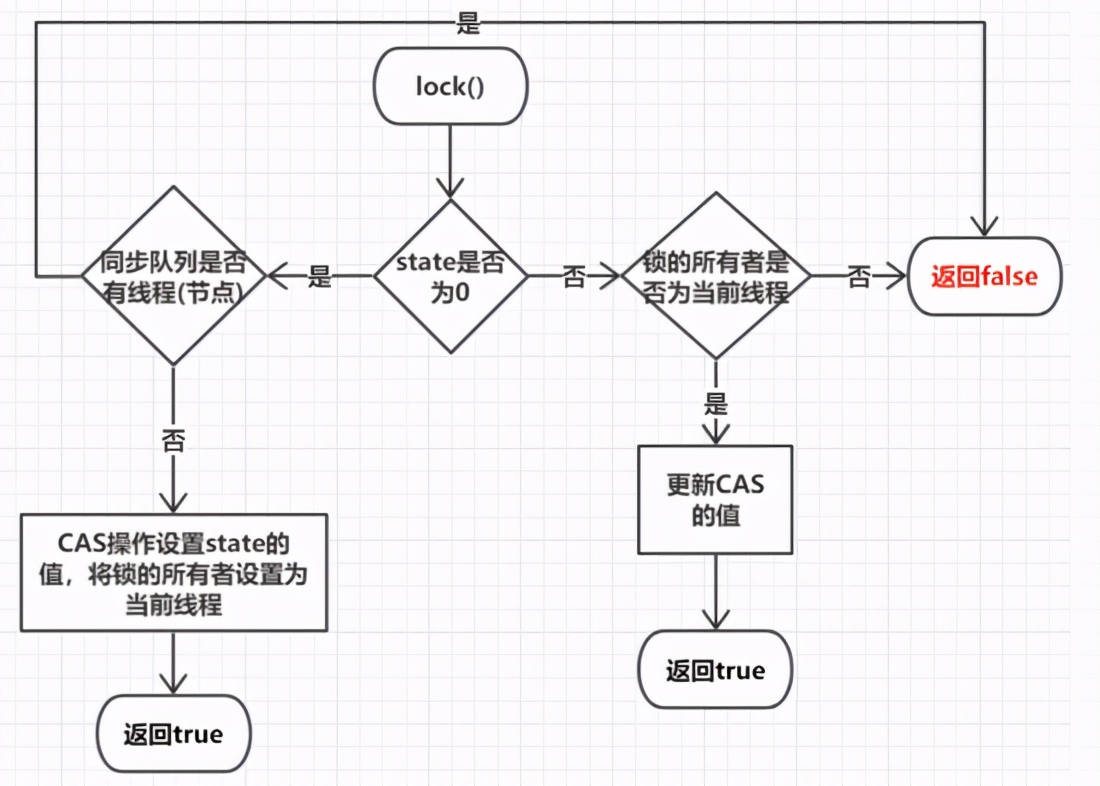

Fair lock:

- Step 1: Read the value of state. If state == 0, the lock is not held by any thread, so go to step 2. If state != 0, the lock is currently held, so go to step 3.

- Step 2: Check whether any threads are waiting in the queue. If there are none, set the owner of the lock to the current thread and update state. If there are, join the queue.

- Step 3: Check whether the owner of the lock is the current thread. If so, update the value of state (state + 1, a reentrant acquire). If not, the thread enters the queue and waits.

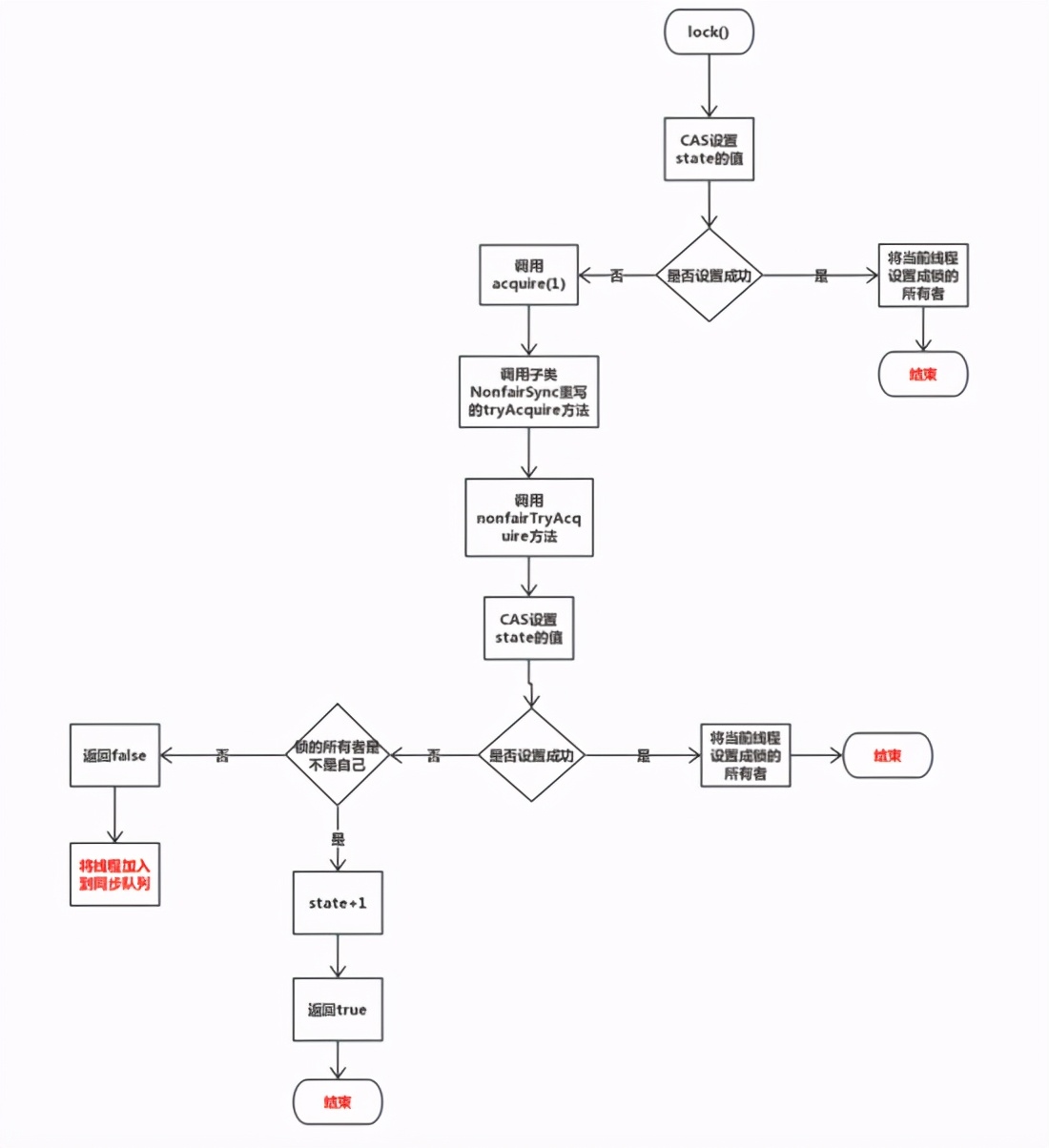

Unfair lock:

- Step 1: Read the value of state. If state == 0, the lock is not held by any thread, so try to set the current thread as the lock holder with a CAS. If state is not 0 or the CAS fails, the lock is occupied, so go to the next step.

- Step 2: Read the value of state again. If it is now 0, the holder has just released the lock, so try to set the lock holder to the current thread. If it is not 0, check whether the lock holder is the current thread; if so, increase state by 1. If the lock still cannot be acquired, enter the queue and wait.
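The fair and unfair steps above can be sketched on top of AbstractQueuedSynchronizer itself. This is a simplified illustration, not the JDK's ReentrantLock source: the class SketchLock, its fair flag, and the count driver are hypothetical, and the two unfair attempts are merged into a single CAS inside tryAcquire. The AQS methods used (getState, setState, compareAndSetState, hasQueuedPredecessors, acquire, release) are real.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class SketchLock {
    private static final class Sync extends AbstractQueuedSynchronizer {
        private final boolean fair;

        Sync(boolean fair) {
            this.fair = fair;
        }

        @Override
        protected boolean tryAcquire(int acquires) {
            Thread current = Thread.currentThread();
            int c = getState();
            if (c == 0) {
                // Fair: take a free lock only if nobody is queued ahead of us.
                // Unfair: skip the queue check and go straight for the CAS.
                if ((!fair || !hasQueuedPredecessors()) && compareAndSetState(0, acquires)) {
                    setExclusiveOwnerThread(current);
                    return true;
                }
            } else if (current == getExclusiveOwnerThread()) {
                setState(c + acquires); // reentrant acquire by the owner
                return true;
            }
            return false; // AQS will enqueue and park the thread
        }

        @Override
        protected boolean tryRelease(int releases) {
            if (Thread.currentThread() != getExclusiveOwnerThread()) {
                throw new IllegalMonitorStateException();
            }
            int c = getState() - releases;
            boolean free = (c == 0);
            if (free) {
                setExclusiveOwnerThread(null);
            }
            setState(c);
            return free; // true lets AQS wake the next queued thread
        }

        int holds() {
            return getExclusiveOwnerThread() == Thread.currentThread() ? getState() : 0;
        }
    }

    private final Sync sync;

    SketchLock(boolean fair) {
        sync = new Sync(fair);
    }

    void lock() { sync.acquire(1); }
    void unlock() { sync.release(1); }
    int holdCount() { return sync.holds(); }

    // Hypothetical driver: several threads increment a plain int under the lock.
    static int count(boolean fair, int threads, int perThread) {
        SketchLock lock = new SketchLock(fair);
        int[] total = { 0 };
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try { total[0]++; } finally { lock.unlock(); }
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return total[0];
    }
}
```

The only difference between the two modes in this sketch is the hasQueuedPredecessors check; queueing, parking, and waking are all handled by AQS.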

After reading the above, I believe you have a clearer picture of AQS, so let's look at some of the finer details.

AQS keeps the synchronization state in an int field called state (0 means unlocked; for ReentrantLock a positive value means locked and counts how many times the holder has re-entered). It exposes getState, setState, and compareAndSetState to read and update the state, so that the state is atomically set to a new value only if it currently holds the expected value.

When a thread fails to acquire the lock, AQS manages it through a doubly linked synchronization queue: the thread is wrapped in a node and added to the end of the queue.

This is the code that defines the head and tail nodes. Both are declared volatile so that updates are visible to other threads. AQS performs the enqueue and dequeue operations by modifying these head and tail nodes.
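How enqueue and dequeue work through the head and tail references can be sketched as follows. This is a simplified model, not the AQS source: it uses AtomicReference as a stand-in for AQS's volatile fields plus CAS, it leaves out node states, cancellation, and parking, and the names SyncQueueSketch, Node, enqueue, and dequeue are chosen for this example.

```java
import java.util.concurrent.atomic.AtomicReference;

class SyncQueueSketch {
    static final class Node {
        final Thread thread;   // the waiting thread; null for the dummy head
        volatile Node prev;
        volatile Node next;

        Node(Thread thread) {
            this.thread = thread;
        }
    }

    private final AtomicReference<Node> head = new AtomicReference<>();
    private final AtomicReference<Node> tail = new AtomicReference<>();

    // Appends a waiter at the tail; safe to call from many threads at once.
    Node enqueue(Thread waiter) {
        Node node = new Node(waiter);
        for (;;) {
            Node t = tail.get();
            if (t == null) {
                // Queue not initialized yet: install a dummy head, then retry.
                Node dummy = new Node(null);
                if (head.compareAndSet(null, dummy)) {
                    tail.set(dummy);
                }
            } else {
                node.prev = t;
                if (tail.compareAndSet(t, node)) { // only one thread wins this CAS
                    t.next = node;
                    return node;
                }
            }
        }
    }

    // Removes the first waiter by making its node the new head.
    // Meant to be called only by the thread that has just acquired the lock,
    // so a plain set is enough here.
    Thread dequeue() {
        Node h = head.get();
        if (h == null || h.next == null) {
            return null; // nobody is waiting
        }
        Node first = h.next;
        head.set(first);
        first.prev = null;
        h.next = null; // unlink the old head so it can be collected
        return first.thread;
    }
}
```

Linking prev before the CAS and next after it means the queue can always be walked backwards from the tail even while another thread is in the middle of enqueueing.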

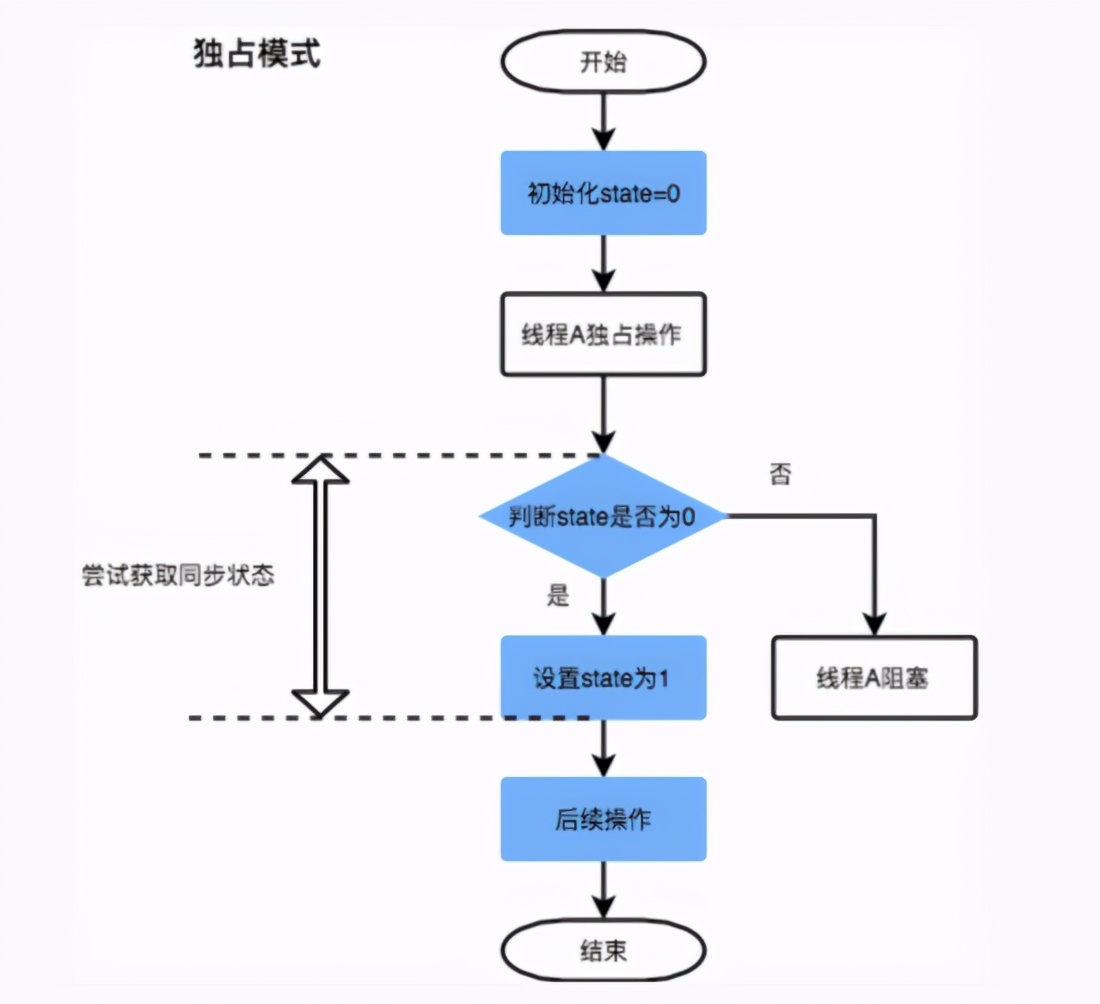

With AQS it is not necessarily the case that only one thread can hold the lock, so AQS distinguishes between exclusive mode and shared mode. The ReentrantLock in this article uses exclusive mode: with multiple threads, only one acquires the lock at a time.

The exclusive mode process is relatively simple. Whether another thread already holds the lock is decided by whether state is 0: if it is not 0, the thread blocks; if it is 0, the thread takes the lock and continues executing the subsequent code.

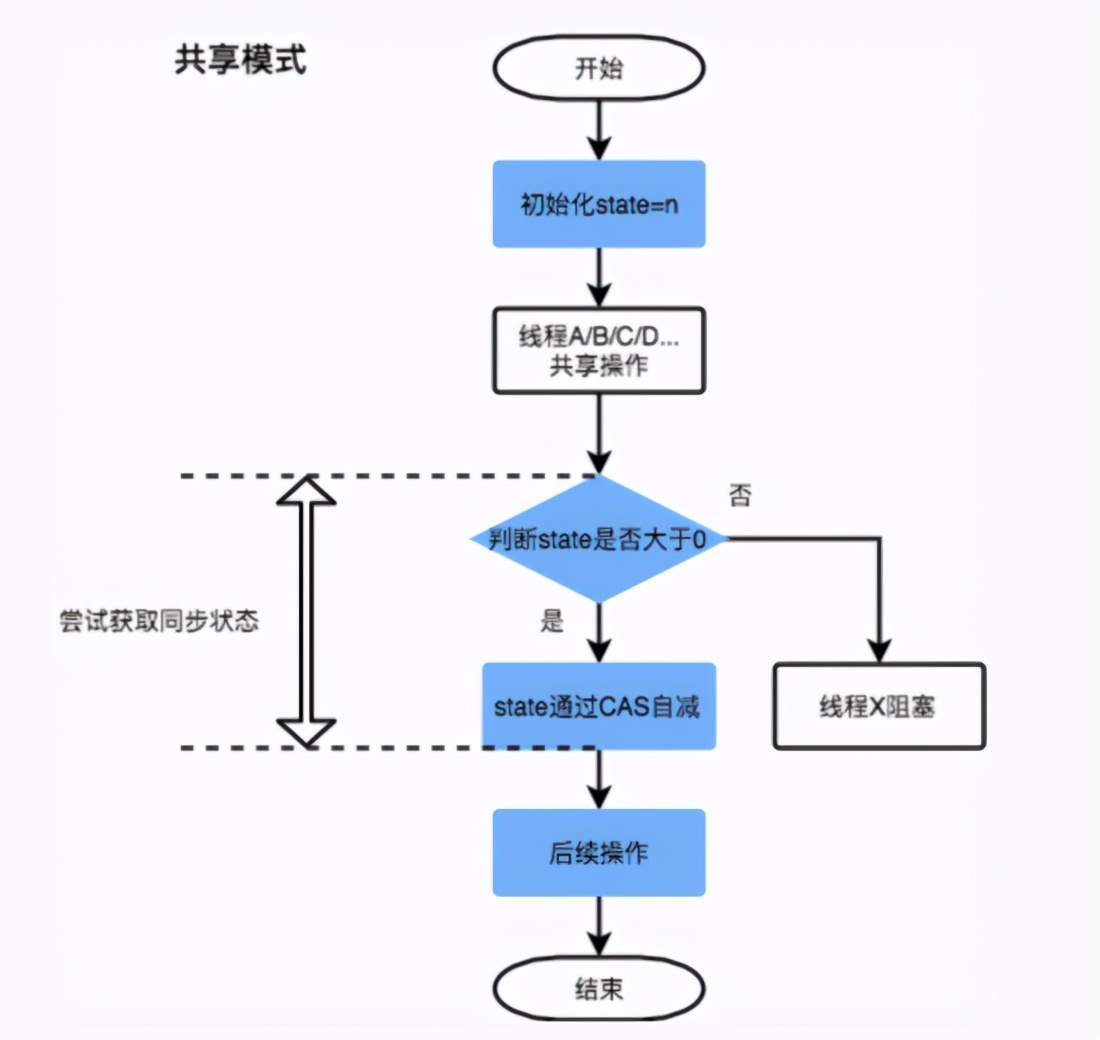

In shared mode, whether a thread can acquire the lock is decided by whether state is greater than 0. If it is not greater than 0, the thread blocks. If it is greater than 0, state is decremented with an atomic CAS operation and the thread continues executing the subsequent code.
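The shared mode described above can be sketched as a small permit gate built on AQS, similar in spirit to a semaphore. This is an illustration under stated assumptions rather than JDK source: the class PermitGate and its method names are hypothetical, while tryAcquireShared, tryReleaseShared, acquireShared, and releaseShared are the real AQS hooks.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

class PermitGate {
    private static final class Sync extends AbstractQueuedSynchronizer {
        Sync(int permits) {
            setState(permits); // state holds the number of permits still available
        }

        @Override
        protected int tryAcquireShared(int acquires) {
            for (;;) {
                int available = getState();
                int remaining = available - acquires;
                // A negative result tells AQS to queue and block the caller;
                // otherwise the CAS must succeed before the permit is ours.
                if (remaining < 0 || compareAndSetState(available, remaining)) {
                    return remaining;
                }
            }
        }

        @Override
        protected boolean tryReleaseShared(int releases) {
            for (;;) {
                int current = getState();
                if (compareAndSetState(current, current + releases)) {
                    return true; // lets AQS wake up queued threads
                }
            }
        }

        boolean tryOnce() {
            return tryAcquireShared(1) >= 0;
        }

        int permits() {
            return getState();
        }
    }

    private final Sync sync;

    PermitGate(int permits) {
        sync = new Sync(permits);
    }

    void acquire() { sync.acquireShared(1); }      // blocks while no permit is left
    boolean tryAcquire() { return sync.tryOnce(); } // never blocks
    void release() { sync.releaseShared(1); }
    int available() { return sync.permits(); }
}
```

With two permits, two threads can be inside at the same time, which is exactly what exclusive mode forbids.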

The difference between ReentrantLock and Synchronized

- The core difference between ReentrantLock and synchronized is that synchronized suits low contention. Once a synchronized lock has been upgraded to a heavyweight lock, there is no way to undo that during normal use, which means every acquisition then has to request the lock from the operating system. ReentrantLock, in contrast, mainly provides the ability to block: under high concurrency it suspends waiting threads to reduce contention and improve concurrency. So the answer to the title of this article is obvious.

- synchronized is a keyword implemented at the JVM level, while ReentrantLock is implemented in the Java API.

- synchronized is an implicit lock that is released automatically, while ReentrantLock is an explicit lock that must be released manually.

- ReentrantLock lets a thread that is waiting for the lock respond to an interrupt (via lockInterruptibly); synchronized does not. A thread waiting on synchronized keeps waiting and cannot respond to the interrupt.

- ReentrantLock can query the lock state (for example isLocked, and tryLock to attempt the lock without blocking), while synchronized offers only Thread.holdsLock for the current thread.
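The last two differences can be tried out with the sketch below. The class LockFeaturesDemo and its method names are hypothetical; lockInterruptibly, tryLock, isLocked, isHeldByCurrentThread, and getHoldCount are real ReentrantLock methods.

```java
import java.util.concurrent.locks.ReentrantLock;

class LockFeaturesDemo {
    // Returns true if a thread waiting in lockInterruptibly() reacted to interrupt().
    static boolean waiterRespondsToInterrupt() {
        ReentrantLock lock = new ReentrantLock();
        boolean[] interrupted = { false };
        lock.lock(); // the calling thread holds the lock, so the waiter cannot get it
        try {
            Thread waiter = new Thread(() -> {
                try {
                    lock.lockInterruptibly(); // waits, but stays responsive to interrupts
                    lock.unlock();            // not expected to be reached in this demo
                } catch (InterruptedException e) {
                    interrupted[0] = true;    // the waiter gave up instead of waiting forever
                }
            });
            waiter.start();
            waiter.interrupt();
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            lock.unlock();
        }
        return interrupted[0];
    }

    // Reads the lock state without trying to acquire the lock.
    static String describe(ReentrantLock lock) {
        return "locked=" + lock.isLocked()
                + ", mine=" + lock.isHeldByCurrentThread()
                + ", holds=" + lock.getHoldCount();
    }
}
```

A thread blocked on entering a synchronized block has no equivalent of lockInterruptibly: the interrupt flag is set, but the thread keeps waiting for the monitor.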

The answer to the title question

In fact, the answer is the first item in the previous list, which is also the core difference. Once synchronized has been upgraded to a heavyweight lock, it cannot be downgraded under normal circumstances, whereas ReentrantLock improves performance by blocking, and its design supports heavily multi-threaded scenarios.