pytest has a rich plugin architecture, more than 800 external plugins and an active community. Plugins are published on PyPI under names of the form "pytest-*".

This article demonstrates several plugins that each have more than 200 stars on GitHub.

Plugin library address: http://plugincompat.herokuapp.com/

1. pytest-html : used to generate HTML reports

A test report is an essential part of a complete test run, but pytest's built-in output is fairly bare. pytest-html fills this gap by generating a clear HTML report.

Install:

pip install -U pytest-html

Example:

    # test_sample.py
    import pytest
    # import time


    # Function under test
    def add(x, y):
        # time.sleep(1)
        return x + y


    # Test class
    class TestLearning:
        data = [
            [3, 4, 7],
            [-3, 4, 1],
            [3, -4, -1],
            [-3, -4, 7],  # fails: add(-3, -4) returns -7
        ]

        @pytest.mark.parametrize("data", data)
        def test_add(self, data):
            assert add(data[0], data[1]) == data[2]
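As an optional variant of the example above, the parameters can be unpacked into named arguments instead of being passed as a single list. This is a sketch rather than part of the original example: the names x, y, expected and CASES are chosen here for illustration, and the last case uses the correct value -7 so that every case passes. With scalar arguments pytest builds the test IDs from the values (for example test_add[3-4-7]) instead of data0, data1 and so on, which tends to read better in a report.

```python
import pytest


def add(x, y):
    return x + y


# Each tuple is (x, y, expected); names are illustrative.
CASES = [
    (3, 4, 7),
    (-3, 4, 1),
    (3, -4, -1),
    (-3, -4, -7),
]


@pytest.mark.parametrize("x, y, expected", CASES)
def test_add(x, y, expected):
    # pytest calls this once per tuple in CASES.
    assert add(x, y) == expected
```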

run:

E:\workspace-py\Pytest>pytest test_sample.py --html=report/index.html

========================================================================== test session starts ==========================================================================

platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0

rootdir: E:\workspace-py\Pytest

plugins: allure-pytest-2.8.18, cov-2.10.1, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0

collected 4 items

test_sample.py ...F [100%]

=============================================================================== FAILURES ================================================================================

_____________________________________________________________________ TestLearning.test_add[data3] ______________________________________________________________________

self = <test_sample.TestLearning object at 0x00000000036B6AC8>, data = [-3, -4, 7]

@pytest.mark.parametrize("data", data)

def test_add(self, data):

> assert add(data[0], data[1]) == data[2]

E assert -7 == 7

E + where -7 = add(-3, -4)

test_sample.py:20: AssertionError

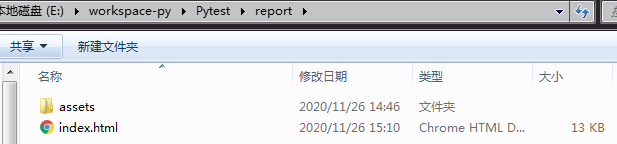

------------------------------------------------- generated html file: file://E:\workspace-py\Pytest\report\index.html --------------------------------------------------

======================================================================== short test summary info ========================================================================

FAILED test_sample.py::TestLearning::test_add[data3] - assert -7 == 7

====================================================================== 1 failed, 3 passed in 0.14s ======================================================================

After running, an html file and css style folder assets will be produced, and you can view the clear test results by opening the html with a browser.

A later article will cover allure-python, a plugin that produces clearer and more attractive test reports.

2. pytest-cov : used to generate coverage reports

In unit testing, code coverage is often used as an indicator of test quality, and sometimes even as a measure of whether a testing task is complete.

Install:

pip install -U pytest-cov

run:

    E:\workspace-py\Pytest>pytest --cov=.
    ========================================================================== test session starts ==========================================================================
    platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0
    rootdir: E:\workspace-py\Pytest
    plugins: allure-pytest-2.8.18, cov-2.10.1, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0
    collected 4 items

    test_sample.py .... [100%]

    ----------- coverage: platform win32, python 3.7.3-final-0 -----------
    Name             Stmts   Miss  Cover
    ------------------------------------
    conftest.py          5      3    40%
    test_sample.py       7      0   100%
    ------------------------------------
    TOTAL               12      3    75%

    =========================================================================== 4 passed in 0.06s ===========================================================================
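The Cover column can be checked by hand: it is the share of statements that were executed. The sketch below recomputes the figures from the report above. coverage_percent is a hypothetical helper written for this illustration, and its simple rounding is an assumption that may differ from coverage.py in edge cases.

```python
def coverage_percent(statements, missed):
    """Percentage of statements executed, rounded to a whole number."""
    return round(100 * (statements - missed) / statements)


# (Stmts, Miss) per file, copied from the coverage report.
rows = {"conftest.py": (5, 3), "test_sample.py": (7, 0)}

per_file = {name: coverage_percent(s, m) for name, (s, m) in rows.items()}

# The TOTAL row is computed from the summed counts, not by averaging percentages.
total_statements = sum(s for s, _ in rows.values())
total_missed = sum(m for _, m in rows.values())
total = coverage_percent(total_statements, total_missed)

print(per_file)  # {'conftest.py': 40, 'test_sample.py': 100}
print(total)     # 75
```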

3. pytest-xdist : multi-process and multi-platform execution

Speed up the run by distributing tests across multiple CPUs. Use -n NUMCPUS to set a specific number of worker processes, or -n auto to start one worker per detected CPU.

Install:

pip install -U pytest-xdist

Example:

    # test_sample.py
    import pytest
    import time


    # Function under test
    def add(x, y):
        time.sleep(3)
        return x + y


    # Test class
    class TestAdd:
        def test_first(self):
            assert add(3, 4) == 7

        def test_second(self):
            assert add(-3, 4) == 1

        def test_three(self):
            assert add(3, -4) == -1

        def test_four(self):
            assert add(-3, -4) == 7

    E:\workspace-py\Pytest>pytest test_sample.py
    ========================================================================== test session starts ==========================================================================
    platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0
    rootdir: E:\workspace-py\Pytest
    plugins: allure-pytest-2.8.18, cov-2.10.1, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0
    collected 4 items

    test_sample.py .... [100%]

    ========================================================================== 4 passed in 12.05s ===========================================================================

    E:\workspace-py\Pytest>pytest test_sample.py -n auto
    ========================================================================== test session starts ==========================================================================
    platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0
    rootdir: E:\workspace-py\Pytest
    plugins: allure-pytest-2.8.18, assume-2.3.3, cov-2.10.1, forked-1.3.0, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0
    gw0 [4] / gw1 [4] / gw2 [4] / gw3 [4]
    .... [100%]
    =========================================================================== 4 passed in 5.35s ===========================================================================

    E:\workspace-py\Pytest>pytest test_sample.py -n 2
    ========================================================================== test session starts ==========================================================================
    platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0
    rootdir: E:\workspace-py\Pytest
    plugins: allure-pytest-2.8.18, assume-2.3.3, cov-2.10.1, forked-1.3.0, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0
    gw0 [4] / gw1 [4]
    .... [100%]
    =========================================================================== 4 passed in 7.65s ===========================================================================

The three runs above use, in order, no parallelism, four workers (-n auto on this machine) and two workers. The run time drops from 12.05s to 5.35s and 7.65s respectively, so running tests in parallel clearly shortens the test run.
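The timings can be compared with a rough lower bound. The sketch below assumes the four tests take 3 seconds each and are spread evenly over the workers; ideal_wall_time is a hypothetical helper, and the even split is an assumption about scheduling rather than a statement about how pytest-xdist distributes work.

```python
import math


def ideal_wall_time(num_tests, seconds_per_test, workers):
    """Lower bound on run time when equal-length tests are split evenly."""
    # The busiest worker runs ceil(num_tests / workers) tests back to back.
    return math.ceil(num_tests / workers) * seconds_per_test


for workers in (1, 4, 2):
    print(workers, ideal_wall_time(4, 3, workers))
# 1 12
# 4 3
# 2 6
```

The measured 5.35s and 7.65s sit above the ideal 3s and 6s. The gap is plausibly the cost of starting the worker processes, which matters more for a short test run than for a long one.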

4. pytest-rerunfailures : rerun failed tests

Tests sometimes fail intermittently for reasons outside the code under test, such as network fluctuations in interface tests or plug-ins that are not refreshed in time in web tests. Re-running the failed tests helps rule out these transient failures.

Install:

pip install -U pytest-rerunfailures

run:

E:\workspace-py\Pytest>pytest test_sample.py --reruns 3

========================================================================== test session starts ==========================================================================

platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0

rootdir: E:\workspace-py\Pytest

plugins: allure-pytest-2.8.18, cov-2.10.1, html-3.0.0, rerunfailures-9.1.1, xdist-2.1.0

collected 4 items

test_sample.py ...R [100%]R [100%]R [100%]F [100%]

=============================================================================== FAILURES ================================================================================

___________________________________________________________________________ TestAdd.test_four ___________________________________________________________________________

self = <test_sample.TestAdd object at 0x00000000045FBF98>

def test_four(self):

> assert add(-3, -4) == 7

E assert -7 == 7

E + where -7 = add(-3, -4)

test_sample.py:22: AssertionError

======================================================================== short test summary info ========================================================================

FAILED test_sample.py::TestAdd::test_four - assert -7 == 7

================================================================= 1 failed, 3 passed, 3 rerun in 0.20s ==================================================================

To wait between reruns, use the --reruns-delay parameter to specify the delay in seconds.

To rerun only on specific errors, use the --only-rerun parameter with a regular expression. It can be given multiple times to match several kinds of error.

pytest --reruns 5 --reruns-delay 1 --only-rerun AssertionError --only-rerun ValueError
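The following sketch illustrates, in plain Python, the behaviour these options describe: retry on failure up to a limit, pause between attempts, and retry only when the error text matches one of the given patterns. It is not the plugin's implementation; run_with_reruns, its parameters and the way the error text is built are all assumptions made for illustration.

```python
import re
import time


def run_with_reruns(test_fn, reruns=0, delay=0.0, only_rerun=()):
    """Call test_fn, retrying up to `reruns` extra times on failure.

    Hypothetical helper, not pytest-rerunfailures' code.
    Returns the number of reruns that were needed.
    """
    attempt = 0
    while True:
        try:
            test_fn()
            return attempt
        except Exception as exc:
            error_text = f"{type(exc).__name__}: {exc}"
            # If patterns are given, only matching errors qualify for a rerun.
            matches = not only_rerun or any(
                re.search(pattern, error_text) for pattern in only_rerun
            )
            if attempt >= reruns or not matches:
                raise
            attempt += 1
            time.sleep(delay)


calls = {"count": 0}


def flaky_test():
    # Fails on the first two calls and passes on the third.
    calls["count"] += 1
    assert calls["count"] >= 3, "transient failure"


reruns_used = run_with_reruns(
    flaky_test, reruns=5, delay=0.0, only_rerun=["AssertionError"]
)
print(reruns_used)  # 2
```

An error that matches none of the patterns is raised immediately, which mirrors the idea of restricting reruns to known transient failures.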

If you just want to mark a single test for automatic rerun when it fails, you can add pytest.mark.flaky() and specify the retry count and delay interval.

    import random

    import pytest


    @pytest.mark.flaky(reruns=5, reruns_delay=2)
    def test_example():
        assert random.choice([True, False])

5. pytest-randomly : run tests in random order

Running tests in a random order makes it easier to expose hidden defects in the tests themselves, such as tests that depend on state left behind by another test, and exercises the system in more varied ways.

Install:

pip install -U pytest-randomly

run:

E:\workspace-py\Pytest>pytest test_sample.py

========================================================================== test session starts ==========================================================================

platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0

Using --randomly-seed=3687888105

rootdir: E:\workspace-py\Pytest

plugins: allure-pytest-2.8.18, cov-2.10.1, html-3.0.0, randomly-3.5.0, rerunfailures-9.1.1, xdist-2.1.0

collected 4 items

test_sample.py F... [100%]

=============================================================================== FAILURES ================================================================================

___________________________________________________________________________ TestAdd.test_four ___________________________________________________________________________

self = <test_sample.TestAdd object at 0x000000000567AD68>

def test_four(self):

> assert add(-3, -4) == 7

E assert -7 == 7

E + where -7 = add(-3, -4)

test_sample.py:22: AssertionError

======================================================================== short test summary info ========================================================================

FAILED test_sample.py::TestAdd::test_four - assert -7 == 7

====================================================================== 1 failed, 3 passed in 0.13s ======================================================================

E:\workspace-py\Pytest>pytest test_sample.py

========================================================================== test session starts ==========================================================================

platform win32 -- Python 3.7.3, pytest-6.0.2, py-1.9.0, pluggy-0.13.0

Using --randomly-seed=3064422675

rootdir: E:\workspace-py\Pytest

plugins: allure-pytest-2.8.18, assume-2.3.3, cov-2.10.1, forked-1.3.0, html-3.0.0, randomly-3.5.0, rerunfailures-9.1.1, xdist-2.1.0

collected 4 items

test_sample.py ...F [100%]

=============================================================================== FAILURES ================================================================================

___________________________________________________________________________ TestAdd.test_four ___________________________________________________________________________

self = <test_sample.TestAdd object at 0x00000000145EA940>

def test_four(self):

> assert add(-3, -4) == 7

E assert -7 == 7

E + where -7 = add(-3, -4)

test_sample.py:22: AssertionError

======================================================================== short test summary info ========================================================================

FAILED test_sample.py::TestAdd::test_four - assert -7 == 7

====================================================================== 1 failed, 3 passed in 0.12s ======================================================================

Random ordering is enabled as soon as the plugin is installed, but it can be disabled for a run with the flag below (if you never need it, it is simpler not to install the plugin).

pytest -p no:randomly

To reproduce a particular order, pass its seed with the --randomly-seed parameter. The special value last reuses the seed from the previous run.

pytest --randomly-seed=4321

pytest --randomly-seed=last
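The reason a seed reproduces an order can be shown with the standard library alone. This is only an illustration of seeded shuffling, not pytest-randomly's actual algorithm; shuffled_order is a hypothetical helper and the test names are taken from the example file.

```python
import random


def shuffled_order(test_names, seed):
    """Return a copy of test_names shuffled deterministically by seed."""
    rng = random.Random(seed)  # private generator, so global state is untouched
    order = list(test_names)
    rng.shuffle(order)
    return order


tests = ["test_first", "test_second", "test_three", "test_four"]

first = shuffled_order(tests, seed=4321)
again = shuffled_order(tests, seed=4321)

print(first == again)  # True: the same seed gives the same order
```

This is why the seed printed at the top of a failing run is worth keeping: passing it back reproduces the order that exposed the failure.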

6. Other active plugins

There are many other plugins, some tailored to particular frameworks and some providing compatibility with other testing tools. They are not demonstrated here, only listed briefly:

pytest-django : For testing Django applications (Python web framework).

pytest-flask : For testing Flask applications (Python web framework).

pytest-splinter : Integrates with the Splinter web automation testing tool.

pytest-selenium : Integrates with the Selenium web automation testing tool.

pytest-testinfra : Test the actual state of servers configured by management tools like Salt, Ansible, Puppet, Chef, etc.

pytest-mock : Provides a mock fixture that creates stand-in objects to replace individual dependencies in tests.

pytest-factoryboy : combined with the factoryboy tool to generate a variety of data.

pytest-qt : Supports writing tests for PyQt5 and PySide2 applications.

pytest-asyncio : For testing asynchronous code with pytest.

pytest-bdd : Implements a subset of the Gherkin language to automate project requirements testing and facilitate behavior-driven development.

pytest-watch : Provides a set of shortcut CLI tools for pytest.

pytest-testmon : Automatically selects and re-runs only the tests affected by recent changes.

pytest-assume : Used to allow multiple failures per test.

pytest-ordering : Ordering functionality for test cases.

pytest-sugar : Displays failures and errors immediately and shows a progress bar.

pytest-repeat : Runs a single test or multiple tests repeatedly, a specified number of times.