Some time ago, we reported that alongside the recent official launch of the GPT-4 language model, ChatGPT gained new plug-ins: a web browser and a code interpreter. These give ChatGPT the ability to use tools, access the network, and run calculations.

Since the update, global media feedback indicates that GPT-4 does perform much better than GPT-3.5. To the surprise of AI followers worldwide, that ability appears to have evolved further in recent days.

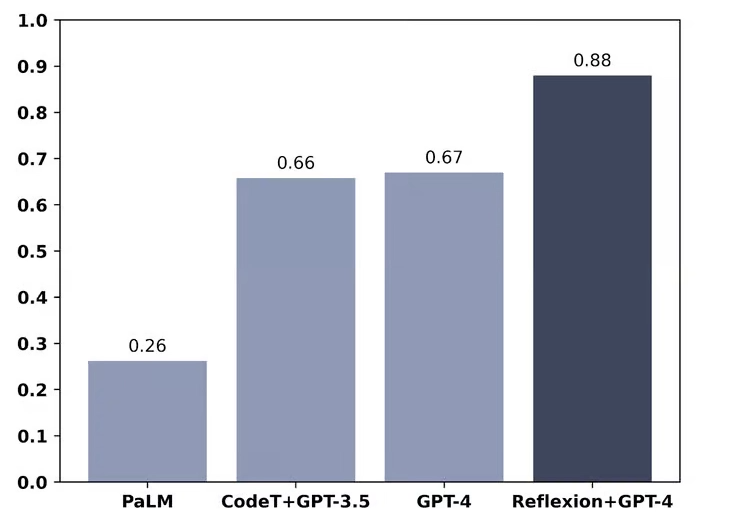

(GPT-4 accuracy: 88% after reflection, 67% before)

A former Google Brain research engineer found that GPT-4 can critique its own output in a reasonable way, which suggests that the model already has some capacity for self-reflection and further accelerates its evolution. According to media tests, the success rate of GPT-4 with reflection is 30% higher than that of GPT-4 without it.

The increasingly rapid development of GPT-4 may heighten users' concerns about personal information security and raise questions about whether ChatGPT is controllable.

According to the latest research report from the cybersecurity company Darktrace, attackers are using generative AI such as ChatGPT to craft social engineering attacks with more text, more punctuation, and longer sentences, and the volume of such attacks has increased by 135%.

In addition, according to media reports, within 20 days of Samsung Electronics introducing the ChatGPT chatbot, three incidents involving ChatGPT occurred: two related to semiconductor equipment and one to the content of a meeting.

To prevent similar incidents, Samsung is drawing up protective measures. If such incidents occur again, Samsung may cut off access to ChatGPT.

Many countries have also taken measures to address these rapidly growing security issues. According to People's Daily Online, the Italian data protection agency announced on March 31 that its ban on and investigation of ChatGPT took "immediate effect". The agency said the model raised privacy concerns.

There have been concerns about the potential risks of AI, including its threat to jobs and the spread of misinformation and bias. Italy will not only block OpenAI's chatbot but also investigate whether it complies with the General Data Protection Regulation, the Italian data protection agency said.

In addition, the Irish Data Protection Commission said it is following up with Italian regulators to understand the basis for their action, in order to protect the information security of users in Ireland.

And just yesterday, according to media reports, Germany, France, and other countries may follow Italy's lead and block ChatGPT. The relevant German authority said it has contacted its Italian counterpart to discuss the findings of the investigation.

According to media reports, as multiple countries investigate information security issues related to ChatGPT, shares of C3.ai, a ChatGPT concept stock, continued to fall. The stock closed down 26.34% at 24.95 US dollars, for a market value of 2.8 billion US dollars, its largest intraday drop since listing.

For now, ChatGPT does have unique advantages, and its performance lead over competing products is large, but the problems caused by the inability to control it effectively are just as obvious. First, AI currently cannot guarantee a completely correct answer on the first attempt, so it may output wrong answers and cause misunderstandings. In addition, illegal uses cannot be strictly controlled, which lowers the cost of information security crimes and of spreading rumors on social media, and increases the burden of content review.

As OpenAI CEO Altman said in a previous interview, ChatGPT is still a very early AI. But precisely because it is a very early AI, it has more problems, and it should be kept under control throughout its large-scale training to ensure that it can be used safely and effectively. Otherwise, if it is left to develop unchecked, the situation may become worse in the future.