Pattern Recognition - Exam Review


Bayesian estimation

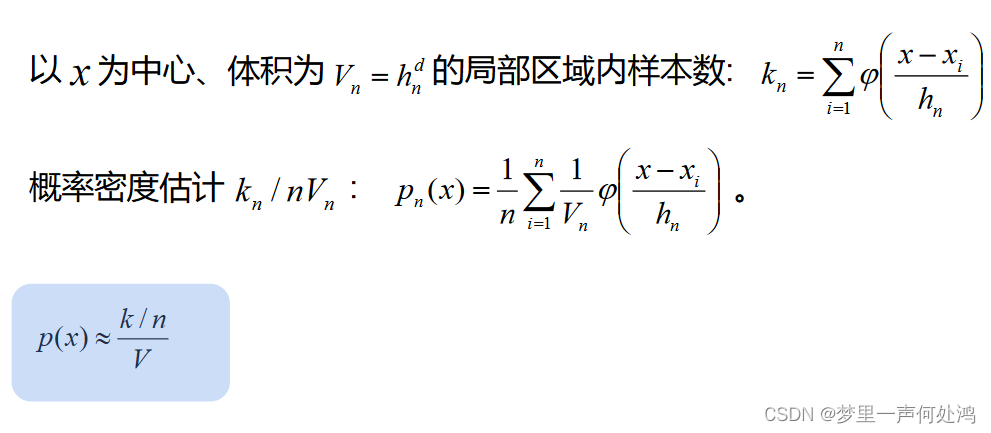

Using the Parzen window in mean shift

Initialization:

Choose an initial point as the center of the Parzen window.

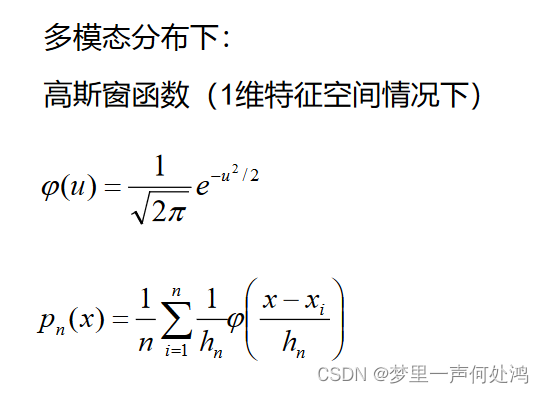

Choose a window function $K(u)$, usually a Gaussian. Let $h$ be the bandwidth parameter of the window function.

Kernel density estimation:

For each sample point $x_i$, calculate its distance to the target point $x$, i.e. $d(x, x_i)$.

Divide the distance $d(x, x_i)$ by the bandwidth parameter $h$ to get the normalized distance $u_i = \frac{d(x, x_i)}{h}$.

Substitute the normalized distance $u_i$ into the window function $K(u)$ to obtain the weight $w_i = K(u_i)$.

The value of the kernel density estimate at the target point $x$ is $\hat{f}_h(x) = \frac{1}{nh^d}\sum_{i=1}^{n} w_i$, where $n$ is the sample size and $d$ is the data dimension.

If a Gaussian is used as the window function, then $K(u) = \frac{1}{(2\pi)^{d/2}} e^{-\frac{1}{2}|u|^2}$, where $|u|$ is the magnitude of the normalized distance $u$.
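The kernel density estimate described above can be sketched in a few lines of plain Python. This is only a minimal illustration: the function names `gaussian_kernel` and `kde`, the Euclidean distance for $d(x, x_i)$, and the toy samples are assumptions, not part of the course material.

```python
import math

def gaussian_kernel(u_norm, d):
    """Gaussian window K(u) for a normalized distance |u| in d dimensions."""
    return math.exp(-0.5 * u_norm ** 2) / (2 * math.pi) ** (d / 2)

def kde(x, samples, h):
    """Parzen-window density estimate at point x with bandwidth h."""
    n, d = len(samples), len(x)
    total = 0.0
    for x_i in samples:
        u_i = math.dist(x, x_i) / h        # normalized distance u_i = d(x, x_i) / h
        total += gaussian_kernel(u_i, d)   # weight w_i = K(u_i)
    return total / (n * h ** d)            # (1 / (n h^d)) * sum of w_i

# Toy 1-D data (assumed): three samples, density evaluated at the middle one.
samples = [(0.0,), (1.0,), (2.0,)]
density_at_1 = kde((1.0,), samples, h=1.0)
```

A smaller `h` makes the estimate spikier around each sample, while a larger `h` smooths it out.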

Calculate the mean shift vector:

For each sample point, multiply the vector from the window center to that point, $x_i - x$, by the point's weight in the window (that is, its kernel value $w_i$). Sum these weighted vectors and divide by the sum of the weights to get the mean shift vector: $m(x) = \frac{\sum_{i=1}^{n} w_i (x_i - x)}{\sum_{i=1}^{n} w_i}$.

Shift window:

Move the center of the Parzen window by the mean shift vector calculated in the previous step. Repeat the steps above until the center of the Parzen window is stable or the preset number of iterations is reached, at which point the algorithm is judged to have converged. Output the final Parzen window center as the clustering result of the algorithm.
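The whole loop (weights, mean shift vector, window shift, convergence check) can be sketched as follows. This is a minimal sketch under stated assumptions: a Gaussian window, Euclidean distance, and the hypothetical names `mean_shift_mode`, `tol`, and `max_iter`. The constant factor of the Gaussian is dropped because it cancels when dividing by the sum of the weights.

```python
import math

def mean_shift_mode(start, samples, h, tol=1e-6, max_iter=500):
    """Shift a Parzen window from `start` until its center stops moving."""
    x = list(start)
    dims = range(len(x))
    for _ in range(max_iter):
        # Unnormalized Gaussian weights w_i for the normalized distances u_i.
        weights = [math.exp(-0.5 * (math.dist(x, s) / h) ** 2) for s in samples]
        total = sum(weights)
        # Mean shift vector: weighted average of the vectors (x_i - x).
        shift = [sum(w * (s[k] - x[k]) for w, s in zip(weights, samples)) / total
                 for k in dims]
        x = [x[k] + shift[k] for k in dims]   # move the window center
        if math.hypot(*shift) < tol:          # center is stable: converged
            break
    return x

# Toy data (assumed): four corners of a square plus its center.
samples = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0), (1.0, 1.0)]
mode = mean_shift_mode((0.2, 0.5), samples, h=1.0)
```

With this symmetric toy data the window drifts toward the center of the square. In a real clustering run the loop would be started from many points, and starting points that end at the same center would be grouped into one cluster.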

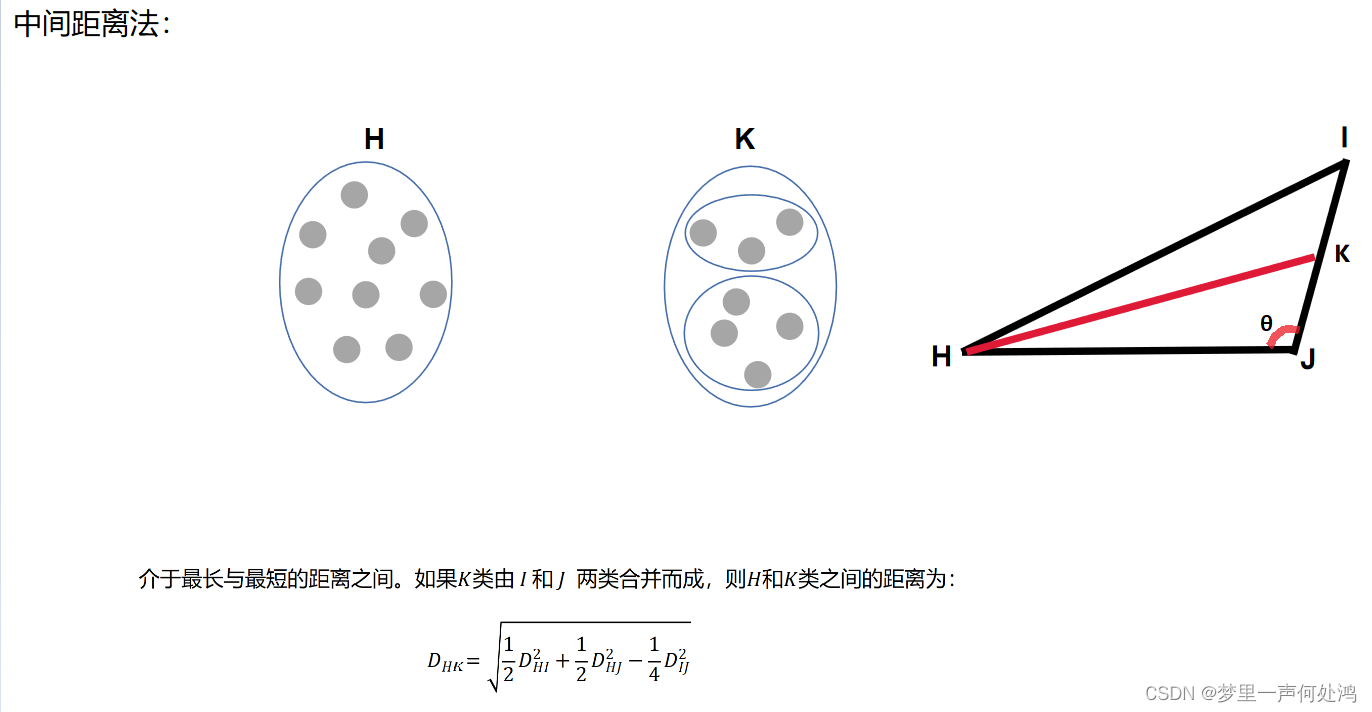

Middle distance method

The teacher said that the derivation of this formula will be on the exam. Here $K$ is the midpoint of $IJ$, so $JK = \frac{1}{2}JI$, and the derivation is as follows:

In $\triangle HJK$, apply the law of cosines to $\angle HJI$, i.e. $\theta$:

$$\cos\theta = \frac{HJ^2 + JK^2 - HK^2}{2HJ \times JK} = \frac{HJ^2 + \frac{1}{4}JI^2 - HK^2}{HJ \times JI} \qquad (1)$$

In $\triangle HJI$, apply the law of cosines to the same angle $\angle HJI$, i.e. $\theta$:

$$\cos\theta = \frac{HJ^2 + JI^2 - HI^2}{2HJ \times JI} \qquad (2)$$

Both expressions equal $\cos\theta$, so formula (1) and formula (2) can be set equal:

$$\frac{HJ^2 + \frac{1}{4}JI^2 - HK^2}{HJ \times JI} = \frac{HJ^2 + JI^2 - HI^2}{2HJ \times JI}$$

Solving for $HK$ gives:

$$HK = \sqrt{\frac{1}{2}HI^2 + \frac{1}{2}HJ^2 - \frac{1}{4}IJ^2}$$
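A quick numerical check can help when memorizing the result. The sketch below compares the formula with the distance computed directly from coordinates; the triangle coordinates and the function name `median_length` are made up for illustration.

```python
import math

def median_length(hi, hj, ij):
    """Length HK from H to the midpoint K of IJ, via the derived formula."""
    return math.sqrt(0.5 * hi ** 2 + 0.5 * hj ** 2 - 0.25 * ij ** 2)

# Arbitrary triangle (assumed coordinates).
H, I, J = (1.0, 4.0), (0.0, 0.0), (6.0, 0.0)
K = ((I[0] + J[0]) / 2, (I[1] + J[1]) / 2)   # midpoint of IJ

direct = math.dist(H, K)                      # HK measured directly
from_formula = median_length(math.dist(H, I), math.dist(H, J), math.dist(I, J))
```

For this triangle both values are $\sqrt{20}$, since $\frac{1}{2}\cdot 17 + \frac{1}{2}\cdot 41 - \frac{1}{4}\cdot 36 = 20$.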

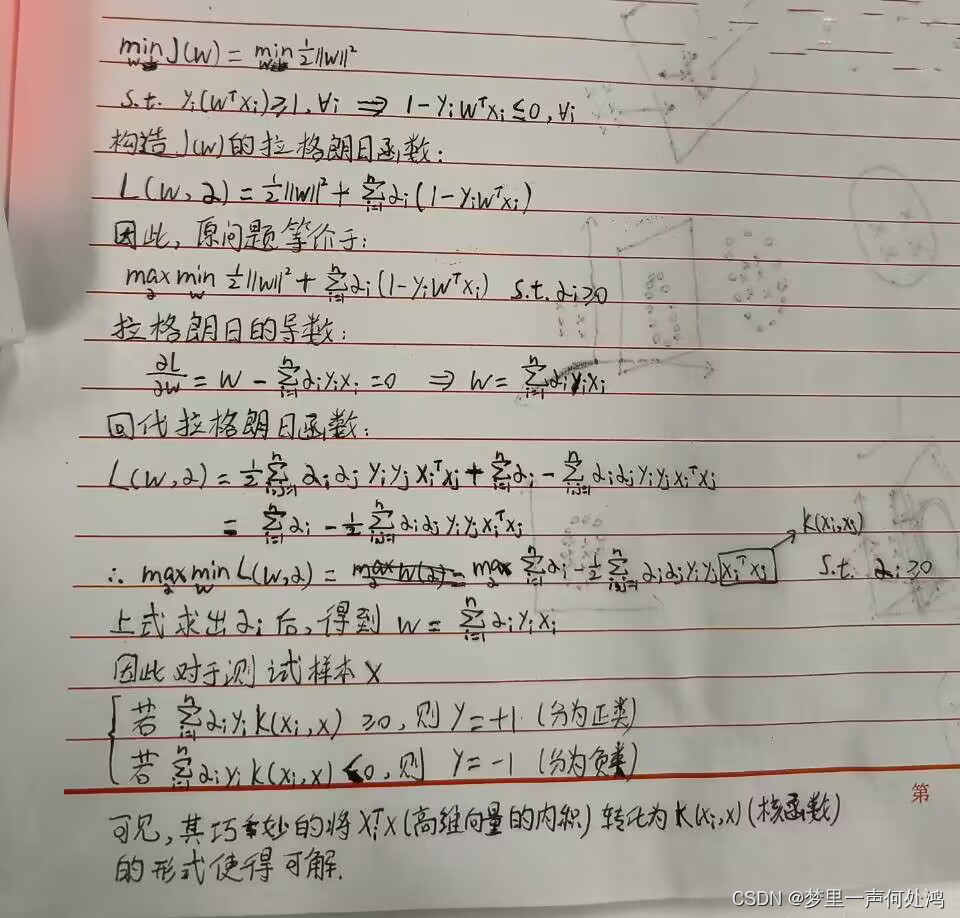

Dual transformation in SVM

This is our final exam question for this semester, and the teacher revealed it in advance. It is also similar to the above derivation, but it is simpler.

That is, if the decision function is $w^Tx_i$ instead of $w^Tx_i + b$, derive the dual problem. This actually makes the problem simpler, because there is no need to take the derivative with respect to $b$ and substitute back. The solution is as follows: