Contents

- 1 Introduction

- 2 About Global Tensor

- 2.1 Basic Guarantee of OneFlow Distributed Global Perspective

- 2.2 SBP automatic conversion

- 2.3 to_global method

- 2.4 GlobalTensor class code tracking

- 2.5 How to do the execution test of Global Ops

- 3 Summary

- 4 Reference Links

1 Introduction

To simplify distributed training, OneFlow introduces the concept of the Global View. Under the global view, distributed training can be programmed as if it ran on a single machine with a single GPU. OneFlow achieves this abstraction with Placement, SBP, and SBP Signature, and Global Tensor is the kind of tensor that provides the abstraction the Global View requires. This article focuses on how a Global Tensor's global view maps to its physical view.

In addition, my five-month internship at OneFlow, which began in mid-November last year, has come to an end. At the end of this article, I give a brief summary of my work and what I gained during this period.

2 About Global Tensor

2.1 Basic Guarantee of OneFlow Distributed Global Perspective

This part corresponds to Section 3.1 of the OneFlow paper. Placement, SBP, and SBP Signature are the key mechanisms behind OneFlow's distributed global view, which makes distributed training as easy to use as training on a single machine with a single GPU. First, the Placement attribute of a Global Tensor specifies which physical devices the tensor is stored on; this concept is easy to understand. Let's focus on the design of SBP, the mathematical abstraction that maps a global tensor to its corresponding local tensors.

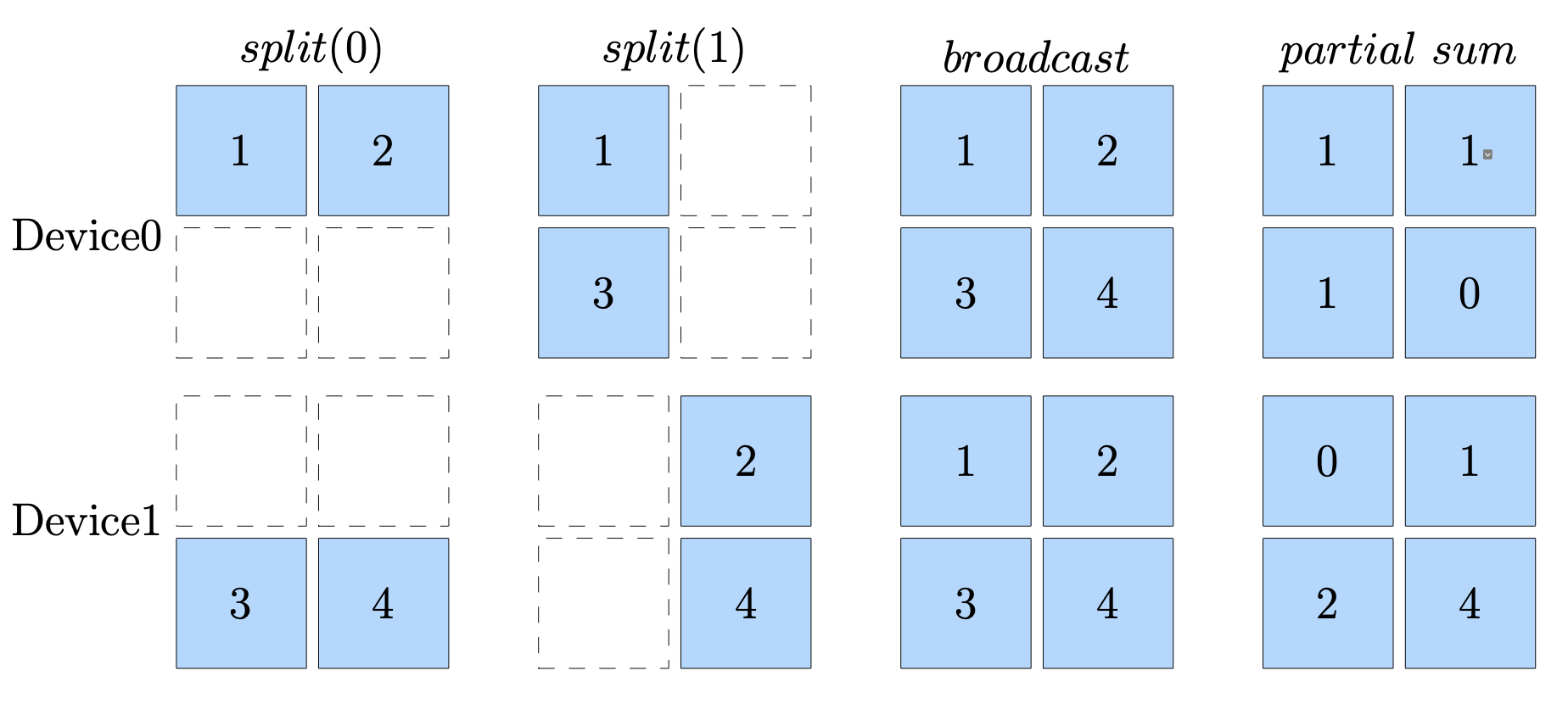

The figure above shows a 2x2 global tensor a mapped to 2 local tensors under each of the four SBP types: split(0), split(1), broadcast, and partial-sum. split(0) and split(1) divide the tensor along dimension 0 (rows) and dimension 1 (columns), broadcast copies the whole tensor to every device, and partial-sum keeps local tensors whose element-wise sum equals the global tensor.
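To make the four mappings concrete, here is a minimal sketch in plain Python (no OneFlow required) that splits the 2x2 tensor from the figure across 2 simulated devices and then reassembles it. The function names `to_local_parts` and `assemble_global` are hypothetical, and the partial-sum case assumes one particular decomposition (each device holds a/2); any decomposition with the right element-wise sum would be equally valid.

```python
# Framework-free sketch of the four SBP types for a 2x2 global tensor on
# 2 simulated devices. Tensors are nested lists; names are hypothetical.

def to_local_parts(t, sbp, n=2):
    """Global tensor -> list of n local tensors."""
    if sbp == "S(0)":                                   # split rows
        k = len(t) // n
        return [t[i * k:(i + 1) * k] for i in range(n)]
    if sbp == "S(1)":                                   # split columns
        k = len(t[0]) // n
        return [[row[i * k:(i + 1) * k] for row in t] for i in range(n)]
    if sbp == "B":                                      # full copy on every device
        return [[row[:] for row in t] for _ in range(n)]
    if sbp == "P(sum)":
        # Assumed decomposition: every device holds t / n, so the parts sum to t.
        return [[[v / n for v in row] for row in t] for _ in range(n)]
    raise ValueError(sbp)

def assemble_global(parts, sbp):
    """List of local tensors -> the global tensor they represent."""
    if sbp == "S(0)":
        return [row for p in parts for row in p]         # concatenate rows
    if sbp == "S(1)":                                   # concatenate columns
        return [[v for p in parts for v in p[r]] for r in range(len(parts[0]))]
    if sbp == "B":
        return parts[0]                                 # all copies are identical
    if sbp == "P(sum)":                                 # element-wise sum
        return [[sum(vals) for vals in zip(*rows)] for rows in zip(*parts)]
    raise ValueError(sbp)

a = [[1, 2], [3, 4]]
for sbp in ["S(0)", "S(1)", "B", "P(sum)"]:
    parts = to_local_parts(a, sbp)
    print(sbp, parts, assemble_global(parts, sbp) == a)  # each line ends with True
```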

For a Global Tensor on its own, we can set its SBP arbitrarily. For an Op with inputs and outputs, however, the SBPs of those inputs and outputs cannot be set arbitrarily, because an arbitrary combination may not agree with the operator's computation under the global view. This is why the concept of SBP Signature was introduced. Take MatMul as an example: given a data tensor X and a weight tensor W, the SBP of Y = X*W can be inferred from the SBPs of X and W. The table below lists the valid SBP combinations. We do not need to specify the SBP Signature explicitly; OneFlow derives it automatically.

| X | W | Y = X*W |
|---|---|---|
| S(0) | B | S(0) |
| B | S(1) | S(1) |
| S(1) | S(0) | P(sum) |
| P(sum) | B | P(sum) |
| B | P(sum) | P(sum) |
| B | B | B |
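The table can be checked numerically. The sketch below, in plain Python and assuming 2 simulated devices, distributes X and W according to each row's SBPs, runs MatMul on every device's local data, and reassembles the local outputs according to Y's SBP. The helpers (`distribute`, `assemble`, `signature_holds`) are hypothetical, and partial-sum again uses the t/2 decomposition.

```python
# Sketch: check the MatMul SBP signatures from the table on 2 simulated
# devices with plain Python lists. Helper names are hypothetical.

def matmul(x, w):
    assert len(x[0]) == len(w), "inner dimensions must match"
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]

def distribute(t, sbp, n):
    """Global tensor -> n local tensors."""
    if sbp == "S(0)":
        k = len(t) // n
        return [t[i * k:(i + 1) * k] for i in range(n)]
    if sbp == "S(1)":
        k = len(t[0]) // n
        return [[row[i * k:(i + 1) * k] for row in t] for i in range(n)]
    if sbp == "B":
        return [[row[:] for row in t] for _ in range(n)]
    if sbp == "P(sum)":  # assumed decomposition: every device holds t / n
        return [[[v / n for v in row] for row in t] for _ in range(n)]
    raise ValueError(sbp)

def assemble(parts, sbp):
    """n local tensors -> the global tensor they represent."""
    if sbp == "S(0)":
        return [row for p in parts for row in p]
    if sbp == "S(1)":
        return [[v for p in parts for v in p[r]] for r in range(len(parts[0]))]
    if sbp == "B":
        return parts[0]
    if sbp == "P(sum)":
        return [[sum(vals) for vals in zip(*rows)] for rows in zip(*parts)]
    raise ValueError(sbp)

def signature_holds(x_sbp, w_sbp, y_sbp, x, w, n=2):
    """Run MatMul on each device's local data and compare with the global result."""
    xs, ws = distribute(x, x_sbp, n), distribute(w, w_sbp, n)
    ys = [matmul(xi, wi) for xi, wi in zip(xs, ws)]
    return assemble(ys, y_sbp) == matmul(x, w)

X = [[1, 2, 3, 4], [5, 6, 7, 8]]       # 2x4 data tensor
W = [[1, 0], [0, 1], [2, 3], [4, 5]]   # 4x2 weight tensor
TABLE = [("S(0)", "B", "S(0)"), ("B", "S(1)", "S(1)"), ("S(1)", "S(0)", "P(sum)"),
         ("P(sum)", "B", "P(sum)"), ("B", "P(sum)", "P(sum)"), ("B", "B", "B")]

for row in TABLE:
    print(row, signature_holds(*row, X, W))                 # every row prints True
print(signature_holds("P(sum)", "P(sum)", "P(sum)", X, W))  # False: not in the table
```

The last line shows why an Op's SBPs cannot be chosen freely: with P(sum) on both inputs, the local products here sum to X*W/2 rather than X*W, because a sum of products is not the product of sums.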

The above is 1D SBP. To support more complex distributed training scenarios, OneFlow also provides 2D SBP. Let's briefly look at the difference, starting with the Placement configuration:

```python
>>> import oneflow as flow
>>> placement1 = flow.placement("cuda", ranks=[0, 1, 2, 3])  # 1D device array, for 1D SBP
>>> placement2 = flow.placement("cuda", ranks=[[0, 1], [2, 3]])  # 2D (2x2) device array, for 2D SBP
```

When the placement's device array is 1D or 2D, the SBP of the Global Tensor must have the matching number of dimensions. For example, with the second (2D) placement above and the SBP (broadcast, split(0)), the tensor is first broadcast along dimension 0 of the device array, across the device groups; then, along dimension 1, it is split(0) within each device group.

Concretely, view ranks=[[0, 1], [2, 3]] as ranks = [Dim0_DeviceGroupA, Dim0_DeviceGroupB], with Dim0_DeviceGroupA = [0, 1] and Dim0_DeviceGroupB = [2, 3]. First, along dimension 0, the complete data of the Global Tensor is broadcast to both device groups, so each group holds a full copy. Then, along dimension 1, the copy held by Dim0_DeviceGroupA is split(0) between its members, rank 0 and rank 1 (ranks=[[0, 1]]); Dim0_DeviceGroupB does the same for rank 2 and rank 3.

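```python
>>> sbp = (flow.sbp.broadcast, flow.sbp.split(0))  # one SBP per device-array dimension
>>> # `tensor` is an existing local tensor; a 2D SBP needs the 2D placement
>>> tensor_to_global = tensor.to_global(placement=placement2, sbp=sbp)
```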
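The 2D placement semantics can be sketched in plain Python. Assuming, as in the text, that the outer list of `ranks` is dimension 0 of the device array, the sketch below tracks which rows of a 4-row global tensor each rank ends up holding. Only broadcast and split(0) are modeled, and `apply_1d`/`apply_2d` are hypothetical helpers, not OneFlow API.

```python
# Sketch: rows held by each rank under a 2D SBP on a 2D device array.
# Device-array dim 0 indexes the groups; dim 1 indexes ranks inside a group.

def apply_1d(rows, sbp, n):
    """Distribute a list of rows over n targets with a 1D SBP."""
    if sbp == "B":
        return [list(rows) for _ in range(n)]               # full copy per target
    if sbp == "S(0)":
        k = len(rows) // n
        return [rows[i * k:(i + 1) * k] for i in range(n)]  # contiguous row blocks
    raise ValueError(f"unsupported SBP in this sketch: {sbp}")

def apply_2d(rows, sbp_2d, device_array):
    sbp_dim0, sbp_dim1 = sbp_2d
    result = {}
    # Step 1: device-array dim 0, distribute across the device groups.
    group_parts = apply_1d(rows, sbp_dim0, len(device_array))
    # Step 2: device-array dim 1, distribute each group's part among its ranks.
    for group, part in zip(device_array, group_parts):
        for rank, local in zip(group, apply_1d(part, sbp_dim1, len(group))):
            result[rank] = local
    return result

rows = ["r0", "r1", "r2", "r3"]  # a 4-row global tensor, one label per row
print(apply_2d(rows, ("B", "S(0)"), [[0, 1], [2, 3]]))
# {0: ['r0', 'r1'], 1: ['r2', 'r3'], 2: ['r0', 'r1'], 3: ['r2', 'r3']}
```

Swapping the order to ("S(0)", "B") gives {0: ['r0', 'r1'], 1: ['r0', 'r1'], 2: ['r2', 'r3'], 3: ['r2', 'r3']}: the rows are split across the two groups and replicated inside each group.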

SBP Signature also has a 2D version: building on the 1D rules, the signature of an operator such as matrix multiplication is derived independently along each of the two dimensions of the device array.
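A simplified way to picture this per-dimension derivation is a lookup: apply the 1D MatMul table to each device-array dimension separately. The sketch below is an illustration under that assumption, not OneFlow's actual inference code; `MATMUL_1D` and `infer_matmul_2d` are hypothetical names.

```python
# Sketch: derive a 2D MatMul SBP Signature by applying the 1D table
# independently to each device-array dimension (simplified lookup).

MATMUL_1D = {  # (X sbp, W sbp) -> Y sbp, one entry per table row
    ("S(0)", "B"): "S(0)",
    ("B", "S(1)"): "S(1)",
    ("S(1)", "S(0)"): "P(sum)",
    ("P(sum)", "B"): "P(sum)",
    ("B", "P(sum)"): "P(sum)",
    ("B", "B"): "B",
}

def infer_matmul_2d(x_sbp, w_sbp):
    """x_sbp, w_sbp: one SBP per device-array dimension, e.g. ("B", "S(0)")."""
    y_sbp = []
    for dim, pair in enumerate(zip(x_sbp, w_sbp)):
        if pair not in MATMUL_1D:
            raise ValueError(f"no valid 1D signature for {pair} on device dim {dim}")
        y_sbp.append(MATMUL_1D[pair])
    return tuple(y_sbp)

print(infer_matmul_2d(("B", "S(0)"), ("S(1)", "B")))  # ('S(1)', 'S(0)')
```

For X = (B, S(0)) and W = (S(1), B), each device multiplies a row block of X by a column block of W, so Y ends up split by columns along device dim 0 and by rows along device dim 1, matching the lookup.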

2.2 SBP automatic conversion

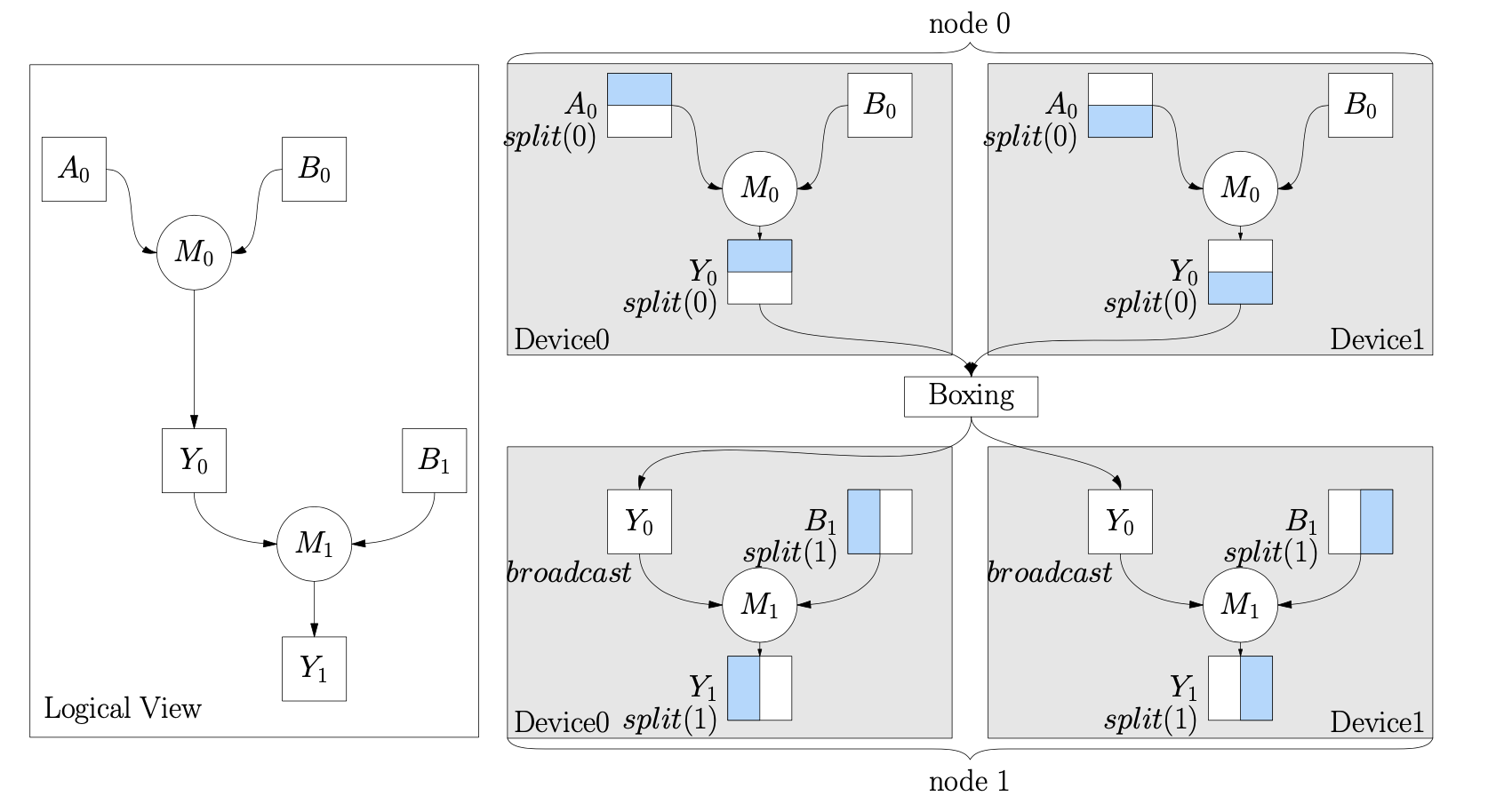

The producer and the consumer of the same Global Tensor (the output of an upstream operator and the input of a downstream operator) may require different SBPs. As shown in the figure above, two MatMul Ops, M0 and M1, are connected through the Global Tensor Y0. M0 infers S(0) as the SBP of Y0, but M1 expects Y0 to be B. In this case, a conversion operation must be inserted between M0 and M1 to transform the local tensors of Y0 from S(0) to B. This automatic SBP conversion is OneFlow's Boxing mechanism.

Boxing can be implemented with different collective operations, such as all2all, broadcast, reduce-scatter, all-reduce, and all-gather, and each incurs a different communication cost. The split(0) to broadcast conversion above is equivalent to an all-gather, which is intuitive: each device holds one slice and must end up with all of them. Section 3.2 of the OneFlow paper explains this part in more detail, including how the communication cost of each operation is calculated.
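The data movement behind these conversions can be sketched with plain Python lists, one flat list per device. The sketch models split(0) to broadcast as all-gather, as described above, plus two standard equivalences not spelled out in this article: partial-sum to broadcast as all-reduce and partial-sum to split(0) as reduce-scatter. It shows only what each device ends up holding, not how OneFlow communicates or what each operation costs; the function names are hypothetical.

```python
# Sketch: what each device holds after three Boxing-style collectives.
# Each device's local tensor is a flat Python list.

def all_gather(parts):
    """S(0) -> B: every device receives the concatenation of all slices."""
    full = [v for p in parts for v in p]
    return [list(full) for _ in parts]

def all_reduce(parts):
    """P(sum) -> B: every device receives the element-wise sum."""
    total = [sum(vals) for vals in zip(*parts)]
    return [list(total) for _ in parts]

def reduce_scatter(parts):
    """P(sum) -> S(0): sum element-wise, then each device keeps one slice."""
    total = [sum(vals) for vals in zip(*parts)]
    k = len(total) // len(parts)
    return [total[i * k:(i + 1) * k] for i in range(len(parts))]

print(all_gather([[1, 2], [3, 4]]))                      # [[1, 2, 3, 4], [1, 2, 3, 4]]
print(all_reduce([[1, 2], [3, 4]]))                      # [[4, 6], [4, 6]]
print(reduce_scatter([[1, 2, 3, 4], [10, 20, 30, 40]]))  # [[11, 22], [33, 44]]
```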

2.3 to_global method

Building on the two sections above, let's look at a typical use of the to_global method, which corresponds to Section 3.4 of the OneFlow paper.

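```python
# Needs 4 processes, e.g.:
#   python3 -m oneflow.distributed.launch --nproc_per_node 4 this_script.py
import oneflow as flow

P0 = flow.placement("cuda", ranks=[0, 1])
P1 = flow.placement("cuda", ranks=[2, 3])
a0_sbp = flow.sbp.split(0)
b0_sbp = flow.sbp.broadcast
y0_sbp = flow.sbp.broadcast
b1_sbp = flow.sbp.split(1)

A0 = flow.randn(4, 5, placement=P0, sbp=a0_sbp)
B0 = flow.randn(5, 8, placement=P0, sbp=b0_sbp)
Y0 = flow.matmul(A0, B0)  # S(0) x B -> Y0 is S(0) on P0

# to_global returns a new tensor: move Y0 to P1 and convert S(0) to broadcast
Y0 = Y0.to_global(placement=P1, sbp=y0_sbp)

B1 = flow.randn(8, 6, placement=P1, sbp=b1_sbp)
Y2 = flow.matmul(Y0, B1)  # B x S(1) -> Y2 is S(1) on P1
```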

In the code above, we first set up the placements and SBPs of the Global Tensors. The output of the first matmul is split(0) on P0, but the next matmul takes its input Y0 as broadcast on P1. The output of the previous layer and the input of the next layer therefore have different SBPs (and placements), so they cannot be connected directly. Relying on the Boxing mechanism, we call the to_global method to convert Y0 from split(0) to broadcast through the argument sbp=y0_sbp, and to move it to P1 through placement=P1.
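To see what each rank actually holds in this example, the following plain-Python sketch derives the local shape of every tensor from its global shape, its SBP, and the number of ranks in its placement (2 for both P0 and P1). The helper `local_shape` is hypothetical and assumes every split is even; the SBPs of Y0 and Y2 follow the MatMul table in Section 2.1.

```python
# Sketch: per-rank (local) shapes in the to_global example above.

def local_shape(global_shape, sbp, num_ranks):
    """sbp is "B", "P", or ("S", axis); assumes even splits."""
    shape = list(global_shape)
    if isinstance(sbp, tuple) and sbp[0] == "S":
        axis = sbp[1]
        assert shape[axis] % num_ranks == 0, "this sketch assumes an even split"
        shape[axis] //= num_ranks
    return tuple(shape)  # broadcast and partial-sum keep the full shape

# (name, global shape, SBP, number of ranks in the placement)
tensors = [
    ("A0", (4, 5), ("S", 0), 2),   # on P0 = ranks [0, 1]
    ("B0", (5, 8), "B", 2),
    ("Y0", (4, 8), ("S", 0), 2),   # matmul(A0, B0) on P0
    ("Y0'", (4, 8), "B", 2),       # after to_global(placement=P1, sbp=broadcast)
    ("B1", (8, 6), ("S", 1), 2),   # on P1 = ranks [2, 3]
    ("Y2", (4, 6), ("S", 1), 2),   # matmul(Y0, B1) on P1
]
for name, shape, sbp, n in tensors:
    print(name, shape, "->", local_shape(shape, sbp, n))
# A0 (4, 5) -> (2, 5)
# B0 (5, 8) -> (5, 8)
# Y0 (4, 8) -> (2, 8)
# Y0' (4, 8) -> (4, 8)
# B1 (8, 6) -> (8, 3)
# Y2 (4, 6) -> (4, 3)
```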

2.4 GlobalTensor class code tracking

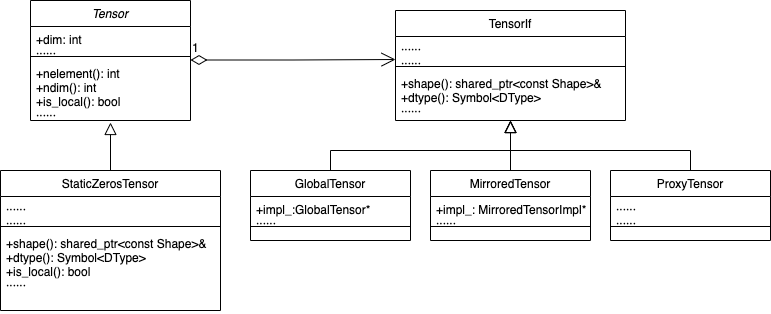

With the above in mind, the concept of Global Tensor should now be clearer. In OneFlow's design, it is a special Tensor that satisfies the abstraction required by the Global View (the globally consistent perspective). OneFlow's Tensor design resembles the bridge pattern: the Tensor base class plays the abstraction role, TensorIf, a subclass of Tensor, serves as the implementor interface, and GlobalTensor and MirroredTensor both provide concrete implementations of that interface, as shown in the figure above.

The figure above shows a simplified view of the Tensor design pattern. Here we go straight to the GlobalTensor implementation class, located at https://github.com/Oneflow-Inc/oneflow/blob/888ad73fe28e2a4509ce7e563f196011e88b817d/oneflow/core/framework/tensor_impl.h#L139. At that commit the class is named ConsistentTensor; in OneFlow v0.7.0 it is called GlobalTensor. You can start tracing the related code from this location. ConsistentTensor holds a pointer to ConsistentTensorImpl, and LazyConsistentTensorImpl and EagerConsistentTensorImpl each inherit from ConsistentTensorImpl (code at https://github.com/Oneflow-Inc/oneflow/blob/888ad73fe28e2a4509ce7e563f196011e88b817d/oneflow/core/framework/tensor_impl.h#L286). Notably, ConsistentTensorImpl, the implementation class of ConsistentTensor, has a member pointer to ConsistentTensorMeta. Besides basic attributes such as the tensor's device, shape, and data type, the TensorMeta family of classes also maintains the placement and SBP information.

As mentioned earlier, Global Tensor is a special Tensor that satisfies the distributed abstraction of the Global View. In the figure above, MirroredTensor is where each device's tensor data is actually stored: EagerConsistentTensorImpl holds a MirroredTensor. Similarly, MirroredTensor holds a pointer to MirroredTensorImpl, and the MirroredTensorImpl implementation class holds a pointer to TensorStorage. The tensor's data ultimately lives in the TensorStorage member; the code is at https://github.com/Oneflow-Inc/oneflow/blob/baa761916262c72414b744538afcc56b01906a09/oneflow/core/eager/eager_blob_object.h#L32.
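The ownership chain described above can be summarized with a hypothetical Python sketch. The class names mirror the C++ classes mentioned in the text, but the fields are reduced to what this article describes (for example, `placement` is just a list of ranks here), and the rank-0 view of a split(0) tensor at the end is an illustrative assumption, not OneFlow's real data layout.

```python
# Hypothetical pure-Python sketch of the C++ ownership chain; not OneFlow code.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TensorStorage:          # where the tensor data finally lives
    data: List[float]


@dataclass
class MirroredTensorImpl:     # implementation class of a local tensor
    storage: TensorStorage


@dataclass
class MirroredTensor:         # local tensor: the data on one device
    impl: MirroredTensorImpl


@dataclass
class ConsistentTensorMeta:   # shape/dtype plus the global-view attributes
    shape: Tuple[int, ...]
    dtype: str
    placement: List[int]      # stand-in for a real placement: a list of ranks
    sbp: Tuple[str, ...]      # one SBP per device-array dimension


@dataclass
class ConsistentTensorImpl:   # base implementation class, holds the meta
    meta: ConsistentTensorMeta


@dataclass
class EagerConsistentTensorImpl(ConsistentTensorImpl):
    local: MirroredTensor     # this rank's local component


@dataclass
class ConsistentTensor:       # called GlobalTensor in OneFlow v0.7.0
    impl: ConsistentTensorImpl

    def local_data(self) -> List[float]:
        # Assumes the eager implementation, which owns a MirroredTensor.
        return self.impl.local.impl.storage.data


# A 4-element tensor with split(0) over ranks [0, 1], as seen from rank 0.
t = ConsistentTensor(EagerConsistentTensorImpl(
    meta=ConsistentTensorMeta(shape=(4,), dtype="float32", placement=[0, 1], sbp=("S(0)",)),
    local=MirroredTensor(MirroredTensorImpl(TensorStorage([1.0, 2.0]))),
))
print(t.impl.meta.sbp, t.local_data())  # ('S(0)',) [1.0, 2.0]
```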

2.5 How to do the execution test of Global Ops

Finally, let's briefly look at how OneFlow tests Global Ops. In my previous article **"How does the deep learning framework elegantly do the task of operator alignment"**, I introduced OneFlow's AutoTest framework. Link: https://zhuanlan.zhihu.com/p/458111952.

That article mainly covered unit tests for Local Ops. Execution tests for Global Ops are also built on the AutoTest framework; the difference is that we convert local tensors into global tensors with the to_global() method introduced earlier in this article, and enumerate placements and SBPs. Take the matmul Op as an example: for a binary Op such as matmul, we iterate over every placement and, for each one, over the SBPs of both inputs in two nested loops. The code is as follows.

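```python
import unittest

import oneflow as flow
import oneflow.unittest
from oneflow.test_utils.automated_test_util import *


@autotest(n=1, check_graph=False)
def _test_matmul(test_case, placement, x_sbp, y_sbp):
    # Create random inputs and convert them to global tensors;
    # AutoTest runs the same body with OneFlow and PyTorch and compares results.
    x = random_tensor(ndim=2, dim0=8, dim1=16).to_global(placement=placement, sbp=x_sbp)
    y = random_tensor(ndim=2, dim0=16, dim1=8).to_global(placement=placement, sbp=y_sbp)
    return torch.matmul(x, y)


class TestMatMulModule(flow.unittest.TestCase):
    @globaltest
    def test_matmul(test_case):
        # For every placement, enumerate the SBP of each of the two inputs
        for placement in all_placement():
            for x_sbp in all_sbp(placement, max_dim=2):
                for y_sbp in all_sbp(placement, max_dim=2):
                    _test_matmul(test_case, placement, x_sbp, y_sbp)


if __name__ == "__main__":
    unittest.main()
```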
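Because each input's SBP is enumerated independently, the number of cases grows quadratically with the number of SBP candidates. The sketch below is hypothetical and is not OneFlow's `all_sbp()`: it assumes each device-array dimension can take split(0) or split(1) (for `max_dim=2`), broadcast, or partial_sum, and counts the (x_sbp, y_sbp) pairs for a 1D and a 2D placement. The real helper may filter out some combinations.

```python
# Hypothetical sketch of the nested SBP enumeration for a binary op.
from itertools import product

def enumerate_sbp(placement_ndim, max_dim):
    """All SBP tuples, one entry per device-array dimension (assumed candidates)."""
    choices = [f"split({d})" for d in range(max_dim)] + ["broadcast", "partial_sum"]
    return list(product(choices, repeat=placement_ndim))

def enumerate_cases(placement_ndims, max_dim=2):
    """Yield (placement_ndim, x_sbp, y_sbp) like the two nested loops above."""
    for ndim in placement_ndims:
        sbps = enumerate_sbp(ndim, max_dim)
        for x_sbp in sbps:
            for y_sbp in sbps:
                yield ndim, x_sbp, y_sbp

cases = list(enumerate_cases([1, 2]))
print(len(cases))  # 4*4 for a 1D placement + 16*16 for a 2D placement = 272
```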

3 Summary

The above is a brief introduction to my understanding of Global Tensor. In this part, I summarize my internship work and what I gained from it. My internship at OneFlow was not long, but it was my first work experience; it was remote, and I was also busy with things at school. So far, the only colleague whose face I know is Brother Xiaoyu (Xu Xiaoyu), hahaha~ I have also seen BBuf's (Zhang Xiaoyu) marriage certificate photo and Brother Chi's (Yao Chi) profile picture. Overall, it was a fun and relaxed experience (perhaps because I didn't do much).

- When I first joined, I submitted a few simple PRs to the oneflow repository to get familiar with the CI process and performance testing, and used OneFlow for some simple tasks, such as multi-GPU training of a U-Net network and porting Python code, so that I could adapt to the work rhythm faster. During this period, I learned ONNX, carefully read the series of articles at https://github.com/BBuf/onnx_learn, and took part in some of the OneFlow2ONNX work.

- In the middle of my internship, I fixed some bugs in OneFlow's operator code and taught myself modern C++ standards and CUDA optimization. At that point I felt I hadn't learned anything in three years of college: I had been an emotionless exam machine for Java and the 408 (the computer science postgraduate entrance exam). I also developed some operators and wrote plenty of bugs along the way, for which I have to thank OneFlow's "security guard" zzk (Zheng Zekang). In addition, I set up CI for the RTD (Read the Docs) documentation of the libai and flowvision repositories and was responsible for on-call work for the related documentation.

- In the later stage of the internship, I completed the project of making nn.Graph support the Local Ops unit tests, that is, running the same test code automatically in both Graph and Eager mode without modifying it. There were many sub-problems to solve, but with a bit of hacking I basically finished the task. Through this, I became more familiar with how static graphs (Graph) are built, compiled, trained, and debugged.

- I opened 30+ PRs in the oneflow repository. Participating in open source has very practical benefits, greater than those of day-to-day software black-box testing practice: things like becoming a Committer or the number of PRs you have submitted can go on your resume for interviews, whereas without open-source experience you have to spend a lot of energy elsewhere to prove your ability. Working at OneFlow was generally quite easygoing. During my time in the "Novice Village", my colleagues always helped me, so I never had to struggle alone with unfamiliar technologies. When I have time, I would like the chance to return to OneFlow for another internship. Reflecting on myself, I really need to consolidate my fragmented knowledge. Soon I will start running experiments and writing papers; I'll keep fighting until I'm free~

4 Reference Links

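- OneFlow paper, "OneFlow: Redesign the Distributed Deep Learning Framework from Scratch": https://arxiv.org/pdf/2110.15032.pdf

- OneFlow repository: https://github.com/Oneflow-Inc/oneflow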