Abstract: When you add a cache to a system, have you considered what you need to pay attention to when using it?

This article is shared from the HUAWEI CLOUD Community post "[High Concurrency] What is the most concerned issue of caching? What types are there? Recycling strategies and algorithms?" by Glacier.

When a project starts, performance is often not given much thought; the focus is on iterating quickly on features. Later, as the business grows rapidly, the system becomes slower and slower. At that point it needs to be optimized, and adding a cache is usually the optimization with the most significant effect. So the question is: when you add a cache to the system, have you considered what you need to pay attention to when using it?

Cache hit rate

The cache hit ratio is the number of reads served from the cache divided by the total number of reads; the higher it is, the better:

Cache hit ratio = reads from cache / (reads from cache + reads from the slow device)

This is a very important monitoring metric. If you use a cache, you should monitor it to see whether the cache is actually working well.

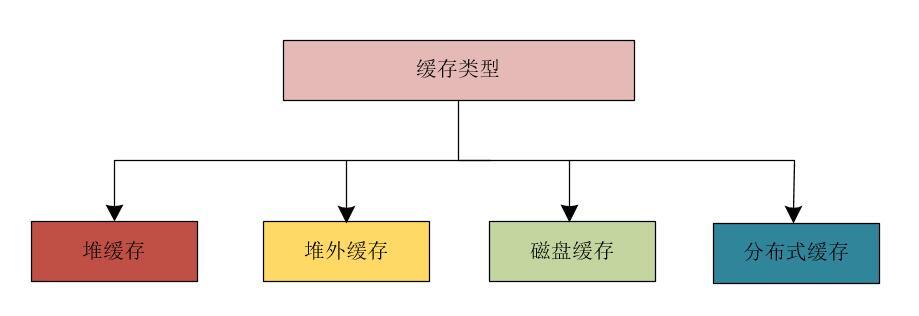
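A minimal sketch of tracking the hit ratio as defined above follows. `HitRatioTracker` and its methods are hypothetical names for illustration, not part of any library; the caller is assumed to report every read as either a hit (served from the cache) or a miss (served from the slow device).

```java
import java.util.concurrent.atomic.LongAdder;

// Hypothetical helper (not from any library): counts cache reads and computes
// hit ratio = reads from cache / (reads from cache + reads from the slow device).
final class HitRatioTracker {
    private final LongAdder hits = new LongAdder();   // reads served from the cache
    private final LongAdder misses = new LongAdder(); // reads that fell through to the slow device

    void recordHit()  { hits.increment(); }
    void recordMiss() { misses.increment(); }

    double hitRatio() {
        long h = hits.sum();
        long total = h + misses.sum();
        // Avoid division by zero before any reads have been recorded.
        return total == 0 ? 0.0 : (double) h / total;
    }
}
```

If you use Guava Cache, enabling `CacheBuilder.recordStats()` lets you read a comparable figure from `cache.stats().hitRate()` instead of counting by hand.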

Cache types

Generally speaking, caches can be divided into four types: heap cache, off-heap cache, disk cache, and distributed cache.

Heap cache

Objects are stored in Java heap memory. The advantage of a heap cache is that no serialization/deserialization is needed, which makes it the fastest kind of cache. The disadvantages are also obvious: when the amount of cached data is large, GC (garbage collection) pause times grow longer, and storage capacity is limited by the size of the heap. Cached objects are often held through soft or weak references, so that when heap memory runs short the garbage collector can reclaim them and free heap space. Heap caches are generally used for the hottest data. They can be implemented with Guava Cache, Ehcache 3.x, or MapDB.
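To make the soft-reference idea concrete, here is a minimal heap cache sketch, assuming a `ConcurrentHashMap` whose values are wrapped in `SoftReference`. `SoftHeapCache` is a hypothetical class; Guava Cache offers a similar effect through `CacheBuilder.softValues()`.

```java
import java.lang.ref.SoftReference;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical minimal heap cache: values live on the Java heap (no serialization)
// and are held through SoftReferences, so the GC may reclaim them under memory pressure.
final class SoftHeapCache<K, V> {
    private final ConcurrentHashMap<K, SoftReference<V>> map = new ConcurrentHashMap<>();

    void put(K key, V value) {
        map.put(key, new SoftReference<>(value));
    }

    /** Returns the cached value, or null if it is absent or was already reclaimed by the GC. */
    V get(K key) {
        SoftReference<V> ref = map.get(key);
        if (ref == null) return null;
        V value = ref.get();
        if (value == null) map.remove(key, ref); // referent was cleared; drop the stale entry
        return value;
    }

    /** Read-through helper: on a miss, loads the value and caches it. */
    V get(K key, Function<? super K, ? extends V> loader) {
        V value = get(key);
        if (value == null) {
            value = loader.apply(key);
            put(key, value);
        }
        return value;
    }
}
```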

Off-heap cache

Here the cached data is stored in off-heap memory. This reduces GC pause times (moving objects out of the heap leaves fewer objects for the GC to scan and move) and supports a larger cache (limited only by the machine's memory, not by the heap size). However, data must be serialized when written and deserialized when read, so an off-heap cache is much slower than a heap cache. It can be implemented with Ehcache 3.x or MapDB.
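The sketch below illustrates this trade-off, assuming String values: the bytes are kept in a direct `ByteBuffer` outside the Java heap, so every `put` serializes and every `get` deserializes. `OffHeapStringCache` is hypothetical, append-only, and never frees space; libraries such as Ehcache 3.x and MapDB take care of allocation, eviction, and serialization of arbitrary objects.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal off-heap cache for String values. The value bytes live in a
// direct ByteBuffer (native memory outside the Java heap); only a small index of
// offsets stays on the heap. Append-only and not thread-safe: overwritten or unused
// bytes are never reclaimed.
final class OffHeapStringCache {
    private final ByteBuffer store;                              // off-heap storage
    private final Map<String, int[]> index = new HashMap<>();   // key -> {offset, length}

    OffHeapStringCache(int capacityBytes) {
        this.store = ByteBuffer.allocateDirect(capacityBytes);
    }

    /** Returns false if there is not enough off-heap space left. */
    boolean put(String key, String value) {
        byte[] bytes = value.getBytes(StandardCharsets.UTF_8); // serialize
        if (bytes.length > store.remaining()) return false;
        int offset = store.position();
        store.put(bytes);
        index.put(key, new int[] {offset, bytes.length});
        return true;
    }

    String get(String key) {
        int[] loc = index.get(key);
        if (loc == null) return null;
        byte[] bytes = new byte[loc[1]];
        // Read through a duplicate so the write position of `store` is untouched.
        ByteBuffer view = store.duplicate();
        view.position(loc[0]);
        view.get(bytes);
        return new String(bytes, StandardCharsets.UTF_8);     // deserialize
    }
}
```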

Disk cache

Here the cached data is stored on disk, so it survives a JVM restart, whereas heap and off-heap cache data is lost and has to be reloaded. It can be implemented with Ehcache 3.x or MapDB.
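As a rough illustration of why disk-cached data survives a restart, the hypothetical `DiskStringCache` below writes one file per key into a directory; a new instance pointed at the same directory, as after a JVM restart, can read what an earlier instance wrote. Encoding keys with Base64 is an assumption made only to keep file names filesystem-safe (very long keys could still exceed file-name length limits).

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.NoSuchFileException;
import java.nio.file.Path;
import java.util.Base64;

// Hypothetical minimal disk cache: one file per key under a directory.
// Because the data lives in files, it outlives the JVM process that wrote it.
final class DiskStringCache {
    private final Path dir;

    DiskStringCache(Path dir) throws IOException {
        this.dir = Files.createDirectories(dir);
    }

    // URL-safe Base64 turns any key into a valid file name.
    private Path fileFor(String key) {
        String name = Base64.getUrlEncoder().withoutPadding()
                .encodeToString(key.getBytes(StandardCharsets.UTF_8));
        return dir.resolve(name);
    }

    void put(String key, String value) throws IOException {
        Files.write(fileFor(key), value.getBytes(StandardCharsets.UTF_8));
    }

    /** Returns the cached value, or null on a cache miss. */
    String get(String key) throws IOException {
        try {
            return new String(Files.readAllBytes(fileFor(key)), StandardCharsets.UTF_8);
        } catch (NoSuchFileException e) {
            return null;
        }
    }
}
```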

Distributed cache

A distributed cache shares cached data across Java processes. It can be implemented with ehcache-clustered (backed by a Terracotta server), or with Memcached or Redis.

When these cache types are combined into a multi-level cache, two modes are common:

- Stand-alone mode: Store the hottest data in the heap cache, the relatively hot data in the off-heap cache, and the non-hot data in the disk cache.

- Cluster mode: Store the hottest data in the heap cache, the relatively hot data in the off-heap cache, and the full data set in the distributed cache.

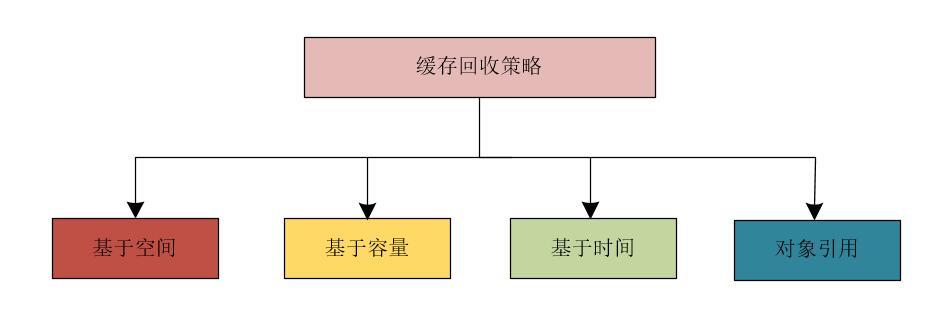
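Both modes share the same read path: check the fastest tier first and fall back to slower ones. The sketch below assumes a hypothetical `CacheTier` interface and uses plain `HashMap` stand-ins for every tier, so it shows only the lookup-and-promote logic, not real off-heap, disk, or distributed storage.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical tier abstraction. In stand-alone mode the tiers might be
// heap -> off-heap -> disk; in cluster mode heap -> off-heap -> distributed cache.
interface CacheTier<K, V> {
    V get(K key);
    void put(K key, V value);
}

// In-memory stand-in used for every tier in this sketch.
final class MapTier<K, V> implements CacheTier<K, V> {
    private final Map<K, V> map = new HashMap<>();
    public V get(K key) { return map.get(key); }
    public void put(K key, V value) { map.put(key, value); }
}

// Looks a key up from the fastest tier to the slowest. On a hit in a slower tier,
// copies the value into all faster tiers so the next read is cheaper.
final class TieredCache<K, V> {
    private final List<CacheTier<K, V>> tiers; // index 0 = fastest tier (hottest data)
    private final Function<K, V> loader;       // source of truth, e.g. a database

    TieredCache(List<CacheTier<K, V>> tiers, Function<K, V> loader) {
        this.tiers = tiers;
        this.loader = loader;
    }

    V get(K key) {
        for (int i = 0; i < tiers.size(); i++) {
            V value = tiers.get(i).get(key);
            if (value != null) {
                promote(key, value, i);
                return value;
            }
        }
        V value = loader.apply(key);             // miss in every tier
        if (value != null) promote(key, value, tiers.size());
        return value;
    }

    // Writes the value into tiers [0, upTo).
    private void promote(K key, V value, int upTo) {
        for (int i = 0; i < upTo; i++) tiers.get(i).put(key, value);
    }
}
```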

Cache reclamation policies

Generally speaking, cache reclamation policies include: space-based, capacity-based, time-based, and object-reference-based reclamation.

Space-based

Space-based means the cache has a configured storage limit, such as 10 MB. When that limit is reached, data is removed according to some policy.
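A minimal space-based sketch follows, assuming values are `byte[]` so their size is simply `length`, and assuming FIFO as the removal policy (the text leaves the policy open). `ByteBudgetCache` is a hypothetical name.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical space-bounded cache: tracks the total bytes of cached values and,
// when adding a value would exceed the byte budget (e.g. 10 MB), evicts entries
// in insertion order (FIFO) until the new value fits. Not thread-safe.
final class ByteBudgetCache {
    private final long maxBytes;
    private long usedBytes = 0;
    private final Map<String, byte[]> map = new HashMap<>();
    private final Deque<String> insertionOrder = new ArrayDeque<>(); // same keys as map

    ByteBudgetCache(long maxBytes) { this.maxBytes = maxBytes; }

    void put(String key, byte[] value) {
        if (value.length > maxBytes) return;              // can never fit; do not cache
        removeEntry(key);                                  // replacing = remove + add
        while (usedBytes + value.length > maxBytes) {
            removeEntry(insertionOrder.peekFirst());       // evict the oldest entry
        }
        map.put(key, value);
        insertionOrder.addLast(key);
        usedBytes += value.length;
    }

    byte[] get(String key) { return map.get(key); }

    long usedBytes() { return usedBytes; }

    private void removeEntry(String key) {
        byte[] old = map.remove(key);
        if (old != null) {
            usedBytes -= old.length;
            insertionOrder.remove(key);                   // O(n), acceptable for a sketch
        }
    }
}
```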

Capacity-based

Capacity-based means the cache has a maximum number of entries. When the number of cached entries exceeds that maximum, old data is removed according to some policy.
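For a capacity limit, the JDK's `LinkedHashMap` already provides a hook: `removeEldestEntry` is called by `put`/`putAll` after a new entry is inserted, and returning `true` evicts the eldest entry. The sketch below (hypothetical class `BoundedCache`) keeps insertion order, so its removal policy is FIFO; Guava's `CacheBuilder.maximumSize(...)` is a library alternative.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical capacity-bounded cache holding at most maxEntries entries.
// With accessOrder = false the "eldest" entry is the one inserted first (FIFO).
// Not thread-safe.
final class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        super(16, 0.75f, false); // false = iterate (and evict) in insertion order
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Invoked after each insertion; returning true removes `eldest`.
        return size() > maxEntries;
    }
}
```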

Time-based

TTL (Time To Live): the lifetime, i.e. the period from when cached data is created until it expires (the data expires at the end of this period whether or not it was accessed in the meantime).

TTI (Time To Idle): the idle period, i.e. how long cached data may go without being accessed before it is removed.
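The difference between TTL and TTI shows up clearly in code. The sketch below is a hypothetical `ExpiringCache` that enforces both at once, assuming an injected millisecond clock (`LongSupplier`) so expiry can be tested without sleeping, and lazy removal of expired entries on read. In Guava Cache, `expireAfterWrite` and `expireAfterAccess` roughly correspond to TTL and TTI.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical cache supporting both time-based policies:
//  - TTL: an entry expires ttlMillis after it was written, even if it is read often.
//  - TTI: an entry expires if it has not been read for ttiMillis.
// Expired entries are removed lazily, when they are read. Not thread-safe.
final class ExpiringCache<K, V> {
    private static final class Entry<T> {
        final T value;
        final long writtenAt;
        long lastAccessAt;

        Entry(T value, long now) {
            this.value = value;
            this.writtenAt = now;
            this.lastAccessAt = now;
        }
    }

    private final Map<K, Entry<V>> map = new HashMap<>();
    private final long ttlMillis;
    private final long ttiMillis;
    private final LongSupplier clock; // current time in milliseconds

    ExpiringCache(long ttlMillis, long ttiMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.ttiMillis = ttiMillis;
        this.clock = clock;
    }

    void put(K key, V value) {
        map.put(key, new Entry<>(value, clock.getAsLong()));
    }

    V get(K key) {
        Entry<V> e = map.get(key);
        if (e == null) return null;
        long now = clock.getAsLong();
        boolean ttlExpired = now - e.writtenAt >= ttlMillis;
        boolean ttiExpired = now - e.lastAccessAt >= ttiMillis;
        if (ttlExpired || ttiExpired) {
            map.remove(key);
            return null;
        }
        e.lastAccessAt = now; // a read resets the idle timer, but not the TTL
        return e.value;
    }
}
```

With a 1000 ms TTL and a 300 ms TTI, reading an entry every 250 ms keeps it alive under the TTI, but it still expires once 1000 ms have passed since it was written.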

Object references

Soft references: if an object is only softly referenced, the garbage collector may reclaim it when JVM heap memory runs low. Soft references suit caching: when heap memory is insufficient, these objects can be reclaimed to free space for strongly referenced objects and avoid an OOM (OutOfMemoryError).

Weak references: when the garbage collector runs and finds an object that is only weakly referenced, it reclaims it in that collection, regardless of how much memory is free. Weakly referenced objects therefore have a shorter lifetime than softly referenced ones.

Note: an object behind a soft or weak reference is reclaimed during garbage collection only if no strong reference to it remains. In other words, if any ordinary (strong) reference still points to the object, it will not be reclaimed, even though a weak or soft reference also points to it.
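The note can be checked with `java.lang.ref` directly. In the hypothetical `ReferenceDemo` below, the first method keeps a strong reference alive across a `System.gc()` call, so the weak and soft references are guaranteed to still return the object. The second method drops the strong reference first; the referent then becomes eligible for collection, but because `System.gc()` is only a request, its result is not guaranteed.

```java
import java.lang.ref.SoftReference;
import java.lang.ref.WeakReference;

// Hypothetical demo of when soft/weak referents can and cannot be reclaimed.
final class ReferenceDemo {
    /** Returns true if both references still point to `value` after a GC request. */
    static boolean survivesWhileStronglyReachable() {
        Object value = new Object();                              // strong reference
        WeakReference<Object> weak = new WeakReference<>(value);
        SoftReference<Object> soft = new SoftReference<>(value);
        System.gc(); // only a request; the JVM may or may not run a collection
        // `value` is still used below, so it stays strongly reachable and
        // neither reference may be cleared, whether or not a GC actually ran.
        return weak.get() == value && soft.get() == value;
    }

    /** With no strong reference left, the weak referent is eligible for collection. */
    static boolean weakClearedAfterGcRequest() {
        WeakReference<Object> weak = new WeakReference<>(new Object()); // no strong reference kept
        System.gc();
        return weak.get() == null; // commonly true on HotSpot, but NOT guaranteed
    }
}
```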

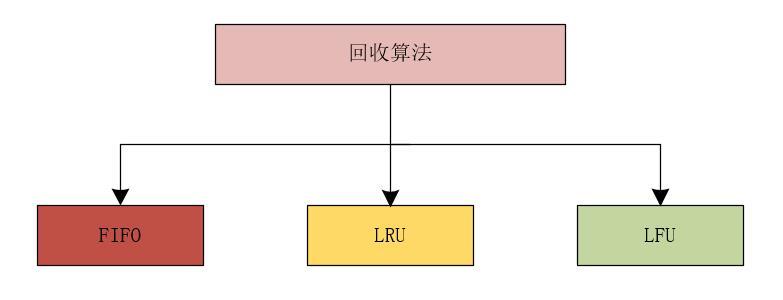

Reclamation algorithms

Space-based and capacity-based caches use a policy to decide which old data to remove. Common ones are the FIFO, LRU, and LFU algorithms.

- FIFO (First In First Out): the entry that was put into the cache first is removed first.

- LRU (Least Recently Used): the entry that has gone the longest time without being accessed is removed.

- LFU (Least Frequently Used): the entry accessed the fewest times within a certain period is removed.

In practice, LRU-based caches are the most widely used.
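To compare the three algorithms side by side, the sketch below implements all of them behind one hypothetical `PolicyCache` class. FIFO and LRU reuse `LinkedHashMap` (insertion order vs. access order); LFU counts accesses since insertion with no decay over a time window, which is a simplifying assumption, and scans for its victim in O(n).

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical comparison of the three eviction policies. Each cache keeps at most
// `capacity` keys and, when full, evicts the victim chosen by its policy. Not thread-safe.
enum Policy { FIFO, LRU, LFU }

final class PolicyCache {
    private final Policy policy;
    private final int capacity;
    // Insertion-ordered for FIFO/LFU; access-ordered for LRU (get/put move a key to the end).
    private final LinkedHashMap<String, String> entries;
    private final Map<String, Integer> frequency = new HashMap<>(); // access counts for LFU

    PolicyCache(Policy policy, int capacity) {
        if (capacity < 1) throw new IllegalArgumentException("capacity must be >= 1");
        this.policy = policy;
        this.capacity = capacity;
        this.entries = new LinkedHashMap<>(16, 0.75f, policy == Policy.LRU);
    }

    String get(String key) {
        String value = entries.get(key); // for LRU this also marks the key as recently used
        if (value != null) frequency.merge(key, 1, Integer::sum);
        return value;
    }

    void put(String key, String value) {
        if (!entries.containsKey(key) && entries.size() >= capacity) {
            String victim = chooseVictim();
            entries.remove(victim);
            frequency.remove(victim);
        }
        entries.put(key, value);
        frequency.merge(key, 1, Integer::sum);
    }

    boolean contains(String key) { return entries.containsKey(key); } // does not affect order

    private String chooseVictim() {
        if (policy != Policy.LFU) {
            // FIFO: first inserted; LRU: least recently accessed. Both are the first key.
            return entries.keySet().iterator().next();
        }
        // LFU: smallest access count; ties go to the earliest inserted key.
        String victim = null;
        int best = Integer.MAX_VALUE;
        for (String key : entries.keySet()) {
            int count = frequency.get(key);
            if (count < best) { best = count; victim = key; }
        }
        return victim;
    }
}
```

With capacity 3 and the trace put A, B, C; get B three times; get A three times; get C; put D, FIFO evicts A (inserted first), LRU evicts B (least recently read), and LFU evicts C (fewest accesses).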
