Abstract

Capture how the visual content changes

Generalize slow feature analysis to “steady” feature analysis

Key idea

Impose a prior that higher order derivatives in the learned feature space must be small.
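One way to read this prior, as a sketch: write $z_t$ for the learned feature of the frame at time $t$ (the subscript notation is an assumption of these notes, not taken from the paper). The discrete second derivative is then the difference between two consecutive feature changes, so keeping it small means the feature changes themselves stay roughly constant:

$$
\frac{d^2 z}{dt^2}\bigg|_{t} \approx z_{t+1} - 2 z_t + z_{t-1} = (z_{t+1} - z_t) - (z_t - z_{t-1}) \approx 0
$$

Slow feature analysis constrains only the first-order term $z_{t+1} - z_t$; the "steady" generalization adds the second-order term above.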

Method

Train a convolutional neural network with a regularizer on tuples of sequential frames from unlabeled video.

1.Introduction

predictable

Key idea

Impose higher order temporal constraints on the learned visual representation

Develop a regularizer that uses contrastive loss over tuples of frames to achieve such mappings with CNNs ($z(b) - z(a) \approx z(c) - z(b)$)
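A minimal sketch of what a contrastive penalty over one tuple of features could look like. The hinge form for negative tuples, the `margin` parameter, the l2 distance, and all function names are assumptions of this sketch; the notes only state that the loss is contrastive and targets z(b) − z(a) ≈ z(c) − z(b).

```python
import math

def l2(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def diff(u, v):
    """Element-wise difference u - v."""
    return [a - b for a, b in zip(u, v)]

def steadiness_loss(za, zb, zc, is_positive, margin=1.0):
    """Contrastive penalty on one tuple of features (za, zb, zc).

    Positive tuples (sequential frames) are pulled toward
    zb - za == zc - zb. Negative tuples are pushed until the two
    feature changes differ by at least `margin` (assumed hinge form).
    """
    d = l2(diff(zb, za), diff(zc, zb))
    return d if is_positive else max(margin - d, 0.0)

# Features moving in a straight line at constant speed: no penalty.
steady = steadiness_loss([0.0, 0.0], [1.0, 1.0], [2.0, 2.0], True)
# Features that reverse direction: penalized when treated as a positive tuple.
jerky = steadiness_loss([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], True)
```

In a real training loop the features would come from the CNN and the penalty would be averaged over many tuples sampled from the unlabeled video.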

2.Related Work

Target

- Previous: Build a robust object recognition system

The image representation must incorporate some degree of invariance to changes. Invariance can be manually crafted, such as with spatial pooling operations or gradient descriptors.

The authors’ method: pad the training data by systematically perturbing raw images with label-preserving transformations

results: jittered versions originating from the same content all map close by in the learned feature space.

idea: Unlabeled video is an appealing resource for recovering invariance.

- Authors’: Learn features from unlabeled video

idea: Preserve higher order feature steadiness

- Authors’: Exploit transformation patterns in unlabeled video to learn features that are useful for recognition.

idea: Explicitly predict the transformation parameters

3.Approach

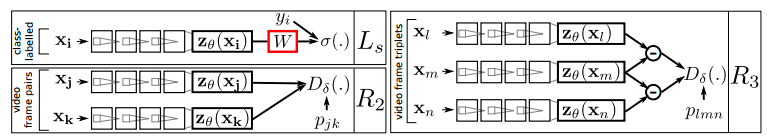

The hidden layers of the network are tuned not only with the backpropagation gradients from a classification loss, but also with gradients computed from the unlabeled video that exploit its temporal steadiness.
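One plausible reading of this joint training, as a sketch: the objective is a supervised classification loss plus a weighted penalty computed on unlabeled video, so both contribute gradients to the shared hidden layers. The softmax cross-entropy choice, the weight `lam`, and the function names are assumptions of this sketch, not details given in the notes.

```python
import math

def cross_entropy(logits, label):
    """Softmax cross-entropy for one labeled sample (numerically stable)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]

def total_objective(logits, label, video_penalties, lam=0.1):
    """Supervised loss plus lam times the mean unsupervised video penalty.

    `video_penalties` holds one regularizer value per unlabeled tuple.
    """
    reg = sum(video_penalties) / len(video_penalties) if video_penalties else 0.0
    return cross_entropy(logits, label) + lam * reg

# Two equally likely classes, two video tuples with penalties 1.0 and 3.0.
loss = total_objective([0.0, 0.0], 0, [1.0, 3.0], lam=0.5)
```

Setting `lam` to zero recovers a purely supervised network.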

3.1 Notation and framework overview

4.Experiments

4.1.Experimental setup

- dataset

- Baseline

- 1.Unreg: An unregularized network trained only on the supervised training samples S

- 2.SFA-1: Distance l1 to calculate R2

- 3.SFA-2: Distance l2 to calculate R2
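The SFA baselines penalize first-order change between the features of neighboring frames, under a chosen distance. A minimal sketch with the distance passed in as a parameter, comparing l1 and l2 on the same pair; the function names are hypothetical and the contrastive handling of non-neighboring pairs is omitted.

```python
import math

def l1(u, v):
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(u, v))

def l2(u, v):
    """Euclidean distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def slowness_penalty(za, zb, distance):
    """First-order temporal coherence term for two neighboring frames."""
    return distance(za, zb)

za, zb = [0.0, 0.0], [3.0, 4.0]
penalty_l1 = slowness_penalty(za, zb, l1)
penalty_l2 = slowness_penalty(za, zb, l2)
```

For this pair the l1 penalty is 7.0 and the l2 penalty is 5.0, which illustrates that the two baselines weight the same feature change differently.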

Siamese network configuration

- Network architectures

- 1.NORB -> NORB task: input → 25 hidden units → ReLU nonlinearity → D=25 features

- 2.The other two tasks: (1) resize images to 32 × 32; (2) use standard CNN architectures known to work well with tiny images, producing D=64-dimensional features

- Optimize with Nesterov-accelerated stochastic gradient descent, validating on classification
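For reference, a minimal sketch of one common formulation of the Nesterov-accelerated update, run on a toy quadratic. The learning rate, momentum value, and toy objective are assumptions for illustration; in stochastic training `grad_fn` would be evaluated on a minibatch rather than the exact objective.

```python
def nesterov_step(theta, velocity, grad_fn, lr=0.1, momentum=0.9):
    """One Nesterov-accelerated gradient step on a list of parameters."""
    # Look ahead along the current velocity before measuring the gradient.
    lookahead = [t + momentum * v for t, v in zip(theta, velocity)]
    grad = grad_fn(lookahead)
    velocity = [momentum * v - lr * g for v, g in zip(velocity, grad)]
    theta = [t + v for t, v in zip(theta, velocity)]
    return theta, velocity

def toy_grad(theta):
    """Gradient of the toy objective f(theta) = sum(theta_i ** 2)."""
    return [2.0 * t for t in theta]

theta, velocity = [5.0, -3.0], [0.0, 0.0]
for _ in range(200):
    theta, velocity = nesterov_step(theta, velocity, toy_grad)
```

The only difference from classical momentum is that the gradient is taken at the look-ahead point instead of at the current parameters.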

4.2.Quantifying steadiness

- At test time, given the first two images of each triplet, the task is to predict what the third looks like

Method: Identify an image closest to zθ(x3)

- Take a large pool C of candidate images, map them all to their features via zθ

- Rank in increasing order of distance from zθ(x3)
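The retrieval steps above can be sketched as follows. This assumes the target feature for the third image is obtained by linear extrapolation, 2·z(x2) − z(x1), which is what the steadiness relation z(b) − z(a) ≈ z(c) − z(b) suggests; the notes do not spell this step out. The feature vectors and function names are made up for illustration.

```python
import math

def l2(u, v):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def extrapolate(z1, z2):
    """Predict the third feature by continuing the step from z1 to z2."""
    return [2.0 * b - a for a, b in zip(z1, z2)]

def rank_candidates(target, candidate_features):
    """Candidate indices sorted by increasing distance to `target`."""
    return sorted(range(len(candidate_features)),
                  key=lambda i: l2(target, candidate_features[i]))

# Hypothetical features of the first two frames and of a candidate pool C.
z1, z2 = [0.0, 0.0], [1.0, 2.0]
pool = [[0.0, 0.0], [2.1, 3.9], [5.0, 5.0], [1.0, 2.0]]

predicted = extrapolate(z1, z2)
ranking = rank_candidates(predicted, pool)
```

The position of the true third frame in `ranking` then gives a measure of how steady the learned features are: a lower rank means a better prediction.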

4.3.Recognition results

- Unlabeled video as a prior for supervised recognition

- Pairing unsupervised and supervised datasets

- Varying unsupervised training set size

- Comparison to supervised pretraining and finetuning

- how the proposed unsupervised feature learning idea competes with the popular supervised pretraining model