In the era of cloud computing, computing resources have become the water and electricity of the Internet, as Xiao Ma put it years ago.

Virtual hosts, web servers, databases, object storage, and many other services can all be provisioned through cloud platforms.

Behind the boom in cloud computing stands an important unsung hero: virtualization technology. It is no exaggeration to say that without virtualization, there would be no cloud computing to speak of.

When you hear "virtualization", what comes to mind? Perhaps the three desktop virtual machine products many of us have used (VMware, VirtualPC, and VirtualBox), or today's popular KVM and the container technology Docker?

How are these technologies related, what principles lie behind them, how do they differ, and which scenarios does each suit?

By the end of this article, you should be able to answer these questions.

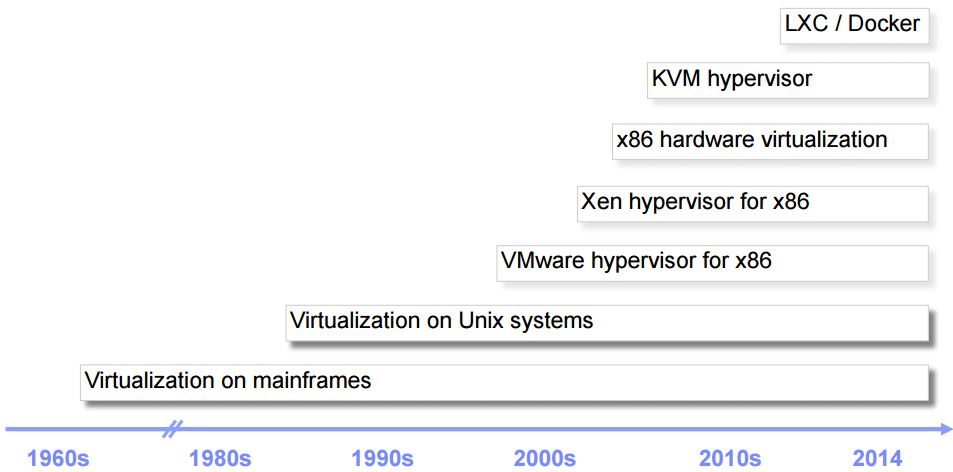

Historical background

What is virtualization technology?

Wikipedia explains it this way:

Virtualization is a resource management technology that abstracts and transforms a computer's physical resources (CPU, memory, disk space, network adapters, etc.) so that they can be divided and recombined into one or more computer configuration environments.

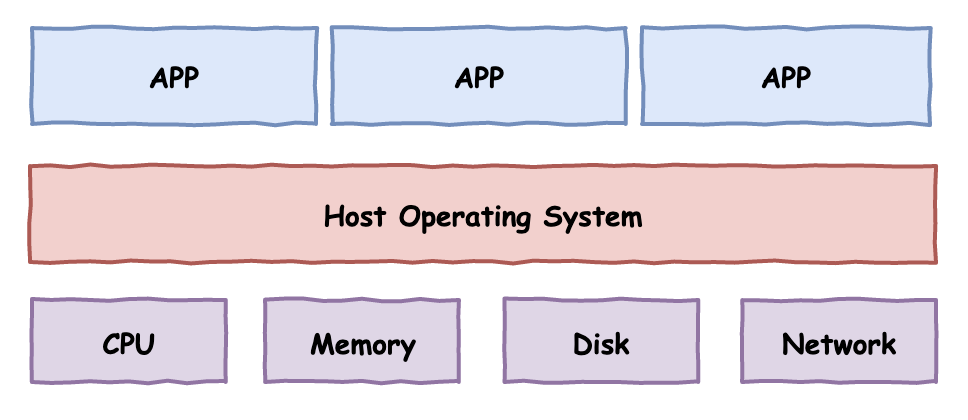

For a computer, we can simply divide it into three layers: from bottom to top, the physical hardware layer, the operating system layer, and the application program layer.

In 1974, computer scientists Gerald Popek and Robert Goldberg published an influential paper, "Formal Requirements for Virtualizable Third Generation Architectures", which set out three basic conditions for virtualization:

- Equivalence: A program should produce the same results whether it runs on the real machine or in a virtual machine (differences in execution time aside).

- Safety: Virtual machines are isolated from each other and from the host computer.

- Performance: In most cases, instructions in the virtual machine should execute directly on the physical CPU; only a small number of special instructions should require the VMM's involvement.

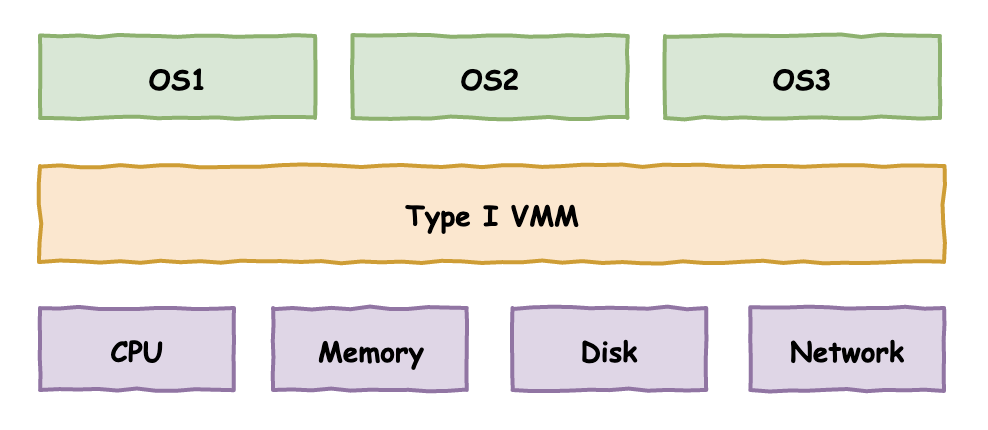

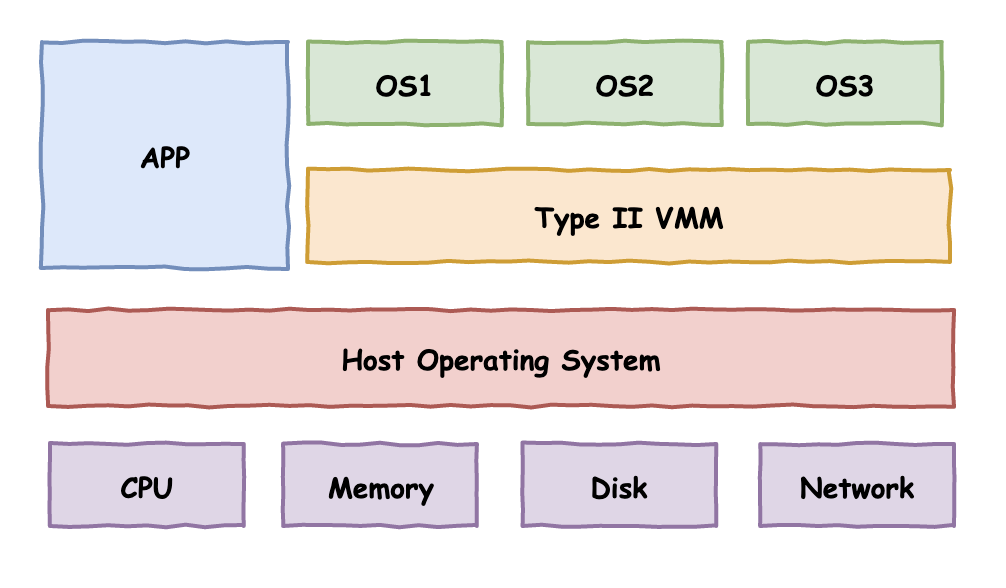

So how can a computer's underlying physical resources be virtualized and partitioned? Historically, there have been two well-known approaches: Type I virtualization and Type II virtualization.

Type I virtualization

Type II virtualization

The VMM in the figures stands for Virtual Machine Monitor; a more common technical term for it is hypervisor.

The figures make the difference between the two approaches clear:

Type I: runs directly on the hardware and builds multiple isolated operating system environments on top of it.

Type II: relies on a host operating system and builds multiple isolated operating system environments on top of it.

The VMware we know actually has two product lines. VMware ESXi is installed directly on bare metal and needs no separate host operating system, so it is Type I virtualization. VMware Workstation, the one ordinary users are more familiar with, is Type II.

How are these virtualization approaches implemented?

The classic approach is trap-and-emulate.

What does that mean? Put simply, the instructions in the virtual machine normally run directly on the physical CPU. When a sensitive instruction is executed, it triggers an exception, control passes to the VMM, and the VMM handles the instruction on the guest's behalf. In this way, the VMM presents the guest with a virtual computer environment.
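The control flow just described can be sketched as a toy simulation. This is only an illustration under simplifying assumptions: the instruction names, the `Trap` exception, and the `cpu_execute` / `vmm_emulate` helpers are hypothetical and do not correspond to any real hypervisor interface.

```python
class Trap(Exception):
    """Raised by the toy CPU when deprivileged code issues a privileged instruction."""
    def __init__(self, instr):
        super().__init__(instr)
        self.instr = instr

# Illustrative subset of privileged operations (an assumption for this sketch).
PRIVILEGED = {"cli", "sti", "out"}

def cpu_execute(instr, state):
    """Toy 'physical CPU': runs ordinary instructions, traps on privileged ones."""
    op = instr[0]
    if op in PRIVILEGED:
        raise Trap(instr)
    if op == "add":
        state["acc"] += instr[1]

def vmm_emulate(instr, vstate):
    """Toy VMM handler: applies the instruction's effect to virtual hardware state."""
    op = instr[0]
    if op == "cli":
        vstate["interrupts_enabled"] = False
    elif op == "sti":
        vstate["interrupts_enabled"] = True
    elif op == "out":
        vstate["port_log"].append(instr[1:])

def run_guest(program):
    state = {"acc": 0}                                      # guest-visible registers
    vstate = {"interrupts_enabled": True, "port_log": []}   # virtual hardware
    traps = 0
    for instr in program:
        try:
            cpu_execute(instr, state)         # direct execution in the common case
        except Trap as t:
            traps += 1
            vmm_emulate(t.instr, vstate)      # control handed to the VMM
    return state, vstate, traps

guest = [("add", 2), ("cli",), ("add", 3), ("out", 0x3F8, 65), ("sti",)]
state, vstate, traps = run_guest(guest)
print(state["acc"], traps)  # 5 3
```

Only three of the five guest instructions reach the VMM here; the rest run "directly", which is the property the performance condition asks for.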

However, this classic virtualization solution has encountered problems on the Intel x86 architecture.

Full virtualization: VMware binary translation technology

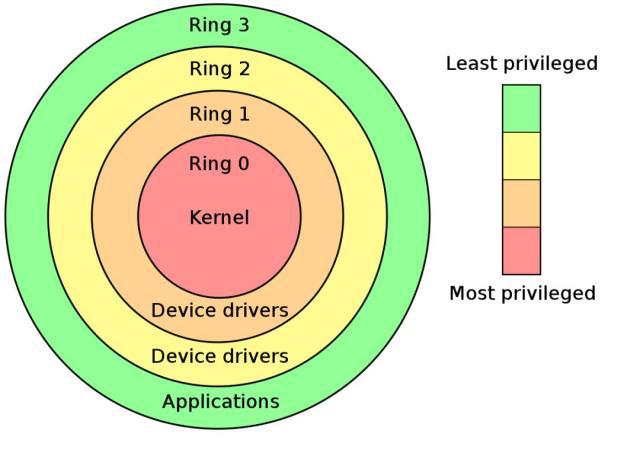

Unlike the 16-bit real-address mode of the 8086 era, the 32-bit x86 architecture introduced a series of new features such as protected mode and virtual memory. At the same time, application code and operating system code were isolated from each other for safety, and this isolation depends on the privilege level the x86 processor is running at.

These are the well-known four "rings" of x86 processors, Ring0 through Ring3.

The operating system kernel runs in Ring0, the most privileged level, while applications run in Ring3, the outermost and least privileged level. Ring1 and Ring2 are essentially unused by mainstream operating systems.

The permissions mentioned here impose two kinds of constraints:

- Accessible memory space

- Privileged instructions that can be executed

Let's focus on the second point, privileged instructions.

The CPU instruction set contains special instructions for hardware I/O, memory management, interrupt management, and similar functions. These instructions can only be executed in Ring0 and are called privileged instructions. Obviously, applications must not be able to perform these operations at will. If an application running in Ring3 tries to execute one, the CPU detects it and raises an exception.

Back to virtualization: as the earlier definition says, virtualization divides computing resources, logically or physically, to build independent execution environments.

Following the trap-and-emulate method described earlier, everything in the virtual machine, including its operating system, can run at the low-privileged Ring3 level. When the guest operating system executes a privileged instruction for memory management, I/O, interrupt handling, and so on, an exception is triggered, the physical machine dispatches the exception to the VMM, and the VMM emulates the instruction.

This would have been an ideal model for virtualization, but x86 CPUs hit an obstacle here.

What is the problem?

Recall that the ideal model rests on one premise: executing a sensitive instruction must trigger an exception, so that the VMM gets the chance to step in and emulate a virtual environment.

In reality, the x86 instruction set contains some instructions that are not privileged and can be executed in Ring3, yet are sensitive from the virtual machine's point of view and must not be executed directly. When they run, no exception is triggered, the VMM cannot intervene, and the illusion of the virtual machine breaks down!

As a result, code in the virtual machine may fail in unpredictable ways or, worse, interfere with the real physical computer. The isolation and equivalence that virtualization promises are then out of the question.
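The premise can be phrased as a set relation: trap-and-emulate works only if every sensitive instruction is also privileged. The sketch below checks that relation on a small sample. The two sets are illustrative subsets chosen for this example, not a complete classification; `SGDT`, `SIDT`, `SMSW`, and `POPF` are commonly cited as sensitive but unprivileged on classic x86.

```python
# Illustrative subsets only; not a complete x86 classification.
PRIVILEGED = {"LGDT", "LIDT", "LMSW", "MOV_TO_CR3"}          # fault when run in Ring3
SENSITIVE = PRIVILEGED | {"SGDT", "SIDT", "SMSW", "POPF"}    # touch or reveal machine state

def trap_and_emulate_holes(sensitive, privileged):
    """Return the sensitive instructions that would NOT trap at user level."""
    return sorted(sensitive - privileged)

holes = trap_and_emulate_holes(SENSITIVE, PRIVILEGED)
print(holes)  # ['POPF', 'SGDT', 'SIDT', 'SMSW']
```

A non-empty result means the VMM never sees those instructions, which is exactly the gap described above.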

How can this problem be solved so that x86 CPUs can support virtualization too?

VMware and QEMU have taken two different paths.

VMware came up with a creative solution: binary translation. The VMM acts as a bridge between the guest operating system and the host, "translating" the instructions the virtual machine wants to execute into suitable instructions that run on the physical host, thereby emulating the execution of programs in the virtual machine. You can loosely compare this to a Java virtual machine executing Java bytecode; the difference is that the JVM executes bytecode, while the VMM emulates CPU instructions.

It is also worth mentioning that, for performance, not every instruction is emulated. VMware optimized heavily here: instructions that are "safe" can simply be executed directly. So VMware's binary translation also incorporates some direct execution.
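As a rough sketch of the idea, the toy translator below passes "safe" instructions through unchanged and rewrites sensitive ones into explicit calls to the VMM. It is not VMware's implementation: the instruction tuples, the `call_vmm` marker, and the `TranslationCache` class are hypothetical, and caching translated blocks is an assumption added for illustration.

```python
# Which opcodes count as sensitive is an assumption made for this sketch.
SENSITIVE = {"popf", "sgdt"}

def translate_block(block):
    """Rewrite a guest code block: safe instructions pass through unchanged,
    sensitive ones become explicit calls into the VMM's emulation routine."""
    translated = []
    for instr in block:
        if instr[0] in SENSITIVE:
            translated.append(("call_vmm", instr))
        else:
            translated.append(instr)
    return translated

class TranslationCache:
    """Remembers translated blocks by guest address so each is translated once."""
    def __init__(self):
        self._cache = {}
        self.misses = 0

    def get(self, addr, block):
        if addr not in self._cache:
            self.misses += 1
            self._cache[addr] = translate_block(block)
        return self._cache[addr]

block = [("mov", "eax", 1), ("popf",), ("add", "eax", 2)]
cache = TranslationCache()
first = cache.get(0x1000, block)
second = cache.get(0x1000, block)   # served from the cache, no retranslation
print(first)
```

Because the rewrite happens before execution, the sensitive instruction never reaches the physical CPU, so it no longer matters that it would not have trapped.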

For the guest operating system, the VMM must fully emulate the underlying hardware, including the processor, memory, clock, I/O devices, and interrupts. In other words, the VMM "emulates" an entire computer in pure software for the guest operating system to use.

This technique of emulating a complete computer is called full virtualization. Its benefit is obvious: the guest operating system is unaware that it is running in a virtual machine and can be installed as-is, with no code changes. The drawback is equally easy to guess: with everything emulated in software and instructions translated before execution, performance suffers!

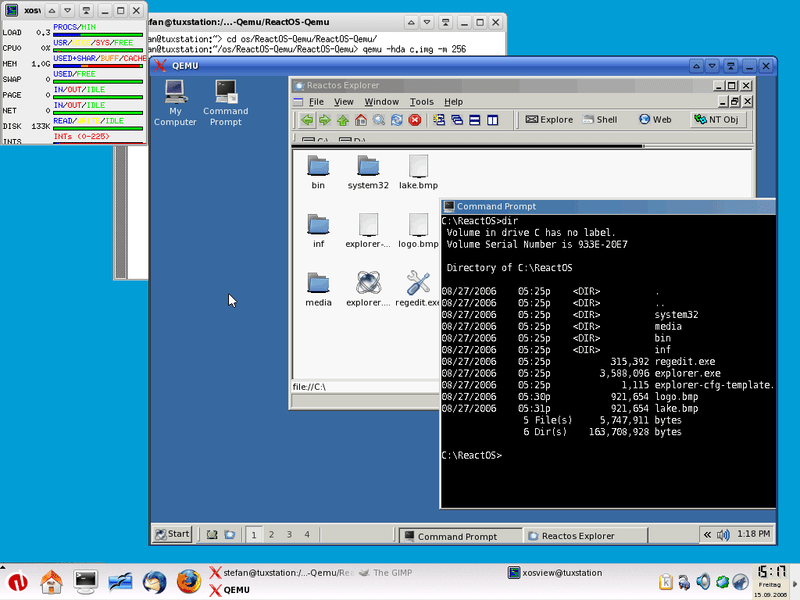

QEMU is emulation done entirely in software. At first glance it looks similar to VMware, but the essence is quite different. VMware translates the original CPU instruction sequence into a processed instruction sequence and executes that, whereas QEMU emulates the execution of the entire CPU instruction set, which is closer to "interpreted execution". The two also differ in performance.

Paravirtualization: Xen and customized guest kernels

The counterpart of full virtualization is paravirtualization. As mentioned earlier, because of sensitive instructions, a fully virtualizing VMM has to capture these instructions and emulate their execution in full, so that the guest operating system's needs are met without affecting the physical computer.

That is easy to say, but the emulation is actually quite complicated: it involves a great deal of low-level technology and is both time-consuming and laborious.

Now imagine changing every place in the operating system that executes a sensitive instruction into an interface call (a hypercall), with the VMM, as the provider of that interface, doing the corresponding work. This removes much of the effort of capturing instructions and emulating hardware, and performance improves greatly.

This is paravirtualization, and its best-known representative is Xen, an open source project born in 2003.

The biggest problem with this approach is that the operating system's source code must be modified and adapted. For open source software like Linux this is acceptable; at worst it means more work. But for a closed-source commercial operating system like Windows, modifying the code is little more than a pipe dream.
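The hypercall idea can be sketched as a guest kernel that calls the VMM directly instead of issuing a sensitive instruction. This is a minimal toy model, not Xen's actual interface: `ToyHypervisor`, `ParavirtGuestKernel`, and the `update_page_table` hypercall name are all hypothetical.

```python
class ToyHypervisor:
    """Hypothetical VMM exposing a small table of hypercalls."""
    def __init__(self):
        self.page_tables = {}   # guest id -> {virtual address: physical address}
        self.calls = 0

    def hypercall(self, name, *args):
        self.calls += 1
        handler = getattr(self, "_hc_" + name)   # look up the handler by name
        return handler(*args)

    def _hc_update_page_table(self, guest_id, virt, phys):
        self.page_tables.setdefault(guest_id, {})[virt] = phys
        return 0

class ParavirtGuestKernel:
    """Guest kernel whose source has been modified to call the VMM directly."""
    def __init__(self, guest_id, hv):
        self.guest_id = guest_id
        self.hv = hv

    def map_page(self, virt, phys):
        # An unmodified kernel would write the page table with a
        # privileged instruction here; the modified one asks the VMM.
        return self.hv.hypercall("update_page_table", self.guest_id, virt, phys)

hv = ToyHypervisor()
guest = ParavirtGuestKernel(guest_id=1, hv=hv)
status = guest.map_page(0x4000, 0x9000)
print(status, hv.page_tables)
```

The `map_page` method is the kind of place where the guest's source code has to change, which is why this approach suits open source kernels and not closed-source ones.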

Hardware-assisted virtualization: VT / AMD-V

All this trouble arose because x86 CPUs did not natively support the classic virtualization model, so software vendors had to devise other ways to implement virtualization on x86.

What if we went a step further and the CPU itself added support for virtualization?

Not long after software vendors had worked so hard to virtualize the x86 platform, processor vendors also recognized the broad market for virtualization and introduced hardware-level support for it, which set off the rapid development of virtualization technology.

The best known are Intel's VT family and AMD's AMD-V family.
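On Linux, one common way to see whether a CPU advertises these extensions is to look for the `vmx` (Intel) or `svm` (AMD) flag in `/proc/cpuinfo`. The helper below is a small sketch of that check; the function names are made up for this example, and it reports nothing useful on systems without `/proc/cpuinfo`.

```python
from typing import Optional

def hw_virt_support(cpuinfo_text: str) -> Optional[str]:
    """Return 'Intel VT-x', 'AMD-V', or None based on /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None

def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the CPU description, or return '' where the file does not exist."""
    try:
        with open(path) as f:
            return f.read()
    except OSError:
        return ""

sample = "processor\t: 0\nflags\t\t: fpu vme de pse msr vmx sse2\n"
print(hw_virt_support(sample))          # Intel VT-x
print(hw_virt_support(read_cpuinfo()))  # depends on the machine running this
```

Note that a flag being present only says the CPU supports the feature; firmware settings can still disable it.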

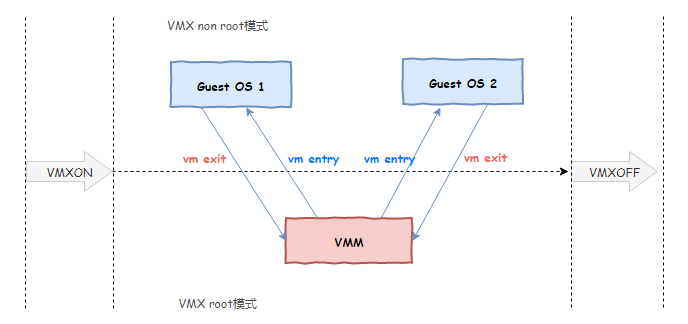

The details of hardware-assisted virtualization are complex. Put simply, on top of the original four rings (Ring0 to Ring3), the new generation of CPUs introduced the concept of an operating mode. There are two modes, VMX root operation and VMX non-root operation, and each has its own complete set of four rings. The VMM runs in the former, and the guest operating system runs in the latter.

The level at which the VMM runs is sometimes called Ring -1. Through a programming interface provided by the CPU, the VMM can configure which instructions are intercepted, and thereby control the guest operating system.

In other words, to control the code running in the virtual machine, the VMM used to act as a "middleman" that translated and executed instructions. Now the new CPU tells the VMM: no need to go to all that trouble. Tell me in advance which instructions and events you are interested in, and I will notify you when those instructions are executed or those events occur, so you can take control. With full support at the hardware level, performance is naturally much higher.
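The "tell me in advance, I will notify you" contract can be modeled in a few lines. The sketch below is a toy, not the real VMX programming interface: `ToyVirtCPU`, `configure_exits`, and the instruction names are hypothetical, and the real mechanism uses hardware control structures instead of Python sets.

```python
class ToyVirtCPU:
    """Hypothetical CPU with root and non-root modes (not the real VMX interface)."""
    def __init__(self):
        self.exit_on = set()

    def configure_exits(self, events):
        # Root mode (VMM): declare which guest instructions hand control back.
        self.exit_on = set(events)

    def run_guest(self, instructions, start=0):
        # Non-root mode: the guest runs unobserved until an instruction
        # the VMM registered interest in is reached.
        for pc in range(start, len(instructions)):
            if instructions[pc] in self.exit_on:
                return ("vm_exit", pc, instructions[pc])
        return ("done", len(instructions), None)

def vmm_loop(cpu, instructions):
    """Enter the guest repeatedly, handling each exit, until the guest finishes."""
    handled = []
    pc = 0
    while True:
        reason, pc, instr = cpu.run_guest(instructions, pc)
        if reason == "done":
            return handled
        handled.append(instr)   # the VMM emulates the intercepted instruction...
        pc += 1                 # ...then resumes the guest just after it

cpu = ToyVirtCPU()
cpu.configure_exits({"cpuid", "hlt"})
exits = vmm_loop(cpu, ["mov", "cpuid", "add", "mov", "hlt"])
print(exits)  # ['cpuid', 'hlt']
```

The VMM's code runs only at the two exits; every other guest instruction proceeds without software involvement, which is where the performance gain comes from.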

This is only a simplified picture of hardware-assisted virtualization. In practice it includes more elements and gives the VMM more help, including memory virtualization and I/O virtualization. This greatly simplifies VMM design and development, spares the VMM the expensive cost of emulated execution, and substantially improves overall virtualization performance.

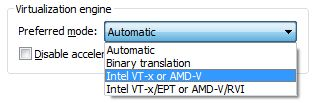

VMware introduced support for hardware-assisted virtualization in version 5.5 and fully supported it in version 8.0 in 2011. As a result, when creating a virtual machine we can choose which virtualization engine to use: the original binary translation or the newer hardware-assisted approach.

Around the same time, Xen added support for hardware-assisted virtualization in version 3.0. From then on, Xen-based virtual machines could also run Windows.

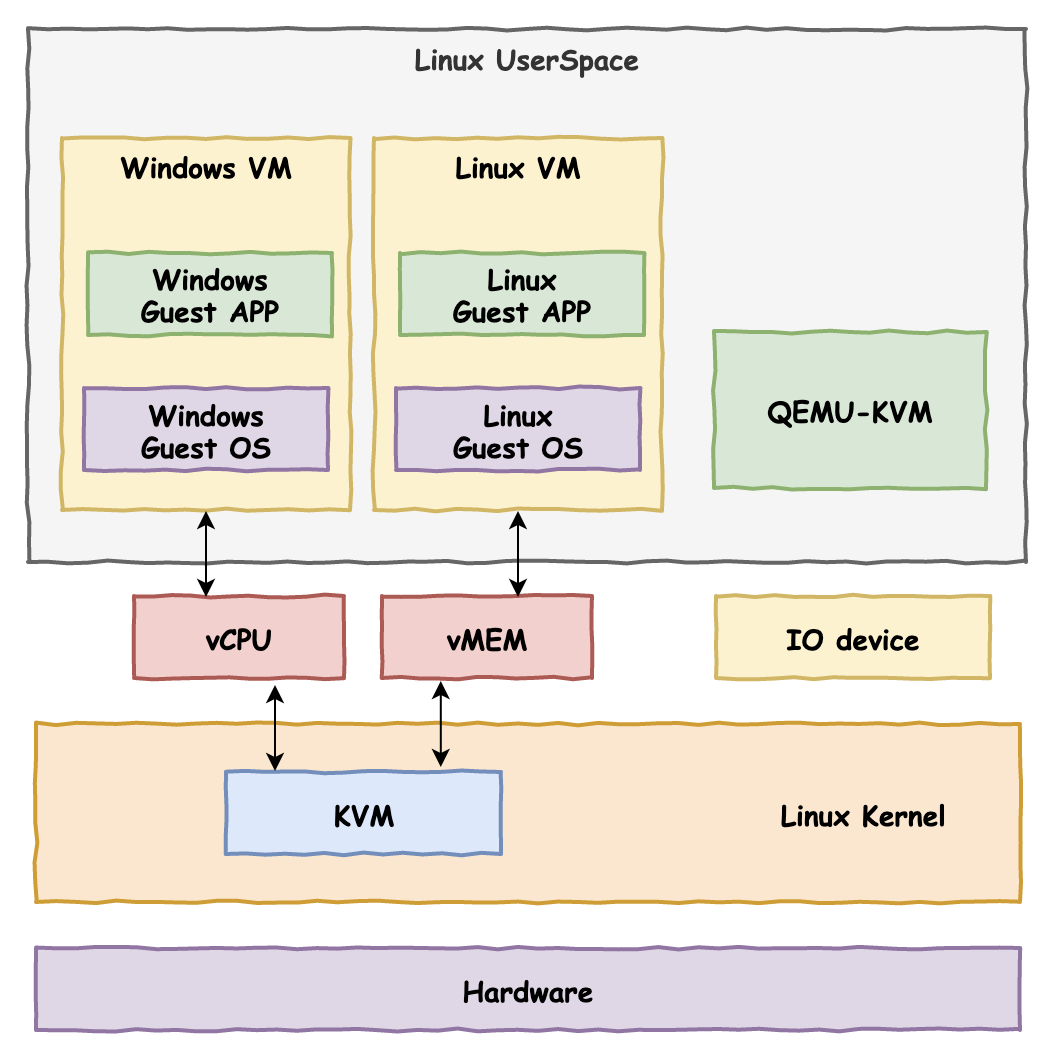

KVM-QEMU

With hardware-assisted virtualization in place, virtualization technology took off. VirtualBox, Hyper-V, KVM, and others appeared one after another. The one that made its name in cloud computing is undoubtedly the open source KVM.

KVM stands for Kernel-based Virtual Machine.

In terms of underlying technology, KVM, like later versions of VMware, is based on hardware-assisted virtualization. The difference is that VMware is standalone third-party software that can be installed on many operating systems, such as Linux, Windows, and macOS, whereas KVM is built into the Linux kernel. You can think of the Linux kernel itself as a hypervisor, which is where the name KVM comes from, so this technology can only be used on Linux servers.

KVM is often used together with QEMU in what is called the KVM-QEMU architecture. As mentioned earlier, before hardware-assisted virtualization arrived on x86 CPUs, QEMU already achieved virtualization entirely through software emulation, but execution performance under that scheme was very low.

KVM, being based on hardware-assisted virtualization, virtualizes only the CPU and memory. But a computer needs more than a CPU and memory; it also needs various I/O devices, which KVM does not handle. This is where QEMU and KVM team up: a modified QEMU takes care of virtualizing peripheral devices, while KVM takes care of the underlying execution engine and memory. The two complement each other and have become the darling of the new generation of cloud virtualization solutions.
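The division of labor can be sketched as two cooperating pieces: a kernel-side function that runs guest code until it touches a device, and a user-space loop that services the device access and re-enters the guest. This is a toy model, not the actual KVM API: `kernel_run`, `userspace_loop`, and `ToySerialPort` are hypothetical names, and using port `0x3F8` as a serial port is only a convention borrowed for the example.

```python
class ToySerialPort:
    """User-space device model (the QEMU role in this sketch)."""
    def __init__(self):
        self.output = []

    def write(self, value):
        self.output.append(chr(value))

def kernel_run(vcpu_program, pc):
    """The KVM role: run guest code until it touches a device.
    Returns ('io', next_pc, port, value) or ('halt', next_pc, None, None)."""
    while pc < len(vcpu_program):
        op = vcpu_program[pc]
        pc += 1
        if op[0] == "out":
            return ("io", pc, op[1], op[2])
        # Everything else is assumed to run directly on the CPU.
    return ("halt", pc, None, None)

def userspace_loop(vcpu_program, devices):
    """The QEMU role: re-enter the guest, servicing each device access."""
    pc = 0
    while True:
        reason, pc, port, value = kernel_run(vcpu_program, pc)
        if reason == "halt":
            return
        devices[port].write(value)   # device emulation happens in user space

serial = ToySerialPort()
program = [("out", 0x3F8, ord("h")), ("add",), ("out", 0x3F8, ord("i"))]
userspace_loop(program, {0x3F8: serial})
print("".join(serial.output))  # hi
```

Keeping device models in user space means the kernel component stays small, while the large and varied body of device emulation code lives in an ordinary process.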

Container technology: LXC & Docker

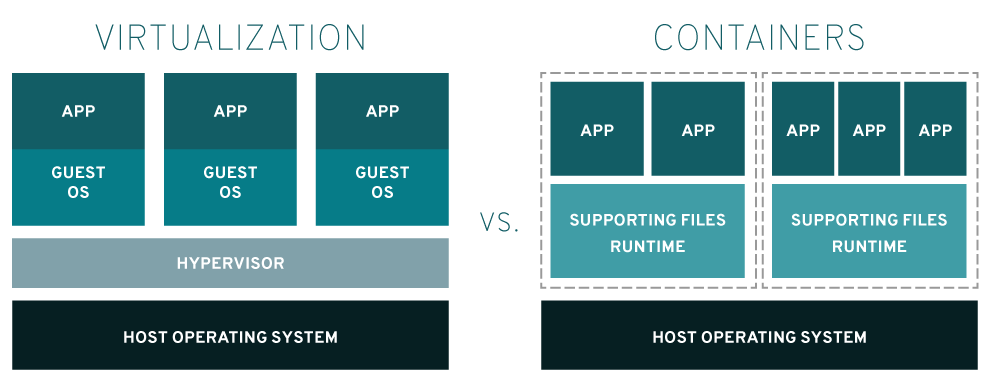

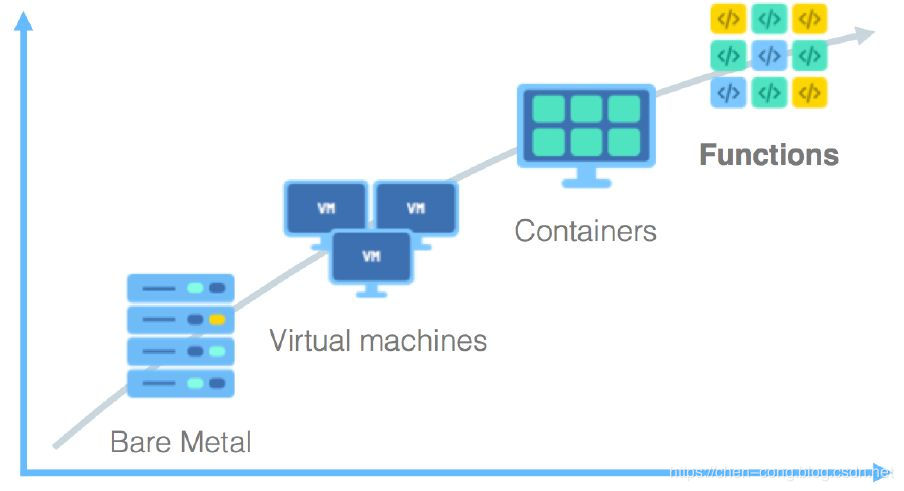

Whether it is full virtualization based on translation and emulation, paravirtualization, or full virtualization backed by CPU hardware, the goal is the same: to virtualize a complete computer, with a full environment of underlying physical hardware, operating system, and applications.

To make programs in the virtual machine behave "approximately" as if they were running on a real physical machine, the hypervisor behind the scenes does a great deal of work and pays a "heavy" price.

But for all the hypervisor's effort, has anyone asked the program in the virtual machine whether this is what it wants? Perhaps the hypervisor gives too much, and the program's reply is: you really don't have to work so hard.

That is indeed sometimes the case. The program in the virtual machine says: I just want an isolated execution environment; there is no need to go to the trouble of virtualizing a complete computer.

What are the benefits of this?

Which costs more: virtualizing a whole computer, or virtualizing just an isolated environment for running programs? Obviously the former. A physical machine may start to strain when hosting 10 virtual machines at once, yet it can comfortably host 100 virtual execution environments, which is a huge gain in resource utilization.

Container technology, which has been so popular in recent years, was born from this idea.

Unlike virtualization that creates a complete computer, container technology is more like virtualization at the operating system level: it only needs to virtualize an operating system environment.

LXC is a typical representative of this approach. Its full name is Linux Containers. Using the Linux kernel's cgroups and namespace features, it isolates operating system files, networking, and other resources, and carves out a separate space on the native operating system in which the application runs. This space resembles a container holding the application, hence the name container technology.
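On a Linux system, the namespaces a process belongs to are visible as symbolic links under `/proc/self/ns`. The sketch below lists them for the current process; two processes in the same container typically show the same identifiers, while a process on the host shows different ones. The function name `current_namespaces` is made up for this example, and it returns an empty result on systems without `/proc`.

```python
import os

def current_namespaces(ns_dir="/proc/self/ns"):
    """Map namespace type -> identifier for the calling process (Linux only).
    Returns an empty dict where the directory is not available."""
    result = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        return result
    for name in sorted(names):
        try:
            # Each entry is a symlink whose target names the namespace instance.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result

for name, ident in current_namespaces().items():
    print(name, ident)
```

Running this once on the host and once inside a container is a quick way to see that isolation here is a matter of kernel bookkeeping, not of separate hardware.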

To use an imperfect analogy: a sublessor converts a three-bedroom house into three one-bedroom apartments, each with its own bathroom and kitchen. To the people living in them, each is a complete home.

Docker, now popular at all the major tech companies, is not fundamentally different from LXC in its underlying principles. In its early days Docker was in fact a high-level wrapper built directly on LXC. Docker goes a step further than LXC by packaging the components and dependencies of the execution environment into self-contained units, which makes porting and deployment more convenient.

The advantage of container technology is that it is lightweight. All program instructions in the isolated space execute directly on the CPU with no translation. All containers share the same operating system underneath, and the separate spaces are created through logical isolation at the software level.

The disadvantage of container technology is that it is less secure than virtualization. After all, software-level isolation is much weaker than hardware-level isolation. The isolated environment shares the same operating system kernel as the host, so if a kernel vulnerability is exploited, a program can break out of the container and endanger the host, at which point security is gone.

Ultra-lightweight virtualization: Firecracker

Virtualizing a complete computer gives good isolation but is too heavyweight; plain container technology is lightweight, but relying on software isolation alone is not secure enough. Is there a compromise that combines the strengths of both, achieving light weight and security at the same time?

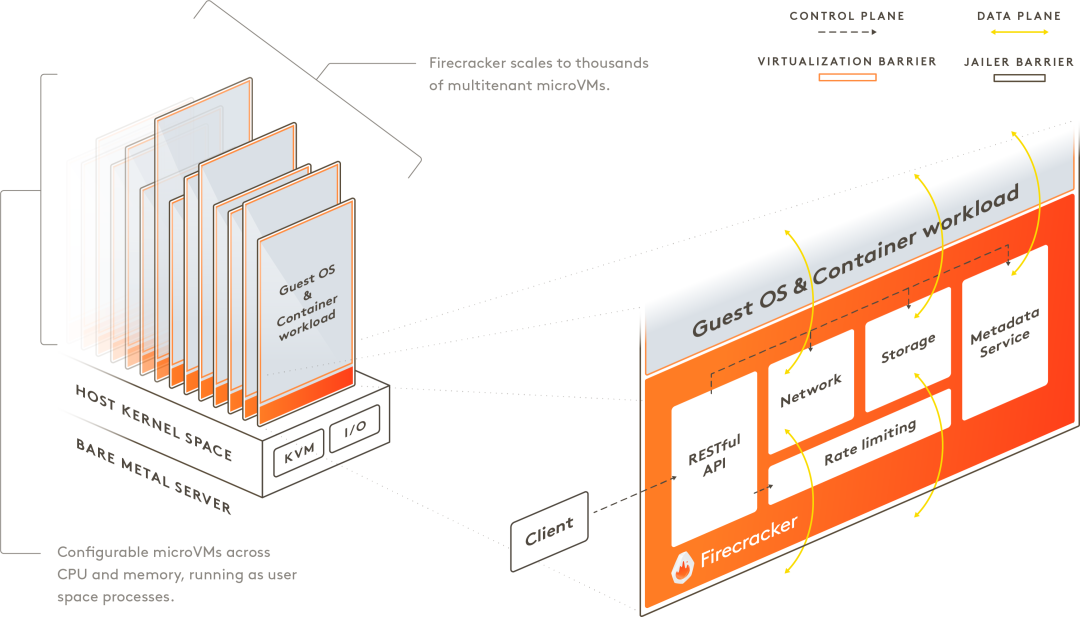

In recent years, the idea of ultra-lightweight virtualization has become popular, and Amazon's Firecracker is a typical representative.

Firecracker combines the strong isolation of virtualization with the light weight of containers and introduces the concept of a microVM. Underneath, it uses KVM to isolate each microVM strongly, and each isolated virtual machine runs a stripped-down micro operating system, one with many unneeded features removed and designed for containers.

Ultra-lightweight virtualization has become a new wave. Besides AWS's Firecracker, Google's gVisor and the Intel-led NEMU are also working in this area.

Summary

This article briefly introduced the basic concepts and requirements of virtualization technology. It then explained that, because the early x86 architecture did not support the classic virtualization model, software vendors could only implement virtualization in software. Representatives of that period were the early VMware Workstation and Xen.

However, software-only solutions inevitably hit performance bottlenecks. Fortunately, Intel and AMD introduced virtualization support at the CPU hardware level, and software vendors quickly adapted, which greatly improved virtualization performance. Representatives of this period include newer versions of VMware Workstation, Hyper-V, and KVM.

In recent years, with the growth of cloud computing and microservices, the granularity of virtualization has gradually moved from coarse to fine: from virtualizing a complete computer at first, to virtualizing only an operating system, and then to virtualizing just the environment a microservice needs. Container technology, represented by Docker, has shone in this period.

Technology always evolves with the needs of the market. What do you think the future of virtualization holds? Feel free to leave a message in the comments.