1. Set up ZooKeeper first, then HBase. When setting up HBase:

HBASE_MANAGES_ZK in hbase-env.sh must be set to false, not true. HBase ships with its own bundled ZooKeeper, and since we are running a separately installed ZooKeeper, HBase's built-in one has to be turned off.
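If you would rather script this edit than open the file by hand, here is a minimal Python sketch. The function name disable_bundled_zookeeper is hypothetical. It rewrites any existing HBASE_MANAGES_ZK line (commented out or not) to false, and appends the setting if the file has none.

```python
import re

# Matches an HBASE_MANAGES_ZK line whether or not it is commented out,
# e.g. "# export HBASE_MANAGES_ZK=true" or "export HBASE_MANAGES_ZK=true".
_MANAGES_ZK = re.compile(r"^[ \t]*#?[ \t]*export[ \t]+HBASE_MANAGES_ZK=.*$", re.MULTILINE)
_SETTING = "export HBASE_MANAGES_ZK=false"


def disable_bundled_zookeeper(env_sh: str) -> str:
    """Return hbase-env.sh text with HBASE_MANAGES_ZK forced to false."""
    if _MANAGES_ZK.search(env_sh):
        # Rewrite every existing occurrence in place.
        return _MANAGES_ZK.sub(_SETTING, env_sh)
    # No occurrence at all: append the setting as a new last line.
    if env_sh and not env_sh.endswith("\n"):
        env_sh += "\n"
    return env_sh + _SETTING + "\n"
```

To apply it, read hbase-env.sh (under HBase's conf directory), pass the text through the function, and write the result back on each HBase node.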

2. Some packages (Hadoop and Spark, for example) have both a bin and an sbin directory, so add both of them when configuring environment variables!!!

export SPARK_HOME=/usr/local/spark

export PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin
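The same idea as a small Python helper, assuming POSIX paths with ":" as the separator. The function name is hypothetical. It appends both <home>/sbin and <home>/bin and skips entries already on PATH, so running it twice does not add duplicates.

```python
import posixpath


def path_with_bin_and_sbin(path: str, home: str) -> str:
    """Append <home>/sbin and <home>/bin to a POSIX PATH string, skipping duplicates."""
    entries = [p for p in path.split(":") if p]
    for sub in ("sbin", "bin"):
        directory = posixpath.join(home, sub)
        if directory not in entries:
            entries.append(directory)
    return ":".join(entries)


# Matches export PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin when neither dir is on PATH yet.
print(path_with_bin_and_sbin("/usr/bin:/bin", "/usr/local/spark"))
# prints /usr/bin:/bin:/usr/local/spark/sbin:/usr/local/spark/bin
```

One thing to watch: Hadoop's sbin and Spark's sbin both contain a start-all.sh, so whichever directory comes first on PATH wins. Calling those scripts by their full path avoids surprises.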

3. Configure Spark. This is the big job!

You will see an error like this:

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1116)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1051)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:399)

Don't panic at the long list of "at ..." lines. In my case it meant Python could not find a library it needed; this is the kind of error reported when the library is missing.

First check whether the Java versions are the same: java -version and javac -version should report the same version.

If they are not the same, see: https://blog.csdn.net/Vast_Wang/article/details/81249038
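A rough Python version of this check, assuming java and javac are both on PATH. The helper names are hypothetical. java -version prints to stderr, and javac -version prints to stderr or stdout depending on the JDK release, so both streams are read.

```python
import re
import subprocess
from typing import List, Optional


def java_runtime_version(output: str) -> Optional[str]:
    """Extract the version from java -version output, e.g. 'java version "1.8.0_181"'."""
    m = re.search(r'version "([^"]+)"', output)
    return m.group(1) if m else None


def javac_version(output: str) -> Optional[str]:
    """Extract the version from javac -version output, e.g. 'javac 1.8.0_181'."""
    m = re.search(r"javac\s+(\S+)", output)
    return m.group(1) if m else None


def _run(cmd: List[str]) -> str:
    # Read both streams: java reports on stderr, javac on stderr or stdout
    # depending on the JDK release.
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stdout + result.stderr


def java_versions_match() -> bool:
    runtime = java_runtime_version(_run(["java", "-version"]))
    compiler = javac_version(_run(["javac", "-version"]))
    print(f"java: {runtime}  javac: {compiler}")
    return runtime is not None and runtime == compiler
```

Call java_versions_match() on each node; it raises FileNotFoundError if either command is missing from PATH.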

Then run pyspark on its own first. If it reports an error, the cause is generally that a local library cannot be found: make sure Anaconda (or whatever Python distribution and libraries you use) is installed on every node, and that its environment variables are configured on every node too.
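To check the "library not found" case on a node, a small script like the one below can be run with the same Python that PySpark uses. It is only a sketch: the module names you pass in (for example numpy or pandas) are placeholders for whatever your jobs import, and PYSPARK_PYTHON is printed just to show which interpreter Spark has been told to use.

```python
import importlib.util
import os
import sys
from typing import List


def missing_modules(names: List[str]) -> List[str]:
    """Return the top-level module names this interpreter cannot find."""
    # find_spec only checks whether a top-level module can be located; it does not import it.
    return [name for name in names if importlib.util.find_spec(name) is None]


def report(names: List[str]) -> None:
    # Show which interpreter is running and which one Spark has been pointed at.
    print("python executable:", sys.executable)
    print("PYSPARK_PYTHON:", os.environ.get("PYSPARK_PYTHON", "(not set)"))
    missing = missing_modules(names)
    print("missing modules:", ", ".join(missing) if missing else "none")
```

For example, report(["numpy", "pandas"]) on each node shows at a glance which node is missing what.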

Then run: HADOOP_CONF_DIR=/usr/local/bd/hadoop/etc/hadoop pyspark --master yarn --deploy-mode client
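If you would rather launch this from a Python script, the command and environment can be assembled as below. This is a sketch, not part of the original setup: the function names are hypothetical, and the config directory is the one used above. Spark reads HADOOP_CONF_DIR to locate the cluster's client-side configuration, including the YARN ResourceManager address in yarn-site.xml.

```python
import os
from typing import Dict, List, Optional, Tuple


def missing_conf_files(conf_dir: str) -> List[str]:
    """Return which of core-site.xml / yarn-site.xml are absent from conf_dir."""
    return [name for name in ("core-site.xml", "yarn-site.xml")
            if not os.path.isfile(os.path.join(conf_dir, name))]


def pyspark_on_yarn(hadoop_conf_dir: str,
                    base_env: Optional[Dict[str, str]] = None) -> Tuple[List[str], Dict[str, str]]:
    """Build argv and environment for: pyspark --master yarn --deploy-mode client."""
    env = dict(os.environ if base_env is None else base_env)
    # Equivalent to prefixing the shell command with HADOOP_CONF_DIR=...
    env["HADOOP_CONF_DIR"] = hadoop_conf_dir
    cmd = ["pyspark", "--master", "yarn", "--deploy-mode", "client"]
    return cmd, env
```

For example, cmd, env = pyspark_on_yarn("/usr/local/bd/hadoop/etc/hadoop") followed by subprocess.run(cmd, env=env) starts the shell, provided pyspark is on PATH.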

If that also reports an error, and the error looks like this:

18/08/09 16:02:46 ERROR cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Sending RequestExecutors(0,0,Map(),Set()) to AM was unsuccessful

java.io.IOException: Failed to send RPC 8631251511338434358 to

java.nio.channels.ClosedChannelException

The solution is to edit yarn-site.xml and add the following properties:

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>
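If you would rather not edit the XML by hand on every node, the same two properties can be set with Python's standard xml.etree.ElementTree. A minimal sketch with a hypothetical set_properties helper: it updates a property if its name already exists and appends it otherwise. ElementTree does not keep comments or the XML declaration when writing the document back out, so keep a backup of the original file.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# The two NodeManager memory checks that the properties above turn off.
MEMORY_CHECKS_OFF = {
    "yarn.nodemanager.pmem-check-enabled": "false",
    "yarn.nodemanager.vmem-check-enabled": "false",
}


def set_properties(xml_text: str, props: Dict[str, str]) -> str:
    """Set or add <property> entries in a Hadoop-style <configuration> document."""
    root = ET.fromstring(xml_text)
    by_name = {(p.findtext("name") or "").strip(): p for p in root.findall("property")}
    for name, value in props.items():
        prop = by_name.get(name)
        if prop is None:
            # Not present yet: append a new <property> with a <name> child.
            prop = ET.SubElement(root, "property")
            ET.SubElement(prop, "name").text = name
        value_el = prop.find("value")
        if value_el is None:
            value_el = ET.SubElement(prop, "value")
        value_el.text = value
    return ET.tostring(root, encoding="unicode")
```

Usage: set_properties(text, MEMORY_CHECKS_OFF) on the contents of yarn-site.xml, then write the returned string back to the file.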

Be sure this change is made on every node, then shut down the cluster, start the cluster again, and start Spark.
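Since the change has to reach every node, here is a dry-run sketch that lists the commands: copy yarn-site.xml from the master to each worker with scp, then restart HDFS and YARN with Hadoop's own sbin scripts. The hostnames node1/node2 are hypothetical, and the Hadoop directory is the one from the pyspark command above. It only prints the plan; once it looks right, each argv list could be passed to subprocess.run(..., check=True), and then pyspark started again as before.

```python
from typing import List


def restart_plan(hadoop_home: str, workers: List[str]) -> List[List[str]]:
    """Argv lists that copy yarn-site.xml to each worker, then restart HDFS and YARN."""
    conf = f"{hadoop_home}/etc/hadoop/yarn-site.xml"
    sbin = f"{hadoop_home}/sbin"
    # Push the edited file from this (master) node to the same path on every worker.
    plan = [["scp", conf, f"{host}:{conf}"] for host in workers]
    # Stop and start with Hadoop's own scripts, called by full path.
    plan += [
        [f"{sbin}/stop-yarn.sh"],
        [f"{sbin}/stop-dfs.sh"],
        [f"{sbin}/start-dfs.sh"],
        [f"{sbin}/start-yarn.sh"],
    ]
    return plan


if __name__ == "__main__":
    # Dry run with hypothetical worker hostnames: print the plan, run nothing.
    for argv in restart_plan("/usr/local/bd/hadoop", ["node1", "node2"]):
        print(" ".join(argv))
```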

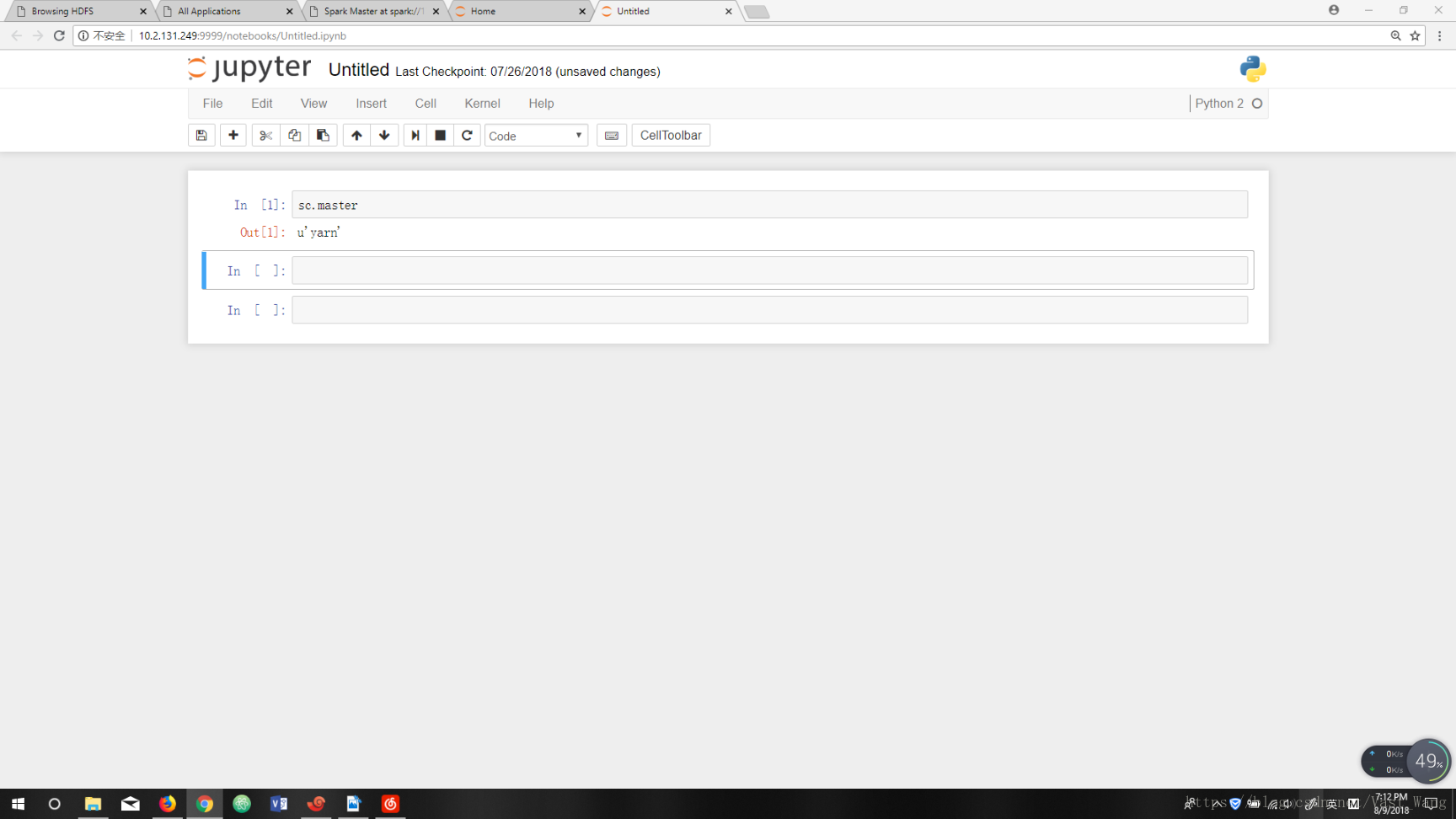

In the end, it works!