Forward maximum matching algorithm for Chinese word segmentation

Theoretical background

Word segmentation is a basic task in natural language processing (NLP). Unlike English, written Chinese has no spaces between words, so segmentation is the foundation of Chinese text mining: a computer must first split a sentence into words before it can work out what the sentence means. A person can tell which character sequences are words from their own knowledge, but a computer needs an explicit procedure for this, and that procedure is a word segmentation algorithm.

Chinese word segmentation methods can be roughly grouped into three categories:

- Dictionary-based methods

- Statistics-based methods

- Sequence-labeling-based methods

Of these, dictionary-based segmentation is the simplest. It includes three algorithms:

- Forward maximum matching (FMM)

- Backward maximum matching (BMM)

- Bidirectional maximum matching

The ideas behind all three are simple. In this post I implement the forward maximum matching algorithm in Python.
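For contrast with the forward version implemented in this post, here is a minimal sketch of backward maximum matching. The function name backward_max_match and the toy dictionary are illustrative assumptions, not part of the original code. The scan starts at the right end of the sentence, tries the longest window ending there, and shrinks that window from the left until it hits a dictionary word or a single character.

```python
def backward_max_match(text, words, max_len):
    """Segment text right to left, taking the longest dictionary match each time."""
    word_set = set(words)
    max_len = max(1, max_len)  # a single character must always be allowed
    result = []
    end = len(text)
    while end > 0:
        # Try the longest window that ends at `end`, shrinking it from the left
        for size in range(min(max_len, end), 0, -1):
            piece = text[end - size:end]
            # A single character is accepted even if it is not in the dictionary
            if size == 1 or piece in word_set:
                break
        result.append(piece)
        end -= size
    # Words were collected right to left, so restore reading order
    result.reverse()
    return result


# Hypothetical toy dictionary chosen to show an ambiguity
toy_words = ['研究', '研究生', '生命', '命', '起源']
print(backward_max_match('研究生命起源', toy_words, max_len=3))
```

This prints ['研究', '生命', '起源']. With the same dictionary and maximum length, forward matching produces ['研究生', '命', '起源'], so the two directions can disagree; the bidirectional method runs both and chooses between the two results.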

word is a distributed Chinese word segmentation component implemented in Java. It provides several dictionary-based segmentation algorithms and uses an n-gram model to resolve ambiguity. It can accurately recognize English words, numbers, and quantities such as dates and times, and it can recognize out-of-vocabulary words such as the names of people, places, and organizations. Its behavior can be changed through a custom configuration file: it supports custom user dictionaries, detects dictionary changes automatically, runs in large-scale distributed environments, lets the user choose among its segmentation algorithms, and offers a refine function for fine control over the results. It also supports part-of-speech tagging, synonym tagging, antonym tagging, pinyin tagging, and more, and it integrates with Lucene, Solr, Elasticsearch, and Luke.

Forward maximum matching algorithm

The forward maximum matching algorithm, as the name suggests, scans the sentence from left to right and at each position takes the longest dictionary word that matches. We first fix a maximum word length. At each step we take that many characters from the front of the remaining text and look them up in the dictionary. If there is no match, we drop the last character and look again, until either the candidate is a dictionary word or only a single character remains, in which case that character is taken as a word on its own. The matched word is then removed from the front of the text and the process repeats on the rest.
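To make the shrinking step concrete, the hypothetical helper trace_longest_match below performs one matching step at the start of a string and records every candidate window it tries. The three-word dictionary is a toy subset of the one used later in this post, and the maximum length of 8 is the length of 'Logistic'.

```python
def trace_longest_match(text, words, max_len):
    """Return the word FMM picks at the start of text, plus every window tried."""
    word_set = set(words)
    tried = []
    for size in range(min(max_len, len(text)), 0, -1):
        window = text[:size]
        tried.append(window)
        # Stop at the first dictionary hit, or fall back to a single character
        if size == 1 or window in word_set:
            return window, tried
    return '', tried  # reached only for empty text or max_len < 1


match, tried = trace_longest_match('混沌Logistic加密', ['混沌', 'Logistic', '加密'], max_len=8)
for window in tried:
    print(window)
print('match:', match)
```

The windows shrink one character at a time, from '混沌Logist' down to '混沌', which is the first one found in the dictionary.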

Code implementation

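The segmentation helper getSeg(text):

```python
def getSeg(text):
    # Empty sentence: nothing to match
    if not text:
        return ''
    # Only one character left: accept it as a word
    if len(text) == 1:
        return text
    # Recursive step: if the whole string is a dictionary word, return it;
    # otherwise drop the last character and try the shorter string
    if text in word_dict:
        return text
    else:
        small = len(text) - 1
        text = text[0:small]
        return getSeg(text)
```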

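The main function main():

```python
def main():
    global test_str, word_dict
    test_str = test_str.strip()
    # Forward maximum matching test; the maximum word length is the length
    # of the longest word in the dictionary
    max_len = max(len(word) for word in word_dict)
    result_str = []  # holds the segmentation result
    print('input :', test_str)
    while test_str:
        # Take at most max_len characters from the front of the remaining text
        tmp_str = test_str[0:max_len]
        seg_str = getSeg(tmp_str)
        seg_len = len(seg_str)
        # Keep the word unless it is pure whitespace
        if seg_str.strip():
            result_str.append(seg_str)
        # Remove the matched word and continue with the rest of the sentence
        test_str = test_str[seg_len:]
    print('output :', result_str)
```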
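main() relies on global variables and looks words up in a list, which scans the whole list on every lookup. As a sketch of one alternative design (not the author's code), the same forward maximum matching can be packaged as a pure function that takes the dictionary as an argument and converts it to a set; the name forward_max_match is introduced here for illustration. The example reuses the dictionary and test sentence given in this post.

```python
def forward_max_match(text, words):
    """Segment text left to right, taking the longest dictionary match each time."""
    word_set = set(words)  # average O(1) lookups instead of scanning a list
    # Longest dictionary entry; at least 1 so a single character can always match
    max_len = max([1] + [len(w) for w in word_set])
    result = []
    start = 0
    while start < len(text):
        # Longest window first, shrinking by one character until a match
        for size in range(min(max_len, len(text) - start), 0, -1):
            piece = text[start:start + size]
            # A single character is accepted even if it is not in the dictionary
            if size == 1 or piece in word_set:
                break
        if piece.strip():  # skip pure whitespace, as main() does
            result.append(piece)
        start += size
    return result


words = ['混沌', 'Logistic', '算法', '图片', '加密', '利用', '还原', 'Lena', '验证', 'Baboon', '效果']
sentence = '一种基于混沌Logistic加密算法的图片加密与还原的方法,并利用Lena图和Baboon图来验证这种加密算法的加密效果。'
print(forward_max_match(sentence, words))
```

On this input it returns ['一', '种', '基', '于', '混沌', 'Logistic', '加密', '算法', '的', '图片', '加密', '与', '还原', '的', '方', '法', ',', '并', '利用', 'Lena', '图', '和', 'Baboon', '图', '来', '验证', '这', '种', '加密', '算法', '的', '加密', '效果', '。']. Every dictionary word is found, and characters not covered by the dictionary (such as 的 and 方法) come out one character at a time.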

Dictionary:

```python
word_dict = ['混沌', 'Logistic', '算法', '图片', '加密', '利用', '还原', 'Lena', '验证', 'Baboon', '效果']
```

Test sentence:

```python
test_str = '''一种基于混沌Logistic加密算法的图片加密与还原的方法,并利用Lena图和Baboon图来验证这种加密算法的加密效果。'''

if __name__ == '__main__':
    main()
```

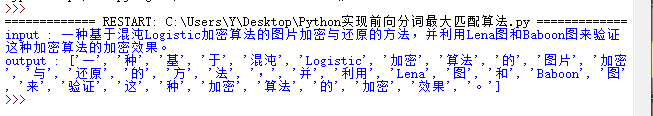

Word segmentation result