Spark upgrade

The CDH 5.12.1 cluster installs Spark 1.6 by default, and here it needs to be upgraded to Spark 2.1. According to the official documentation, Spark 1.6 and 2.x can be installed side by side: you can install 2.x directly without removing the default 1.6, because the two versions use different ports.

Cloudera has published an overview of Apache Spark 2, which includes the installation method and the parcel repository.

The parcel packages for offline installation can be downloaded from Cloudera's official website:

https://www.cloudera.com/documentation/spark2/latest/topics/spark2_installing.html

Introduction of Cloudera Manager and 5.12.0 version:

Upgrade process

1 Offline package download

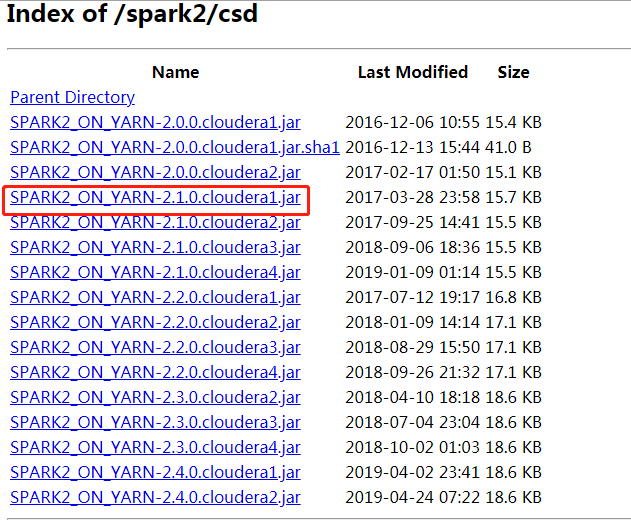

1) Required software: http://archive.cloudera.com/spark2/csd/

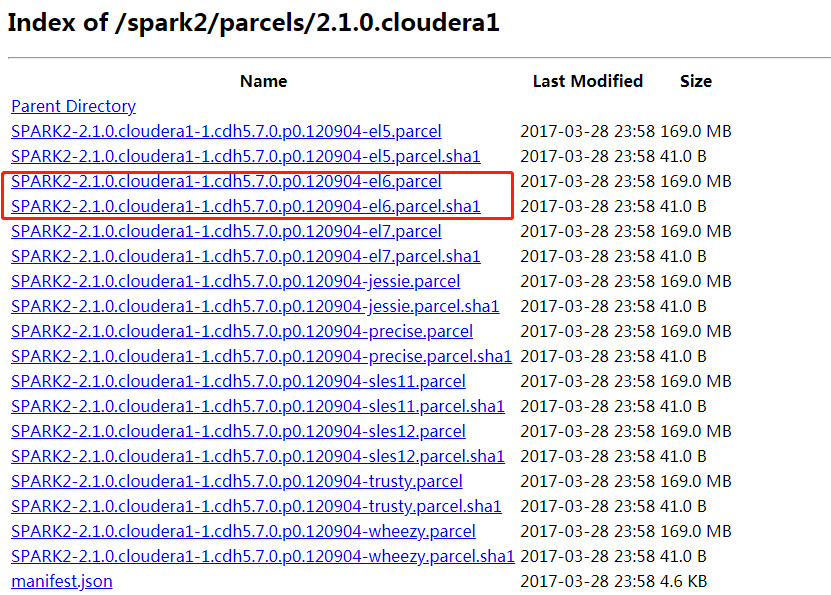

2) Parcel download location: http://archive.cloudera.com/spark2/parcels/2.1.0.cloudera1/
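
As a rough sketch of the download step, the three files used in the following steps can be listed as URLs and handed to `wget`. The base URLs and file names come from this post; joining them into full file URLs is an assumption, and the archive may not be reachable from every network. `spark2_urls` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the download URLs for the Spark2 CSD jar and the parcel files.
# The joined URLs are an assumption built from the directories listed above.
CSD_BASE="http://archive.cloudera.com/spark2/csd"
PARCEL_BASE="http://archive.cloudera.com/spark2/parcels/2.1.0.cloudera1"
PARCEL="SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel"

spark2_urls() {
  echo "${CSD_BASE}/SPARK2_ON_YARN-2.1.0.cloudera1.jar"
  echo "${PARCEL_BASE}/${PARCEL}"
  echo "${PARCEL_BASE}/${PARCEL}.sha1"
}

# Print the URLs. To download them into a scratch directory instead:
#   spark2_urls | wget -c -i - -P /tmp/spark2
spark2_urls
```

Pick the parcel whose suffix (`el6`, `el7`, ...) matches the operating system of the cluster hosts.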

2 Offline package upload

1) Upload the file SPARK2_ON_YARN-2.1.0.cloudera1.jar to /opt/cloudera/csd/

2) Upload the files SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel and SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel.sha1 to /opt/cloudera/parcel-repo/

3) Rename SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel.sha1 to SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel.sha

[root@hadoop101 parcel-repo]# mv /opt/cloudera/parcel-repo/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel.sha1 /opt/cloudera/parcel-repo/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel.sha
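
After the rename it can be worth checking that the parcel was not corrupted during upload. The sketch below compares the parcel's SHA-1 with the digest stored in the `.sha` file. `verify_parcel` is a hypothetical helper, not a Cloudera tool, and it assumes the `.sha` file starts with the hex digest.

```shell
#!/usr/bin/env bash
# Sketch: compare a parcel's SHA-1 with the hash stored next to it in <parcel>.sha.
# verify_parcel is a hypothetical helper name, not part of Cloudera Manager.
verify_parcel() {
  local parcel="$1"
  local expected actual
  # Take the first field only, in case a file name follows the digest.
  expected="$(awk '{print $1; exit}' "${parcel}.sha")" || return 2
  actual="$(sha1sum "$parcel" | awk '{print $1}')"
  if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "MISMATCH: $parcel" >&2
    return 1
  fi
}

# Usage with the path from the steps above:
#   verify_parcel /opt/cloudera/parcel-repo/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el6.parcel
```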

[kris@hadoop101 parcel-repo]$ ll

total 2673860

-rw-r--r-- 1 kris kris 364984320 Jul 2 2019 APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3-el7.parcel

-rw-r--r-- 1 kris kris 41 Jul 2 2019 APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3-el7.parcel.sha

-rw-r----- 1 root root 14114 Jul 2 2019 APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3-el7.parcel.torrent

-rw-r--r-- 1 cloudera-scm cloudera-scm 2108071134 Jun 27 2019 CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel

-rw-r--r-- 1 cloudera-scm cloudera-scm 41 Jun 27 2019 CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.sha

-rw-r----- 1 cloudera-scm cloudera-scm 80586 Jun 27 2019 CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.torrent

-rw-r--r-- 1 cloudera-scm cloudera-scm 72851219 Jun 29 2019 KAFKA-3.1.1-1.3.1.1.p0.2-el7.parcel

-rw-r--r-- 1 cloudera-scm cloudera-scm 41 Jun 29 2019 KAFKA-3.1.1-1.3.1.1.p0.2-el7.parcel.sha

-rw-r----- 1 root root 2940 Jun 29 2019 KAFKA-3.1.1-1.3.1.1.p0.2-el7.parcel.torrent

-rw-r--r-- 1 cloudera-scm cloudera-scm 74062 Jun 27 2019 manifest.json

-rw-r--r-- 1 cloudera-scm cloudera-scm 191904064 Jun 29 2019 SPARK2-2.3.0.cloudera4-1.cdh5.13.3.p0.611179-el7.parcel

-rw-r--r-- 1 cloudera-scm cloudera-scm 41 Oct 5 2018 SPARK2-2.3.0.cloudera4-1.cdh5.13.3.p0.611179-el7.parcel.sha

-rw-r----- 1 cloudera-scm cloudera-scm 7521 Jun 29 2019 SPARK2-2.3.0.cloudera4-1.cdh5.13.3.p0.611179-el7.parcel.torrent

[kris@hadoop101 parcel-repo]$ pwd

/home/kris/apps/usr/webserver/cloudera/parcel-repo

[kris@hadoop101 csd]$ pwd

/home/kris/apps/usr/webserver/cloudera/csd

[kris@hadoop101 csd]$ ll

total 28

-rw-r--r-- 1 cloudera-scm cloudera-scm 5670 Feb 22 2018 KAFKA-1.2.0.jar

-rw-r--r-- 1 cloudera-scm cloudera-scm 19037 Oct 5 2018 SPARK2_ON_YARN-2.3.0.cloudera4.jar

Page operation

Update Parcel

Click Parcels on the CM home page, then click Check for New Parcels

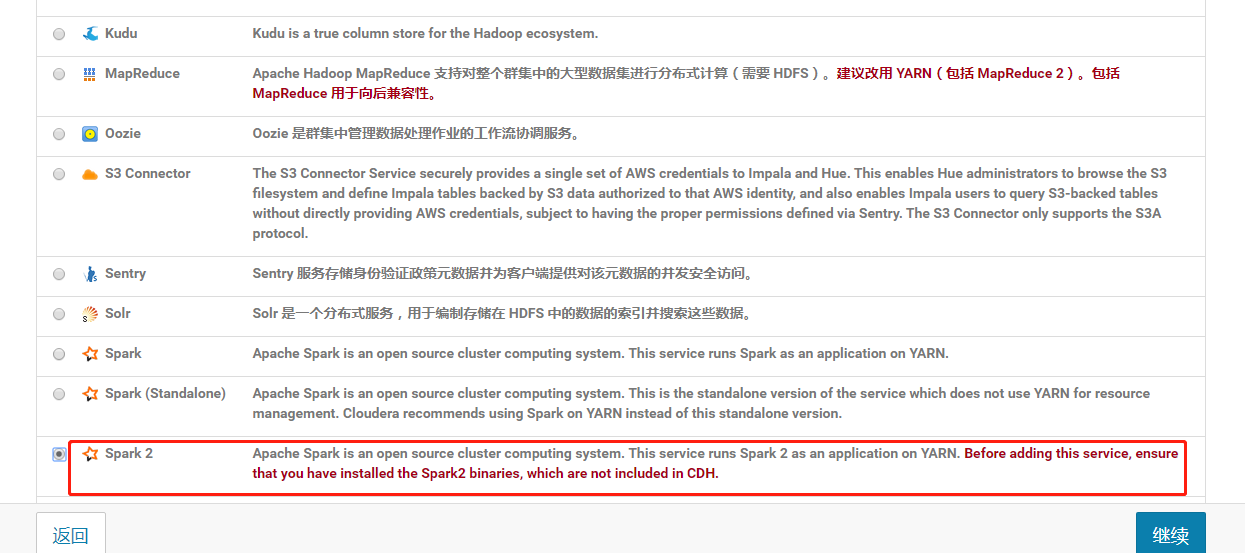

Click Distribute, then click Activate, return to the home page and click to add a service

If Spark2 does not appear in the list of services, restart the Cloudera Manager server:

[root@hadoop101 ~]# /opt/module/cm/cm-5.12.1/etc/init.d/cloudera-scm-server restart

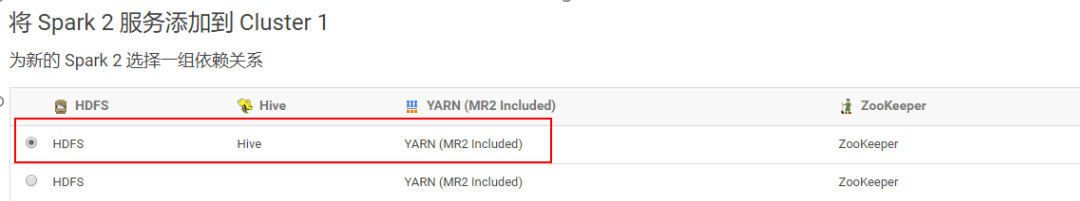

Choose a set of dependencies

Role assignment, deployment and launch

Note: I hit an error at this step: the client configuration (id=12) exited with 1, but the expected value is 0

1) Cause: a Spark installed through CM does not look up Java from the environment variables, so the Java path has to be added to the CM configuration file

2) Solution 1 (requires a CDH restart):

[root@hadoop101 java]# vim /opt/module/cm/cm-5.12.1/lib64/cmf/service/client/deploy-cc.sh

Add the following at the end of the file:

JAVA_HOME=/opt/module/jdk1.8.0_104

export JAVA_HOME=/opt/module/jdk1.8.0_104
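
If the edit has to be repeated on several hosts, it can be scripted instead of done in vim. This is a minimal sketch, assuming a single `export JAVA_HOME=...` line is enough for the script; `append_java_home` is a hypothetical helper that appends the line only when it is not already present.

```shell
#!/usr/bin/env bash
# Sketch: append an export of JAVA_HOME to a script, but only once.
# append_java_home is a hypothetical helper name.
append_java_home() {
  local script="$1" jdk="$2"
  if grep -q '^export JAVA_HOME=' "$script"; then
    echo "JAVA_HOME already set in $script"
  else
    printf '\nexport JAVA_HOME=%s\n' "$jdk" >> "$script"
    echo "JAVA_HOME appended to $script"
  fi
}

# Usage with the paths from this post (keep a backup of the original file):
#   f=/opt/module/cm/cm-5.12.1/lib64/cmf/service/client/deploy-cc.sh
#   cp -p "$f" "$f.bak"
#   append_java_home "$f" /opt/module/jdk1.8.0_104
```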

3) Solution 2 (no CDH restart required):

Look at /opt/module/cm/cm-5.12.1/lib64/cmf/service/common/cloudera-config.sh

to see how it finds the Java 8 home directory. CDH does not use the system's default JAVA_HOME environment variable; it follows the bigtop conventions instead, so the JDK is expected under /usr/java/default. Our JDK is already installed in /opt/module/jdk1.8.0_104, so create a symbolic link to it on each host:

[root@hadoop101 ~]# mkdir /usr/java

[root@hadoop101 ~]# ln -s /opt/module/jdk1.8.0_104/ /usr/java/default

[root@hadoop102 ~]# mkdir /usr/java

[root@hadoop102 ~]# ln -s /opt/module/jdk1.8.0_104/ /usr/java/default

[root@hadoop103 ~]# mkdir /usr/java

[root@hadoop103 ~]# ln -s /opt/module/jdk1.8.0_104/ /usr/java/default
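
The same link can be created with a small function that is safe to run more than once. This is a sketch only: `link_default_jdk` is a hypothetical helper, and the SSH loop in the comments assumes passwordless root SSH between the hosts, which this post does not state.

```shell
#!/usr/bin/env bash
# Sketch: create <java_root>/default as a symlink to an installed JDK.
# link_default_jdk is a hypothetical helper name.
link_default_jdk() {
  local jdk_dir="$1" java_root="${2:-/usr/java}"
  [ -d "$jdk_dir" ] || { echo "JDK not found: $jdk_dir" >&2; return 1; }
  mkdir -p "$java_root"
  # -f and -n replace an existing link instead of creating one inside its target
  ln -sfn "$jdk_dir" "$java_root/default"
  echo "$java_root/default -> $(readlink "$java_root/default")"
}

# Usage on one host (as root):
#   link_default_jdk /opt/module/jdk1.8.0_104
# Across the three hosts, assuming passwordless root SSH:
#   for h in hadoop101 hadoop102 hadoop103; do
#     ssh "root@$h" 'mkdir -p /usr/java && ln -sfn /opt/module/jdk1.8.0_104 /usr/java/default'
#   done
```

Using `mkdir -p` and `ln -sfn` means a second run does not fail when the directory or the link already exists.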

4) Solution 3 (requires a CDH restart):

Open the configuration of each of the three machines (hadoop101, hadoop102, hadoop103) and set the Java home directory there

Check the commands on the command line (type spa and press Tab to list the completions)

[hdfs@hadoop101 ~]$ spa

spark2-shell spark2-submit spark-shell spark-submit spax
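
The same check can be scripted, which helps when verifying every host. The sketch below reports which of the four launchers are on the current user's PATH; `check_spark_cmds` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: report which Spark launchers are on the PATH of the current user.
# check_spark_cmds is a hypothetical helper name.
check_spark_cmds() {
  local cmd
  for cmd in spark-shell spark-submit spark2-shell spark2-submit; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd: $(command -v "$cmd")"
    else
      echo "$cmd: not found"
    fi
  done
}

check_spark_cmds
# To confirm the versions themselves:
#   spark-submit --version
#   spark2-submit --version
```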