We have installed dlib 19.17 and face_recognition on Windows 10. For the detailed steps, see:

https://blog.csdn.net/weixin_41943311/article/details/91866987

https://blog.csdn.net/weixin_41943311/article/details/98482615

We then used dlib 19.17 + face_recognition to sort our family photos, at a speed of 4-6 seconds per photo. For details, see:

Windows 10+dlib19.17+face_recognition: classifying family photos with face recognition, 4-6 s per photo (1) single-threaded

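We were not satisfied with this speed and wanted to make it faster (in other words, shorten the run time of the whole job).

Our first idea was multithreading, so we rewrote the program (number_threads sets the number of concurrent threads). The code is as follows: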

```python
# -*- coding: UTF-8 -*-
import os
import shutil
import threading
from datetime import datetime

import face_recognition


# Face-check thread: turn each file name into an integer and let thread `num`
# handle only the files whose integer leaves remainder `num`; if a file
# contains a known face, copy it to the target directory
def face_check_and_copy(num):
    # Walk all .jpg files under the directory
    for path, _dirs, filelist in os.walk("f:/images"):
        for filename in filelist:
            if filename.endswith('jpg'):
                # Sum the character codes of the file name (without extension) into one integer
                only_name = os.path.splitext(filename)[0]
                int_filename = 0
                for every_char in only_name:
                    int_filename += ord(every_char)
                # No lock needed: each thread only writes its own slot in the counter lists
                if int_filename % number_threads == num:
                    image_path = os.path.join(path, filename)
                    # Load the image
                    unknown_image = face_recognition.load_image_file(image_path)
                    count_checked[num] += 1
                    # Find the locations of all faces in the image
                    face_locations = face_recognition.face_locations(unknown_image)
                    # face_locations = face_recognition.face_locations(unknown_image, number_of_times_to_upsample=0, model="cnn")
                    # Compute the face encodings at those locations
                    face_encodings = face_recognition.face_encodings(unknown_image, face_locations)
                    # Compare every face encoding with the known faces
                    for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
                        # The lower the tolerance, the stricter the comparison
                        matches = face_recognition.compare_faces(known_face_encodings, face_encoding, tolerance=0.4)
                        # Get the matched name (not used further) and copy the file to the target directory
                        name = "Unknown"
                        if True in matches:
                            first_match_index = matches.index(True)
                            name = known_face_names[first_match_index]
                            # Copy once and stop, so a picture with several known faces is copied only once
                            try:
                                shutil.copy(image_path, "f:/images_family")
                                count_copied[num] += 1
                            except shutil.Error:
                                pass
                            break


# Main program
number_threads = 4
count_checked = [0] * number_threads
count_copied = [0] * number_threads

# Load the known faces from images and compute their encodings
steve_image = face_recognition.load_image_file("f:/images/steve.jpg")
steve_face_encoding = face_recognition.face_encodings(steve_image)[0]
lucy_image = face_recognition.load_image_file("f:/images/lucy.jpg")
lucy_face_encoding = face_recognition.face_encodings(lucy_image)[0]

known_face_encodings = [steve_face_encoding, lucy_face_encoding]
known_face_names = ["Steve", "Lucy"]

# Start time
t1 = datetime.now()

threadpool = [threading.Thread(target=face_check_and_copy, args=(i,)) for i in range(number_threads)]
for th in threadpool:
    th.start()
for th in threadpool:
    th.join()

count_check = sum(count_checked)
count_copy = sum(count_copied)

# End time
t2 = datetime.now()

# Show the total elapsed time
print('%d pictures checked, and %d pictures copied with known faces.' % (count_check, count_copy))
print('time spend: %d seconds, %d microseconds.' % ((t2 - t1).seconds, (t2 - t1).microseconds))
for i in range(number_threads):
    print('%d pictures checked in the No.%d thread.' % (count_checked[i], i))
```

The result came as something of a surprise:

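Here we used 4 threads, which checked 12, 8, 13 and 17 files respectively. Running them concurrently did not improve throughput at all: the total run time was almost the same as with a single thread, even slightly longer. We tried other thread counts, but whatever value we gave number_threads, the program took about the same time, neither faster nor slower.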
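The uneven shares (12, 8, 13 and 17 files) come from the character-sum rule: on a small photo set, the remainders of such sums are not spread evenly. Below is a minimal sketch of a hypothetical alternative, assuming every worker sees the same directory listing: sort the paths and give the i-th path to worker i % n, so shard sizes differ by at most one. `char_sum_shard` and `round_robin_shards` are illustrative names, not part of face_recognition.

```python
import os


def char_sum_shard(filename, count):
    """The article's rule: sum of character codes of the name without extension, modulo count."""
    stem = os.path.splitext(filename)[0]
    return sum(ord(c) for c in stem) % count


def round_robin_shards(paths, count):
    """Hypothetical alternative: sort the paths, then give the i-th one to shard i % count."""
    ordered = sorted(paths)
    return [ordered[i::count] for i in range(count)]


if __name__ == "__main__":
    names = ["IMG_%04d.jpg" % i for i in range(50)]
    by_char_sum = [0] * 4
    for name in names:
        by_char_sum[char_sum_shard(name, 4)] += 1
    print("character-sum shard sizes:", by_char_sum)
    print("round-robin shard sizes:  ", [len(s) for s in round_robin_shards(names, 4)])
```

Equal file counts still do not guarantee equal run times, since photos differ in resolution and number of faces; a shared work queue that hands out one file at a time balances by actual work instead.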

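While the program was running, we watched CPU utilization in Windows 10: it stayed in the 16-33% range the whole time. Clearly, Python multithreading did not noticeably improve concurrent performance here. Why?

It turns out the culprit is the GIL.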

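GIL stands for Global Interpreter Lock. In CPython, the mainstream implementation of Python, the GIL is a genuine global lock: a thread must hold it to execute any Python code, and it is released around blocking I/O. For pure computation without I/O, the interpreter periodically forces the running thread to give up the lock so that other threads get a chance to run. In Python 2 this happened every 100 bytecode instructions (adjustable with sys.setcheckinterval); since Python 3.2 the switch is time-based, by default every 5 ms (adjustable with sys.setswitchinterval), and sys.setcheckinterval was removed in Python 3.9. So although CPython's threading module wraps native OS threads, within one CPython process only the thread holding the GIL runs Python code at any given moment; all other threads wait for the GIL to be released.

In other words, for CPU-bound work in Python, multiple threads perform no better than a single thread, unless the heavy computation happens in C code that releases the GIL while it runs. Judging by our measurements, the face_recognition/dlib calls used here do not run in parallel across threads.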
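A minimal, self-contained experiment (not from the original article) that reproduces the effect on a standard CPython build with the GIL: a pure-Python countdown is run four times in a row, then in four threads. On such a build, expect the threaded run to take about as long as the sequential one, or slightly longer. The experimental free-threaded builds of CPython 3.13 (PEP 703) can behave differently. `count_down` and the loop size are arbitrary choices for illustration.

```python
import sys
import threading
import time


def count_down(n):
    # Pure-Python CPU-bound loop: it holds the GIL except at forced switch points
    while n > 0:
        n -= 1


def run_sequential(n, parts):
    """Run count_down `parts` times in a row; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(parts):
        count_down(n)
    return time.perf_counter() - start


def run_threaded(n, parts):
    """Run count_down in `parts` threads at once; return elapsed seconds."""
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(parts)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print("GIL switch interval: %s s" % sys.getswitchinterval())
    n, parts = 2_000_000, 4
    print("sequential: %.2f s" % run_sequential(n, parts))
    print("4 threads : %.2f s" % run_threaded(n, parts))
```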

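So is there another way to speed up the processing of image files? Are the 6 cores and 12 threads of our Intel Core i7-8750H just for show?

Of course not!

The answer is to run several Python programs at the same time. We made a small change so that two programs run separately, each on its own share of the files (because the work is divided up in advance based on the file names, the two programs need no communication or synchronization). The code is as follows:

Program 1: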

```python
# -*- coding: UTF-8 -*-
import os
import shutil
from datetime import datetime

import face_recognition

# Load the known faces from images and compute their encodings
steve_image = face_recognition.load_image_file("f:/images/steve.jpg")
steve_face_encoding = face_recognition.face_encodings(steve_image)[0]
lucy_image = face_recognition.load_image_file("f:/images/lucy.jpg")
lucy_face_encoding = face_recognition.face_encodings(lucy_image)[0]

known_face_encodings = [steve_face_encoding, lucy_face_encoding]
known_face_names = ["Steve", "Lucy"]

# Start time
t1 = datetime.now()

count_checked, count_copied = 0, 0

# Walk all .jpg files under the directory
for path, _dirs, filelist in os.walk("f:/images"):
    for filename in filelist:
        if filename.endswith('jpg'):
            image_path = os.path.join(path, filename)
            # Sum the character codes of the file name (without extension) into one integer
            only_name = os.path.splitext(filename)[0]
            int_filename = 0
            for every_char in only_name:
                int_filename += ord(every_char)
            # Program 1 handles the files whose sum is even
            if int_filename % 2 == 0:
                # Load the image
                unknown_image = face_recognition.load_image_file(image_path)
                count_checked += 1
                # Find the locations of all faces in the image
                face_locations = face_recognition.face_locations(unknown_image)
                # face_locations = face_recognition.face_locations(unknown_image, number_of_times_to_upsample=0, model="cnn")
                # Compute the face encodings at those locations
                face_encodings = face_recognition.face_encodings(unknown_image, face_locations)
                # Compare every face encoding with the known faces
                for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
                    # Get the comparison result
                    matches = face_recognition.compare_faces(known_face_encodings, face_encoding, tolerance=0.4)
                    # Get the matched name (not used further) and copy the file to the target directory
                    name = "Unknown"
                    if True in matches:
                        first_match_index = matches.index(True)
                        name = known_face_names[first_match_index]
                        # Copy once and stop, so a picture with several known faces is copied only once
                        try:
                            shutil.copy(image_path, "f:/images_family")
                            count_copied += 1
                        except shutil.Error:
                            pass
                        break

# End time
t2 = datetime.now()

# Show the total elapsed time
print('No.0 process: %d pictures checked, and %d pictures copied with known faces.' % (count_checked, count_copied))
print('No.0 process: time spend: %d seconds, %d microseconds.' % ((t2 - t1).seconds, (t2 - t1).microseconds))
```

Program 2:

```python
# -*- coding: UTF-8 -*-
import os
import shutil
from datetime import datetime

import face_recognition

# Load the known faces from images and compute their encodings
steve_image = face_recognition.load_image_file("f:/images/steve.jpg")
steve_face_encoding = face_recognition.face_encodings(steve_image)[0]
lucy_image = face_recognition.load_image_file("f:/images/lucy.jpg")
lucy_face_encoding = face_recognition.face_encodings(lucy_image)[0]

known_face_encodings = [steve_face_encoding, lucy_face_encoding]
known_face_names = ["Steve", "Lucy"]

# Start time
t1 = datetime.now()

count_checked, count_copied = 0, 0

# Walk all .jpg files under the directory
for path, _dirs, filelist in os.walk("f:/images"):
    for filename in filelist:
        if filename.endswith('jpg'):
            image_path = os.path.join(path, filename)
            # Sum the character codes of the file name (without extension) into one integer
            only_name = os.path.splitext(filename)[0]
            int_filename = 0
            for every_char in only_name:
                int_filename += ord(every_char)
            # Program 2 handles the files whose sum is odd
            if int_filename % 2 == 1:
                # Load the image
                unknown_image = face_recognition.load_image_file(image_path)
                count_checked += 1
                # Find the locations of all faces in the image
                face_locations = face_recognition.face_locations(unknown_image)
                # face_locations = face_recognition.face_locations(unknown_image, number_of_times_to_upsample=0, model="cnn")
                # Compute the face encodings at those locations
                face_encodings = face_recognition.face_encodings(unknown_image, face_locations)
                # Compare every face encoding with the known faces
                for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
                    # Get the comparison result
                    matches = face_recognition.compare_faces(known_face_encodings, face_encoding, tolerance=0.4)
                    # Get the matched name (not used further) and copy the file to the target directory
                    name = "Unknown"
                    if True in matches:
                        first_match_index = matches.index(True)
                        name = known_face_names[first_match_index]
                        # Copy once and stop, so a picture with several known faces is copied only once
                        try:
                            shutil.copy(image_path, "f:/images_family")
                            count_copied += 1
                        except shutil.Error:
                            pass
                        break

# End time
t2 = datetime.now()

# Show the total elapsed time
print('No.1 process: %d pictures checked, and %d pictures copied with known faces.' % (count_checked, count_copied))
print('No.1 process: time spend: %d seconds, %d microseconds.' % ((t2 - t1).seconds, (t2 - t1).microseconds))
```

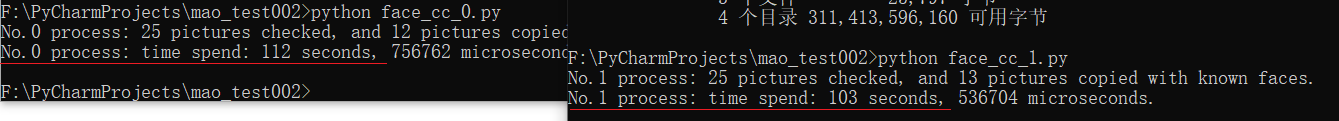

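We ran the two programs at the same time, and sure enough, the total run time was cut in half!

Meanwhile, CPU utilization rose from the 16-33% range to 32-68%, with momentary peaks of 80%.

With 6 cores and 12 hardware threads, we could in theory run 6 Python programs at once to keep the CPU as busy as possible.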
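Maintaining six near-identical copies of the program quickly gets tedious. One way around it, sketched under the assumption that Program 1's per-image face check is pasted in where the placeholder comment says: a single script that takes its shard number on the command line, plus a `--launch` mode that starts one copy of itself per shard with `subprocess` and waits for all of them. The option names (`--root`, `--count`, `--index`, `--launch`) and the helper functions are hypothetical.

```python
import argparse
import os
import subprocess
import sys


def shard_index(filename, count):
    """Same rule as Programs 1 and 2: sum of character codes of the name, modulo count."""
    stem = os.path.splitext(filename)[0]
    return sum(ord(c) for c in stem) % count


def my_share(root, index, count):
    """Yield the .jpg files under root that belong to shard `index` out of `count`."""
    for path, _dirs, filelist in os.walk(root):
        for filename in filelist:
            if filename.endswith("jpg") and shard_index(filename, count) == index:
                yield os.path.join(path, filename)


def launch(count, root):
    """Start one copy of this script per shard and wait until all of them exit."""
    procs = [
        subprocess.Popen([sys.executable, os.path.abspath(__file__),
                          "--root", root, "--count", str(count), "--index", str(i)])
        for i in range(count)
    ]
    return [p.wait() for p in procs]


def main():
    parser = argparse.ArgumentParser(description="Process one shard of the photo folder.")
    parser.add_argument("--root", default="f:/images")
    parser.add_argument("--count", type=int, default=6, help="total number of shards")
    parser.add_argument("--index", type=int, help="shard handled by this process")
    parser.add_argument("--launch", action="store_true", help="start all shards as separate processes")
    args = parser.parse_args()

    if args.launch:
        print("exit codes:", launch(args.count, args.root))
    elif args.index is not None:
        for image_path in my_share(args.root, args.index, args.count):
            # Hypothetical placeholder: run Program 1's face check and copy on image_path here
            print(image_path)
    else:
        parser.print_help()


if __name__ == "__main__":
    main()
```

Saved under some name of your choice, `python <script> --launch --count 6` would start six worker processes, each checking roughly a sixth of the photos.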

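Python's standard library provides the multiprocessing module precisely to get around the fact that multithreading cannot speed up such work; we will cover parallel speed-up with multiple processes in the next article.

Alternatively, you can move the compute-intensive parts to C/C++: write them as a Python extension that creates native threads in C and releases the GIL while computing. This also makes full use of the CPU's computing resources.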
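The effect of releasing the GIL can be observed without writing an extension, because some C code in the standard library already does it. The `hashlib` documentation states that the GIL is released while hashing data larger than 2047 bytes, so a thread pool hashing large buffers can keep several cores busy, unlike a pure-Python loop. This is an illustration with a standard-library module, not with dlib; the buffer sizes are arbitrary.

```python
import hashlib
import os
import time
from concurrent.futures import ThreadPoolExecutor


def digest(block):
    # hashlib releases the GIL while hashing buffers larger than 2047 bytes
    return hashlib.sha256(block).hexdigest()


def timed_digests(blocks, workers):
    """Hash all blocks with a pool of `workers` threads; return (digests, seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        digests = list(pool.map(digest, blocks))
    return digests, time.perf_counter() - start


if __name__ == "__main__":
    blocks = [os.urandom(16 * 1024 * 1024) for _ in range(4)]  # 4 buffers of 16 MiB each
    one, t_one = timed_digests(blocks, 1)
    four, t_four = timed_digests(blocks, 4)
    assert one == four  # same digests regardless of thread count
    print("1 thread: %.2f s, 4 threads: %.2f s" % (t_one, t_four))
```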

References:

http://blog.sina.com.cn/s/blog_64ecfc2f0102uzzf.html

(End)

Next: Windows 10+dlib19.17+face_recognition: classifying family photos with face recognition, 2.43 s per photo, more than 40% faster (3) multiprocessing + robustness