英文版论文原文:https://arxiv.org/pdf/1512.02325.pdf

Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

Shaoqing Ren,Kaiming He,Dumitru Erhan,Ross Girshick&Jian Sun`

Abstract

最新的物体检测网络依靠区域提议算法来假设物体的位置。 SPPnet [1]和Fast R-CNN [2]之类的进步减少了这些检测网络的运行时间,暴露了区域提议计算的瓶颈。在这项工作中,我们介绍了一个区域提议网络(RPN),该区域提议网络与检测网络共享全图像卷积特征,从而实现几乎免费的区域提议。 RPN是一个完全卷积的网络,可以同时预测每个位置的对象边界和对象得分。对RPN进行了端到端的培训,以生成高质量的区域建议,Fast R-CNN将其用于检测。通过共享RPN和Fast R-CNN的卷积特征,我们将RPN和Fast R-CNN进一步合并为一个网络-使用最近流行的带有“注意力”机制的神经网络术语,RPN组件告诉统一网络要看的地方。对于非常深的VGG-16模型[3],我们的检测系统在GPU上具有5fps(包括所有步骤)的帧频,同时在PASCAL VOC 2007、2012和2007上达到了最新的对象检测精度。 MS COCO数据集,每个图像仅包含300个建议。在ILSVRC和COCO 2015竞赛中,Faster R-CNN和RPN是多个赛道中第一名获胜作品的基础。代码已公开提供。

State-of-the-art object detection networks depend on region proposal algorithms to hypothesize object locations. Advances like SPPnet [1] and Fast R-CNN [2] have reduced the running time of these detection networks, exposing region proposal computation as a bottleneck. In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals. An RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. The RPN is trained end-to-end to generate high-quality region proposals, which are used by Fast R-CNN for detection. We further merge RPN and Fast R-CNN into a single network by sharing their convolutional features—using the recently popular terminology of neural networks with “attention” mechanisms, the RPN component tells the unified network where to look. For the very deep VGG-16 model [3], our detection system has a frame rate of 5fps (including all steps) on a GPU, while achieving state-of-the-art object detection accuracy on PASCAL VOC 2007, 2012, and MS COCO datasets with only 300 proposals per image. In ILSVRC and COCO 2015 competitions, Faster R-CNN and RPN are the foundations of the 1st-place winning entries in several tracks. Code has been made publicly available.

1 INTRODUCTION

区域提议方法(例如[4])和基于区域的卷积神经网络(R-CNN)[5]的成功推动了目标检测的最新进展。 尽管基于区域的CNN在计算上很昂贵,如最初在[5]中开发的,但由于在提案[1],[2]之间共享卷积,因此其成本已大大降低。 最新的化身,快速R-CNN [2],在忽略区域提案花费的时间时,使用非常深的网络[3]实现了接近实时的速度。 现在,建议是最先进的检测系统中的测试时间计算瓶颈。

Recent advances in object detection are driven by the success of region proposal methods (e.g., [4]) and region-based convolutional neural networks (R-CNNs) [5]. Although region-based CNNs were computationally expensive as originally developed in [5], their cost has been drastically reduced thanks to sharing convolutions across proposals [1], [2]. The latest incarnation, Fast R-CNN [2], achieves near real-time rates using very deep networks [3], when ignoring the time spent on region proposals. Now, proposals are the test-time computational bottleneck in state-of-the-art detection systems.

区域提议方法通常依赖于便宜的功能和经济的推理方案。 选择性搜索[4]是最流行的方法之一,它根据工程化的底层特征贪婪地合并超像素。 然而,与高效的检测网络相比[2],选择性搜索的速度要慢一个数量级,在CPU实现中每张图像2秒。 EdgeBoxes [6]当前提供建议质量和速度之间的最佳权衡,每张图像0.2秒。 尽管如此,区域提议步骤仍然消耗与检测网络一样多的运行时间。

Region proposal methods typically rely on inexpensive features and economical inference schemes. Selective Search [4], one of the most popular methods, greedily merges superpixels based on engineered low-level features. Yet when compared to efficient detection networks [2], Selective Search is an order of magnitude slower, at 2 seconds per image in a CPU implementation. EdgeBoxes [6] currently provides the best tradeoff between proposal quality and speed, at 0.2 seconds per image. Nevertheless, the region proposal step still consumes as much running time as the detection network.

可能会注意到,基于区域的快速CNN充分利用了GPU的优势,而研究中使用的区域提议方法则是在CPU上实现的,因此这种运行时比较是不公平的。 加速提案计算的一种明显方法是为GPU重新实现。 这可能是一种有效的工程解决方案,但是重新实现会忽略下游检测网络,因此会错过共享计算的重要机会。

One may note that fast region-based CNNs take advantage of GPUs, while the region proposal methods used in research are implemented on the CPU, making such runtime comparisons inequitable. An obvious way to accelerate proposal computation is to reimplement it for the GPU. This may be an effective engineering solution, but re-implementation ignores the down-stream detection network and therefore misses important opportunities for sharing computation.

在本文中,我们证明了算法的变化(使用深度卷积神经网络来计算提案)会带来一种优雅而有效的解决方案,考虑到检测网络的计算,提案的计算几乎是免费的。 为此,我们介绍了与最新的对象检测网络[1],[2]共享卷积层的新颖的区域提议网络(RPN)。 通过在测试时共享卷积,计算建议的边际成本很小(例如,每张图片10毫秒)。

In this paper, we show that an algorithmic change—computing proposals with a deep convolutional neural network—leads to an elegant and effective solution where proposal computation is nearly cost-free given the detection network’s computation. To this end, we introduce novel Region Proposal Networks (RPNs) that share convolutional layers with state-of-the-art object detection networks [1], [2]. By sharing convolutions at test-time, the marginal cost for computing proposals is small (e.g., 10ms per image).

我们的观察结果是,基于区域的检测器(如Fast R-CNN)使用的卷积特征图也可以用于生成区域建议。 在这些卷积特征之上,我们通过添加一些其他卷积层来构建RPN,这些卷积层同时回归规则网格上每个位置的区域边界和客观性得分。 因此,RPN是一种全卷积网络(FCN)[7],可以专门针对生成检测建议的任务进行端到端训练。

Our observation is that the convolutional feature maps used by region-based detectors, like Fast R-CNN, can also be used for generating region proposals. On top of these convolutional features, we construct an RPN by adding a few additional convolutional layers that simultaneously regress region bounds and objectness scores at each location on a regular grid. The RPN is thus a kind of fully convolutional network (FCN) [7] and can be trained end-to-end specifically for the task for generating detection proposals.

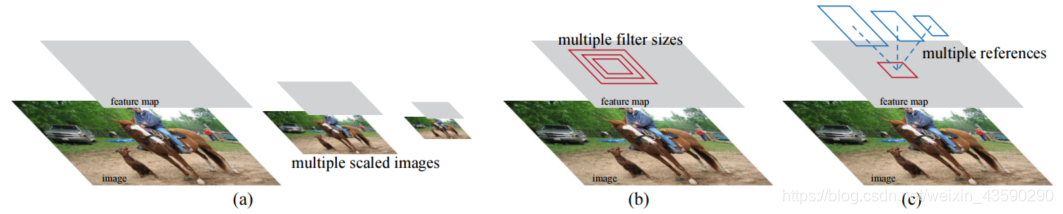

图1:解决多种规模和规模的不同方案。 (a)建立图像和特征图的金字塔,并在所有比例下运行分类器。 (b)具有多个比例/大小的滤镜金字塔在特征图上运行。 (c)我们在回归函数中使用参考箱的金字塔。

Figure 1: Different schemes for addressing multiple scales and sizes. (a) Pyramids of images and feature maps are built, and the classifier is run at all scales. (b) Pyramids of filters with multiple scales/sizes are run on the feature map. © We use pyramids of reference boxes in the regression functions.

RPN旨在以各种比例和纵横比有效预测区域提议。 与使用图像金字塔(图1,a)或滤镜金字塔(图1,b)的流行方法[8],[9],[1],[2]相比,我们介绍了新颖的“锚”盒 作为多种比例和纵横比的参考。 我们的方案可以看作是回归参考的金字塔(图1,c),它避免了枚举具有多个比例或纵横比的图像或过滤器。 当使用单比例尺图像进行训练和测试时,该模型表现良好,从而提高了运行速度。

RPNs are designed to efficiently predict region proposals with a wide range of scales and aspect ratios. In contrast to prevalent methods [8], [9], [1], [2] that use pyramids of images (Figure 1, a) or pyramids of filters (Figure 1, b), we introduce novel “anchor” boxes that serve as references at multiple scales and aspect ratios. Our scheme can be thought of as a pyramid of regression references (Figure 1, c), which avoids enumerating images or filters of multiple scales or aspect ratios. This model performs well when trained and tested using single-scale images and thus benefits running speed.

为了将RPN与Fast R-CNN [2]对象检测网络统一起来,我们提出了一种训练方案,该方案在对区域建议任务进行微调与对对象检测进行微调之间交替,同时保持建议不变。 该方案可以快速收敛,并生成具有卷积功能的统一网络,这两个任务之间共享

To unify RPNs with Fast R-CNN [2] object detection networks, we propose a training scheme that alternates between fine-tuning for the region proposal task and then fine-tuning for object detection, while keeping the proposals fixed. This scheme converges quickly and produces a unified network with convolutional features that are shared between both tasks

我们在PASCAL VOC检测基准[11]上全面评估了我们的方法,其中具有快速R-CNN的RPN产生的检测精度要优于具有快速R-CNN的选择性搜索的强基线。 同时,我们的方法在测试时几乎免除了“选择性搜索”的所有计算负担-投标的有效运行时间仅为10毫秒。 使用昂贵的非常深的模型[3],我们的检测方法在GPU上的帧速率仍然为5fps(包括所有步骤),因此在速度和准确性方面都是实用的对象检测系统。 我们还报告了MS COCO数据集的结果[12],并使用COCO数据研究了PASCAL VOC的改进。 代码已在https://github.com/shaoqingren/faster_rcnn(在MATLAB中)和https://github.com/rbgirshick/py-faster-rcnn(在Python中)中公开可用。

We comprehensively evaluate our method on the PASCAL VOC detection benchmarks [11] where RPNs with Fast R-CNNs produce detection accuracy better than the strong baseline of Selective Search with Fast R-CNNs. Meanwhile, our method waives nearly all computational burdens of Selective Search at test-time—the effective running time for proposals is just 10 milliseconds. Using the expensive very deep models of [3], our detection method still has a frame rate of 5fps (including all steps) on a GPU, and thus is a practical object detection system in terms of both speed and accuracy. We also report results on the MS COCO dataset [12] and investigate the improvements on PASCAL VOC using the COCO data. Code has been made publicly available at https://github.com/shaoqingren/faster_rcnn (in MATLAB) and https://github.com/rbgirshick/py-faster-rcnn (in Python).

该手稿的初步版本先前已发布[10]。 从那时起,RPN和Faster R-CNN的框架已被采用并推广到其他方法,例如3D对象检测[13],基于零件的检测[14],实例分割[15]和图像字幕[16]。。 我们的快速有效的物体检测系统也已建立在诸如Pinterests [17]的商业系统中,据报道用户参与度有所提高。

A preliminary version of this manuscript was published previously [10]. Since then, the frameworks of RPN and Faster R-CNN have been adopted and generalized to other methods, such as 3D object detection [13], part-based detection [14], instance segmentation [15], and image captioning [16]. Our fast and effective object detection system has also been built in com-mercial systems such as at Pinterests [17], with user engagement improvements reported.

在ILSVRC和COCO 2015竞赛中,Faster R-CNN和RPN是ImageNet检测,ImageNet本地化,COCO检测和COCO分割中几个第一名的基础[18]。 RPN完全学会了根据数据提议区域,因此可以轻松地从更深,更具表现力的功能(例如[18]中采用的101层残差网络)中受益。 在这些比赛中,其他一些领先的参赛者也使用了更快的R-CNN和RPN2。 这些结果表明,我们的方法不仅是一种实用的高性价比解决方案,而且还是提高物体检测精度的有效途径。

In ILSVRC and COCO 2015 competitions, Faster R-CNN and RPN are the basis of several 1st-place entries [18] in the tracks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation. RPNs completely learn to propose regions from data, and thus can easily benefit from deeper and more expressive features (such as the 101-layer residual nets adopted in [18]). Faster R-CNN and RPN are also used by several other leading entries in these competitions2. These results suggest that our method is not only a cost-efficient solution for practical usage, but also an effective way of improving object detection accuracy.

2 RELATED WORK

目标提议。目标提议方法方面有大量的文献。目标提议方法的综合调查和比较可以在[19],[20],[21]中找到。广泛使用的目标提议方法包括基于超像素分组(例如,选择性搜索[4],CPMC[22],MCG[23])和那些基于滑动窗口的方法(例如窗口中的目标[24],EdgeBoxes[6])。目标提议方法被采用为独立于检测器(例如,选择性搜索[4]目标检测器,R-CNN[5]和Fast R-CNN[2])的外部模块。

Object Proposals. There is a large literature on object proposal methods. Comprehensive surveys and comparisons of object proposal methods can be found in [19], [20], [21]. Widely used object proposal methods include those based on grouping super-pixels (e.g., Selective Search [4], CPMC [22], MCG [23]) and those based on sliding windows (e.g., objectness in windows [24], EdgeBoxes [6]). Object proposal methods were adopted as external modules independent of the detectors (e.g., Selective Search [4] object detectors, R-CNN [5], and Fast R-CNN [2]).

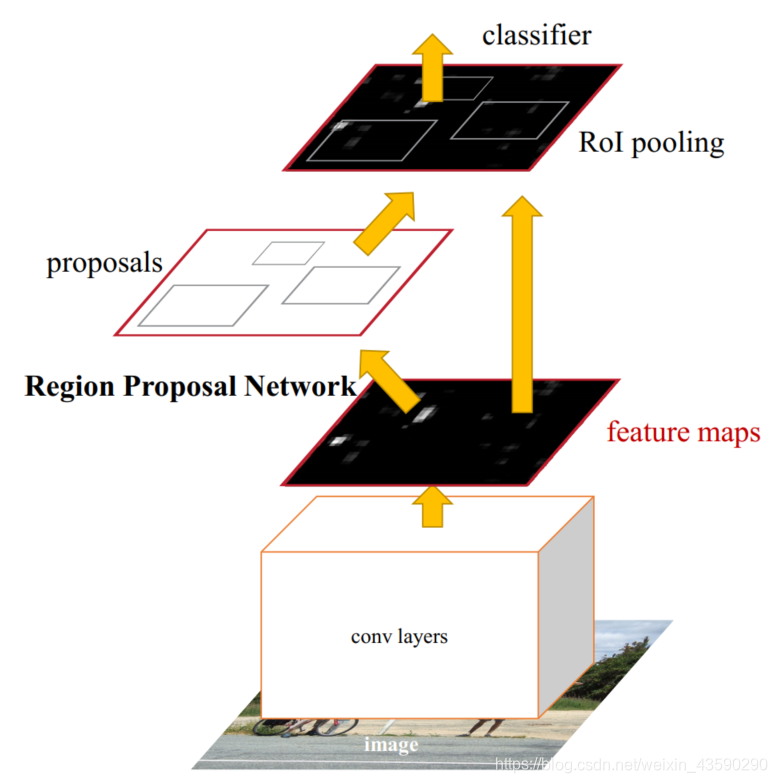

图2:更快的R-CNN是用于对象检测的单个统一网络。 RPN模块充当此统一网络的“注意”。

Figure 2: Faster R-CNN is a single, unified network for object detection. The RPN module serves as the ‘attention’ of this unified network.

用于目标检测的深度网络。R-CNN方法[5]端到端地对CNN进行训练,将提议区域分类为目标类别或背景。R-CNN主要作为分类器,并不能预测目标边界(除了通过边界框回归进行细化)。其准确度取决于区域提议模块的性能(参见[20]中的比较)。一些论文提出了使用深度网络来预测目标边界框的方法[25],[9],[26],[27]。在OverFeat方法[9]中,训练一个全连接层来预测假定单个目标定位任务的边界框坐标。然后将全连接层变成卷积层,用于检测多个类别的目标。MultiBox方法[26],[27]从网络中生成区域提议,网络最后的全连接层同时预测多个类别不相关的边界框,并推广到OverFeat的“单边界框”方式。这些类别不可知的边界框框被用作R-CNN的提议区域[5]。与我们的全卷积方案相比,MultiBox提议网络适用于单张裁剪图像或多张大型裁剪图像(例如224×224)。MultiBox在提议区域和检测网络之间不共享特征。稍后在我们的方法上下文中会讨论OverFeat和MultiBox。与我们的工作同时进行的,DeepMask方法[28]是为学习分割提议区域而开发的。

Deep Networks for Object Detection. The R-CNN method [5] trains CNNs end-to-end to classify the proposal regions into object categories or background. R-CNN mainly plays as a classifier, and it does not predict object bounds (except for refining by bounding box regression). Its accuracy depends on the performance of the region proposal module (see comparisons in [20]). Several papers have proposed ways of using deep networks for predicting object bounding boxes [25], [9], [26], [27]. In the OverFeat method [9], a fully-connected layer is trained to predict the box coordinates for the localization task that assumes a single object. The fully-connected layer is then turned into a convolutional layer for detecting multiple classspecific objects. The MultiBox methods [26], [27] generate region proposals from a network whose last fully-connected layer simultaneously predicts multiple class-agnostic boxes, generalizing the “single-box” fashion of OverFeat. These class-agnostic boxes are used as proposals for R-CNN [5]. The MultiBox proposal network is applied on a single image crop or multiple large image crops (e.g., 224×224), in contrast to our fully convolutional scheme. MultiBox does not share features between the proposal and detection networks. We discuss OverFeat and MultiBox in more depth later in context with our method. Concurrent with our work, the DeepMask method [28] is developed for learning segmentation proposals.

卷积[9],[1],[29],[7],[2]的共享计算已经越来越受到人们的关注,因为它可以有效而准确地进行视觉识别。OverFeat论文[9]计算图像金字塔的卷积特征用于分类,定位和检测。共享卷积特征映射的自适应大小池化(SPP)[1]被开发用于有效的基于区域的目标检测[1],[30]和语义分割[29]。Fast R-CNN[2]能够对共享卷积特征进行端到端的检测器训练,并显示出令人信服的准确性和速度。

Shared computation of convolutions [9], [1], [29], [7], [2] has been attracting increasing attention for efficient, yet accurate, visual recognition. The OverFeat paper [9] computes convolutional features from an image pyramid for classification, localization, and detection. Adaptively-sized pooling (SPP) [1] on shared convolutional feature maps is developed for efficient region-based object detection [1], [30] and semantic segmentation [29]. Fast R-CNN [2] enables end-to-end detector training on shared convolutional features and shows compelling accuracy and speed.

3. FASTER R-CNN

我们的目标检测系统,称为Faster R-CNN,由两个模块组成。第一个模块是提议区域的深度全卷积网络,第二个模块是使用提议区域的Fast R-CNN检测器[2]。整个系统是一个单个的,统一的目标检测网络(图2)。使用最近流行的“注意力”[31]机制的神经网络术语,RPN模块告诉Fast R-CNN模块在哪里寻找。在第3.1节中,我们介绍了区域提议网络的设计和属性。在第3.2节中,我们开发了用于训练具有共享特征模块的算法。

Our object detection system, called Faster R-CNN, is composed of two modules. The first module is a deep fully convolutional network that proposes regions, and the second module is the Fast R-CNN detector [2] that uses the proposed regions. The entire system is a single, unified network for object detection (Figure 2). Using the recently popular terminology of neural networks with attention [31] mechanisms, the RPN module tells the Fast R-CNN module where to look. In Section 3.1 we introduce the designs and properties of the network for region proposal. In Section 3.2 we develop algorithms for training both modules with features shared.

3.1 Region Proposal Networks

区域提议网络(RPN)以任意大小的图像作为输入,输出一组矩形的目标提议,每个提议都有一个目标得分。我们用全卷积网络[7]对这个过程进行建模,我们将在本节进行描述。因为我们的最终目标是与Fast R-CNN目标检测网络[2]共享计算,所以我们假设两个网络共享一组共同的卷积层。在我们的实验中,我们研究了具有5个共享卷积层的Zeiler和Fergus模型[32](ZF)和具有13个共享卷积层的Simonyan和Zisserman模型[3](VGG-16)。

A Region Proposal Network (RPN) takes an image (of any size) as input and outputs a set of rectangular object proposals, each with an objectness score.3 We model this process with a fully convolutional network [7], which we describe in this section. Because our ultimate goal is to share computation with a Fast R-CNN object detection network [2], we assume that both nets share a common set of convolutional layers. In our experiments, we investigate the Zeiler and Fergus model 32, which has 5 shareable convolutional layers and the Simonyan and Zisserman model 3, which has 13 shareable convolutional layers.

为了生成区域提议,我们在最后的共享卷积层输出的卷积特征映射上滑动一个小网络。这个小网络将输入卷积特征映射的n×n空间窗口作为输入。每个滑动窗口映射到一个低维特征(ZF为256维,VGG为512维,后面是ReLU[33])。这个特征被输入到两个子全连接层——一个边界框回归层(reg)和一个边界框分类层(cls)。在本文中,我们使用n=3,注意输入图像上的有效感受野是大的(ZF和VGG分别为171和228个像素)。图3(左)显示了这个小型网络的一个位置。请注意,因为小网络以滑动窗口方式运行,所有空间位置共享全连接层。这种架构通过一个n×n卷积层,后面是两个子1×1卷积层(分别用于reg和cls)自然地实现。

To generate region proposals, we slide a small network over the convolutional feature map output by the last shared convolutional layer. This small network takes as input an n×n spatial window of the input convolutional feature map. Each sliding window is mapped to a lower-dimensional feature (256-d for ZF and 512-d for VGG, with ReLU [33] following). This feature is fed into two sibling fully-connected layers——a box-regression layer (reg) and a box-classification layer (cls). We use n=3 in this paper, noting that the effective receptive field on the input image is large (171 and 228 pixels for ZF and VGG, respectively). This mini-network is illustrated at a single position in Figure 3 (left). Note that because the mini-network operates in a sliding-window fashion, the fully-connected layers are shared across all spatial locations. This architecture is naturally implemented with an n×n convolutional layer followed by two sibling 1 × 1 convolutional layers (for reg and cls, respectively).

3.1.1 Anchors

在每个滑动窗口位置,我们同时预测多个区域提议,其中每个位置可能提议的最大数目表示为k。因此,reg层具有4k个输出,编码k个边界框的坐标,cls层输出2k个分数,估计每个提议是目标或不是目标的概率。相对于我们称之为锚点的k个参考边界框,k个提议是参数化的。锚点位于所讨论的滑动窗口的中心,并与一个尺度和长宽比相关(图3左)。默认情况下,我们使用3个尺度和3个长宽比,在每个滑动位置产生k=9个锚点。对于大小为W×H(通常约为2400)的卷积特征映射,总共有WHk个锚点。

At each sliding-window location, we simultaneously predict multiple region proposals, where the number of maximum possible proposals for each location is denoted as k. So the reg layer has 4k outputs encoding the coordinates of k boxes, and the cls layer outputs 2k scores that estimate probability of object or not object for each proposal. The k proposals are parameterized relative to k reference boxes, which we call anchors. An anchor is centered at the sliding window in question, and is associated with a scale and aspect ratio (Figure 3, left). By default we use 3 scales and 3 aspect ratios, yielding k=9 anchors at each sliding position. For a convolutional feature map of a size W × H (typically ∼2,400), there are WHk anchors in total.