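This post is a companion to my earlier article "Getting Started with Python Web Scraping: Scraping Gushiwen (古诗文) Page Data with Regular Expressions". This time the same regex-based approach is used to scrape the text posts on Qiushibaike (糗事百科).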

The full code is as follows:

```python
#!/usr/bin/env python
# author: Simple-Sir
# time: 2019/8/1 14:50
# Scrape text posts from Qiushibaike (糗事百科)
import re

import requests

URLHead = 'https://www.qiushibaike.com'


def getHtml(url):
    """Download a page and return its HTML as text."""
    headers = {
        # Browser-like User-Agent so the site serves the normal page
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'
    }
    response = requests.get(url, headers=headers)
    return response.text


def getInfos(url):
    """Scrape one list page and return a list of dicts, one per post."""
    html = getHtml(url)
    authors = re.findall(r'<h2>\n(.*?)\n</h2>', html, re.DOTALL)  # author names
    # author gender (class name prefix) and level (digits)
    author_sex_lvl = re.findall(r'<div class="articleGender (.*?)Icon">(\d*?)</div>', html, re.DOTALL)
    author_sex = []  # gender
    author_lvl = []  # level
    for sex, lvl in author_sex_lvl:
        author_sex.append(sex)
        author_lvl.append(lvl)
    # hrefs of the detail pages; [1:] drops the first match, as in the original
    contentHerf = re.findall(r'<a href="(/article.*?)".*?class="contentHerf"', html, re.DOTALL)[1:]
    cont = []  # post bodies
    for contentUrl in contentHerf:
        contentHtml = getHtml(URLHead + contentUrl)  # HTML of the detail page
        contents = re.findall(r'<div class="content">(.*?)</div>', contentHtml, re.DOTALL)
        body = contents[0] if contents else ''  # avoid IndexError when nothing matches
        content_br = re.sub(r'<br/>', '', body)  # strip <br/> tags
        content = content_br.replace('\xa0', '')  # strip non-breaking spaces (&nbsp;)
        cont.append(content)
    infos = []
    for author, sex, lvl, text in zip(authors, author_sex, author_lvl, cont):
        info = {
            '作者': author,  # author
            '性别': sex,     # gender
            '等级': lvl,     # level
            '内容': text     # content
        }
        infos.append(info)
    return infos


def main():
    # Prompt: "How many pages (starting from the first) do you want to fetch?"
    page = int(input('您想获取前几页的数据?\n'))
    for i in range(1, page + 1):
        url = 'https://www.qiushibaike.com/text/page/{}'.format(i)
        print('正在爬取第{}页数据:'.format(i))  # "Scraping page {}:"
        for t in getInfos(url):
            print(t)
        print('第{}页数据已爬取完成。'.format(i))  # "Page {} done."
    print('所有数据已爬取完成!')  # "All pages done!"


if __name__ == '__main__':
    main()
```
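To try the parsing logic without hitting the network, the regular expressions from `getInfos()` can be exercised against inline HTML. The sample markup below is a hand-written assumption that mimics the structure the patterns expect, not a copy of the real site, and the helper names `parse_list_page`, `parse_detail_page` and `build_infos` are hypothetical. Unlike the original, this sketch replaces `<br/>` with a newline and the non-breaking space with a regular space instead of deleting them, so multi-line posts stay readable.

```python
import re

# Hypothetical list-page fragment (assumed markup, NOT taken from the real site)
SAMPLE_LIST_HTML = """
<div class="author clearfix">
<h2>
Alice
</h2>
<div class="articleGender womenIcon">23</div>
</div>
<div class="author clearfix">
<h2>
Bob
</h2>
<div class="articleGender manIcon">7</div>
</div>
"""

# Hypothetical detail-page fragment containing a <br/> tag and a non-breaking space
SAMPLE_DETAIL_HTML = '<div class="content">Line one.<br/>Line\xa0two.</div>'


def parse_list_page(html):
    """Return (author, gender, level) tuples using the same patterns as getInfos()."""
    authors = re.findall(r'<h2>\n(.*?)\n</h2>', html, re.DOTALL)
    sex_lvl = re.findall(r'<div class="articleGender (.*?)Icon">(\d*?)</div>', html, re.DOTALL)
    return [(author, sex, lvl) for author, (sex, lvl) in zip(authors, sex_lvl)]


def parse_detail_page(html):
    """Return the post body, turning <br/> into newlines and \\xa0 into spaces."""
    contents = re.findall(r'<div class="content">(.*?)</div>', html, re.DOTALL)
    if not contents:
        return ''
    text = re.sub(r'<br/>', '\n', contents[0])
    return text.replace('\xa0', ' ')


def build_infos(list_html, detail_htmls):
    """Pair list-page metadata with detail-page bodies; zip stops at the shorter input."""
    infos = []
    for (author, sex, lvl), detail in zip(parse_list_page(list_html), detail_htmls):
        infos.append({'作者': author, '性别': sex, '等级': lvl, '内容': parse_detail_page(detail)})
    return infos


if __name__ == '__main__':
    for info in build_infos(SAMPLE_LIST_HTML, [SAMPLE_DETAIL_HTML, SAMPLE_DETAIL_HTML]):
        print(info)
```

Because `zip` truncates to the shortest sequence, a missing gender `<div>` or an extra detail link would silently shift or drop posts; matching each post's block as a unit (or using an HTML parser) avoids that misalignment.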

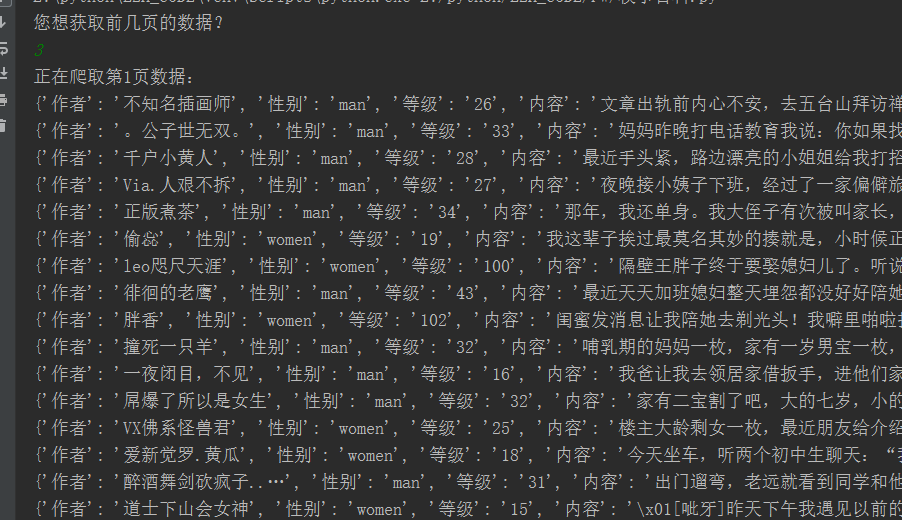

执行结果: