@A Short Paper a Day: arXiv:1705.09422v7

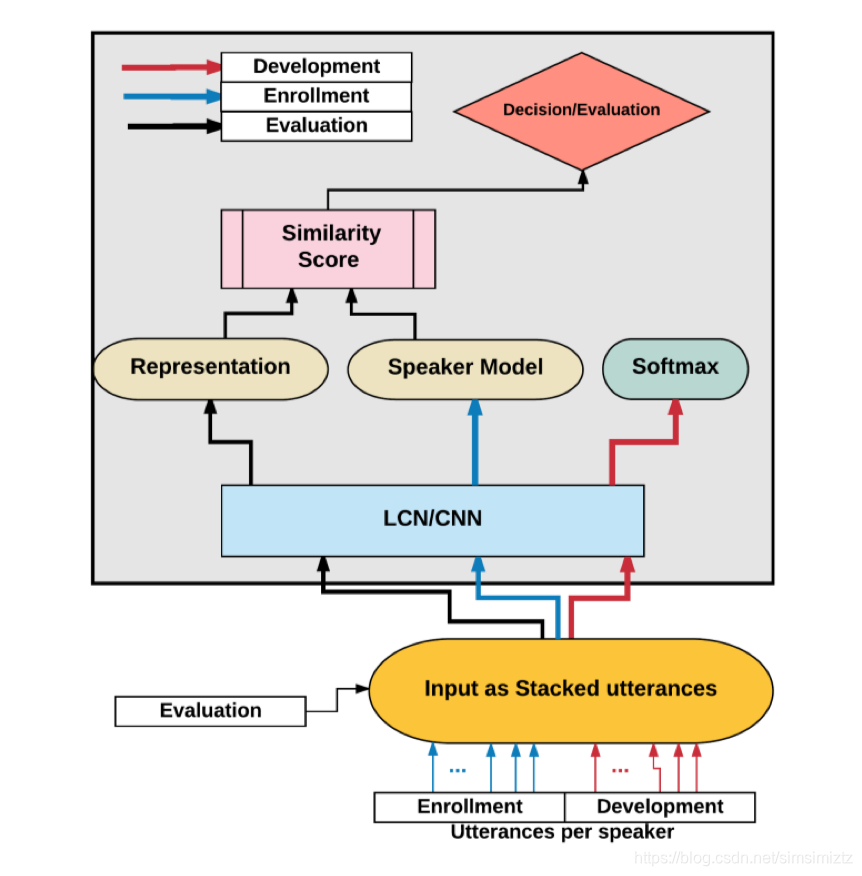

3D convolution

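In this paper, a new method based on a 3D convolutional neural network (3D-CNN) architecture is proposed for speaker verification in the text-independent setting. One of the main challenges is creating the speaker model. Most previously reported methods create the speaker model by averaging the features extracted from a speaker's utterances, which is known as the d-vector system. In our paper, we propose adaptive feature learning that uses a 3D-CNN to create the speaker model directly: in both the development and enrollment stages, the same number of utterances per speaker is fed to the network to represent the speaker's utterances and build the speaker model. This captures speaker-related information and, at the same time, yields a more robust system that copes with within-speaker variation. We show that the proposed method significantly outperforms the traditional d-vector verification system. In addition, the proposed system, a one-shot speaker-modeling system built on 3D-CNNs, can serve as an alternative to the traditional d-vector system.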
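For contrast with the direct speaker model, the averaging step of a d-vector style baseline can be sketched as follows. This is only an illustration: the 128-dimensional embeddings, the random data, the function names `d_vector_model` and `cosine_score`, and the use of cosine similarity for scoring are all assumptions, not details taken from the paper.

```python
import numpy as np


def d_vector_model(utterance_embeddings):
    """Average per-utterance embeddings into one unit-length speaker model."""
    model = np.mean(utterance_embeddings, axis=0)
    return model / np.linalg.norm(model)


def cosine_score(model, test_embedding):
    """Cosine similarity between a unit-length model and a test embedding."""
    test = test_embedding / np.linalg.norm(test_embedding)
    return float(np.dot(model, test))


rng = np.random.default_rng(0)
# Hypothetical data: 20 enrollment embeddings scattered around one speaker centre.
centre = rng.normal(size=128)
enroll = centre + 0.1 * rng.normal(size=(20, 128))

model = d_vector_model(enroll)
same = cosine_score(model, centre + 0.1 * rng.normal(size=128))  # same speaker
other = cosine_score(model, rng.normal(size=128))                # unrelated vector
print(same > other)
```

The averaging happens after the network has processed each utterance separately, whereas the 3D-CNN approach described above sees all of a speaker's utterances at once.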

Core idea

Stack the input feature maps into a stacked feature map, process the stacked feature map with 3D convolutions, and finally make the prediction through fully connected layers.

The architecture is shown below:

Data input

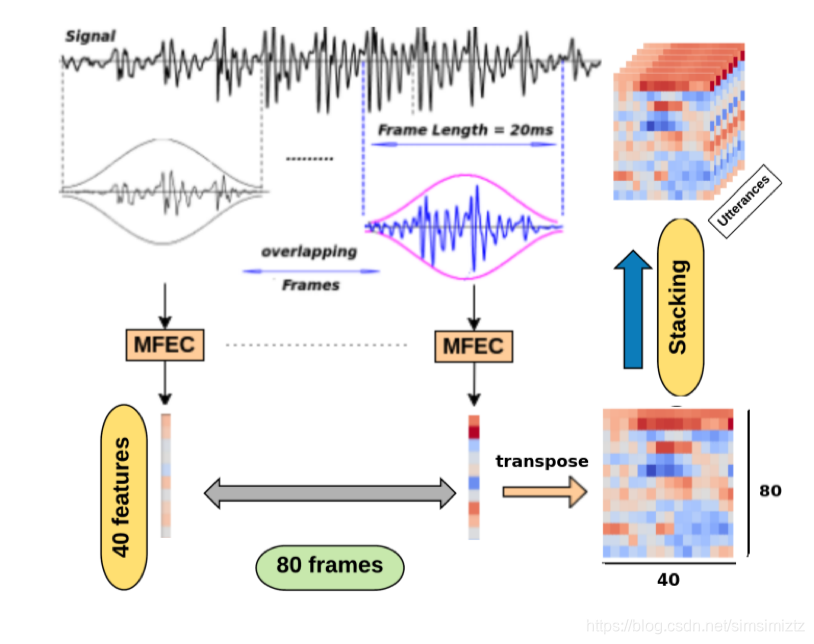

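The features fed to the neural network are no longer MFCCs but the feature parameters obtained before the DCT step of the MFCC computation, i.e. the log filter-bank energies, called MFEC below. (Does relying on the neural network to extract local information across the spectrum really work well?)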
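One way to look at the question above is to compare how a change in a single frequency band shows up in each representation. The sketch below is an illustration under assumptions: random numbers stand in for real log filter-bank energies, and `dct2_matrix` is a hand-written orthonormal DCT-II, not code from the paper or its repository.

```python
import numpy as np


def dct2_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * m + 1) * k / (2 * n))
    mat[0, :] /= np.sqrt(2.0)
    return mat


rng = np.random.default_rng(0)
# Hypothetical stand-in for log filter-bank energies: 80 frames x 40 bands.
log_energies = rng.normal(size=(80, 40))
dct = dct2_matrix(40)

mfec = log_energies            # MFEC: stop before the DCT
mfcc = log_energies @ dct.T    # MFCC-style: apply the DCT across bands

# Perturb one band in the first frame and count the coefficients that change.
bumped = log_energies.copy()
bumped[0, 10] += 1.0
changed_mfec = np.count_nonzero(~np.isclose(bumped[0], mfec[0]))
changed_mfcc = np.count_nonzero(~np.isclose(bumped[0] @ dct.T, mfcc[0]))
print(changed_mfec, changed_mfcc)
```

A local change stays local in the MFEC features but is spread over the coefficients after the DCT, which is one plausible reason for feeding pre-DCT features to small convolution kernels.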

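Overlapping 20 ms windows with a 10 ms stride are used to generate the spectral features. From a 0.8-second sound sample, 80 temporal feature sets can be obtained (each consisting of 40 MFEC features), which together form the input speech feature map. Each input feature map has dimensions ζ×80×40, formed from 80 input frames and their corresponding spectral features, where ζ is the number of utterances used to model the speaker during the development and enrollment stages. By default, we set ζ = 20.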
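The framing arithmetic and the ζ×80×40 stacking can be sketched as below. This is a minimal illustration with assumptions: `num_frames` and `build_cube` are hypothetical helper names, random numbers stand in for real MFEC features, and the `pad` option reflects a guess about how 80 frames are reached (counting only complete 20 ms windows in 0.8 s gives 79, while one frame per 10 ms step gives 80).

```python
import numpy as np


def num_frames(duration_ms, window_ms=20, stride_ms=10, pad=True):
    """Frame count for a clip; pad=True also counts a final partial window."""
    if pad:
        return -(-duration_ms // stride_ms)  # ceiling division
    return 1 + (duration_ms - window_ms) // stride_ms


def build_cube(utterances):
    """Stack zeta feature maps of shape (80, 40) into a (zeta, 80, 40) cube."""
    return np.stack(utterances, axis=0)


rng = np.random.default_rng(0)
zeta = 20
frames = num_frames(800)
# Placeholder features: one (frames x 40) map per utterance of the same speaker.
utterances = [rng.normal(size=(frames, 40)) for _ in range(zeta)]

cube = build_cube(utterances)
# Add batch and channel axes so the cube matches a 5-D conv3d input.
network_input = cube[np.newaxis, ..., np.newaxis]
print(cube.shape, network_input.shape)
```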

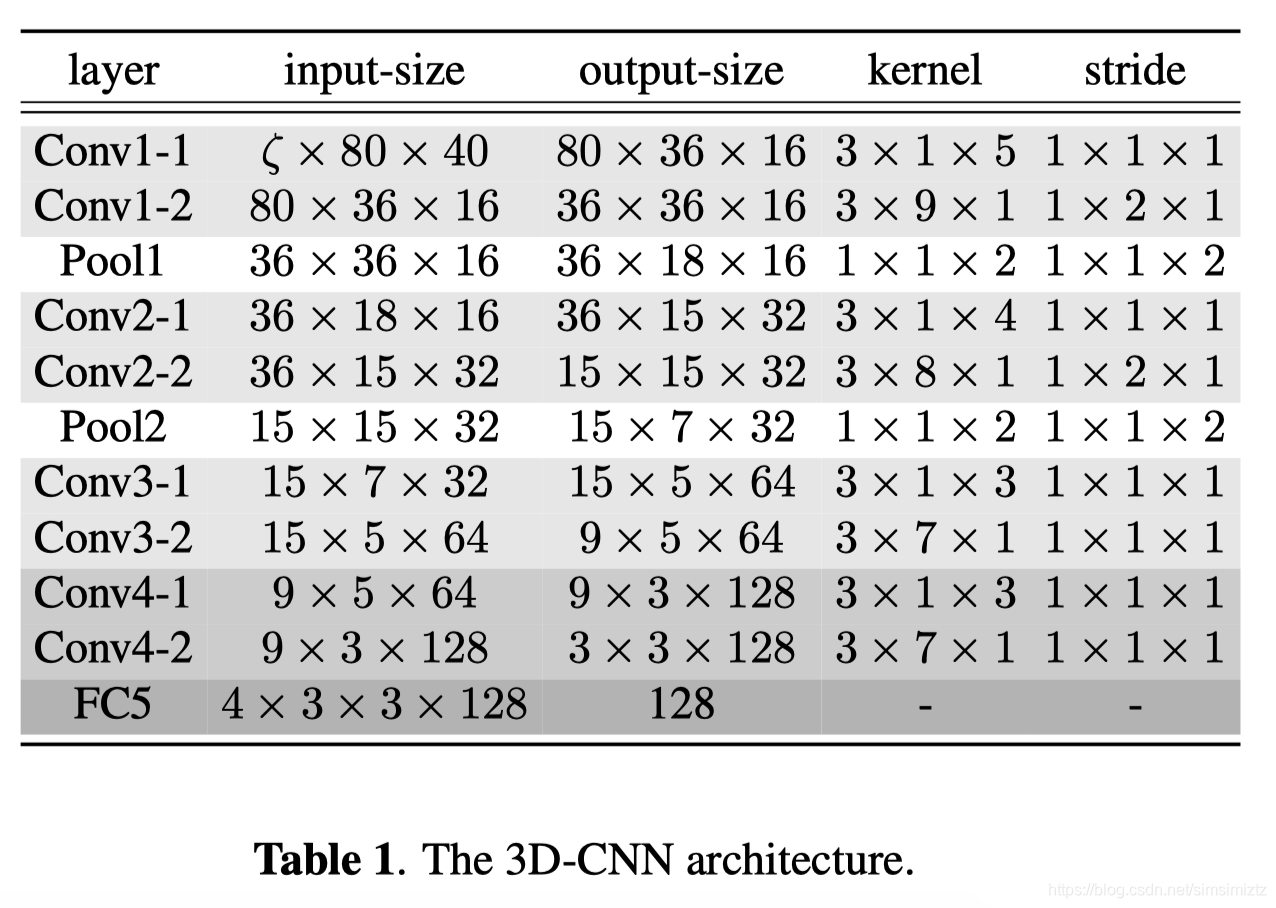

Network architecture

Reference code

(Full code: https://github.com/astorfi/3D-convolutional-speaker-recognition)

    import tensorflow as tf  # TensorFlow 1.x (the code relies on tf.contrib)

    slim = tf.contrib.slim

    # PReLU is a helper defined in the referenced repository; it is not shown here.


    def speech_cnn(inputs, num_classes=1000,
                   is_training=True,
                   dropout_keep_prob=0.5,
                   spatial_squeeze=True,
                   scope='cnn'):
        """3D-CNN for speaker verification.

        Note: All the fully_connected layers have been transformed to conv3d layers.
        Padding is not set in this function, so it is presumably supplied by an
        enclosing arg_scope (an assumption); the squeeze at the end requires the
        three spatial dimensions to be 1.

        Args:
          inputs: a 5-D tensor of size [batch_size, depth, height, width, channels].
          num_classes: number of predicted classes.
          is_training: whether or not the model is being trained.
          dropout_keep_prob: the probability that activations are kept in the dropout
            layers during training.
          spatial_squeeze: whether or not should squeeze the spatial dimensions of the
            outputs. Useful to remove unnecessary dimensions for classification.
          scope: Optional scope for the variables.

        Returns:
          the logits tensor and the end_points dict.
        """
        end_points = {}
        with tf.variable_scope(scope, 'net', [inputs]) as sc:
            end_points_collection = sc.name + '_end_points'

            # Collect outputs for conv3d and max_pool2d.
            with tf.contrib.framework.arg_scope([tf.contrib.layers.conv3d, tf.contrib.layers.max_pool2d],
                                                outputs_collections=end_points_collection):

                ##### Convolution Section #####
                inputs = tf.to_float(inputs)

                ############ Conv-1 ###############
                net = slim.conv3d(inputs, 16, [3, 1, 5], stride=[1, 1, 1], scope='conv11')
                net = PReLU(net, 'conv11_activation')
                net = slim.conv3d(net, 16, [3, 9, 1], stride=[1, 2, 1], scope='conv12')
                net = PReLU(net, 'conv12_activation')
                net = tf.nn.max_pool3d(net, strides=[1, 1, 1, 2, 1], ksize=[1, 1, 1, 2, 1], padding='VALID', name='pool1')

                ############ Conv-2 ###############
                net = slim.conv3d(net, 32, [3, 1, 4], stride=[1, 1, 1], scope='conv21')
                net = PReLU(net, 'conv21_activation')
                net = slim.conv3d(net, 32, [3, 8, 1], stride=[1, 2, 1], scope='conv22')
                net = PReLU(net, 'conv22_activation')
                net = tf.nn.max_pool3d(net, strides=[1, 1, 1, 2, 1], ksize=[1, 1, 1, 2, 1], padding='VALID', name='pool2')

                ############ Conv-3 ###############
                net = slim.conv3d(net, 64, [3, 1, 3], stride=[1, 1, 1], scope='conv31')
                net = PReLU(net, 'conv31_activation')
                net = slim.conv3d(net, 64, [3, 7, 1], stride=[1, 1, 1], scope='conv32')
                net = PReLU(net, 'conv32_activation')
                # net = slim.max_pool2d(net, [1, 1], stride=[4, 1], scope='pool1')

                ############ Conv-4 ###############
                net = slim.conv3d(net, 128, [3, 1, 3], stride=[1, 1, 1], scope='conv41')
                net = PReLU(net, 'conv41_activation')
                net = slim.conv3d(net, 128, [3, 7, 1], stride=[1, 1, 1], scope='conv42')
                net = PReLU(net, 'conv42_activation')
                # net = slim.max_pool2d(net, [1, 1], stride=[4, 1], scope='pool1')

                ############ Conv-5 ###############
                net = slim.conv3d(net, 128, [4, 3, 3], stride=[1, 1, 1], normalizer_fn=None, scope='conv51')
                net = PReLU(net, 'conv51_activation')
                # net = slim.conv3d(net, 256, [1, 1], stride=[1, 1], scope='conv52')
                # net = PReLU(net, 'conv52_activation')

                # Last layer which is the logits for classes
                logits = tf.contrib.layers.conv3d(net, num_classes, [1, 1, 1], activation_fn=None, scope='fc')

                # Return the collections as a dictionary
                end_points = slim.utils.convert_collection_to_dict(end_points_collection)

                # Squeeze spatially to eliminate extra dimensions. (embedding layer)
                if spatial_squeeze:
                    logits = tf.squeeze(logits, [1, 2, 3], name='fc/squeezed')
                    end_points[sc.name + '/fc'] = logits

                return logits, end_points
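To check that the kernel sizes and strides in `speech_cnn` fit a ζ×80×40 input with ζ = 20, the shapes can be traced layer by layer in plain Python. The sketch assumes every convolution uses VALID (no) padding; the listing does not show the padding setting, and `slim.conv3d` uses SAME padding unless an enclosing `arg_scope` overrides it, so this is an assumption rather than something the code above states. The names `valid_out`, `LAYERS` and `trace_shapes` are hypothetical helpers.

```python
def valid_out(size, kernel, stride=1):
    """Output length along one axis for a VALID (unpadded) conv or pool."""
    return (size - kernel) // stride + 1


# (name, kernel, stride) per layer; axes ordered as (utterances, frames, features).
LAYERS = [
    ("conv11", (3, 1, 5), (1, 1, 1)),
    ("conv12", (3, 9, 1), (1, 2, 1)),
    ("pool1", (1, 1, 2), (1, 1, 2)),
    ("conv21", (3, 1, 4), (1, 1, 1)),
    ("conv22", (3, 8, 1), (1, 2, 1)),
    ("pool2", (1, 1, 2), (1, 1, 2)),
    ("conv31", (3, 1, 3), (1, 1, 1)),
    ("conv32", (3, 7, 1), (1, 1, 1)),
    ("conv41", (3, 1, 3), (1, 1, 1)),
    ("conv42", (3, 7, 1), (1, 1, 1)),
    ("conv51", (4, 3, 3), (1, 1, 1)),
]


def trace_shapes(input_shape=(20, 80, 40)):
    """Return a list of (layer name, spatial shape) pairs, starting at the input."""
    shape = tuple(input_shape)
    trace = [("input", shape)]
    for name, kernel, stride in LAYERS:
        shape = tuple(valid_out(s, k, st) for s, k, st in zip(shape, kernel, stride))
        trace.append((name, shape))
    return trace


for name, shape in trace_shapes():
    print(f"{name:>7}: {shape}")
```

Under this assumption the three spatial axes collapse to size 1 after `conv51`, which is what the final `tf.squeeze(logits, [1, 2, 3])` requires.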