这是linemod的第二篇,这一篇把训练从online learning 变成了 使用3D model, 并且对于检测结果用 3种方法: color、Pose、Depth来确保不会有false positive。感觉有种不忘初心的感觉(笑

基于linemod,是前一篇的改良

initial version of LINEMOD has some disadvantages. First, templates are learnede online, which is difficule to control and results in spotty coverage og viewpoints. Second, pose output by LINEMOD is only approximately corret, since a template covers a range of views around its viewpoint. Finally, LINEMOD still suffers from the presence of false positives. Our main insight is that a 3D model of the object can be exploited to emedy these deficiencies.

We use 3D model to obtain a fine estimate of the object pose, starting from the one provided by the templates. Together with a simple test based on color, this allows us to remove false positives, by checking if the object under the recovered pose aligns well with the depth map

- Exploiting a 3D Model to Create the Templates

we build a set of templates automatically from CAD 3D models, which has several advantages. First, online learning requires physical interaction of a human operator or a robot with their environment, and therefore takes time and effort(?). Furthermore, it usually takes an educated user and careful manual interaction to collect a well sampled training set of the object that covers the whole pose range. Online methods follow a greedy approach and are not guaranteed to lead to optimal results in terms of trade-off between efficiency and robustness.

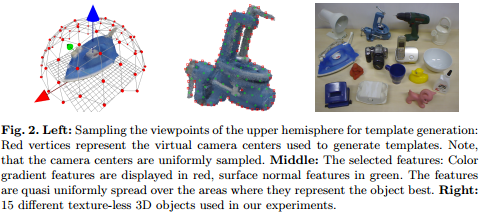

- Viewpoint Sampling: we solve this problem by recursively dividing an icosahedron. We substitute each triangle into four almost equilateral triangles, and iterate several times.the vertices of the resulting polyhedron give us then the two out-of-plane rotation angles for the sampled pose with respect to the coordinate center. Two adjacent vertices are approximately 15 degrees apart. Created templates for different in-plane rotations. Generate templates at different scales by using different sized polyhedrons, using a step size of 10 cm.

- Reducing Feature Redundancy: We consider only a subset of the feature(color gradients, surface normals) to speed up the detection with no loss of accuracy.

- Color Gradient Features: We keep only the main color gradient features located on the contour of the object silhouette. For each sampled pose, compute the object silhouette by projecting 3D model under this pose. Compute all the color gradients that lie on the silhouette contour and sort them with respect to their magnitudes (silhouette edge is not guaranteed to be only one pixel broad) ———— 1. choose the gradient with the strongest magnitude, 2. take the first feature that appears in list, 3. remove the features whose image locations are close (according to some distance threshold) to the picked feature location from the list , 4. iterate. 5. If have finished iterating through the list of features before a desired number of features is selected, decrease the distance threshold by one and start the process again. The threshold is initially set to the ratio of the area covered by the silhouette and the number of features that are supposed to be selected

- Surface Normal Features: we chose the surface normal features to be selected on the interior of the object silhouette. We first remark that normals surrounded by normals of similar orientation are recovered more reliably. create a mask for each of the 8 possible values of discretized orientations from the depth map generated for the object under the considered pose. For each of the 8 masks, we then weight each normal with the distance to the mask boundary. we first directly reject the normals with a weight smaller than a specific distance - we use 2 in practice and normalize the weights by the size of the mask they belong to. And select the feature like we have done in the color gradient feature. threshold is set to the square root of the ratio of the area covered by the rendered object and the number of features we want to keep

- PostProcssing Detections

For each template detected by LINEMOD-starting with the one with the highest similarity score, we first check the consistency of this detection by comparing the object color with the content of the color image at its location. And then we estimate the 3D pose of the corresponding object. We reject all detections whose 3D pose estimates have not converged properly. Taking the first n detections that passed all checks, we do a final pose estimate for the best of them.

- Coarse Outlier Removal by Color: Each detected template provides a coarse estimate of the object pose that is good enough for an efficient check based on color information. We consider the pixels that lie on the object projection according to the pose estimate, and count how many of them have the expected color. We decide a pixel has the expected color if the difference between its hue and the object hue (modulo \(2\pi\)) is smaller than a threshold. If the percentage of pixels that have their expected color is not large enough (at least 70% in our implementation), we reject the detection as false positive. we do not take into account the pixels that are too close to the object projection boundaries. In case the value component is below a threshold \(t_v\), we set the black hue value to blue. If the value component is larger than \(t_v\) and the saturation component below a threshold \(t_s\), we set the white hue component to yellow.

- Fast Pose Estimation and Outlier Rejection based on Depth: we use ICP and 3D model to refine the pose estimate provided by the template detection. The initial translation is estimated from the depth values covered by the initial model projection. we first subsample the 3D points from the depth map that lie on the object projection or close to it. For robustness, at each iteration \(i\), we compute the alignment using only the inlier 3D points. The inlier points are the ones that fall within a distance to the 3D model smaller than an adaptive threshold \(t_i\). \(t_0\) is initialized to the size of the object, \(t_{i+1}\) is set to three times the average distance of the inliers to the 3D model at time \(i\). After convergence, if the average distance of the inliers to the 3D model is too large, we reject the detection as false positive.

- The final ICP is followed by a final depth test. For that, we consider the pixels that lie on the object projection according to the final pose estimate, and count how many of them have the expected depth. We decide a pixel has the expected depth if the difference between its depth value and the projected object depth is smaller than a threshold. If the percentage of pixels that have their expected depth is not large enough, we reject.