How Varnish works

Varnish processes requests in subroutines: each `sub vcl_recv`, `sub vcl_deliver`, etc. in the configuration file is one processing stage.

Request processing roughly goes through the following states:

(1) Receive: the entry point of request handling. Based on the VCL rules, it decides whether the request goes to Pass, Pipe, or Lookup (a local cache lookup).

(2) Lookup: the object is looked up in the hash table; if it is found, processing enters Hit, otherwise it enters Miss.

(3) Pass: the request is sent to the backend, i.e. it enters the Fetch state without using the cache.

(4) Fetch: the object is fetched from the backend: Varnish sends the request, receives the data and stores it locally.

(5) Deliver: the object is sent to the client, which completes the request.

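lookup: vcl_hash computes the object's hash key (by default the URL plus the Host header, or the server IP when there is no Host header), and Varnish looks that key up in the cache.

If the object is in the cache it is a hit; if it is not, it is a miss.

pass: the request goes straight to fetch (the backend is always queried). Use pass for dynamic content that is not suitable for caching.

pipe: similar to pass, the client talks directly to the backend server; used for large responses that are not suitable for caching.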
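To make the state transitions above concrete, here is a toy Python model of the request flow. It is only a sketch: the rules in `recv()` (pipe for `/download/`, pass for non-GET requests or `.php` pages) are hypothetical examples, not Varnish's built-in defaults, and the hash key is assumed to be URL plus Host, mirroring the default vcl_hash.

```python
# Toy model of the Varnish request flow: recv -> lookup/pass/pipe -> hit/miss -> fetch -> deliver.
# The recv() rules are hypothetical examples, not Varnish's built-in defaults.
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class ToyVarnish:
    # hash key (url, host) -> cached body
    cache: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def recv(self, method: str, url: str) -> str:
        """Pick the next state, like the return value of vcl_recv."""
        if url.startswith("/download/"):
            return "pipe"    # hypothetical rule: big files go straight to the backend
        if method != "GET" or url.endswith(".php"):
            return "pass"    # hypothetical rule: dynamic content is never cached
        return "lookup"

    def handle(self, method: str, host: str, url: str,
               backend: Callable[[str], str]) -> Tuple[str, str]:
        """Return (states visited, response body)."""
        action = self.recv(method, url)
        if action == "pipe":
            return ("pipe", backend(url))
        if action == "pass":
            return ("pass->fetch->deliver", backend(url))
        key = (url, host)                    # vcl_hash: URL + Host header
        if key in self.cache:
            return ("lookup->hit->deliver", self.cache[key])
        body = backend(url)                  # miss: fetch from the backend...
        self.cache[key] = body               # ...and store the object locally
        return ("lookup->miss->fetch->deliver", body)
```

The first GET for a URL goes through miss and fetch; a repeat of the same URL and Host is a hit, while a different Host produces a different hash key and therefore a separate cache entry.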

VCL: Varnish Configuration Language (Varnish's own configuration language)

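kvm: the kernel-level virtualization layer (the hypervisor)

qemu: emulates the virtual peripherals, e.g. I/O devices

libvirt: the virtualization API used to manage virtual machines and devices.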

Differences between Varnish and Squid:

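Varnish serves content faster: it keeps cached data in memory (relying on the operating system's virtual memory), so cached objects are read directly from memory, whereas Squid reads them from disk. (The flip side: if the varnish process goes down, the whole cache is lost, every request then goes to the backend servers, and under high concurrency this puts heavy pressure on them.)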

Varnish supports more concurrent connections because it releases TCP connections faster than Squid, so it can sustain more TCP connections under high concurrency.

Through its management port, Varnish can purge (ban) parts of the cache in bulk using regular expressions, which Squid cannot do.

Squid runs as a single process and uses only one CPU core, whereas Varnish runs a multi-threaded worker (child) process, so it can use all cores to handle requests.

Overall, Varnish generally outperforms Squid.

Typical architecture: client -> CDN (cache/proxy nodes) -> HA + LVS (high-availability scheduling nodes)

Once the RHEL 6.5 base VM has been installed, do not boot it again; create the lab VMs from it as snapshots (qcow2 backing files).

Varnish service:

1: On the physical host, mount the RHEL 6.5 ISO image

[kiosk@foundation36 ~]$ mount /home/kiosk/Desktop/rhel-server-6.5-x86_64-dvd.iso /var/www/html/rhel6.5/

2: Create three VMs (vm1, vm2, vm3) from the 6.5 image

qemu-img create -f qcow2 -b rhel6.5.qcow2 vm1

qemu-img create -f qcow2 -b rhel6.5.qcow2 vm2

qemu-img create -f qcow2 -b rhel6.5.qcow2 vm3

Open virt-manager, choose the fourth installation option (import an existing disk image), and install each VM from the image created above

Then change vm2's hostname to server2 and its IP to 172.25.36.2

Then change vm3's hostname to server3 and its IP to 172.25.36.3

3: Install the varnish packages on server1

varnish-3.0.5-1.el6.x86_64.rpm

varnish-libs-3.0.5-1.el6.x86_64.rpm

[root@server1 home]# ls

varnish-3.0.5-1.el6.x86_64.rpm varnish-libs-3.0.5-1.el6.x86_64.rpm

[root@server1 home]# yum install varnish-*

4: List varnish's configuration files: rpm -qc varnish

5:[root@server1 ~]# sysctl -a | grep file

fs.file-nr = 416 0 98864

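fs.file-max = 98864 (the initial maximum number of open files is 98864; /etc/sysconfig/varnish shows that this is not enough, so server1's memory has to be raised to 2048 MB)

6: free -m shows the remaining free memory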

7: Shut server1 down and increase its memory to 2048 MB.

After the memory has been raised to 2048 MB, the maximum number of open files becomes 188464:

[root@server1 ~]# sysctl -a | grep file

fs.file-nr = 416 0 188464

fs.file-max = 188464
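The file-max numbers above can be sanity-checked with a little arithmetic. This is a sketch under two assumptions: that the RHEL 6 (2.6.32-era) kernel sizes fs.file-max at roughly one handle per 10 KiB of RAM (files_init() reserves about 10% of memory at roughly 1 KiB per file), and that 131072, the nofile value used in limits.conf below, is what varnish needs. Under that rule 98864 handles correspond to roughly 965 MiB of usable RAM, while 131072 handles need about 1.25 GiB, which is why 2048 MB is enough.

```python
# Rough check of whether fs.file-max covers the nofile limit varnish is given.
# The 10 KiB-per-handle rule of thumb is an assumption about 2.6.32-era kernels.
NOFILE_FOR_VARNISH = 131072  # nofile value used in /etc/security/limits.conf


def estimated_file_max(usable_ram_kib: int) -> int:
    """Rule of thumb: about one file handle per 10 KiB of usable RAM."""
    return usable_ram_kib // 10


def ram_needed_kib(nofile: int = NOFILE_FOR_VARNISH) -> int:
    """Inverse of the rule of thumb: RAM needed for file-max to reach nofile."""
    return nofile * 10


def is_enough(file_max: int, nofile: int = NOFILE_FOR_VARNISH) -> bool:
    """True when the system-wide file-max is at least the per-process nofile limit."""
    return file_max >= nofile
```

With these helpers, `is_enough(98864)` is False (the 1 GB VM) and `is_enough(188464)` is True (after the upgrade to 2048 MB).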

8: Edit the configuration files

(1) vim /etc/sysconfig/varnish

VARNISH_LISTEN_PORT=80 (around line 66 of the file)

(2) vim /etc/security/limits.conf (append the following lines at the end)

varnish - nofile 131072

varnish - memlock 82000

varnish - nproc unlimited

(3)vim /etc/varnish/default.vcl

backend web1 {

.host = "172.25.36.2";

.port = "80";

}

9: Start varnish (no errors were reported)

/etc/init.d/varnish start

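10: Now ps aux | grep varnish shows two varnishd processes: one owned by root (the management process) and one owned by varnish (the child process it spawned)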

[root@server1 html]# ps aux | grep varnish

root 1022 0.0 0.0 112300 1248 ? Ss 11:59 0:00 /usr/sbin/varnishd -P /var/run/varnish.pid -a :80 -f /etc/varnish/default.vcl -T 127.0.0.1:6082 -t 120 -w 50,1000,120 -u varnish -g varnish -S /etc/varnish/secret -s file,/var/lib/varnish/varnish_storage.bin,1G

varnish 1023 0.0 0.3 2281696 6148 ? Sl 11:59 0:15 /usr/sbin/varnishd -P /var/run/varnish.pid -a :80 -f /etc/varnish/default.vcl -T 127.0.0.1:6082 -t 120 -w 50,1000,120 -u varnish -g varnish -S /etc/varnish/secret -s file,/var/lib/varnish/varnish_storage.bin,1G

root 1669 0.0 0.0 103244 856 pts/0 S+ 16:49 0:00 grep varnish

11: On server2, install httpd and start it: /etc/init.d/httpd start

12: Go to /var/www/html and create the page with vim index.html:

<h1>www.westos.org - server2</h1>

13: Test from the physical host:

Running curl 172.25.36.1 returns the content of server2's Apache document root:

<h1>www.westos.org - server2</h1>

14: Opening 172.25.36.1 in a browser also shows the content of server2's document root

Checking whether server1 is serving from its cache:

1: On server1, edit /etc/varnish/default.vcl:

sub vcl_deliver {
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT from westos cache";   // shown when served from cache
    } else {
        set resp.http.X-Cache = "MISS from westos cache";  // shown when not cached
    }
    return (deliver);
}

2: Reload the service:

/etc/init.d/varnish reload

3: Check from the physical host (curl -I 172.25.36.1): the first request shows MISS (not cached yet), all later ones show HIT (served from cache)

4: Purge the entire cache: varnishadm ban.url '.*$'

5: Purge only the /index.html page: varnishadm ban.url /index.html (the argument is a regular expression matched against the URL)
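The X-Cache header and the ban commands can be illustrated with a toy cache. This is a sketch, not Varnish internals: real bans are evaluated lazily when an object is looked up, whereas this model deletes matching entries immediately. It does reproduce two useful details: `obj.hits` is 0 on the request that filled the cache, and ban.url patterns are unanchored regular expressions, so `/index.html` also purges `/old/index.html`.

```python
import re
from typing import Dict


class ToyCache:
    """Toy URL-keyed cache that tracks obj.hits the way vcl_deliver sees it."""

    def __init__(self) -> None:
        self.hits: Dict[str, int] = {}  # url -> number of cache hits (obj.hits)

    def get(self, url: str) -> str:
        """Return the X-Cache value the vcl_deliver above would set."""
        if url in self.hits:
            self.hits[url] += 1
        else:
            self.hits[url] = 0   # miss: fetched from the backend and stored
        return "HIT from westos cache" if self.hits[url] > 0 else "MISS from westos cache"

    def ban_url(self, pattern: str) -> None:
        """Drop every cached URL the regex matches anywhere (unanchored search)."""
        rx = re.compile(pattern)
        for url in [u for u in self.hits if rx.search(u)]:
            del self.hits[url]
```

Calling `ban_url("/index.html")` after caching `/index.html`, `/old/index.html` and `/about.html` leaves only `/about.html` cached; `ban_url(".*$")` empties the cache.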

Goal: from the physical host, curl www.westos.org should reach server2 (www)

and curl bbs.westos.org should reach server3 (bbs)

1: Edit the configuration file on server1:

backend web1 {

.host = "172.25.36.2";

.port = "80";

}

backend web2 {

.host = "172.25.36.3";

.port = "80";

}

sub vcl_recv{

if (req.http.host ~ "^(www.)?westos.org"){

set req.http.host = "www.westos.org";

set req.backend = web1;

#return(pass);

}elsif (req.http.host ~ "^bbs.westos.org") {

set req.backend = web2;

} else {

error 404 "westos cache";

}

}

2: Install httpd on server3:

[root@server3 home]# cd /var/www/html/

[root@server3 html]# ls

index.html

[root@server3 html]# vim index.html

<h1> bbs.westos.org - server3</h1>

3: Test from the physical host:

[root@foundation36 ~]# curl www.westos.org

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl bbs.westos.org

<h1> bbs.westos.org - server3</h1>
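The if/elsif chain in vcl_recv is just an ordered list of regex tests on the Host header. The sketch below mirrors it in Python, with the www branch modeled as going to web1 (server2), matching the curl output above. One detail worth noticing: in the VCL the dots are unescaped, so `.` matches any character; the sketch escapes them, and the usage note shows what the unescaped form would also accept.

```python
import re
from typing import Optional

# The regexes from vcl_recv, with the dots escaped.
WWW = re.compile(r"^(www\.)?westos\.org")
BBS = re.compile(r"^bbs\.westos\.org")


def pick_backend(host: str) -> Optional[str]:
    """Mirror of the vcl_recv host dispatch (VCL's ~ is an unanchored regex match)."""
    if WWW.search(host):
        return "web1"
    if BBS.search(host):
        return "web2"
    return None  # vcl_recv answers: error 404 "westos cache"
```

With the unescaped pattern `"^(www.)?westos.org"`, a Host such as `wwwXwestosXorg` would also match; the escaped version rejects it.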

Round robin: running curl www.westos.org on the physical host should alternate between server2 and server3

1: Edit the configuration file on server1: vim /etc/varnish/default.vcl

Remember to reload the service after editing the configuration file

director lb round-robin {

{ .backend = web1;}

{ .backend = web2;}

}

sub vcl_recv{

if (req.http.host ~ "^(www.)?westos.org"){

set req.http.host = "www.westos.org";

set req.backend = lb;

# return(pass) skips the cache. It is used here only so the round robin is visible;
# do not keep it otherwise, since caching is the whole point of Varnish.
return(pass);

}elsif (req.http.host ~ "^bbs.westos.org") {

set req.backend = web2;

} else {

error 404 "westos cache";

}

}

2: Test from the physical host

[root@foundation36 ~]# curl www.westos.org

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl www.westos.org

<h1> bbs.westos.org - server3</h1>
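Why the return(pass) was needed for this test can be shown with a small simulation. A sketch, assuming a single URL requested repeatedly: with pass every request reaches the round-robin director, but with caching only the first request reaches a backend and every later one is served from the cached copy, so the alternation disappears.

```python
from itertools import cycle
from typing import Dict, List


def serve(urls: List[str], backends: List[str], use_pass: bool) -> List[str]:
    """Return which backend produced each response."""
    picker = cycle(backends)        # round-robin director
    cache: Dict[str, str] = {}      # url -> backend whose copy is cached
    served_by: List[str] = []
    for url in urls:
        if not use_pass and url in cache:
            served_by.append(cache[url])   # cache hit: no backend is contacted
            continue
        backend = next(picker)
        served_by.append(backend)
        if not use_pass:
            cache[url] = backend           # the first response gets cached
    return served_by
```

For four requests to `/`, `use_pass=True` gives server2, server3, server2, server3, while `use_pass=False` gives server2 four times.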

Setting up virtual hosts on server3:

1: Install httpd on server3

2: Edit httpd's configuration file

[root@server3 ~]# vim /etc/httpd/conf/httpd.conf

Create two virtual hosts:

<VirtualHost *:80>

DocumentRoot /www

ServerName www.westos.org

</VirtualHost>

<VirtualHost *:80>

DocumentRoot /bbs

ServerName bbs.westos.org

</VirtualHost>

Enable name-based virtual hosting on port 80:

NameVirtualHost *:80

3: Create the /www and /bbs directories

and put an index page into each of these document roots:

[root@server3 ~]# mkdir /www

[root@server3 ~]# mkdir /bbs

[root@server3 ~]# cd /www/

[root@server3 www]# vim index.html

<h1>www.westos.org - server3</h1>

[root@server3 www]# cd /bbs/

[root@server3 bbs]# ls

[root@server3 bbs]# vim index.html

<h1>bbs.westos.org - server3</h1>

4: On the physical host (the client), edit the local name resolution (/etc/hosts)

172.25.36.1 server1

172.25.36.3 server3 www.westos.org bbs.westos.org
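How Apache chooses between the two name-based virtual hosts can be sketched as a lookup on the Host header. This is a simplified model (it ignores ServerAlias and wildcards): the vhost whose ServerName matches the Host header wins, and when nothing matches, the first vhost defined in httpd.conf acts as the default.

```python
from typing import List, Tuple

# (ServerName, DocumentRoot) in the order they appear in httpd.conf
VHOSTS: List[Tuple[str, str]] = [
    ("www.westos.org", "/www"),
    ("bbs.westos.org", "/bbs"),
]


def document_root(host_header: str) -> str:
    """Pick the DocumentRoot for a request the way name-based vhosts do (simplified)."""
    host = host_header.split(":")[0].lower()   # drop an optional :port
    for server_name, root in VHOSTS:
        if host == server_name:
            return root
    return VHOSTS[0][1]   # no match: the first vhost is the default
```

This is why a request to the bare IP (for example curl 172.25.36.3) is answered from /www.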

CDN purge management:

1: Copy the bansys.zip archive onto server1 (the CDN node)

2: Install unzip, httpd and php

3: Unzip bansys.zip into /var/www/html

[root@server1 home]# unzip bansys.zip -d /var/www/html/

4: Go to /var/www/html

[root@server1 html]# ls

bansys

5: Go into bansys and edit config.php

[root@server1 bansys]# ls

class_socket.php config.php index.php purge_action.php static

[root@server1 bansys]# vim config.php

//varnish host list
//several host lists can be defined
$var_group1 = array(
    'host' => array('172.25.36.1'),
    'port' => '8080',
);

//varnish cluster definitions
//bind each domain to a host list
$VAR_CLUSTER = array(
    'www.westos.org' => $var_group1,
);

6: Edit Apache's main configuration file

and change its listening port to 8080 (varnish already uses port 80),

then restart httpd

[root@server1 bansys]# vim /etc/httpd/conf/httpd.conf

[root@server1 bansys]# /etc/init.d/httpd restart

Stopping httpd: [FAILED]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.36.1 for ServerName

[ OK ]

7: Move the files in bansys up into /var/www/html:

[root@server1 bansys]# mv * ../

[root@server1 bansys]# ls

[root@server1 bansys]# cd ..

[root@server1 html]# ls

bansys class_socket.php config.php index.php purge_action.php stat

8: Opening 172.25.36.1:8080 in a browser now shows the CDN purge management page

9: On server1, edit /etc/varnish/default.vcl

and add:

acl westos{

"127.0.0.1";

"172.25.36.0"/24;

}

sub vcl_recv {

if (req.request == "BAN") {

if (! client.ip ~ westos) {

error 405 "Not allowed.";

}

ban("req.url ~ " + req.url);

error 200 "ban added";

}

}
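The BAN branch above boils down to an address check against the `westos` ACL followed by a ban on the request URL. A sketch of that decision using Python's ipaddress module; the function names are hypothetical, and the ban expression is only built as a string, mirroring what the VCL passes to ban().

```python
import ipaddress
from typing import Tuple

# Networks from the "westos" acl above
WESTOS_ACL = [
    ipaddress.ip_network("127.0.0.1/32"),
    ipaddress.ip_network("172.25.36.0/24"),
]


def handle_ban(client_ip: str) -> Tuple[int, str]:
    """Return (status, reason) like the BAN branch of vcl_recv."""
    ip = ipaddress.ip_address(client_ip)
    if not any(ip in net for net in WESTOS_ACL):
        return 405, "Not allowed."
    return 200, "ban added"


def ban_expression(url: str) -> str:
    """The expression string handed to ban() for a given request URL."""
    return "req.url ~ " + url
```

A BAN from 172.25.36.250 (inside the /24) is accepted, while one from 172.25.37.5 gets 405.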

10: Edit the local name resolution on the physical host

172.25.36.1 server1 www.westos.org bbs.westos.org

172.25.36.3 server3

11: Comment out the return (pass) added earlier

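12: Test the CDN purge function

Run curl -I www.westos.org a few times on the physical host; the responses show the page is cached (X-Cache: HIT)

On the purge page choose HTTP, enter .* and submit; running curl -I www.westos.org again shows the cache has been cleared (X-Cache: MISS)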

LVS: server1 acts as the scheduler (director); server2 and server3 run Apache

rr: round-robin scheduling

-g: DR (direct routing) mode

DR mode:

1: server1 acts as the director

2: Locate the ipvsadm package in the yum repository (it is in the LoadBalancer directory of the ISO)

[root@foundation36 kiosk]# cd /var/www/html/rhel6.5

[root@foundation36 rhel6.5]# ls

EFI Packages RELEASE-NOTES-pa-IN.html

EULA README RELEASE-NOTES-pt-BR.html

EULA_de RELEASE-NOTES-as-IN.html RELEASE-NOTES-ru-RU.html

EULA_en RELEASE-NOTES-bn-IN.html RELEASE-NOTES-si-LK.html

EULA_es RELEASE-NOTES-de-DE.html RELEASE-NOTES-ta-IN.html

EULA_fr RELEASE-NOTES-en-US.html RELEASE-NOTES-te-IN.html

EULA_it RELEASE-NOTES-es-ES.html RELEASE-NOTES-zh-CN.html

EULA_ja RELEASE-NOTES-fr-FR.html RELEASE-NOTES-zh-TW.html

EULA_ko RELEASE-NOTES-gu-IN.html repodata

EULA_pt RELEASE-NOTES-hi-IN.html ResilientStorage

EULA_zh RELEASE-NOTES-it-IT.html RPM-GPG-KEY-redhat-beta

GPL RELEASE-NOTES-ja-JP.html RPM-GPG-KEY-redhat-release

HighAvailability RELEASE-NOTES-kn-IN.html ScalableFileSystem

images RELEASE-NOTES-ko-KR.html Server

isolinux RELEASE-NOTES-ml-IN.html TRANS.TBL

LoadBalancer RELEASE-NOTES-mr-IN.html

media.repo RELEASE-NOTES-or-IN.html


[root@foundation36 rhel6.5]# cd LoadBalancer/

[root@foundation36 LoadBalancer]# ls

listing repodata TRANS.TBL

[root@foundation36 LoadBalancer]# cd repodata/

[root@foundation36 repodata]# ls

0e090fe29fd33203c62b483f2332ff281c9f00d8f89010493da60940d95dffdf-other.sqlite.bz2

21865f1138fb05ff24de3914e00639e4848577e11710fad05eef652faf0a6df6-primary.sqlite.bz2

3d38c0d2cf11c4fb29ddfd10cd9f019aed2375a7ceccba0e462ffb825bd0c8c9-other.xml.gz

5c6dc06784de5f4f26aa45d3733027baf2f591f3d22594d43f86d50e69f82348-filelists.xml.gz

75abe16774cfeeee3114bdaa50d7c21a02fa214db43f77510f1b3d3f6dcbe01a-filelists.sqlite.bz2

80b0529eea83326f934c6226b67c8cd6d918c2e15576c515bd83433ae0fbef7b-comps-rhel6-LoadBalancer.xml.gz

a347d2267d6aa9956833d217d83c83ef18ef7be44e507e066be94b3d95859352-primary.xml.gz

e3fd7ebdc8f4e1cfff7f16aaaa9ffcf6f11075bffdac180f47780bbc239c5379-comps-rhel6-LoadBalancer.xml

productid

productid.gz

repomd.xml

TRANS.TBL

3: Add the LoadBalancer repository (which provides ipvsadm) to the yum configuration:

[rhel6.5]

name=rhel6.5

baseurl=http://172.25.36.250/rhel6.5

gpgcheck=0

enabled=1

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.36.250/rhel6.5/LoadBalancer

gpgcheck=0

enabled=1

4: Install ipvsadm on the director server1

[root@server1 ~]# yum install ipvsadm -y

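5: On server1, create the virtual service on the VIP 172.25.36.100 and schedule it across server2 and server3 (the VIP itself is also configured on server1's eth0 with ip addr add 172.25.36.100/24 dev eth0; it is removed again later)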

[root@server1 ~]# ipvsadm -A -t 172.25.36.100:80 -s rr

[root@server1 ~]# ipvsadm -a -t 172.25.36.100:80 -r 172.25.36.2:80 -g

[root@server1 ~]# ipvsadm -a -t 172.25.36.100:80 -r 172.25.36.3:80 -g

[root@server1 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.36.100:http rr

-> server2:http Route 1 0 0

-> server3:http Route 1 0 0

5: Start Apache on server2 and server3

[root@server2 ~]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.36.2 for ServerName

6: Add the VIP 172.25.36.100 to eth0 on server2 and server3

[root@server2 ~]# ip addr add 172.25.36.100/32 dev eth0

[root@server2 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:be:3e brd ff:ff:ff:ff:ff:ff

inet 172.25.36.2/16 brd 172.25.255.255 scope global eth0

inet 172.25.36.100/32 scope global eth0

inet6 fe80::5054:ff:fe82:be3e/64 scope link

valid_lft forever preferred_lft forever
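Step 6 is needed because of how DR forwarding works: the director only rewrites the Ethernet addresses, so the packet still carries the VIP as its destination IP, and a real server that does not own the VIP would drop it. A toy model of one request, assuming the client is the physical host at 172.25.36.250 and using the MAC addresses of server1 and server2 shown in this document (the client MAC is a placeholder):

```python
from dataclasses import dataclass, replace
from typing import Set, Tuple

VIP = "172.25.36.100"


@dataclass(frozen=True)
class Frame:
    """An Ethernet frame carrying an IP packet (only the fields that matter here)."""
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str


def director_forward(frame: Frame, director_mac: str, rs_mac: str) -> Frame:
    """LVS -g: rewrite only the MAC addresses; the IP header is left untouched."""
    return replace(frame, src_mac=director_mac, dst_mac=rs_mac)


def rs_accepts(frame: Frame, local_ips: Set[str]) -> bool:
    """A real server only processes packets addressed to one of its own IPs."""
    return frame.dst_ip in local_ips


def rs_reply_addresses(frame: Frame) -> Tuple[str, str]:
    """(source, destination) of the reply: it goes straight to the client from the VIP."""
    return frame.dst_ip, frame.src_ip
```

Because the reply leaves the real server with the VIP as its source, it bypasses the director entirely, which is what makes DR mode cheap for the director.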

7: Test from the physical host:

Requests alternate between server2 and server3:

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

On server1, ipvsadm -ln shows the connection counters for each real server

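8: After the client's ARP cache is cleared, the VIP gets bound to whichever of server1, server2 and server3 answers the ARP request first (here it was server3)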

[root@foundation36 ~]# arp -an | grep 100

? (172.25.36.100) at 52:54:00:82:be:3e [ether] on br0

[root@foundation36 ~]# arp -d 172.25.36.100

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

9: [root@foundation36 ~]# arp -d 172.25.36.100 (clear the ARP cache again)

Which of server1, server2 and server3 the VIP now resolves to is a matter of luck

10: Save the rules on server1

[root@server1 ~]# /etc/init.d/ipvsadm save

ipvsadm: Saving IPVS table to /etc/sysconfig/ipvsadm: [ OK ]

[root@server1 ~]# vi /etc/sysconfig/ipvsadm

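This setup is risky: because server2 and server3 also answer ARP requests for the VIP, the client can bypass the director. Clients should only reach the director server1, never the backend servers server2 and server3 directly.

So add ARP firewall rules on server2 and server3 so that the client can only reach the director server1: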

1: Install on server2 and server3:

[root@server2 ~]# yum install arptables_jf -y

2: Initially the ARP firewall has no rules

[root@server2 ~]# arptables -L

Chain IN (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

Chain OUT (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

Chain FORWARD (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

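3: Add the ARP rules on server2 and server3 (drop incoming ARP requests for the VIP, and rewrite the source address of outgoing ARP packets from the VIP to the server's own IP):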

[root@server2 ~]# arptables -A IN -d 172.25.36.100 -j DROP

[root@server2 ~]# arptables -A OUT -s 172.25.36.100 -j mangle --mangle-ip-s 172.25.36.2

[root@server2 ~]# arptables -L

Chain IN (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

DROP anywhere 172.25.36.100 anywhere anywhere any any any any

Chain OUT (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

mangle 172.25.36.100 anywhere anywhere anywhere any any any any --mangle-ip-s server2

Chain FORWARD (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

4: Save the rules

[root@server2 ~]# /etc/init.d/arptables_jf save

Saving current rules to /etc/sysconfig/arptables: [ OK ]

5: Do the same on server3:

(1)[root@server3 ~]# yum install arptables_jf -y

(2)[root@server3 ~]# arptables -A IN -d 172.25.36.100 -j DROP

(3)[root@server3 ~]# arptables -A OUT -s 172.25.36.100 -j mangle --mangle-ip-s 172.25.36.3

(4)[root@server3 ~]# /etc/init.d/arptables_jf save

Saving current rules to /etc/sysconfig/arptables: [ OK ]
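The effect of the two arptables rules can be modeled as a filter on who answers ARP for the VIP. A sketch with hypothetical helper names; each host is a dict holding its addresses and its ARP rules. Before the rules all three machines answer "who-has 172.25.36.100" (hence the race seen earlier); afterwards only the director does, and any ARP packet a real server sends from the VIP is rewritten to carry its own RIP.

```python
from typing import Dict, List

VIP = "172.25.36.100"
DIRECTOR: Dict = {"name": "server1", "ips": {"172.25.36.1", VIP},
                  "arp_drop_in": set(), "arp_mangle_out": {}}


def real_server(name: str, rip: str, with_rules: bool) -> Dict:
    """A real server holding the VIP, optionally with the arptables rules applied."""
    return {
        "name": name,
        "ips": {rip, VIP},
        "arp_drop_in": {VIP} if with_rules else set(),       # arptables -A IN -d VIP -j DROP
        "arp_mangle_out": {VIP: rip} if with_rules else {},   # -j mangle --mangle-ip-s RIP
    }


def arp_responders(hosts: List[Dict], target_ip: str) -> List[str]:
    """Hosts that would answer 'who-has target_ip' on the shared LAN."""
    return [h["name"] for h in hosts
            if target_ip in h["ips"] and target_ip not in h["arp_drop_in"]]


def arp_sender_ip(host: Dict, src_ip: str) -> str:
    """Source IP written into an outgoing ARP packet after the mangle rule."""
    return host["arp_mangle_out"].get(src_ip, src_ip)
```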

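6: From the client (the physical host), clear the ARP cache and test again

The VIP now always resolves to the director server1, which distributes the requests between server2 and server3; the client can no longer reach them directly: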

[root@foundation36 ~]# arp -d 172.25.36.100

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[root@foundation36 ~]# curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[root@foundation36 ~]# curl 172.25.36.100

Tunnel (TUN) mode:

1: Clear the previous rules on server1

[root@server1 ~]# ipvsadm -C

[root@server1 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

2: Load the ipip module:

[root@server1 ~]# modprobe ipip

A tunl0 interface appears

3: Remove the IP 172.25.36.100/24 from eth0

[root@server1 ~]# ip addr del 172.25.36.100/24 dev eth0

4: Add the IP 172.25.36.100/24 to tunl0

[root@server1 ~]# ip addr add 172.25.36.100/24 dev tunl0

[root@server1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:a5:d0:68 brd ff:ff:ff:ff:ff:ff

inet 172.25.36.1/24 brd 172.25.36.255 scope global eth0

inet6 fe80::5054:ff:fea5:d068/64 scope link

valid_lft forever preferred_lft forever

3: tunl0: <NOARP> mtu 1480 qdisc noop state DOWN

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.25.36.100/24 scope global tunl0

4: Bring tunl0 up

[root@server1 ~]# ip link set up tunl0

[root@server1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:a5:d0:68 brd ff:ff:ff:ff:ff:ff

inet 172.25.36.1/24 brd 172.25.36.255 scope global eth0

inet6 fe80::5054:ff:fea5:d068/64 scope link

valid_lft forever preferred_lft forever

3: tunl0: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.25.36.100/24 scope global tunl0

5: On server2, run modprobe ipip to create the tunl0 tunnel interface

6: Remove 172.25.36.100/32 from eth0 and add it to tunl0

[root@server2 ~]# ip addr del 172.25.36.100/32 dev eth0

[root@server2 ~]# ip addr add 172.25.36.100/32 dev tunl0
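In TUN mode the director does not touch the client's packet at all; it wraps it in a second IP header addressed to the real server (IP-in-IP), which is why the real server needs the ipip module and the VIP on tunl0. A toy model, assuming the client is 172.25.36.250 and using the director and server2 addresses from this lab:

```python
from dataclasses import dataclass
from typing import Optional, Set, Union

VIP = "172.25.36.100"


@dataclass(frozen=True)
class IPPacket:
    src: str
    dst: str
    payload: Union[str, "IPPacket"]


def ipip_encapsulate(inner: IPPacket, dip: str, rip: str) -> IPPacket:
    """Director: wrap the unchanged client packet in a new IP header DIP -> RIP."""
    return IPPacket(src=dip, dst=rip, payload=inner)


def ipip_decapsulate(outer: IPPacket, local_ips: Set[str]) -> Optional[IPPacket]:
    """Real server (tunl0): unwrap, and keep the inner packet only if its
    destination (the VIP) is configured locally."""
    inner = outer.payload
    if not isinstance(inner, IPPacket) or inner.dst not in local_ips:
        return None
    return inner
```

Without the VIP on the real server, the unwrapped packet is addressed to an IP the host does not own and is discarded.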

7: Reverse path filtering must be disabled; first check the current values with sysctl -a | grep rp_filter

[root@server2 ~]# sysctl -a | grep rp_filter

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.all.arp_filter = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.arp_filter = 0

net.ipv4.conf.lo.rp_filter = 1

net.ipv4.conf.lo.arp_filter = 0

net.ipv4.conf.eth0.rp_filter = 1

net.ipv4.conf.eth0.arp_filter = 0

net.ipv4.conf.tunl0.rp_filter = 1

net.ipv4.conf.tunl0.arp_filter = 0

8: Turn off every rp_filter that is set to 1

[root@server2 ~]# sysctl -w net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.rp_filter = 0

[root@server2 ~]# sysctl -w net.ipv4.conf.lo.rp_filter=0

net.ipv4.conf.lo.rp_filter = 0

[root@server2 ~]# sysctl -w net.ipv4.conf.eth0.rp_filter=0

net.ipv4.conf.eth0.rp_filter = 0

[root@server2 ~]# sysctl -w net.ipv4.conf.tunl0.rp_filter=0

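net.ipv4.conf.tunl0.rp_filter = 0

9: net.ipv4.conf.default.rp_filter must also be set to 0 in /etc/sysctl.conf; otherwise the value 1 from that file is applied again at the next sysctl -p or reboot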

[root@server2 ~]# vim /etc/sysctl.conf

10: Reload the settings

[root@server2 ~]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

error: "net.bridge.bridge-nf-call-ip6tables" is an unknown key

error: "net.bridge.bridge-nf-call-iptables" is an unknown key

error: "net.bridge.bridge-nf-call-arptables" is an unknown key

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

Checking again shows that every rp_filter value is now 0:

[root@server2 ~]# sysctl -a | grep rp_filter

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.all.arp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_filter = 0

net.ipv4.conf.lo.rp_filter = 0

net.ipv4.conf.lo.arp_filter = 0

net.ipv4.conf.eth0.rp_filter = 0

net.ipv4.conf.eth0.arp_filter = 0

net.ipv4.conf.tunl0.rp_filter = 0

net.ipv4.conf.tunl0.arp_filter = 0

Do the same on server3 as on server2.
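Why rp_filter breaks TUN mode: the decapsulated packet arrives on tunl0, but the route back to the client's address goes out through eth0, so a strict reverse-path check rejects it. A simplified sketch of the check, assuming the behavior described in the kernel's ip-sysctl documentation, where the larger of conf.all.rp_filter and conf.<iface>.rp_filter applies. This is why all=0 alone was not enough while tunl0 was still 1.

```python
from typing import Optional


def passes_rp_filter(in_iface: str, route_iface_to_src: Optional[str],
                     all_value: int, iface_value: int) -> bool:
    """Simplified reverse-path check for one incoming packet.

    route_iface_to_src is the interface the kernel would use to reach the
    packet's source address (None if the source is unroutable)."""
    mode = max(all_value, iface_value)   # the larger of conf.all and conf.<iface> applies
    if mode == 0:
        return True                              # no source validation
    if mode == 1:
        return in_iface == route_iface_to_src    # strict: must arrive where replies would leave
    return route_iface_to_src is not None        # loose: source must be routable at all
```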

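11: Test from the client (the physical host)

As in DR mode, the client only reaches the director server1, which distributes the requests to server2 and server3: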

[kiosk@foundation36 ~]$ curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[kiosk@foundation36 ~]$ curl 172.25.36.100

<h1>www.westos.org - server2</h1>

[kiosk@foundation36 ~]$ curl 172.25.36.100

<h1>www.westos.org - server3</h1>

[kiosk@foundation36 ~]$ curl 172.25.36.100

<h1>www.westos.org - server2</h1>

NAT mode:

Diagram:

Virtual server via network address translation:

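After the director receives a client request, it picks a real server according to the scheduling algorithm and forwards the request to it. The real server processes the request and sends the reply via its default route (in NAT mode the real servers' default gateway must be the director's IP). When the director (LB) receives the reply, it rewrites the source IP and sends the response to the client.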

How it works in detail:

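1) The client sends its request to the LB (director);

2) The LB accepts the request, picks a real server according to the scheduling algorithm, rewrites the packet's destination IP and port to those of that server, and records the connection in a hash table;

3) The real server processes the packet; since its default gateway is the LB, the response is sent back to the LB;

4) When the LB receives the response, it looks up the connection in the hash table, rewrites the source IP to its own, and sends the data to the client.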

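Note: NAT mode can do port forwarding. Client-to-server traffic undergoes only DNAT (destination IP translation) and server-to-client traffic undergoes SNAT (source IP translation); throughout, the real servers stay invisible to the client, which keeps them private.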
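The four steps above can be sketched as a director that keeps a connection table: DNAT on the way in, SNAT on the way out, with real server ports allowed to differ from the VIP port (port forwarding). This is a toy model, not LVS code; the VIP 172.25.60.1 comes from this lab, while the internal 192.168.0.x real server addresses and the client address are hypothetical.

```python
from itertools import cycle
from typing import Dict, List, Tuple

Addr = Tuple[str, int]   # (IP, port)


class NatDirector:
    """Toy LVS-NAT director: DNAT on the way in, SNAT on the way out."""

    def __init__(self, vip: Addr, real_servers: List[Addr]) -> None:
        self.vip = vip
        self.rr = cycle(real_servers)          # round-robin scheduler
        self.conns: Dict[Addr, Addr] = {}      # client -> chosen real server

    def inbound(self, client: Addr, dst: Addr) -> Tuple[Addr, Addr]:
        """Client -> VIP packet: rewrite the destination (DNAT)."""
        if dst != self.vip:
            raise ValueError("not addressed to the virtual service")
        if client not in self.conns:
            self.conns[client] = next(self.rr)  # new connection: schedule it
        return client, self.conns[client]

    def outbound(self, src: Addr, client: Addr) -> Tuple[Addr, Addr]:
        """Real server -> client reply (routed via the director): rewrite the source (SNAT)."""
        if self.conns.get(client) != src:
            raise ValueError("no matching connection entry")
        return self.vip, client
```

Packets of an existing connection always go to the same real server, and the client only ever sees the VIP as the source of the replies.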

II. Lab deployment:

(1) Lab environment:

1: All hosts run RHEL 6.5 with SELinux disabled and the firewall turned off

server1 Load Balancer (director) <two NICs: eth0, eth1>

server2 Real Server

server3 Real Server

server4 Clients (plays the client role for access tests; here the physical host acts as the client)

(2) Procedure:

server1(LB):

1: Add a second NIC, eth1, to server1

2: Configure its IP address and gateway (use an IP in the same subnet as the client)

[root@server1 ~]# cd /etc/sysconfig/network-scripts/

[root@server1 network-scripts]# vim ifcfg-eth1

[root@server1 ~]# /etc/init.d/network restart

3: On the director server1:

4: Check server1's gateway:

5: On server1:

[root@server1 ~]# echo "1" > /proc/sys/net/ipv4/ip_forward ## enable IP forwarding (a kernel parameter) so packets can pass between the two NICs

[root@server1 ~]# cat /proc/sys/net/ipv4/ip_forward

1

6: server2 (RS)

[root@server2 ~]# cd /var/www/html

[root@server2 ~]# vim index.html

www.westos.org - server2

[root@server2 ~]# /etc/init.d/httpd start

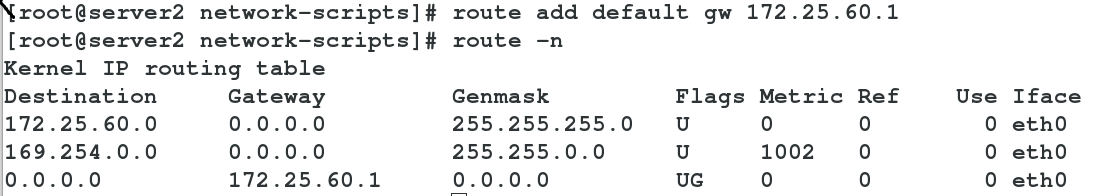

[root@server2 network-scripts]# route add default gw 172.25.60.1 ## add the default gateway

[root@server2 network-scripts]# route -n

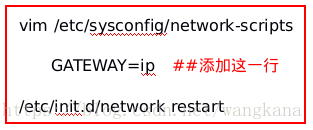

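Note: there are two ways to add the gateway:

(1) with the command above: route add default gw ip ## temporary

(2) by editing the interface configuration file, e.g. GATEWAY= in /etc/sysconfig/network-scripts/ifcfg-eth0 (permanent)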

server3(RS):

[root@server3 ~]# cd /var/www/html

[root@server3 ~]# vim index.html

bbs.westos.org

[root@server3 ~]# /etc/init.d/httpd restart

[root@server3 network-scripts]# route add default gw 172.25.60.1

[root@server3 network-scripts]# route -n

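server4 (client test): the client's IP must be in the same subnet as server1's eth1

Requests alternate between server2 and server3

Why the gateway matters: the client only knows 172.25.60.1 (the director's VIP) and sends its requests there, while the real servers' RIPs are on a different subnet. For the replies to make it back, each RS must use the LB's address as its default gateway, so that the responses pass back through the director, which rewrites them and forwards them to the client.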

DR mode again (what to do when a real server goes down)

On the director server1:

1: ipvsadm -C first clears the tunnel-mode rules created earlier

2: Unload the tunl0 tunnel:

[root@server1 ~]# modprobe -r ipip

[root@server1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:a5:d0:68 brd ff:ff:ff:ff:ff:ff

inet 172.25.36.1/24 brd 172.25.36.255 scope global eth0

inet6 fe80::5054:ff:fea5:d068/64 scope link

valid_lft forever preferred_lft forever

3: The DR rules saved earlier are still in the saved file; clear them:

[root@server1 ~]# vim /etc/sysconfig/ipvsadm

[root@server1 ~]# /etc/init.d/ipvsadm save

ipvsadm: Saving IPVS table to /etc/sysconfig/ipvsadm: [ OK ]

[root@server1 ~]# cat /etc/sysconfig/ipvreferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:37:e4:b8 brd ff:ff:ff:ff:ff:ff

inet 172.25.0.1/24 brd 172.25.0.255 scope global eth0

inet 172.25.0.100/32 scope global eth0

inet6 fe80::5054:ff:fe37:e4b8/64 scope link

valid_lft forever preferred_lft forever

4: Unload the tunl0 tunnel on server2 and server3

[root@server2 ~]# modprobe -r ipip

[root@server2 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:be:3e brd ff:ff:ff:ff:ff:ff

inet 172.25.36.2/16 brd 172.25.255.255 scope global eth0

inet6 fe80::5054:ff:fe82:be3e/64 scope link

valid_lft forever preferred_lft forever

5: Add the virtual IP to server2's eth0

[root@server2 ~]# ip addr add 172.25.36.100/32 dev eth0

[root@server2 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:be:3e brd ff:ff:ff:ff:ff:ff

inet 172.25.36.2/16 brd 172.25.255.255 scope global eth0

inet 172.25.36.100/32 scope global eth0

inet6 fe80::5054:ff:fe82:be3e/64 scope link

valid_lft forever preferred_lft forever

6: Do the same on server3:

[root@server3 ~]# modprobe -r ipip

[root@server3 ~]# ip addrshow

Object "addrshow" is unknown, try "ip help".

[root@server3 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:a7:a0:f1 brd ff:ff:ff:ff:ff:ff

inet 172.25.36.3/16 brd 172.25.255.255 scope global eth0

inet6 fe80::5054:ff:fea7:a0f1/64 scope link

valid_lft forever preferred_lft forever

[root@server3 ~]# ip addr add 172.25.36.100/32 dev eth0

[root@server3 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:a7:a0:f1 brd ff:ff:ff:ff:ff:ff

inet 172.25.36.3/16 brd 172.25.255.255 scope global eth0

inet 172.25.36.100/32 scope global eth0

inet6 fe80::5054:ff:fea7:a0f1/64 scope link

valid_lft forever preferred_lft forever

7: arptables -L

On server2 and server3 this shows that the earlier ARP firewall rules are still in place

8: Now test from the client (the physical host)

Requests alternate between server2 and server3

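9: Run /etc/init.d/httpd stop

to stop httpd on server3, then test again from the client (the physical host)

Requests sent to server2 succeed, but requests sent to server3 fail with "connection refused"

This is unacceptable in a real production environment: users cannot be left with a site that works one moment and fails the next

This has to be handled on server1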

10: Configure the yum repositories on server1 (to install the high-availability packages)

[rhel6.5]

name=rhel6.5

baseurl=http://172.25.36.250/rhel6.5

gpgcheck=0

enabled=1

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.36.250/rhel6.5/LoadBalancer

gpgcheck=0

enabled=1

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.36.250/rhel6.5/HighAvailability

gpgcheck=0

enabled=1

11: Also install ldirectord-3.9.5-3.1.x86_64.rpm and its dependencies

12: rpm -qa | grep ldirec checks that the package is installed

13: rpm -qpl ldirectord-3.9.5-3.1.x86_64.rpm lists all files in the package, including the sample configuration file

14:cd /etc/ha.d

15: cp /usr/share/doc/ldirectord-3.9.5/ldirectord.cf . (copy it into the current directory)

[root@server1 ha.d]# ls

ldirectord.cf resource.d shellfuncs

16:[root@server1 ha.d]# vim ldirectord.cf

Edit the configuration file:

# Sample for an http virtual service
virtual=172.25.36.100:80
        real=172.25.36.2:80 gate
        real=172.25.36.3:80 gate
        real=192.168.6.6:80 gate
        fallback=127.0.0.1:80 gate
        service=http
        scheduler=rr
        #persistent=600
        #netmask=255.255.255.255
        protocol=tcp
        checktype=negotiate
        checkport=80
        request="index.html"
        #receive="Test Page"
        #virtualhost=www.x.y.z

17: Start the service

[root@server1 ha.d]# /etc/init.d/ldirectord start

Starting ldirectord... success

[root@server1 ha.d]#

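18: Test from the physical host again: even with httpd on server3 stopped,

requests now go only to server2, and the "connection refused" errors from server3 no longer appear

19: If httpd is stopped on both server2 and server3, requests fall back to server1 (the fallback=127.0.0.1:80 entry).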
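The behavior in steps 18 and 19 can be sketched as "keep only the real servers that pass the health check, otherwise use the fallback". This is not ldirectord's code: `negotiate_check()` is a hypothetical helper that approximates checktype=negotiate with request="index.html" by fetching the page over HTTP, and the health check is passed in as a function so the selection logic can be tried without a network.

```python
import http.client
import urllib.request
from typing import Callable, List, Optional


def negotiate_check(rs: str, request: str = "index.html",
                    receive: Optional[str] = None, timeout: float = 3) -> bool:
    """Fetch http://<rs>/<request>; healthy if it answers (and contains `receive`, if set)."""
    try:
        with urllib.request.urlopen(f"http://{rs}/{request}", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):   # refused, timed out, HTTP error status
        return False
    return receive is None or receive in body


def active_real_servers(real_servers: List[str], is_healthy: Callable[[str], bool],
                        fallback: str) -> List[str]:
    """Keep only healthy real servers; use the fallback when none is left."""
    alive = [rs for rs in real_servers if is_healthy(rs)]
    return alive if alive else [fallback]
```

For example, `active_real_servers(["172.25.36.2:80", "172.25.36.3:80"], negotiate_check, "127.0.0.1:80")` would return only the servers whose index.html currently answers.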

[root@server1 ha.d]# cd /var/www/html/

[root@server1 html]# ls

index.html

[root@server1 html]# vim index.html # this page (server1's Apache document root) is what clients now see

20: Remember to change httpd's listening port back to 80 (the fallback entry points to 127.0.0.1:80)

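The steps above cover the case where a backend server (server2 or server3) fails

The following steps cover what to do if the director server1 itself fails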

1: A standby director, server4, is needed

2: Create vm4 from the snapshot image: qemu-img create -f qcow2 -b rhel6.5.qcow2 vm4

Remember to change vm4's hostname and IP

3: Copy server1's yum repository file to server4

[root@server1 ~]# cat /etc/yum.repos.d/yum.repo

[rhel6.5]

name=rhel6.5

baseurl=http://172.25.36.250/rhel6.5

gpgcheck=0

enabled=1

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.36.250/rhel6.5/LoadBalancer

gpgcheck=0

enabled=1

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.36.250/rhel6.5/HighAvailability

gpgcheck=0

enabled=1

4: Unpack keepalived on server1

[root@server1 home]# ls

bansys.zip varnish-3.0.5-1.el6.x86_64.rpm

keepalived-2.0.6.tar.gz varnish-libs-3.0.5-1.el6.x86_64.rpm

ldirectord-3.9.5-3.1.x86_64.rpm

[root@server1 home]# tar zxf keepalived-2.0.6.tar.gz

[root@server1 home]# ls

bansys.zip keepalived-2.0.6.tar.gz varnish-3.0.5-1.el6.x86_64.rpm

keepalived-2.0.6 ldirectord-3.9.5-3.1.x86_64.rpm varnish-libs-3.0.5-1.el6.x86_64.rpm

5: Install gcc and the build dependencies (source build in three steps: configure, make, make install):

[root@server1 keepalived-2.0.6]# yum install openssl-devel

6: ./configure --prefix=/usr/local/keepalived --with-init=SYSV

7:[root@server1 keepalived-2.0.6]# make && make install

8: Go to the installation directory and make the keepalived init script executable

[root@server1 keepalived-2.0.6]# cd /usr/local/

[root@server1 local]# ll

total 44

drwxr-xr-x. 2 root root 4096 Jun 28 2011 bin

drwxr-xr-x. 2 root root 4096 Jun 28 2011 etc

drwxr-xr-x. 2 root root 4096 Jun 28 2011 games

drwxr-xr-x. 2 root root 4096 Jun 28 2011 include

drwxr-xr-x 6 root root 4096 Jan 25 15:37 keepalived

drwxr-xr-x. 2 root root 4096 Jun 28 2011 lib

drwxr-xr-x. 2 root root 4096 Jun 28 2011 lib64

drwxr-xr-x. 2 root root 4096 Jun 28 2011 libexec

drwxr-xr-x. 2 root root 4096 Jun 28 2011 sbin

drwxr-xr-x. 5 root root 4096 Jan 24 10:25 share

drwxr-xr-x. 2 root root 4096 Jun 28 2011 src

[root@server1 local]# cd keepalived/

[root@server1 keepalived]# ls

bin etc sbin share

[root@server1 keepalived]# cd etc/

[root@server1 etc]# ls

init keepalived rc.d sysconfig

[root@server1 etc]# cd rc.d/

[root@server1 rc.d]# ls

init.d

[root@server1 rc.d]# cd init.d/

[root@server1 init.d]# ls

keepalived

[root@server1 init.d]# chmod +x keepalived

9: Create symbolic links for the relevant files and directories:

[root@server1 init.d]# ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

[root@server1 init.d]# ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@server1 init.d]# ln -s /usr/local/keepalived/etc/keepalived/ /etc/

[root@server1 init.d]# ln -s /usr/local/keepalived/sbin/keepalived /sbin/

10: Stop ldirectord and disable it at boot:

[root@server1 init.d]# /etc/init.d/ldirectord stop

Stopping ldirectord... success

[root@server1 init.d]# chkconfig ldirectord off

11: Remove the 172.25.36.100 address added to eth0 earlier

12:[root@server1 init.d]# ip addr del 172.25.36.100/24 dev eth0

13:[root@server1 keepalived]# ls

keepalived.conf samples

[root@server1 keepalived]# vim keepalived.conf (edit the configuration file)

! Configuration File for keepalived
global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict    # commented out: in strict mode keepalived adds firewall rules that block traffic to the VIP
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER            # master (change to BACKUP on server4)
    interface eth0
    virtual_router_id 36
    priority 100            # priority 100 (use 50 on server4)
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.36.100
    }
}

virtual_server 172.25.36.100 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    #persistence_timeout 50   # commented out as well
    protocol TCP

    real_server 172.25.36.2 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }

    real_server 172.25.36.3 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

14: Copy the installation to server4, which acts as the backup; two settings must be changed in its configuration file (state BACKUP and the priority)

[root@server1 local]# scp -r keepalived/ root@server4:/usr/local/

15: Start the service

[root@server4 keepalived]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

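16: Test from the physical host again

Requests alternate between server2 and server3

If httpd on server2 is stopped, the client is sent straight to server3 and no "connection refused" errors appear.

17: At this point 172.25.36.100 sits on server1. After stopping keepalived on server1 (/etc/init.d/keepalived stop),

the VIP 172.25.36.100 automatically moves to the backup host server4.

So even if the master director server1 fails, the backup director server4 takes over.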
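The failover in step 17 follows the VRRP election rule: among the routers still sending advertisements, the one with the highest priority owns the VIP, and a tie goes to the higher primary IP address. A sketch of that rule; server4's address 172.25.36.4 is an assumption. It also shows what to expect when server1 comes back: with keepalived's default preemption, priority 100 takes the VIP back from server4.

```python
import ipaddress
from typing import Dict, List, Optional


def vrrp_master(routers: List[Dict]) -> Optional[str]:
    """Return the router that should own the VIP.

    Highest priority among live routers wins; a tie goes to the higher
    primary IP address, as in the VRRP RFCs."""
    live = [r for r in routers if r["alive"]]
    if not live:
        return None
    best = max(live, key=lambda r: (r["priority"], ipaddress.ip_address(r["ip"])))
    return best["name"]
```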