Optical Flow Guided Feature: A Fast and Robust Motion Representation for Video Action Recognition (Paper Walkthrough)

Citation: Sun S, Kuang Z, Ouyang W, et al. Optical Flow Guided Feature: A Fast and Robust Motion Representation for Video Action Recognition[J]. 2017.

1. Abstract

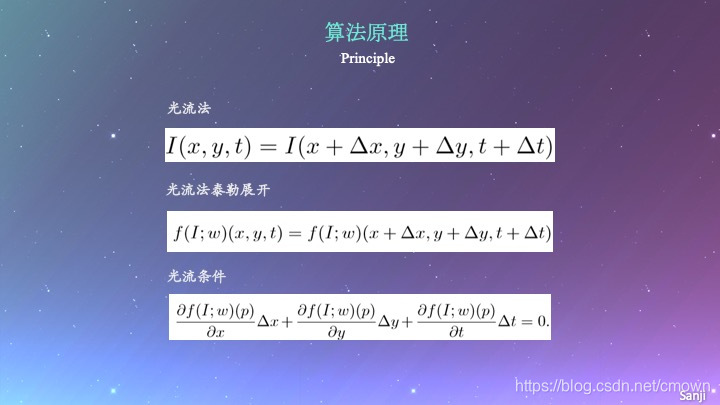

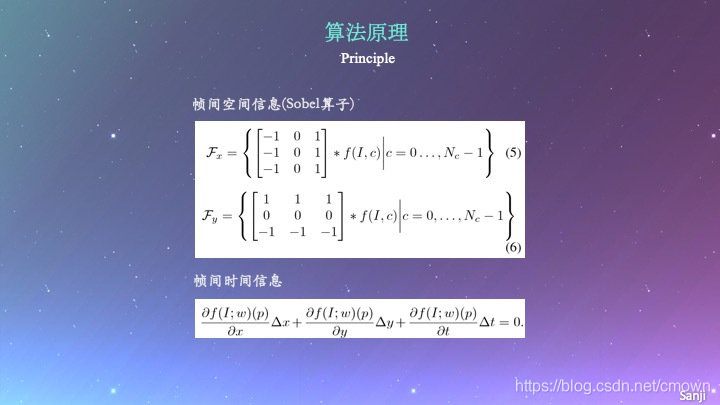

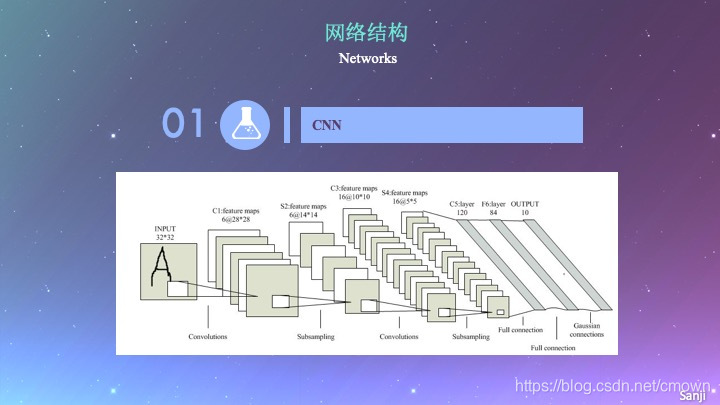

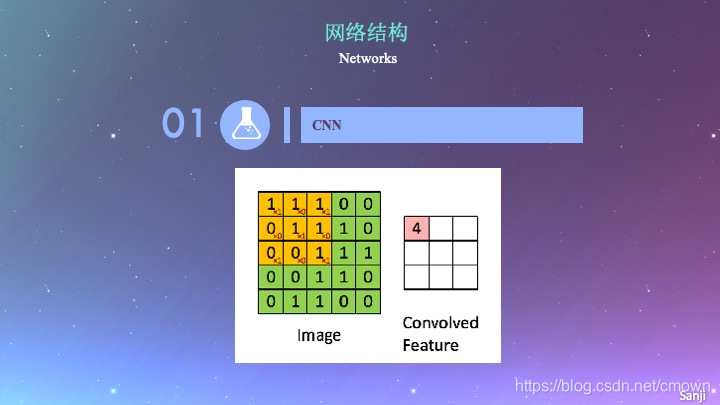

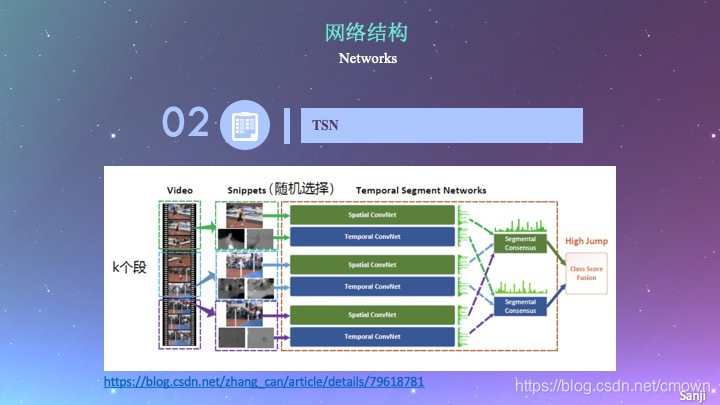

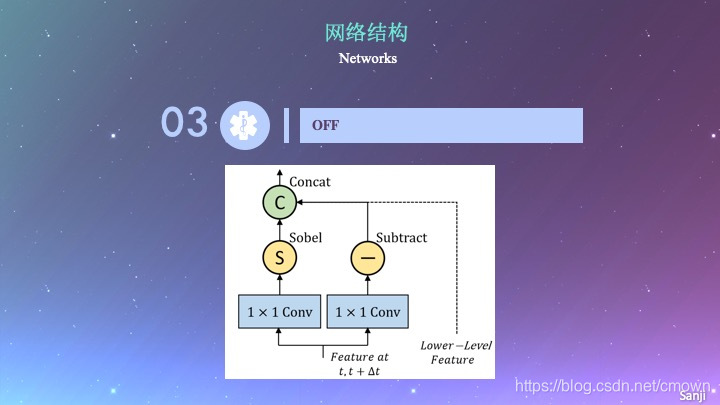

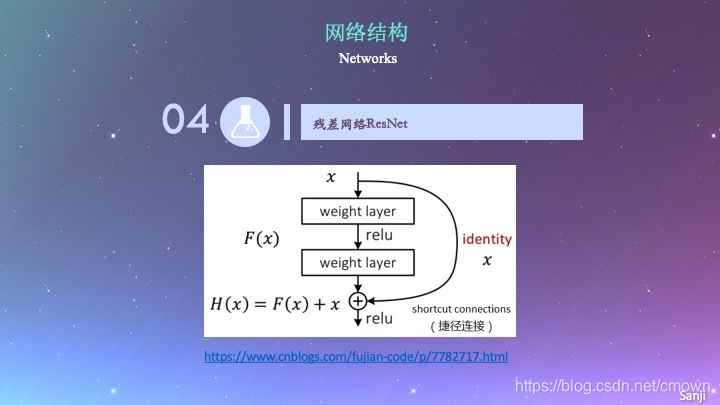

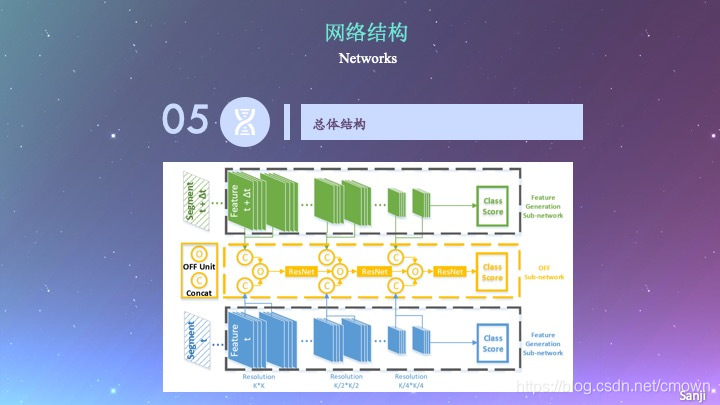

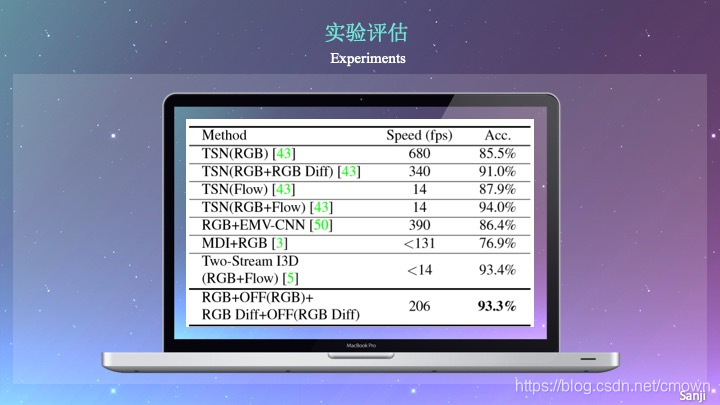

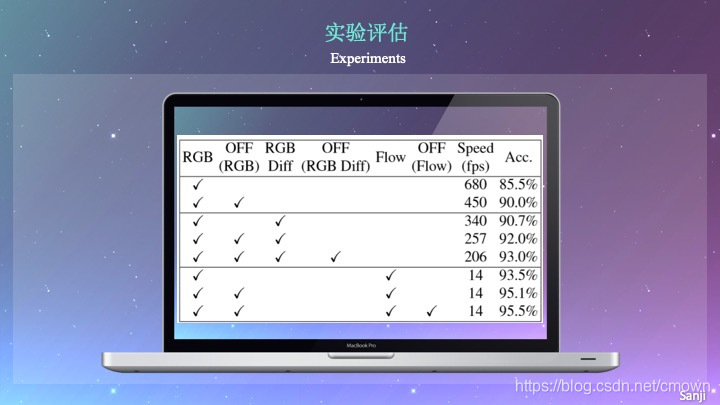

Motion representation plays a vital role in human action recognition in videos. In this study, we introduce a novel compact motion representation for video action recognition, named Optical Flow guided Feature (OFF), which enables the network to distill temporal information through a fast and robust approach. The OFF is derived from the definition of optical flow and is orthogonal to the optical flow. The derivation also provides theoretical support for using the difference between two frames. By directly calculating pixel-wise spatio-temporal gradients of the deep feature maps, the OFF could be embedded in any existing CNN based video action recognition framework with only a slight additional cost. It enables the CNN to extract spatio-temporal information, especially the temporal information between frames simultaneously. This simple but powerful idea is validated by experimental results. The network with OFF fed only by RGB inputs achieves a competitive accuracy of 93.3% on UCF-101, which is comparable with the result obtained by two streams (RGB and optical flow), but is 15 times faster in speed. Experimental results also show that OFF is complementary to other motion modalities such as optical flow. When the proposed method is plugged into the state-of-the-art video action recognition framework, it has 96.0% and 74.2% accuracy on UCF-101 and HMDB-51 respectively. The code for this project is available at: https://github.com/kevin-ssy/Optical-Flow-Guided-Feature
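The statement that OFF is "derived from the definition of optical flow and is orthogonal to the optical flow" can be unpacked with the standard brightness-constancy derivation. The notation below is ours, not necessarily the paper's: f is a feature map (for raw pixels it would be image intensity) and (v_x, v_y) is the flow at (x, y). It assumes f is constant along the motion and that a first-order Taylor expansion is accurate for small displacements.

$$
f(x, y, t) = f(x + \Delta x,\; y + \Delta y,\; t + \Delta t)
$$

$$
\frac{\partial f}{\partial x}\,\Delta x + \frac{\partial f}{\partial y}\,\Delta y + \frac{\partial f}{\partial t}\,\Delta t = 0
$$

$$
v_x = \frac{\Delta x}{\Delta t},\quad v_y = \frac{\Delta y}{\Delta t}
\;\Longrightarrow\;
\left[\frac{\partial f}{\partial x},\; \frac{\partial f}{\partial y},\; \frac{\partial f}{\partial t}\right] \cdot \left[v_x,\; v_y,\; 1\right]^{\top} = 0
$$

The gradient vector on the left is therefore orthogonal to the flow vector [v_x, v_y, 1]. With Δt = 1 frame, ∂f/∂t is approximated by the difference between two consecutive frames, which is the link to the frame-difference features mentioned in the abstract.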
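The abstract's recipe of "directly calculating pixel-wise spatio-temporal gradients of the deep feature maps" can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation (their code is in the linked repository). It assumes the spatial gradients come from Sobel filters applied to the first frame's features and the temporal gradient is the plain difference of two consecutive feature maps; any learned layers of a real OFF unit are omitted. The helper names `sobel_gradients` and `off_features` are hypothetical. The usage example translates a linear ramp by a known velocity and checks that `gx * vx + gy * vy + gt` vanishes away from the borders, which is the orthogonality property the abstract refers to.

```python
import numpy as np


def sobel_gradients(fmap):
    """Spatial gradients (d/dx, d/dy) of a (C, H, W) feature map.

    Applies 3x3 Sobel kernels as cross-correlation, normalized by 8 so that a
    ramp with slope 1 per pixel gives a gradient of 1. Borders use edge
    padding, so values in the outermost rows/columns are only approximate.
    """
    kx = np.array([[-1.0, 0.0, 1.0],
                   [-2.0, 0.0, 2.0],
                   [-1.0, 0.0, 1.0]]) / 8.0
    ky = kx.T  # transposed kernel differentiates along y (rows)
    _, h, w = fmap.shape
    padded = np.pad(fmap, ((0, 0), (1, 1), (1, 1)), mode="edge")
    gx = np.zeros(fmap.shape, dtype=np.float64)
    gy = np.zeros(fmap.shape, dtype=np.float64)
    for i in range(3):
        for j in range(3):
            window = padded[:, i:i + h, j:j + w]  # neighbour at offset (i-1, j-1)
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return gx, gy


def off_features(f_t, f_next):
    """Hypothetical OFF-style feature for two consecutive (C, H, W) feature maps.

    Returns a (3C, H, W) array stacking [df/dx, df/dy, df/dt]; the temporal
    derivative is approximated by the frame difference (delta t = 1 frame).
    """
    gx, gy = sobel_gradients(f_t)
    gt = f_next - f_t
    return np.concatenate([gx, gy, gt], axis=0)


if __name__ == "__main__":
    h, w = 16, 16
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    a, b = 0.5, -0.25   # spatial slopes of a synthetic one-channel "feature"
    vx, vy = 2.0, 1.0   # known motion, in pixels per frame
    f_t = (a * xs + b * ys)[None]                      # shape (1, H, W)
    f_next = (a * (xs - vx) + b * (ys - vy))[None]     # same pattern moved by (vx, vy)
    off = off_features(f_t, f_next)
    gx, gy, gt = off[0], off[1], off[2]
    residual = gx * vx + gy * vy + gt                  # dot product with [vx, vy, 1]
    print("max interior residual:", np.abs(residual[1:-1, 1:-1]).max())
```

Because the test pattern is a linear ramp, the relation holds exactly in the interior; on real feature maps it only holds approximately, since the first-order approximation degrades for large displacements or non-smooth features.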

2. Paper Walkthrough