A Bidirectional LSTM Approach with Word Embeddings for Sentence Boundary Detection

Abstract

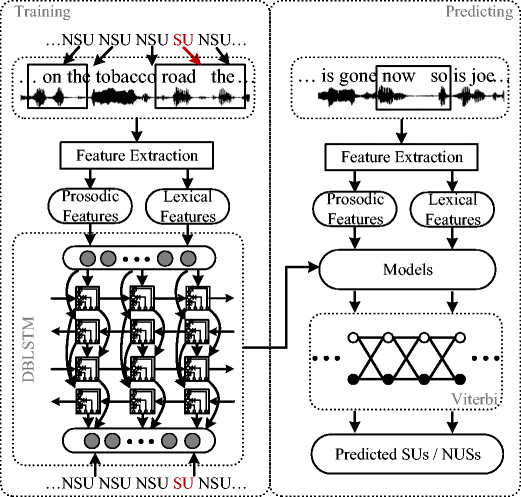

Recovering sentence boundaries from speech and its transcripts is essential for readability and downstream speech and language processing tasks. In this paper, we propose to use a deep recurrent neural network to detect sentence boundaries in broadcast news by modeling rich prosodic and lexical features extracted at each inter-word position. We introduce an unsupervised word embedding, learned with the Continuous Bag-of-Words (CBOW) model, to represent word identity as an effective feature for the sentence boundary detection task. The word embedding contains syntactic information that is essential for this task. In addition, we propose two further low-dimensional word embeddings, learned in a supervised manner from a neural network that incorporates class and context information: one is extracted from the projection layer and the other from the last hidden layer. Furthermore, we propose a deep bidirectional Long Short-Term Memory (LSTM) based architecture with Viterbi decoding for sentence boundary detection. Under this framework, the long-range dependencies of prosodic and lexical information in temporal sequences are modeled effectively. Compared with the previous state-of-the-art DNN-CRF method, the proposed LSTM approach reduces the NIST SU error by 24.8% and 9.8% relative on reference and recognition transcripts, respectively.

Keywords

Sentence boundary detection, Word embedding, Recurrent neural network, Long short-term memory

1 Introduction

- ASR Output: americans have come a long way on the tobacco road the romance is gone so joe camel smokers are out in the cold banned in baseball parks restaurants and even in some bars

- Human Transcript: Americans have come a long way on the tobacco road. The romance is gone now. So is Joe Camel. Smokers are out in the cold banned in baseball parks restaurants and even in some bars.

Punctuation, and sentence boundaries in particular, is crucial to human legibility [2]. Words without appropriate sentence boundaries can make an utterance ambiguous. In a dictation system such as voice input on mobile phones, user experience can be greatly improved if punctuation marks are inserted automatically as the user speaks. Besides improving readability, the presence of sentence boundaries in ASR transcripts can help downstream language processing applications such as parsing [3], information retrieval [4], speech summarization [5], topic segmentation [6, 7] and machine translation [8, 9]. These tasks assume that the transcripts have already been delimited into sentence-like units (SUs). Kahn et al. [3] showed that parsing error was reduced significantly by using an automatic sentence boundary detection system. Matusov et al. [9] reported that sentence boundaries are extremely beneficial for machine translation. Thus, sentence boundary detection is an important step that bridges automatic speech recognition and downstream speech and language processing tasks.

Sentence boundary detection, also called sentence segmentation, aims to break a running audio stream into sentences or to recover the punctuation in speech recognition transcripts. This problem has previously been formulated as one of the metadata extraction (MDE) tasks in the DARPA-sponsored EARS program and the NIST rich transcription (RT) evaluations. The goal of this work is to create an enriched speech transcript with sentence boundaries. The sentence boundary detection task is usually formulated as a binary classification or sequence tagging problem in which we decide whether or not a candidate position is a sentence boundary. The boundary candidate can be any inter-word region in a text or a salient pause in an audio stream. Features are extracted from the text, the audio stream, or both, in the vicinity of the candidate position. Features extracted from text are called lexical features, and features extracted from audio are called prosodic features.
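The sequence tagging formulation above can be illustrated with a minimal sketch that turns a punctuated reference transcript into one binary label per inter-word position. The function name `boundary_labels` and the rule that a token ending in `.`, `?` or `!` closes a sentence are assumptions made for illustration; real data would also need abbreviation handling, which is ignored here.

```python
def boundary_labels(punctuated_text):
    """Return (words, labels); labels[i] is 1 if a sentence boundary follows words[i]."""
    words, labels = [], []
    for token in punctuated_text.split():
        # Simplifying assumption: sentence-final punctuation marks a boundary.
        is_boundary = token[-1] in ".?!"
        # Strip punctuation and case so the words resemble raw ASR output.
        word = token.rstrip(".?!,;:").lower()
        if word:
            words.append(word)
            labels.append(1 if is_boundary else 0)
    return words, labels


text = "The romance is gone now. So is Joe Camel."
words, labels = boundary_labels(text)
for word, label in zip(words, labels):
    print(word, label)
```

Each label refers to the position immediately after the corresponding word, so a tagger trained on such pairs predicts where sentence-like units end in unpunctuated text.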

In the past several years, deep learning methods have been successfully applied to many sequential prediction and classification tasks, such as speech recognition [1, 10, 11], word segmentation [12], part-of-speech tagging and chunking [13]. A deep neural network (DNN) learns a hierarchy of nonlinear feature detectors that can capture complex statistical patterns. In a deep structure, each layer nonlinearly transforms its inputs into a higher-level, more abstract representation that better models the underlying factors of the data. Our recently proposed DNN-CRF work [14] has shown that, by capturing a hierarchy of prosodic information, the DNN is able to detect sentence boundaries more effectively.

1) We introduce three continuous-valued word embeddings as new lexical features to represent word identities in the sentence boundary detection task. The first is an unsupervised word embedding trained with the Continuous Bag-of-Words (CBOW) model [15]. The second is derived, through supervised learning, from the projection layer of an LSTM [16] based neural network. The third is extracted from the last hidden layer of the same neural network. Experimental results show that word embeddings are good lexical features for the sentence boundary detection task and improve the performance significantly.

2) We propose a deep bidirectional LSTM based architecture with global Viterbi decoding for sentence boundary detection. This approach is designed to make effective use of prosodic and lexical features, so as to exploit their temporal and complementary information. Compared with the previous DNN-CRF method, the proposed approach reduces the NIST SU error by 24.8% and 9.8% relative on reference and recognition transcripts, respectively.
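To make the idea of global Viterbi decoding concrete, the following is a minimal sketch of first-order Viterbi search over per-position boundary posteriors. It is a generic illustration, not necessarily the formulation used in this paper: the function name `viterbi`, the way posteriors are combined with transition probabilities, and all numerical values are assumptions.

```python
import math


def viterbi(posteriors, transition, prior):
    """Return the most likely label sequence under a first-order chain.

    posteriors: per-position [p(non-boundary), p(boundary)] from a classifier
    transition: transition[i][j] = p(label j at t | label i at t-1)
    prior:      prior[j] = p(label j at the first position)
    """
    n_labels = len(prior)
    score = [math.log(prior[j]) + math.log(posteriors[0][j]) for j in range(n_labels)]
    backpointers = []
    for frame in posteriors[1:]:
        new_score, pointers = [], []
        for j in range(n_labels):
            # Best previous label for reaching label j at this position.
            best_i = max(range(n_labels),
                         key=lambda i: score[i] + math.log(transition[i][j]))
            new_score.append(score[best_i] + math.log(transition[best_i][j])
                             + math.log(frame[j]))
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Trace the best path back from the final position.
    label = max(range(n_labels), key=lambda j: score[j])
    path = [label]
    for pointers in reversed(backpointers):
        label = pointers[label]
        path.append(label)
    return path[::-1]


# Hypothetical posteriors for four inter-word positions (label 1 = boundary).
posteriors = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8], [0.9, 0.1]]
# Hypothetical transitions that make two consecutive boundaries unlikely.
transition = [[0.7, 0.3], [0.95, 0.05]]
prior = [0.9, 0.1]

framewise = [max(range(2), key=lambda j: frame[j]) for frame in posteriors]
decoded = viterbi(posteriors, transition, prior)
print(framewise, decoded)
```

With these toy values, a position-by-position decision marks two adjacent boundaries, whereas the sequence-level search keeps only the stronger one, which is the kind of global consistency that decoding over the whole sequence is meant to provide.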

In Section 2, we provide a brief review of previous studies related to the sentence boundary detection task. In Section 3, we describe the proposed sentence boundary detection approach. In Section 4, the conventional prosodic and lexical features are described. After that, we introduce the new lexical features (word embeddings) in Section 5. We discuss the experiments and results in Section 6. Finally, conclusions are drawn in Section 7.

2 Related Works

For a classification or sequence tagging problem, studies mainly focus on finding useful features and models. For the sentence boundary detection task, researchers mostly investigate new features and models that are effective in discriminating sentence boundaries from non-boundaries. On the feature side, speech prosodic cues and lexical knowledge sources have been investigated extensively. Prosodic cues, described by pause, pitch and energy characteristics extracted from the speech signal, convey important structural information and reflect breaks in the temporal and intonational contour [17, 18, 19, 20]. Studies show that sentence boundaries are often signaled by a significant pause and a pitch reset [6, 14, 19, 21, 22]. Lexical knowledge sources, such as Part-of-Speech (POS) tags and syntactic chunk tags, are well-known indicators of the syntactic structure of sentences [21]. On the model side, several discriminative and generative models have been studied, including Decision Tree (DT) [6, 22, 23], Multi-layer Perceptron (MLP) [24], Hidden Markov Model (HMM) [6, 21], Maximum Entropy (ME) [21] and Conditional Random Fields (CRF) [14, 21, 25, 26, 27].

Inspired by the finding that speech prosodic structure is highly related to discourse structure [6, 28], some researchers have studied the use of prosodic cues alone in sentence boundary detection. For example, Haase et al. [23] proposed a DT approach based on a set of features related to F0 contours and energy envelopes. Shriberg and Stolcke [6] have shown that a DT model learned from prosodic features can achieve performance comparable to that of a model learned from complicated lexical features. It is worth noting that, unlike lexical approaches, prosodic approaches usually do not use textual information, so the influence of unavoidable speech recognition errors can be avoided. In addition, prosodic cues are known to be relevant to discourse structure across languages [29], and hence prosody-based approaches can be directly applied to multilingual scenarios [29, 30, 31].

Although prosodic approaches have the benefit of avoiding the effect of speech recognition errors, lexical information is still worth studying because semantic and syntactic cues are highly relevant to sentence boundaries [14, 21, 24, 32]. Stolcke and Shriberg [32] studied the relevance of several word-level features for segmentation of spontaneous speech on the Switchboard corpus. Their best results were achieved by using POS n-grams, enhanced by a couple of trigger words and biases. Similarly, on the same corpus, Gavalda et al. [24] designed a multi-layer perceptron (MLP) system based on trigger-word and POS-tag features in a sliding window reflecting lexical context. Stevenson and Gaizauskas [33] implemented a memory-based learning algorithm to detect sentence boundaries on the Wall Street Journal (WSJ) corpus. They extracted a total of 13 lexical features to predict whether or not an inter-word position is a boundary. In addition, statistical language models have been widely used in sentence boundary detection [5, 34, 35, 36] and punctuation prediction [37].

However, the above works use either prosodic information or lexical knowledge alone. Good results in sentence boundary detection are often achieved by using both, since the two knowledge sources are complementary. Gotoh and Renals [38] combined the probabilities from a language model and a pause duration model to make sentence boundary decisions. Later, they proposed a statistical finite state model that combines prosodic, linguistic and punctuation class features to annotate punctuation in broadcast news [39]. Kim and Woodland [40] performed punctuation insertion during speech recognition, using prosodic features together with language model probabilities within a decision tree framework. Shriberg et al. [6] integrated lexical and prosodic features in a decision tree - hidden Markov model (DT-HMM) approach, where a decision tree over prosodic features is followed by a hidden Markov model over lexical features. A drawback of the HMM is that it maximizes the joint probability of the observations and hidden events, rather than the posterior probability of the events given the observations, which would be a more suitable criterion for classification. To address this, Liu et al. [21] proposed a decision tree - conditional random fields (DT-CRF) approach that pushed the state-of-the-art performance of sentence boundary detection to a new level. Similar to the DT-HMM approach [6], the boundary/non-boundary posterior probabilities from the DT prosodic model were quantized and then integrated with lexical features in a linear-chain CRF. In the CRF, the conditional probability of an entire label sequence given a feature sequence is modeled with an exponential distribution. Furthermore, instead of using a DT to model prosodic features, our previous work [14] proposed a deep neural network - conditional random fields (DNN-CRF) approach that nonlinearly transforms the prosodic features into posterior probabilities. The posterior probabilities were then integrated with lexical features in a way similar to the previous work [21]. This approach improved performance substantially because of the DNN's ability to learn good representations from raw features through several nonlinear transformations.
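For reference, the contrast between the HMM and CRF criteria described above can be written in the standard textbook form. The notation is an assumption introduced here, not taken from the cited works: $\mathbf{x}$ is the feature sequence, $\mathbf{y}$ the boundary/non-boundary label sequence of length $T$, $f_k$ the feature functions and $\lambda_k$ their weights.

```latex
% HMM: parameters are trained to maximize the joint likelihood
\hat{\theta}_{\mathrm{HMM}} = \arg\max_{\theta} \; P_{\theta}(\mathbf{x}, \mathbf{y})

% Linear-chain CRF: the conditional probability of the whole label sequence
P(\mathbf{y} \mid \mathbf{x}) =
  \frac{1}{Z(\mathbf{x})}
  \exp\!\Big( \sum_{t=1}^{T} \sum_{k} \lambda_k \, f_k(y_{t-1}, y_t, \mathbf{x}, t) \Big),
\qquad
Z(\mathbf{x}) = \sum_{\mathbf{y}'}
  \exp\!\Big( \sum_{t=1}^{T} \sum_{k} \lambda_k \, f_k(y'_{t-1}, y'_t, \mathbf{x}, t) \Big)
```

Because $Z(\mathbf{x})$ normalizes over all label sequences for the given input, training the CRF directly optimizes the conditional probability of the labels, which matches the classification objective more closely than the joint likelihood.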

Different from the aforementioned studies, the method developed in this paper trains the model using a rich set of both prosodic and lexical features. Moreover, unlike the way different kinds of features are integrated in the previous DT-HMM [6], DT-CRF [21] and DNN-CRF [14] approaches, our proposed method combines the prosodic and lexical features from the start as the inputs of a single model, without modeling each feature category individually. Our motivation is to let the model itself learn the salient and complementary information in the combined raw features for effectively discriminating sentence boundaries from non-boundaries. Another difference is that a deep bidirectional LSTM network is used to learn effective feature representations and capture long-term memory, so as to exploit the temporal information. The structure of the deep bidirectional LSTM network circumvents the serious limitations of shallow models and of DNNs that use a fixed window size, as in previous studies. Our experiments show that these differences lead to significant improvement in the sentence boundary detection task.
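Combining the two feature streams at the input of a single model can be sketched as a per-position concatenation. The function name `fuse_features`, the feature dimensions and all values below are hypothetical and serve only to show the shape of the resulting input sequence.

```python
def fuse_features(prosodic, lexical):
    """Concatenate prosodic and lexical vectors at each inter-word position."""
    if len(prosodic) != len(lexical):
        raise ValueError("both feature streams must cover the same positions")
    return [list(p) + list(l) for p, l in zip(prosodic, lexical)]


# Hypothetical prosodic features per position: [pause duration (s), pitch reset].
prosodic = [[0.05, 0.1], [0.62, 0.8], [0.03, 0.0]]
# Hypothetical 3-dimensional word embeddings for the word before each position.
lexical = [[0.2, -0.1, 0.4], [0.7, 0.3, -0.5], [-0.3, 0.9, 0.1]]

inputs = fuse_features(prosodic, lexical)
print(len(inputs), len(inputs[0]))
```

The fused sequence would then be fed to one sequence model, in contrast to pipelines that first turn prosodic features into posteriors and only afterwards combine them with lexical features.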

3 Proposed Deep Bidirectional LSTM Approach

The architecture of our proposed sentence boundary detection system.