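Preface: The previous article (MapReduce原理, "MapReduce Principles") explained that, behind its convenient API, the MapReduce framework is constantly sorting, grouping, and partitioning data. If we replace the framework's default ordering with the ordering we actually need, we can skip a separate sort of our own, which can noticeably improve efficiency on large workloads.

(Implementing Comparable on the key lets the framework's own sort, which starts on the map side, do the ordering work that would otherwise be left to the reduce stage.)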

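Example: for each movie (identified by its ID), find the 20 highest-rated records.

Approach:

1. The unoptimized version

Raw data: {"movie":"3671","rate":"4","timeStamp":"997454367","uid":"6040"}

Result: records with the same movie are sorted by rate in descending order, and the first 20 are kept.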

(1) MapTask:

public static class MapTask extends Mapper<LongWritable, Text, Text, MovieBean>{

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, MovieBean>.Context context)

throws IOException, InterruptedException {

try {

MovieBean movieBean = JSON.parseObject(value.toString(), MovieBean.class); // parse the JSON line into a MovieBean

String movie = movieBean.getMovie();

context.write(new Text(movie), movieBean); // key: movie ID, value: the whole record

} catch (Exception e) {

// Malformed lines are skipped silently here; in practice, log them or increment a counter.

}

}

}

(2) ReduceTask

public static class ReduceTask extends Reducer<Text, MovieBean, MovieBean, NullWritable>{ // the whole bean is emitted as the output key, so the output value carries nothing

@Override

protected void reduce(Text movieId, Iterable<MovieBean> movieBeans,

Reducer<Text, MovieBean, MovieBean, NullWritable>.Context context)

throws IOException, InterruptedException {

List<MovieBean> list = new ArrayList<>();

for (MovieBean movieBean : movieBeans) {

MovieBean movieBean2 = new MovieBean();

movieBean2.set(movieBean);

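// Hadoop reuses the same MovieBean instance on every iteration, so each record must be copied;

// otherwise every list entry would point at one object holding the last record's data.

list.add(movieBean2);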

}

Collections.sort(list, new Comparator<MovieBean>() { // sort by rate, descending

@Override

public int compare(MovieBean o1, MovieBean o2) {

return Integer.compare(o2.getRate(), o1.getRate()); // descending, with no risk of int overflow

}

});

for (int i = 0; i < Math.min(20, list.size()); i++) {

context.write(list.get(i), NullWritable.get());

}

//context.write(new MovieBean(), NullWritable.get());

}

}

(3) Driver

public static void main(String[] args) throws Exception{

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "avg");

// set the mapper, the reducer, and the jar to submit

job.setMapperClass(MapTask.class);

job.setReducerClass(ReduceTask.class);

job.setJarByClass(TopN1.class);

// set the map output and final output key/value types

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(MovieBean.class);

job.setOutputKeyClass(MovieBean.class);

job.setOutputValueClass(NullWritable.class);

// input and output paths

FileInputFormat.addInputPath(job, new Path("d:/data/rating.json"));

FileOutputFormat.setOutputPath(job, new Path("d:\\data\\out\\topN1"));

// delete the output directory if it already exists (the job fails if it does)

File file = new File("d:\\data\\out\\topN1");

if(file.exists()){

FileUtils.deleteDirectory(file);

}

// submit the job and wait for it to finish

boolean completion = job.waitForCompletion(true);

System.out.println(completion?"Well done!!!":"Go fix your bugs!!");

}

(4)MovieBean

package cn.pengpeng.day07.topn1;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.Writable;

/**

* Implements Writable, Hadoop's serialization interface

* @author root

*

*/

public class MovieBean implements Writable{

//{"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"}

private String movie;

private int rate;

private String timeStamp;

private String uid;

@Override

public void write(DataOutput out) throws IOException {

out.writeUTF(movie);

out.writeInt(rate);

out.writeUTF(timeStamp);

out.writeUTF(uid);

}

@Override

public void readFields(DataInput in) throws IOException {

movie = in.readUTF();

rate = in.readInt();

timeStamp = in.readUTF();

uid = in.readUTF();

}

public String getMovie() {

return movie;

}

public void setMovie(String movie) {

this.movie = movie;

}

public int getRate() {

return rate;

}

public void setRate(int rate) {

this.rate = rate;

}

public String getTimeStamp() {

return timeStamp;

}

public void setTimeStamp(String timeStamp) {

this.timeStamp = timeStamp;

}

public String getUid() {

return uid;

}

public void setUid(String uid) {

this.uid = uid;

}

@Override

public String toString() {

return "MovieBean [movie=" + movie + ", rate=" + rate + ", timeStamp=" + timeStamp + ", uid=" + uid + "]";

}

public void set(MovieBean movieBean) {

this.movie = movieBean.getMovie();

this.rate = movieBean.getRate();

this.timeStamp = movieBean.getTimeStamp();

this.uid = movieBean.getUid();

}

}

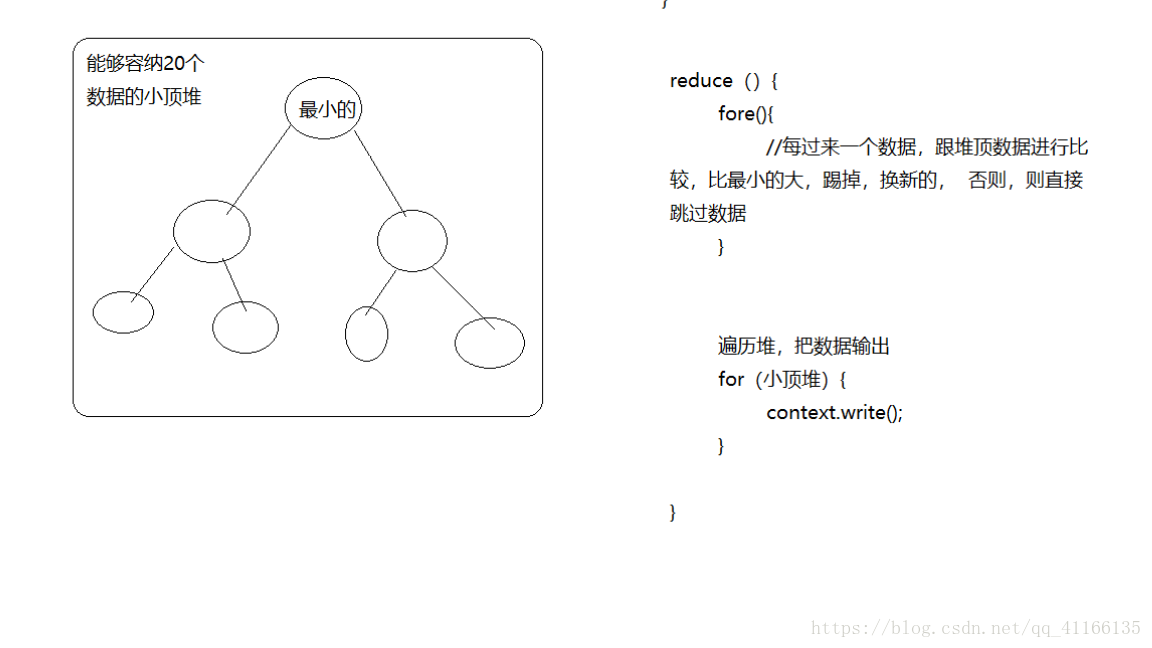
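
The copy made inside the reduce loop in step (2) is easy to overlook, so here is a minimal plain-Java sketch of why it matters. It does not use Hadoop: `Bean` and `reusingIterable` are hypothetical stand-ins that only imitate an iterator handing back one shared, refilled object, which is how the reducer's value iterator behaves.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

class ReuseDemo {
    // Hypothetical stand-in for MovieBean, reduced to a single field.
    static final class Bean {
        int rate;
    }

    // Imitates an iterator that refills and returns one shared Bean on every step.
    static Iterable<Bean> reusingIterable(final int[] rates) {
        final Bean shared = new Bean();
        return new Iterable<Bean>() {
            @Override
            public Iterator<Bean> iterator() {
                return new Iterator<Bean>() {
                    private int i = 0;

                    @Override
                    public boolean hasNext() {
                        return i < rates.length;
                    }

                    @Override
                    public Bean next() {
                        shared.rate = rates[i++];
                        return shared;
                    }
                };
            }
        };
    }

    // Stores the iterated reference directly: every entry is the same object.
    static List<Integer> collectWithoutCopy(int[] rates) {
        List<Bean> list = new ArrayList<>();
        for (Bean b : reusingIterable(rates)) {
            list.add(b);
        }
        return ratesOf(list);
    }

    // Copies each record into a fresh object before storing it.
    static List<Integer> collectWithCopy(int[] rates) {
        List<Bean> list = new ArrayList<>();
        for (Bean b : reusingIterable(rates)) {
            Bean copy = new Bean();
            copy.rate = b.rate;
            list.add(copy);
        }
        return ratesOf(list);
    }

    private static List<Integer> ratesOf(List<Bean> beans) {
        List<Integer> out = new ArrayList<>();
        for (Bean b : beans) {
            out.add(b.rate);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] rates = {4, 5, 3};
        System.out.println(collectWithoutCopy(rates)); // [3, 3, 3]
        System.out.println(collectWithCopy(rates));    // [4, 5, 3]
    }
}
```

Without the copy, all three list entries show the last rate, which is the "identical data" symptom described in the ReduceTask comment.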

2. Using a size-limited heap to save memory (capping the heap's size caps the memory used)

(1)MapTask

public static class MapTask extends Mapper<LongWritable, Text, Text, MovieBean>{

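// Creating helper objects for every record wastes memory, so they are created once as fields.

ObjectMapper objectMapper = new ObjectMapper();

MovieBean movieBean = new MovieBean(); // unused below: readValue() still returns a new MovieBean per record

Text text = new Text();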

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, MovieBean>.Context context)

throws IOException, InterruptedException {

MovieBean readValue = objectMapper.readValue(value.toString(), MovieBean.class);

text.set(readValue.getMovie());

// the key/value handed to context.write() are serialized right away, so reusing the Text object cannot overwrite earlier output

context.write(text, readValue);

}

}

(2) ReduceTask

public static class ReduceTask extends Reducer<Text, MovieBean, MovieBean, NullWritable>{

@Override

protected void reduce(Text key, Iterable<MovieBean> values,

Reducer<Text, MovieBean, MovieBean, NullWritable>.Context context) throws IOException, InterruptedException {

// a TreeSet stands in for the heap here; capping it at 20 objects saves memory

TreeSet<MovieBean> tree = new TreeSet<>(new Comparator<MovieBean>() {

@Override

// ordering rule: by rate first, then by uid as a tie-breaker

public int compare(MovieBean o1, MovieBean o2) {

if(o1.getRate()-o2.getRate() == 0) {

return o1.getUid().compareTo(o2.getUid());

}

else {

return o1.getRate()-o2.getRate();

}

}

});

for (MovieBean movieBean : values) {

MovieBean mBean = new MovieBean();

mBean.set(movieBean.getMovie(), movieBean.getRate(), movieBean.getTimeStamp(), movieBean.getUid());

if(tree.size()<20) { // fill the set with the first 20 records

tree.add(mBean);

}

else { // the set is full: drop the new record unless it beats the current minimum, in which case it replaces that minimum

MovieBean first = tree.first();

if(first.getRate()<mBean.getRate()) {

tree.remove(first);

tree.add(mBean);

}

}

}

for (MovieBean movieBean : tree.descendingSet()) { // emit the highest rate first, as in version 1

context.write(movieBean, NullWritable.get());

}

}

}

(3) The Driver is the same as before; only the paths need to change.
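
The ReduceTask above keeps its candidates in a TreeSet. As a point of comparison, here is a minimal sketch of the same bounded top-N idea using `java.util.PriorityQueue`, which is an actual binary min-heap. It is plain Java without Hadoop, and the `TopNHeap` class and `topN` method are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.PriorityQueue;

class TopNHeap {
    // Returns the n largest rates, highest first, holding at most n values in memory.
    static List<Integer> topN(Iterable<Integer> rates, int n) {
        List<Integer> result = new ArrayList<>();
        if (n <= 0) {
            return result;
        }
        // Min-heap: the smallest of the kept values is always on top.
        PriorityQueue<Integer> heap = new PriorityQueue<>(n);
        for (int rate : rates) {
            if (heap.size() < n) {
                heap.add(rate);            // still filling the first n slots
            } else if (rate > heap.peek()) {
                heap.poll();               // evict the current minimum
                heap.add(rate);
            }                              // otherwise the new value is discarded
        }
        result.addAll(heap);
        Collections.sort(result, Collections.reverseOrder());
        return result;
    }

    public static void main(String[] args) {
        System.out.println(topN(Arrays.asList(3, 5, 1, 4, 5, 2), 3)); // [5, 5, 4]
    }
}
```

One difference worth knowing: a PriorityQueue keeps elements that compare as equal, while a TreeSet silently drops them, which is why the TreeSet comparator above needs uid as a tie-breaker.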

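3. MapReduce already sorts by key while it runs, so we can rely on the framework's sort and save the time and memory of an extra sort of our own.

(1) First, customize the partitioner. Without it, records for the same movie can be sent to different reduce tasks, so the same movie would show up in several output files.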

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Partitioner;

/**

* Partitioner:

* routes all records that belong together to the same reduce task

* @author Hailong

*

*/

public class MyPartition extends Partitioner<MovieBean, NullWritable>{

/**

* numPartitions: the number of reduce tasks

* key: the key emitted by the map side

* value: the value emitted by the map side

*/

@Override

public int getPartition(MovieBean key, NullWritable value, int numPartitions) {

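// pick the partition; & Integer.MAX_VALUE clears the sign bit so the result is never negative

// to send records to a specific partition, return that partition number instead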

return (key.getMovie().hashCode() & Integer.MAX_VALUE)%numPartitions;

}

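}

(2) Next, define the grouping. The framework groups by key, and the key here is the whole bean, so without a custom grouping comparator every distinct bean forms its own group and the final output would contain all of the data.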

import org.apache.hadoop.io.WritableComparable;

import org.apache.hadoop.io.WritableComparator;

public class MyGroup extends WritableComparator{

public MyGroup() {

super(MovieBean.class,true); // true tells WritableComparator to create MovieBean instances for comparison (the default is false)

}

@Override

public int compare(WritableComparable a, WritableComparable b) { // decides which keys fall into the same group

MovieBean bean1 = (MovieBean)a;

MovieBean bean2 = (MovieBean)b;

return bean1.getMovie().compareTo(bean2.getMovie()); // same movie means same group, so compare by movie ID only

}

}

(3) Implement the sort order

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

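// Reusing one object in the mapper is safe: each write is serialized before being stored, so nothing is overwritten.

public class MovieBean implements WritableComparable<MovieBean>{ // implements WritableComparable and overrides its methods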

private String movie;

private int rate;

private String timeStamp;

private String uid;

public void set(String movie, int rate, String timeStamp, String uid) {

this.movie = movie;

this.rate = rate;

this.timeStamp = timeStamp;

this.uid = uid;

}

public String getMovie() {

return movie;

}

public void setMovie(String movie) {

this.movie = movie;

}

public int getRate() {

return rate;

}

public void setRate(int rate) {

this.rate = rate;

}

public String getTimeStamp() {

return timeStamp;

}

public void setTimeStamp(String timeStamp) {

this.timeStamp = timeStamp;

}

public String getUid() {

return uid;

}

public void setUid(String uid) {

this.uid = uid;

}

@Override

public String toString() {

return "MovieBean [movie=" + movie + ", rate=" + rate + ", timeStamp=" + timeStamp + ", uid=" + uid + "]";

}

@Override

public void readFields(DataInput in) throws IOException {

this.movie = in.readUTF();

this.rate = in.readInt();

this.timeStamp = in.readUTF();

this.uid = in.readUTF();

}

@Override

public void write(DataOutput out) throws IOException {

out.writeUTF(this.movie);

out.writeInt(this.rate);

out.writeUTF(this.timeStamp);

out.writeUTF(this.uid);

}

// Ordering rule: compare by movie first; within the same movie, higher rates come first

@Override

public int compareTo(MovieBean o) {

// TODO Auto-generated method stub

//return Integer.compare(o.getRate(), this.getRate()==0?this.getMovie().compareTo(o.getMovie()):Integer.compare(o.getRate(), this.getRate()));

if(o.getMovie().compareTo(this.getMovie())==0){

return o.getRate() - this.getRate();

}else{

return o.getMovie().compareTo(this.getMovie());

}

}

}

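(4) The Driver needs a few extra settings so the framework knows about the custom classes. Since MyPartition is declared for MovieBean keys and NullWritable values, the map output must use those types as well.

job.setNumReduceTasks(2); // number of reduce tasks (and therefore partitions)

job.setPartitionerClass(MyPartition.class); // register the custom partitioner

job.setGroupingComparatorClass(MyGroup.class); // register the grouping comparator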
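
To see how the three pieces fit together, here is a minimal plain-Java simulation of what the framework does with them. It is only a sketch under stated assumptions, not Hadoop code: `Rating`, `SORT`, `partition`, and `topNPerMovie` are hypothetical names, the sort mirrors `MovieBean.compareTo`, the run detection mirrors `MyGroup`, and the partition formula is copied from `MyPartition`. The reducer for this version is not shown in the article, so the "keep the first n of each group" step is an assumption about how it would use the already-sorted input.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

class SecondarySortDemo {
    // Hypothetical stand-in for MovieBean with only the fields that drive the ordering.
    static final class Rating {
        final String movie;
        final int rate;

        Rating(String movie, int rate) {
            this.movie = movie;
            this.rate = rate;
        }

        @Override
        public String toString() {
            return movie + ":" + rate;
        }
    }

    // Same ordering as MovieBean.compareTo: movie descending, then rate descending.
    static final Comparator<Rating> SORT = new Comparator<Rating>() {
        @Override
        public int compare(Rating a, Rating b) {
            int byMovie = b.movie.compareTo(a.movie);
            return byMovie != 0 ? byMovie : Integer.compare(b.rate, a.rate);
        }
    };

    // Same formula as MyPartition: clear the sign bit, then take the remainder.
    static int partition(String movie, int numPartitions) {
        return (movie.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    // Sorts by the composite key, then keeps the first n records of each run of equal movies.
    static List<String> topNPerMovie(List<Rating> input, int n) {
        List<Rating> sorted = new ArrayList<>(input);
        Collections.sort(sorted, SORT);
        List<String> out = new ArrayList<>();
        String currentMovie = null;
        int taken = 0;
        for (Rating r : sorted) {
            if (!r.movie.equals(currentMovie)) { // a new group starts here
                currentMovie = r.movie;
                taken = 0;
            }
            if (taken < n) {
                out.add(r.toString());
                taken++;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Rating> input = Arrays.asList(
                new Rating("1193", 5), new Rating("661", 3), new Rating("1193", 4),
                new Rating("661", 4), new Rating("1193", 3), new Rating("661", 5));
        System.out.println(topNPerMovie(input, 2)); // [661:5, 661:4, 1193:5, 1193:4]
    }
}
```

Because the records arrive already ordered by rate within each movie, taking the top N is just reading the first N values of the group, with no list, sort, or TreeSet in the reducer.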